A 36-core chip design with an Internet-style communication network

June 27, 2014

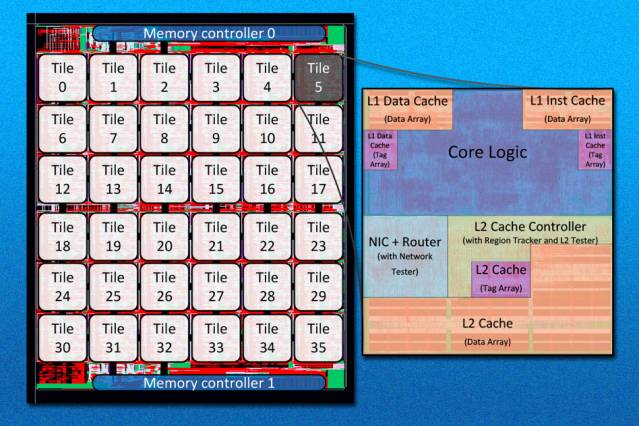

The MIT researchers’ new 36-core chip is “tiled,” meaning that it simply repeats the same circuit layout 36 times. Tiling makes multicore chips much easier to design. (Credit: Bhavya K. Daya et al.)

The more cores — or processing units — a computer chip has, the bigger the problem of communication between cores becomes.

Speaking at the International Symposium on Computer Architecture, Li-Shiuan Peh, the Singapore Research Professor of Electrical Engineering and Computer Science at MIT, has unveiled a 36-core chip that features a “network-on-chip” to address this problem.

The idea: massively multicore chips of the future will need to resemble little Internets, where each core has an associated router, and data travels between cores in packets of fixed size.
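To make “packets of fixed size” concrete, here is a minimal Python sketch of splitting a message into equal-sized packets and putting it back together. The 16-byte payload and the `Packet` fields (`src`, `dst`, `seq`) are hypothetical choices for illustration, not SCORPIO’s actual packet format.

```python
from dataclasses import dataclass
from typing import List

PAYLOAD_BYTES = 16  # hypothetical fixed payload size; not a SCORPIO parameter


@dataclass(frozen=True)
class Packet:
    src: int        # sending core
    dst: int        # destination core
    seq: int        # position of this packet within the original message
    payload: bytes  # always exactly PAYLOAD_BYTES long (last one zero-padded)


def packetize(src: int, dst: int, message: bytes) -> List[Packet]:
    """Split a message into fixed-size packets, zero-padding the final chunk."""
    packets = []
    for seq, start in enumerate(range(0, len(message), PAYLOAD_BYTES)):
        chunk = message[start:start + PAYLOAD_BYTES]
        packets.append(Packet(src, dst, seq, chunk.ljust(PAYLOAD_BYTES, b"\x00")))
    return packets


def reassemble(packets: List[Packet], length: int) -> bytes:
    """Put packets back in sequence order and drop the padding."""
    ordered = sorted(packets, key=lambda p: p.seq)
    return b"".join(p.payload for p in ordered)[:length]


msg = b"hello network-on-chip"
pkts = packetize(src=3, dst=20, message=msg)
# Packets may take different routes and arrive out of order; seq restores order.
assert reassemble(list(reversed(pkts)), len(msg)) == msg
```

Because every packet has the same size, each router can handle them with identical, simple hardware, which is part of what makes the per-core router practical.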

The innovation also solves one of the problems that has bedeviled previous attempts to design networks-on-chip: maintaining cache coherence, or ensuring that cores’ locally stored copies of globally accessible data remain up to date.

In today’s chips, all the cores, typically somewhere between two and six, are connected by a single shared set of wires called a bus. When two cores need to communicate, they’re granted exclusive access to the bus. But that approach won’t work as the core count mounts: cores would spend most of their time waiting for the bus to free up rather than performing computations.
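A deliberately simple Python model shows why waiting grows with core count. It assumes (hypothetically) that every core requests the bus at the same moment, that the arbiter grants one core at a time, and that each transfer holds the bus for a fixed number of cycles.

```python
from typing import List


def bus_waits(num_cores: int, transfer_cycles: int = 1) -> List[int]:
    """Cycles each core waits if every core requests the bus at the same moment.

    Simplifying assumptions: the arbiter grants one core at a time, and each
    transfer occupies the bus for `transfer_cycles` cycles.
    """
    # The k-th core to be granted waits for the k transfers queued ahead of it.
    return [k * transfer_cycles for k in range(num_cores)]


def average_bus_wait(num_cores: int, transfer_cycles: int = 1) -> float:
    waits = bus_waits(num_cores, transfer_cycles)
    return sum(waits) / len(waits)


for n in (2, 6, 36, 64):
    print(f"{n:>2} cores: average wait {average_bus_wait(n)} cycles")
```

Under these assumptions the average wait is (N − 1)/2 transfer times: 0.5 cycles for 2 cores but 17.5 for 36 and 31.5 for 64, so the shared bus becomes the bottleneck as cores are added.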

In a network-on-chip, each core is connected only to those immediately adjacent to it. “You can reach your neighbors really quickly,” says Bhavya Daya, an MIT graduate student in electrical engineering and computer science, and first author on the new paper. “You can also have multiple paths to your destination. So if you’re going way across, rather than having one congested path, you could have multiple ones.”
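The “multiple paths” point can be quantified with a small Python sketch. It assumes the 36 tiles form a 6 × 6 mesh with cores numbered 0 to 35 row by row (an illustrative layout, not taken from the paper) and counts the distinct shortest routes between two cores.

```python
from math import comb
from typing import List, Tuple

MESH_DIM = 6  # assumption: the 36 tiles form a 6 x 6 mesh, cores numbered 0..35


def coords(core: int) -> Tuple[int, int]:
    """(row, column) of a core in the mesh."""
    return divmod(core, MESH_DIM)


def neighbors(core: int) -> List[int]:
    """Cores directly linked to `core` (north, south, west, east)."""
    row, col = coords(core)
    result = []
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + dr, col + dc
        if 0 <= r < MESH_DIM and 0 <= c < MESH_DIM:
            result.append(r * MESH_DIM + c)
    return result


def hop_distance(a: int, b: int) -> int:
    """Length of a shortest route (Manhattan distance)."""
    (ar, ac), (br, bc) = coords(a), coords(b)
    return abs(ar - br) + abs(ac - bc)


def minimal_paths(a: int, b: int) -> int:
    """Number of distinct shortest routes: choose where the vertical hops go."""
    (ar, ac), (br, bc) = coords(a), coords(b)
    dr, dc = abs(ar - br), abs(ac - bc)
    return comb(dr + dc, dr)
```

A corner core such as 0 has only two neighbors, an interior core such as 7 has four, and a corner-to-corner trip (0 to 35) takes 10 hops but can follow any of 252 shortest routes, whereas two cores in the same row share just one.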

Maintaining cache coherence

One advantage of a bus, however, is that it makes it easier to maintain cache coherence. Every core on a chip has its own cache, a local, high-speed memory bank in which it stores frequently used data. As it performs computations, it updates the data in its cache, and every so often, it undertakes the relatively time-consuming chore of shipping the data back to main memory.

But what happens if another core needs the data before it’s been shipped? Most chips address this question with a protocol called “snoopy,” because it involves snooping on other cores’ communications. When a core needs a particular chunk of data, it broadcasts a request to all the other cores, and whichever one has the data ships it back.
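The broadcast-and-respond idea can be sketched in Python as follows. This is a toy model, assuming each cache line carries only a value and a “dirty” flag (modified but not yet written back to main memory); it omits invalidations and the full state machines (such as MESI) that real snoopy protocols use, and the class and function names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


class Core:
    def __init__(self, core_id: int) -> None:
        self.core_id = core_id
        # address -> (value, dirty); "dirty" means not yet written back to memory
        self.cache: Dict[int, Tuple[int, bool]] = {}

    def write(self, addr: int, value: int) -> None:
        """Update the local copy only; main memory is now stale."""
        self.cache[addr] = (value, True)

    def snoop(self, addr: int) -> Optional[int]:
        """Reply to a broadcast request if this core holds a modified copy."""
        entry = self.cache.get(addr)
        if entry is not None and entry[1]:
            return entry[0]
        return None


def snoopy_read(requester: Core, cores: List[Core], memory: Dict[int, int], addr: int) -> int:
    """Broadcast a read to every other core; fall back to main memory."""
    for other in cores:
        if other is requester:
            continue
        value = other.snoop(addr)
        if value is not None:  # the core with the latest data ships it back
            requester.cache[addr] = (value, False)
            return value
    value = memory[addr]  # no other core holds a newer copy
    requester.cache[addr] = (value, False)
    return value


cores = [Core(i) for i in range(4)]
memory = {0x40: 1, 0x80: 7}
cores[2].write(0x40, 99)  # core 2 updates its cache but has not written back
```

Here `snoopy_read(cores[0], cores, memory, 0x40)` returns 99 from core 2’s cache even though main memory still holds 1, while a read of `0x80`, which no core has modified, falls through to memory and returns 7.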

If all the cores share a bus, then when one of them receives a data request, it knows that it’s the most recent request that’s been issued. Similarly, when the requesting core gets data back, it knows that it’s the most recent version of the data.

But in a network-on-chip, data is flying everywhere, and packets will frequently arrive at different cores in different sequences. The implicit ordering that the snoopy protocol relies on breaks down.
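A small Python sketch makes the breakdown concrete. It assumes a congestion-free 6 × 6 mesh in which a request takes one cycle per hop (illustrative assumptions), and compares the single order a bus imposes with the order in which individual cores on the mesh actually see two requests.

```python
from typing import List, Tuple

MESH_DIM = 6  # assumption: 6 x 6 mesh, one cycle per hop, no congestion

Request = Tuple[str, int, int]  # (name, source core, issue cycle)


def hops(a: int, b: int) -> int:
    (ar, ac), (br, bc) = divmod(a, MESH_DIM), divmod(b, MESH_DIM)
    return abs(ar - br) + abs(ac - bc)


def bus_order(requests: List[Request]) -> List[str]:
    """Bus: one global sequence (by issue cycle, ties by core id) seen by every core."""
    return [name for name, src, t in sorted(requests, key=lambda r: (r[2], r[1]))]


def mesh_order(requests: List[Request], observer: int) -> List[str]:
    """Mesh: each core sees requests in arrival order, which depends on distance."""
    return [name for name, src, t in
            sorted(requests, key=lambda r: (r[2] + hops(r[1], observer), r[1]))]


# Core 0 issues request A one cycle before core 35 issues request B.
reqs = [("A", 0, 0), ("B", 35, 1)]
```

On the bus every core sees A then B. On the mesh, core 1 (next to core 0) also sees A first, but core 34 (next to core 35) receives B at cycle 2 and A only at cycle 9, so the two observers disagree about which request came first.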

The MIT researchers solve this problem by equipping their chips with a second network, which shadows the first. The circuits connected to this network are very simple: All they can do is declare that their associated cores have sent requests for data over the main network. But precisely because those declarations are so simple, nodes in the shadow network can combine them and pass them on without incurring delays.
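One way such declarations can be combined without delay is to encode each one as a one-hot bit vector and merge them with a bitwise OR. The Python sketch below assumes that encoding for illustration; the article does not spell out the circuit-level format.

```python
from functools import reduce
from operator import or_
from typing import Iterable

MESH_DIM = 6                     # assumption: 6 x 6 mesh
NUM_CORES = MESH_DIM * MESH_DIM


def notification(core: int) -> int:
    """One-hot vector meaning 'this core sent a request' (hypothetical encoding)."""
    if not 0 <= core < NUM_CORES:
        raise ValueError(f"no such core: {core}")
    return 1 << core


def merge(vectors: Iterable[int]) -> int:
    """Combine notifications with a bitwise OR; nothing is queued or arbitrated."""
    return reduce(or_, vectors, 0)


senders = [1, 10, 32]
flat = merge(notification(c) for c in senders)

# Merge inside each mesh row first, then across rows: the result is identical,
# because OR is associative and commutative.
per_row = [merge(notification(c) for c in senders if c // MESH_DIM == row)
           for row in range(MESH_DIM)]
assert merge(per_row) == flat
```

Since OR is associative, commutative, and idempotent, it does not matter in which order or along which paths the vectors meet, or whether one arrives twice. Each node only needs a gate, not a buffer.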

Groups of declarations reach the routers associated with the cores at discrete intervals, intervals corresponding to the time it takes to pass from one end of the shadow network to the other. Each router can thus tabulate exactly how many requests were issued during each interval, and by which cores. The requests themselves may still take a while to arrive, but their recipients know that they’ve been issued.
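The interval bookkeeping can be sketched in Python. This assumes a 6 × 6 mesh, one cycle per hop in the shadow network, and one-hot notification vectors, all illustrative assumptions; the interval length is the worst-case end-to-end traversal, 2(k − 1) hops across a k × k mesh.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

MESH_DIM = 6              # assumption: 6 x 6 mesh
NUM_CORES = MESH_DIM * MESH_DIM
CYCLES_PER_HOP = 1        # assumption: one hop per cycle in the shadow network


def interval_length() -> int:
    """Worst-case end-to-end traversal: 2 * (k - 1) hops across a k x k mesh."""
    return 2 * (MESH_DIM - 1) * CYCLES_PER_HOP


def interval_of(cycle: int) -> int:
    return cycle // interval_length()


def decode(vector: int) -> List[int]:
    """List the cores whose bits are set in a merged notification vector."""
    return [core for core in range(NUM_CORES) if (vector >> core) & 1]


def tabulate(announcements: List[Tuple[int, int]]) -> Dict[int, List[int]]:
    """Group (issue cycle, core) announcements into per-interval requester lists."""
    per_interval: Dict[int, int] = defaultdict(int)
    for cycle, core in announcements:
        per_interval[interval_of(cycle)] |= 1 << core
    return {window: decode(vec) for window, vec in sorted(per_interval.items())}
```

With these parameters an interval is 10 cycles, so announcements from core 10 at cycle 3, core 1 at cycle 7, and core 5 at cycle 12 tabulate as `{0: [1, 10], 1: [5]}`: every router learns the same list of requesters for each interval, even before the requests themselves arrive.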

During each interval, the chip’s 36 cores are given different, hierarchical priorities. Say, for instance, that during one interval, both core 1 and core 10 issue requests, but core 1 has a higher priority. Core 32’s router may receive core 10’s request well before it receives core 1’s. But it will hold core 10’s request until it has passed along core 1’s.
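The hold-and-release behavior of core 32’s router can be sketched in Python. The `OrderingUnit` class is hypothetical: it assumes the router already knows, from the shadow network, which cores requested in this interval, sorted from highest to lowest priority.

```python
from typing import Dict, List


class OrderingUnit:
    """Per-router reorder logic for one interval (hypothetical sketch)."""

    def __init__(self, expected: List[int]) -> None:
        # Cores that announced a request this interval, highest priority first.
        # The router learns this list from the shadow network before the
        # requests themselves arrive.
        self.expected = list(expected)
        self.next_index = 0
        self.waiting: Dict[int, str] = {}

    def receive(self, src: int, request: str) -> List[str]:
        """Buffer an arriving request and return any that can now be passed on."""
        if src not in self.expected:
            raise ValueError(f"core {src} did not announce a request this interval")
        self.waiting[src] = request
        released = []
        while self.next_index < len(self.expected):
            core = self.expected[self.next_index]
            if core not in self.waiting:
                break  # a higher-priority request is still in flight: hold the rest
            released.append(self.waiting.pop(core))
            self.next_index += 1
        return released


# Core 32's router: cores 1 and 10 both requested, and core 1 has higher priority.
router_32 = OrderingUnit(expected=[1, 10])
print(router_32.receive(10, "read from core 10"))  # []
print(router_32.receive(1, "read from core 1"))    # ['read from core 1', 'read from core 10']
```

Core 10’s request arrives first but is released nothing; once core 1’s request arrives, both are passed on in priority order. Because every router applies the same list, all cores end up processing the requests in the same sequence.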

This hierarchical ordering simulates the chronological ordering of requests sent over a bus, so the snoopy protocol still works. The hierarchy is shuffled during every interval, however, to ensure that in the long run, all the cores receive equal weight.
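The article says only that the hierarchy is shuffled every interval. As one simple possibility, assumed here purely for illustration, the Python sketch below rotates the top-priority slot round-robin, which guarantees that each core is first exactly once every 36 intervals.

```python
from collections import Counter
from typing import List

NUM_CORES = 36


def priority_order(interval: int) -> List[int]:
    """Cores from highest to lowest priority; the top slot rotates each interval."""
    start = interval % NUM_CORES
    return [(start + i) % NUM_CORES for i in range(NUM_CORES)]


def order_requests(requesters: List[int], interval: int) -> List[int]:
    """Sort one interval's requesters by that interval's priority order."""
    rank = {core: pos for pos, core in enumerate(priority_order(interval))}
    return sorted(requesters, key=lambda core: rank[core])


# Over any NUM_CORES consecutive intervals, every core holds top priority once.
top = Counter(priority_order(i)[0] for i in range(NUM_CORES))
assert all(top[core] == 1 for core in range(NUM_CORES))
```

With this scheme, requests from cores 1 and 10 are ordered `[1, 10]` in interval 0 but `[10, 1]` in interval 5, so neither core is systematically favored.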

The new architecture, called SCORPIO, will be open-source.

Abstract of International Symposium on Computer Architecture presentation

In the many-core era, scalable coherence and on-chip interconnects are crucial for shared memory processors. While snoopy coherence is common in small multicore systems, directory-based coherence is the de facto choice for scalability to many cores, as snoopy relies on ordered interconnects which do not scale. However, directory-based coherence does not scale beyond tens of cores due to excessive directory area overhead or inaccurate sharer tracking. Prior techniques supporting ordering on arbitrary unordered networks are impractical for full multicore chip designs.

We present SCORPIO, an ordered mesh Network-on-Chip (NoC) architecture with a separate fixed-latency, bufferless network to achieve distributed global ordering. Message delivery is decoupled from the ordering, allowing messages to arrive in any order and at any time, and still be correctly ordered.

The architecture is designed to plug-and-play with existing multicore IP and with practicality, timing, area, and power as top concerns. Full-system 36- and 64-core simulations on SPLASH-2 and PARSEC benchmarks show an average application runtime reduction of 24.1% and 12.9%, in comparison to distributed directory and AMD HyperTransport coherence protocols, respectively.

The SCORPIO architecture is incorporated in an 11 mm × 13 mm chip prototype, fabricated in IBM 45 nm SOI technology, comprising 36 Freescale e200 Power Architecture™ cores with private L1 and L2 caches interfacing with the NoC via ARM AMBA, along with two Cadence on-chip DDR2 controllers.

The chip prototype achieves a post-synthesis operating frequency of 1 GHz (833 MHz post-layout) with an estimated power of 28.8 W (768 mW per tile), while the network consumes only 10% of tile area and 19% of tile power.
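As a rough reading of these figures, applying the 19% network share to the 768 mW per-tile estimate gives the network’s approximate power per tile and across all 36 tiles. This is simple arithmetic on the abstract’s numbers, not a breakdown reported by the authors:

$$
P_{\text{net,tile}} \approx 0.19 \times 768\ \text{mW} \approx 146\ \text{mW},
\qquad
P_{\text{net,chip}} \approx 36 \times 146\ \text{mW} \approx 5.3\ \text{W}
$$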