A new blueprint for artificial general intelligence

August 12, 2010 by Amara D. Angelica

Demis Hassabis, a research fellow at the Gatsby Computational Neuroscience Unit, University College London, is out to create a radical new kind of artificial brain.

A former well-known UK videogame designer and programmer, he has produced a number of amazing games, including the legendary Evil Genius — which he denies selling to Microsoft, thus ruining a perfectly good joke. He also won the World Games Championships a record five times.

But in 2005, he decided to move from narrow AI (used in his games) to a bigger challenge: creating artificial general intelligence (AGI). He decided to get a PhD in cognitive neuroscience, because “I felt it would be crazy to ignore the brain as a blueprint for new technologies for creating AGI,” he told me in a Skype chat from London.

Systems-level neuroscience

Hassabis will unveil the blueprint on Saturday, August 14, at the Singularity Summit in San Francisco. Unlike the other talks on AGI at the Summit, “my talk will be different, because I’m interested in systems-level neuroscience (the brain’s algorithms), not in the details of how they are implemented on the wetware via spiking neurons or synapses, or specific neurochemicals, etc. I’m interested in what algorithms the brain is using to solve the problems we need to solve to get to AGI.”

Those problems, he said, include: How do we acquire knowledge? How do we go from perceptual information (say, seeing an image) to conceptual information (thinking about the image)?

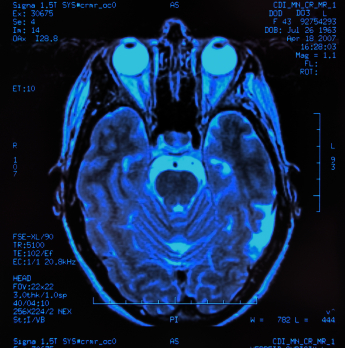

“We don’t have any good machine-learning algorithms for going from perceptual information to abstract information, such as what a city is, or how tall or heavy something is,” he said. So at University College London, “we are using all the latest neuroscience techniques, from brain imaging to single-cell recording, to investigate these questions. Our plan is to implement these algorithms in an AGI system.”

But that implementation may be completely different from the way the brain physically does it. “The brain has certain constraints in the way it does it, and it may not be appropriate to implement those algorithms in exactly the same way the brain does it, because we are going to implement them in a different substrate: silicon,” he said.

Brain-inspired algorithms, not structure

And that excludes whole-brain emulation — using the neuromorphic models implicit in IBM Almaden’s SyNAPSE project and EPFL’s Blue Brain project. “We are at least 50 years away from doing AGI with that kind of methodology.”

Instead, Hassabis’ research is currently focused on producing more brain-inspired algorithms, like the HMAX visual recognition system developed by Tomaso Poggio at MIT — considered the state of the art in visual recognition systems, according to Hassabis. His research builds on Poggio’s detailed models of two layers of retinal cells, implemented in hardware.

So add a third contender to the famous “cat fight”: Demis Hassabis.