Training computers to understand sentiments conveyed by images

February 12, 2015

Images in the top and bottom rows convey opposite sentiments towards the two political candidates in the 2012 U.S. presidential election (credit: Flickr)

Jiebo Luo, professor of computer science at the University of Rochester, in collaboration with researchers at Adobe Research, has developed a more accurate way to train computers to digest big data that comes in the form of images.

In a paper presented at the recent Association for the Advancement of Artificial Intelligence (AAAI) conference in Austin, Texas, the researchers describe a “progressive training deep convolutional neural network (CNN).”

Once trained, a computer can be used to determine what sentiments* a given image is likely to elicit. Luo says that this information could be useful for things as diverse as measuring economic indicators and predicting elections.

Sentiment analysis of text by computers is itself a challenging task; in social media, where many people express themselves using images and videos, sentiment analysis is even more complicated.

For example, during a political campaign voters will often share their views through pictures. Two different pictures might show the same candidate, but they might be making very different political statements. A human could recognize one as a positive portrait of the candidate (e.g., the candidate smiling and raising his arms) and the other as a negative one (e.g., a picture of the candidate looking defeated).

But no human could look at every picture shared on social media — it is truly “big data.” To be able to make informed guesses about a candidate’s popularity, computers need to be trained to digest this data, which is what Luo and his collaborators’ approach can do more accurately than was possible until now.

Image classification

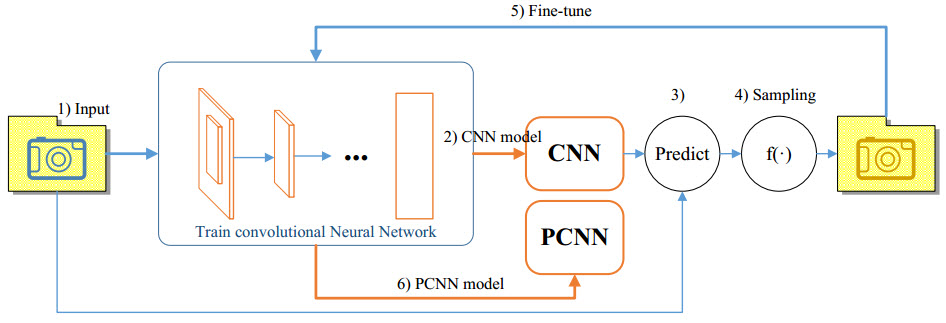

The overall flow of the proposed progressive CNN (PCNN). The researchers first train a CNN on Flickr images. Next, they select training samples according to the prediction score of the trained model on the training data itself. Instead of training from the beginning, they further fine-tune the trained model using these newly selected, and potentially cleaner training instances. This fine-tuned model is their final model for visual sentiment analysis. (credit: Jiebo Luo et al./AAAI)

The researchers treat the task of extracting sentiments from images as an image classification problem. This means that somehow each picture needs to be analyzed and labels applied to it.

To begin the training process, Luo and his collaborators used a huge number of Flickr images that have been loosely labeled by a machine algorithm with specific sentiments, in an existing database known as SentiBank (developed by Professor Shih-Fu Chang’s group at Columbia University).

This gives the computer a starting point for understanding what some images can convey. But each machine-generated label also comes with a likelihood of being true, that is, a measure of how sure the computer is that the label is correct.

The key step of the training process comes next: the researchers discard any images whose sentiment labels might not be true. They then use only the “better” labeled images for further training, in a progressively improving manner, within the framework of the powerful convolutional neural network. They found that this extra step significantly improved the accuracy of the sentiment labels assigned to each picture.
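The selection step can be illustrated with a minimal Python sketch. It is not the researchers' actual selection rule: the `Sample` class, the `select_cleaner_samples` function, and the fixed `min_confidence` threshold are hypothetical names and values chosen for illustration. The sketch assumes each image carries one noisy machine label and that the already-trained model returns a probability for each sentiment class.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Sample:
    image_id: str
    label: int                    # noisy machine label: 0 = negative, 1 = positive
    scores: Tuple[float, float]   # trained model's (p_negative, p_positive)


def select_cleaner_samples(samples: List[Sample],
                           min_confidence: float = 0.7) -> List[Sample]:
    """Keep samples whose noisy label the trained model confidently agrees with.

    The threshold rule is an assumption for illustration only.
    """
    return [s for s in samples if s.scores[s.label] >= min_confidence]


# Toy predictions standing in for the trained CNN's output on its own training set.
samples = [
    Sample("a", 1, (0.10, 0.90)),  # label and model agree confidently: kept
    Sample("b", 0, (0.45, 0.55)),  # model is unsure: dropped
    Sample("c", 0, (0.80, 0.20)),  # label and model agree confidently: kept
    Sample("d", 1, (0.60, 0.40)),  # model disagrees with the label: dropped
]

kept = select_cleaner_samples(samples)
print([s.image_id for s in kept])  # ['a', 'c']
```

In a full pipeline, the retained subset would then be used to fine-tune the already-trained network rather than to retrain it from the beginning.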

They also adapted this sentiment analysis engine using a set of images extracted from Twitter. In this case they employed “crowd intelligence,” with multiple people helping to categorize the images via the Amazon Mechanical Turk platform. They used only a small number of images for fine-tuning the model and yet, by applying this domain-adaptation process, they showed they could improve on current state-of-the-art methods for sentiment analysis of Twitter images.
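One common way to turn several workers' judgments into a single training label is agreement-based voting, sketched below in Python. This is an illustrative assumption, not a description of the researchers' procedure: the function name `aggregate_votes`, the five-worker example, and the `min_agreement` parameter are all hypothetical.

```python
from collections import Counter
from typing import List, Optional


def aggregate_votes(votes: List[str], min_agreement: int = 3) -> Optional[str]:
    """Return the most common label if enough workers chose it, else None.

    With min_agreement above half the number of votes, ties cannot win.
    """
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count >= min_agreement else None


# Hypothetical judgments from five workers on three images.
crowd_labels = {
    "tweet_1": ["positive", "positive", "negative", "positive", "negative"],
    "tweet_2": ["negative"] * 5,
    "tweet_3": ["positive", "negative"],  # too few votes to reach agreement
}

for image_id, votes in crowd_labels.items():
    print(image_id, aggregate_votes(votes))
```

Raising `min_agreement` yields a smaller but cleaner labeled set, which matters when only a small number of images is used for fine-tuning.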

One surprising finding is that the accuracy of image sentiment classification exceeded that of text sentiment classification on the same Twitter messages.

* The term “sentiment” has a broader meaning in sentiment analysis that includes feelings, attitudes, and opinions on specific topics.

Abstract for Robust image sentiment analysis using progressively trained and domain transferred deep networks

Sentiment analysis of online user generated content is important for many social media analytics tasks. Researchers have largely relied on textual sentiment analysis to develop systems to predict political elections, measure economic indicators, and so on. Recently, social media users are increasingly using images and videos to express their opinions and share their experiences. Sentiment analysis of such large scale visual content can help better extract user sentiments toward events or topics, such as those in image tweets, so that prediction of sentiment from visual content is complementary to textual sentiment analysis. Motivated by the needs in leveraging large scale yet noisy training data to solve the extremely challenging problem of image sentiment analysis, we employ Convolutional Neural Networks (CNN). We first design a suitable CNN architecture for image sentiment analysis. We obtain half a million training samples by using a baseline sentiment algorithm to label Flickr images. To make use of such noisy machine labeled data, we employ a progressive strategy to fine-tune the deep network. Furthermore, we improve the performance on Twitter images by inducing domain transfer with a small number of manually labeled Twitter images. We have conducted extensive experiments on manually labeled Twitter images. The results show that the proposed CNN can achieve better performance in image sentiment analysis than competing algorithms.