An alternative to the Turing test: ‘Winograd Schema Challenge’ annual competition announced

July 28, 2014

Nuance Communications, Inc. today announced an annual competition to develop programs that can solve the Winograd Schema Challenge. According to its developer, Hector Levesque, Professor of Computer Science at the University of Toronto and winner of the 2013 IJCAI Award for Research Excellence, the challenge is an alternative to the Turing test that provides a more accurate measure of genuine machine intelligence.

Nuance is sponsoring the yearly competition, which it announced at the ongoing 28th AAAI Conference in Quebec, Canada, in cooperation with CommonsenseReasoning, a research group dedicated to furthering and promoting research in the field of formal commonsense reasoning.

CommonsenseReasoning will organize, administer, and evaluate the Winograd Schema Challenge. A program that passes the test will receive a grand prize of $25,000. The test is designed to judge whether a program has truly modeled human-level intelligence.

The trouble with the Turing test

Artificial intelligence (AI) has long been measured by the “Turing test,” proposed in 1950 by Alan Turing, who sought a way to determine whether a computer program exhibited human-level intelligence. The test is considered passed if the program can convince a human that he or she is conversing with another human and not a machine.

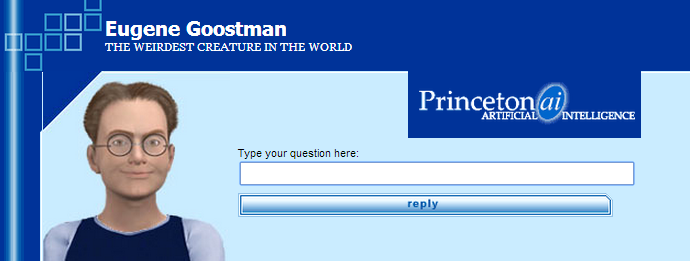

Eugene Goostman chatbot screenshot (credit: Vladimir Veselov and Eugene Demchenko)

No system has ever passed the Turing test, and most existing programs that have tried rely on considerable trickery to fool humans. The recently announced “Eugene Goostman” program, which poses as a 13-year-old boy, is a case in point.

“At its core, the Turing test measures a human’s ability to judge deception: can a machine fool a human into thinking that it too is human?” according to CommonsenseReasoning. “Chatbots like Eugene Goostman can fool at least some judges into thinking it is human, but that likely reveals more about how easy it is to fool some humans, especially in the course of a short conversation, than the bot’s intelligence.”

The Winograd Schema Challenge design

Rather than base the test on the sort of short, free-form conversation suggested by the Turing test, the Winograd Schema Challenge poses a set of multiple-choice questions whose answers should be fairly obvious to a layperson but ambiguous for a machine without human-like reasoning or intelligence.

Here is an example of a Winograd schema question: “The trophy would not fit in the brown suitcase because it was too big. What was too big? Answer 0: the trophy or Answer 1: the suitcase?” A human who answers such questions correctly typically draws on spatial reasoning, knowledge about the typical sizes of objects, and other kinds of commonsense reasoning.
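Winograd schemas are usually written in pairs that differ in a single word (here “big” versus “small”), and changing that word flips the correct answer. The Python sketch below illustrates why this defeats simple guessing. It is illustrative only: the `SchemaQuestion` format and the `accuracy`, `first_mention`, and `size_commonsense` helpers are hypothetical and are not the official contest format or scoring code. The toy solver simply hard-codes one fact about containers rather than reasoning in any general way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SchemaQuestion:
    """One multiple-choice item (hypothetical format, for illustration only)."""
    sentence: str
    question: str
    answers: tuple[str, str]  # (Answer 0, Answer 1)
    correct: int              # index of the correct answer


# The trophy example, plus its twin: swapping "big" for "small" flips the answer.
TROPHY = SchemaQuestion(
    sentence="The trophy would not fit in the brown suitcase because it was too big.",
    question="What was too big?",
    answers=("the trophy", "the suitcase"),
    correct=0,
)
TROPHY_TWIN = SchemaQuestion(
    sentence="The trophy would not fit in the brown suitcase because it was too small.",
    question="What was too small?",
    answers=("the trophy", "the suitcase"),
    correct=1,
)


def accuracy(solver, questions):
    """Fraction of questions for which solver(q) returns the correct index."""
    right = sum(1 for q in questions if solver(q) == q.correct)
    return right / len(questions)


def first_mention(q):
    """Naive baseline (hypothetical): always pick the first candidate mentioned."""
    return 0


def size_commonsense(q):
    """Toy solver (hypothetical): an object fails to fit in a container either
    because the object is too big or because the container is too small."""
    return 0 if "too big" in q.sentence else 1


pair = [TROPHY, TROPHY_TWIN]
print(accuracy(first_mention, pair))     # the naive baseline gets one of the two right
print(accuracy(size_commonsense, pair))  # the size-aware toy gets both right
```

Because every question has a twin with the opposite answer, any strategy that ignores the meaning of the changed word, such as always choosing the first noun, tends toward chance-level accuracy of 50%.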

“There has been renewed interest in AI and natural language processing (NLP) as a means of humanizing the complex technological landscape that we encounter in our day-to-day lives,” said Charles Ortiz, Senior Principal Manager of AI and Senior Research Scientist, Natural Language and Artificial Intelligence Laboratory, Nuance Communications.

“The Winograd Schema Challenge provides us with a tool for concretely measuring research progress in commonsense reasoning, an essential element of our intelligent systems. Competitions such as the Winograd Schema Challenge can help guide more systematic research efforts that will, in the process, allow us to realize new systems that push the boundaries of current AI capabilities and lead to smarter personal assistants and intelligent systems.”

Contest details

CommonsenseReasoning will administer the test yearly, starting in 2015. The first submission deadline will be October 1, 2015. The 2015 Commonsense Reasoning Symposium, to be held at the 2015 AAAI Spring Symposia at Stanford on March 23–25, 2015, will include a special session for presentations and discussions on progress and issues related to the Winograd Schema Challenge.

A program that meets the baseline for human performance, based on a threshold that is yet to be established, will receive the grand prize of $25,000. If there are multiple winners, a panel of judges will choose among them based on either further testing or examination of traces of program execution. If no program meets the threshold, a first prize of $3,000 and a second prize of $2,000 will be awarded to the two highest-scoring entries.