Automatic building mapping could help emergency responders

September 24, 2012

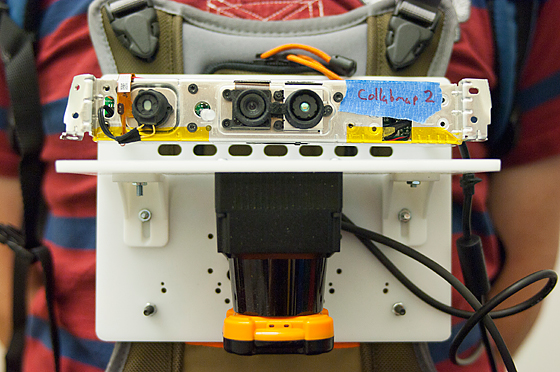

The prototype sensor included a stripped-down Kinect camera (top) and a laser rangefinder (bottom), which looks something like a camera lens seen side-on (credit: Patrick Gillooly)

To help emergency responders coordinate disaster response, MIT researchers have built a wearable sensor system that automatically creates a digital map of the environment through which the wearer is moving.

In experiments, a student wearing the sensor system wandered the MIT halls, and the sensors wirelessly relayed data to a laptop in a distant conference room. Observers in the conference room were able to follow the student’s progress on a map that updated as the student moved.

Connected to the array of sensors is a handheld pushbutton device that the wearer can use to annotate the map. In the prototype system, depressing the button simply designates a particular location as a point of interest. But the researchers envision that emergency responders could use a similar system to add voice or text tags to the map — indicating, say, structural damage or a toxic spill.

How it works

The system uses a laser rangefinder, which sweeps a laser beam around a 270-degree arc and measures the time that it takes the light pulses to return. If the rangefinder is level, it can provide very accurate information about the distance of the nearest walls, but a walking human jostles it much more than a rolling robot does.
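As a rough illustration of the time-of-flight principle described above, the following Python sketch converts round-trip pulse times into distances and then into 2D points across a 270-degree sweep. It is not the researchers' code: the function names, the evenly spaced beam angles, and the assumption that the rangefinder is perfectly level are all hypothetical simplifications.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def tof_to_distance(round_trip_seconds):
    """Distance to the reflecting surface; the pulse travels out and back."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def scan_to_points(round_trip_times, arc_degrees=270.0):
    """Convert one sweep of round-trip times into (x, y) points.

    Assumes (hypothetically) that beams are evenly spaced across the arc,
    centered on the forward direction, and that the sensor is level.
    """
    n = len(round_trip_times)
    if n == 0:
        return []
    arc = math.radians(arc_degrees)
    start = -arc / 2.0
    step = arc / (n - 1) if n > 1 else 0.0
    points = []
    for i, t in enumerate(round_trip_times):
        angle = start + i * step
        d = tof_to_distance(t)
        points.append((d * math.cos(angle), d * math.sin(angle)))
    return points
```

A pulse that returns after about 67 nanoseconds corresponds to a wall roughly 10 meters away. When the wearer's motion tilts the sensor, the level-sensor assumption breaks, which is why the readings need correction from other sensors.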

Similarly, sensors in a robot’s wheels can provide accurate information about its physical orientation and the distances it covers, but a human wearer offers no equivalent data. And because emergency workers responding to a disaster might have to move among several floors of a building, the system also has to recognize changes in altitude, so it doesn’t inadvertently overlay the map of one floor with information about a different one.

So in addition to the rangefinder, the researchers also equipped their sensor platform with a cluster of accelerometers and gyroscopes, a camera, and, in one group of experiments, a barometer (changes in air pressure proved to be a surprisingly good indicator of floor transitions). The gyroscopes could infer when the rangefinder was tilted — information the mapping algorithms could use in interpreting its readings — and the accelerometers provided some information about the wearer’s velocity and very good information about changes in altitude.
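To see why air pressure can signal a floor transition, here is a minimal Python sketch that converts barometer readings to altitude with the standard international barometric formula and rounds the change to a whole number of floors. The article does not describe how the researchers processed the barometer data, so the function names, the 3.5-meter floor height, and the rounding rule are assumptions made purely for illustration.

```python
def pressure_to_altitude(pressure_pa, reference_pa=101_325.0):
    """Approximate altitude in meters from pressure in pascals.

    Uses the standard international barometric formula; reference_pa is
    the assumed sea-level pressure.
    """
    return 44_330.0 * (1.0 - (pressure_pa / reference_pa) ** (1.0 / 5.255))


def floor_changes(pressures_pa, floor_height_m=3.5):
    """Floors climbed relative to the first reading, one value per reading.

    floor_height_m is a hypothetical typical story height, not a figure
    from the article.
    """
    if not pressures_pa:
        return []
    base = pressure_to_altitude(pressures_pa[0])
    return [
        round((pressure_to_altitude(p) - base) / floor_height_m)
        for p in pressures_pa
    ]
```

Near sea level, pressure falls by roughly 12 pascals per meter climbed, so one story corresponds to a drop of about 40 pascals. A real system would also need to filter out slower pressure drift from weather and ventilation.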

Every few meters, the camera takes a snapshot of its surroundings, and software extracts a couple of hundred visual features from the image — particular patterns of color, or contours, or inferred three-dimensional shapes. Each batch of features is associated with a particular location on the map.

The prototype of the sensor platform consists of a handful of devices attached to a sheet of hard plastic about the size of an iPad, which is worn on the chest like a backward backpack. In principle, the whole system could be shrunk to about the size of a coffee mug.

Both the U.S. Air Force and the Office of Naval Research supported the work.

It would be interesting to see if this could be adapted for the blind, using some combination of sound and tactile feedback. — Ed.