Brain’s visual circuits edit what we see before we see it

December 10, 2010

The brain’s visual neurons continually develop predictions of what they will perceive and then correct erroneous assumptions as they take in additional external information, according to new research done at Duke University.

This new mechanism for visual cognition challenges the currently held model of sight and could change the way neuroscientists study the brain. Neurons in the brain predict and edit what we see before we see it, the researchers found.

The new vision model is called predictive coding. It is more complex than the standard model of sight and adds an extra dimension to it. The prevailing model has been that neurons process incoming data from the retina through a series of hierarchical layers. In this bottom-up system, the lowest-level neurons first detect an object’s basic features, such as horizontal or vertical lines. These neurons send that information to the next level of brain cells, which identify other specific features and feed the emerging image to the next layer of neurons, which add further details. The image travels up the neuron ladder until it is completely formed.

But new brain imaging data from a study led by Duke researcher Tobias Egner provides “clear and direct evidence” that the standard picture of vision, called feature detection, is incomplete. The data, published Dec. 8 in the Journal of Neuroscience, show that the brain predicts what it will see and edits those predictions in a top-down mechanism, said Egner, who is an assistant professor of psychology and neuroscience.

In this system, the neurons at each level form and send context-sensitive predictions about what an image might be to the next lower neuron level. The predictions are compared with the incoming sensory data. Any mismatches, or prediction errors, between what the neurons expected to see and what they observe are sent up the neuron ladder. Each neuron layer then adjusts its perceptions of an image in order to eliminate prediction error at the next lower layer.

Finally, once all prediction error is eliminated, “the visual cortex has assigned its best guess interpretation of what an object is, and a person actually sees the object,” Egner said. He noted that this happens subconsciously in a matter of milliseconds. “You never even really know you’re doing it,” he said.
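
The exchange described above, with predictions flowing down and prediction errors flowing up until the error is gone, can be illustrated with a toy two-layer loop. This is only a minimal sketch and not the model used in the study: the function names, the linear `weights` mapping, the update `rate` and the number of `steps` are all assumptions made for illustration.

```python
def predictive_coding_step(estimate, weights, sensory_input, rate=0.1):
    """One top-down / bottom-up exchange between two layers (illustrative)."""
    # Top-down: the higher layer predicts what the lower layer should receive.
    prediction = [sum(w * e for w, e in zip(row, estimate)) for row in weights]
    # Lower layer: mismatch between the incoming data and the prediction.
    error = [x - p for x, p in zip(sensory_input, prediction)]
    # Bottom-up: the error is sent up and used to revise the higher layer's guess.
    new_estimate = [
        e + rate * sum(weights[i][j] * error[i] for i in range(len(error)))
        for j, e in enumerate(estimate)
    ]
    return new_estimate, error


def settle(weights, sensory_input, steps=200, rate=0.1):
    """Repeat the exchange until the prediction error has (nearly) vanished."""
    estimate = [0.0] * len(weights[0])  # start with no interpretation at all
    error = list(sensory_input)
    for _ in range(steps):
        estimate, error = predictive_coding_step(estimate, weights, sensory_input, rate)
    return estimate, error


# Hypothetical example: two hidden "causes" generate three sensory values.
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
best_guess, remaining_error = settle(weights, [1.0, 2.0, 3.0])
```

In this toy setting the loop settles on the interpretation that leaves essentially no prediction error, which plays the role of the “best guess” Egner describes.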

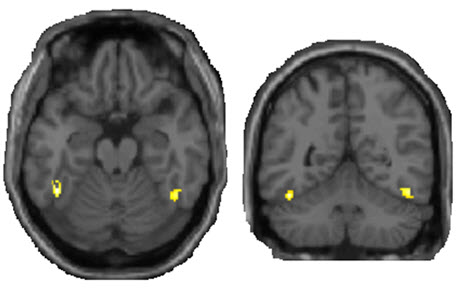

Egner and his colleagues wanted to capture the process almost as it happened. The team used functional magnetic resonance imaging, or fMRI, to scan the fusiform face area (FFA), a brain region that deals with recognizing faces. The researchers monitored 16 subjects’ brains as they observed faces or houses framed in differently colored boxes, where the frame color predicted the likelihood of the picture being a face or a house.

Study participants were told to press a button when they observed an inverted image of a face or house, but the researchers were measuring something else. By changing the face-frame or house-frame color combination, the researchers controlled and measured the FFA neural response to tease apart responses to the stimulus, face expectation and error processing.

If the feature detection model were correct, the FFA neural response should be stronger for faces than houses, irrespective of the subjects’ expectations. But Egner and his colleagues found that if subjects had a high expectation of seeing a face, their neural response was nearly the same whether they were actually shown a face or a house. The study goes on to use computational modeling to show that this pattern of neural activation can only be explained by a shared contribution from face expectation and prediction error.
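
The contrast between the two accounts can be made concrete with a toy calculation. This is a hedged sketch rather than the study’s computational model: the function names, the equal weights, the assumption that a house produces no face prediction error, and the face probabilities of 0.25 and 0.75 are all illustrative choices.

```python
def feature_detection_response(is_face, p_face):
    """Feature detection: the response depends only on the stimulus."""
    # The expectation p_face is deliberately ignored.
    return 1.0 if is_face else 0.0


def predictive_coding_response(is_face, p_face, w_expect=1.0, w_error=1.0):
    """Predictive coding: response = face expectation + face prediction error."""
    expectation = p_face
    # Assumption: an unexpected face produces a large error signal,
    # while a house produces no face error at all.
    error = (1.0 - p_face) if is_face else 0.0
    return w_expect * expectation + w_error * error


def face_house_gap(model, p_face):
    """How much more strongly the model responds to a face than to a house."""
    return model(True, p_face) - model(False, p_face)


for p_face in (0.25, 0.75):  # low and high face expectation (illustrative)
    print(
        p_face,
        face_house_gap(feature_detection_response, p_face),
        face_house_gap(predictive_coding_response, p_face),
    )
```

Under these assumptions the feature detection gap stays the same whatever the subject expects, while the predictive coding gap shrinks as face expectation rises, which is the qualitative pattern the researchers report for the FFA.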

This study provides support for a “very different view” of how the visual system works, said Scott Murray, a University of Washington neuroscientist who was not involved in the research. Instead of high neuron firing rates providing information about the presence of a particular feature, high firing rates are instead associated with a deviation from what neurons expect to see, Murray explained. “These deviation signals presumably provide useful tags for something the visual system has to process more to understand.”

Egner said that theorists have been developing the predictive coding model for the past 30 years, but no previous studies have directly tested it against the feature detection model. “This paper is provocative and motions toward a change in the preconception of how vision works. In essence, more scientists may become more sympathetic to the new model,” he said.

Murray also said that the findings could influence the way neuroscientists continue to study the brain. Most research assumes that if a brain region has a large response to a particular visual image, then it is somehow responsible for, or specialized for, processing the content of the image. This research “challenges that assumption,” he said, explaining that future studies will have to take into account the expectations that participants have for the visual images being presented.

Adapted from materials provided by Duke University