Deep-learning algorithm predicts photos’ memorability at ‘near-human’ levels

December 21, 2015

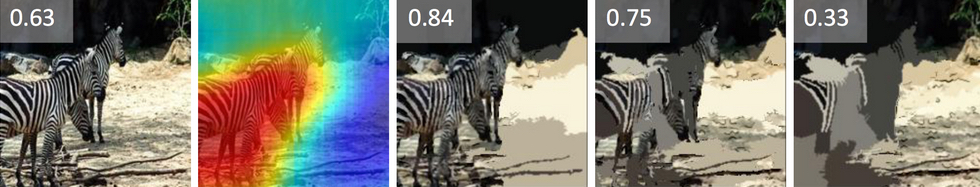

The MemNet algorithm ranks images by how memorable and forgettable they are. It also creates a heat map (second image from left) identifying the image’s most memorable and forgettable regions, ranging from red (most memorable) to blue (most forgettable). The image can then be subtly tweaked to increase or decrease its memorability score (three images on right). (credit: CSAIL)

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a deep-learning algorithm that can predict how memorable or forgettable an image is almost as accurately as humans, and they plan to turn it into an app that tweaks photos to make them more memorable.

For each photo, the “MemNet” algorithm also creates a “heat map” (a color-coded overlay) that identifies exactly which parts of the image are most memorable. You can try it out online by uploading your own photos to the project’s “LaMem” dataset.

The research is an extension of a similar algorithm the team developed for facial memorability. The team fed its algorithm tens of thousands of images from several different datasets developed at CSAIL, including LaMem and the scene-oriented SUN and Places. The images had each received a “memorability score” based on the ability of human subjects to remember them in online experiments.

Close to human performance

The team then pitted its algorithm against human subjects by having the model predict how memorable a group of people would find a never-before-seen image. It performed 30 percent better than existing algorithms and was within a few percentage points of the average human performance.

By emphasizing different regions, the algorithm can also potentially increase the image’s memorability.

“CSAIL researchers have done such manipulations with faces, but I’m impressed that they have been able to extend it to generic images,” says Alexei Efros, an associate professor of computer science at the University of California at Berkeley. “While you can somewhat easily change the appearance of a face by, say, making it more ‘smiley,’ it is significantly harder to generalize about all image types.”

LaMem is the world’s largest image-memorability dataset. With 60,000 images, each annotated with detailed metadata about qualities such as popularity and emotional impact, LaMem is the team’s effort to spur further research on what they say has often been an under-studied topic in computer vision.

Team members picture a variety of potential applications, from improving the content of ads and social media posts, to developing more effective teaching resources, to creating your own personal “health-assistant” device to help you remember things. The team next plans to update the system so that it can predict the memory of a specific person, and to better tailor it for individual “expert industries” such as retail clothing and logo design.

The work is supported by grants from the National Science Foundation, as well as the McGovern Institute Neurotechnology Program, the MIT Big Data Initiative at CSAIL, research awards from Google and Xerox, and a hardware donation from Nvidia.

Abstract of Understanding and Predicting Image Memorability at a Large Scale

Progress in estimating visual memorability has been limited by the small scale and lack of variety of benchmark data. Here, we introduce a novel experimental procedure to objectively measure human memory, allowing us to build LaMem, the largest annotated image memorability dataset to date (containing 60,000 images from diverse sources). Using Convolutional Neural Networks (CNNs), we show that fine-tuned deep features outperform all other features by a large margin, reaching a rank correlation of 0.64, near human consistency (0.68). Analysis of the responses of the high-level CNN layers shows which objects and regions are positively, and negatively, correlated with memorability, allowing us to create memorability maps for each image and provide a concrete method to perform image memorability manipulation. This work demonstrates that one can now robustly estimate the memorability of images from many different classes, positioning memorability and deep memorability features as prime candidates to estimate the utility of information for cognitive systems. Our model and data are available at: http://memorability.csail.mit.edu
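The abstract reports performance as a rank correlation: the model's scores are compared with human scores by how similarly the two orderings rank the same images. The sketch below illustrates one common form of that measure, Spearman's rank correlation, in plain Python. It is not the authors' evaluation code; the function names and the five sample scores are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: Pearson correlation of the two rank lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all ranks are equal")
    return cov / (var_x * var_y) ** 0.5


# Hypothetical memorability scores for five images (illustrative only).
predicted = [0.91, 0.55, 0.73, 0.62, 0.80]  # model output
human = [0.88, 0.60, 0.65, 0.70, 0.79]      # measured in experiments

rho = spearman(predicted, human)
print(round(rho, 2))
```

A value of 1.0 means the two lists rank the images in exactly the same order, and a value near 0 means the orderings are unrelated. On this scale, the abstract's 0.64 for the model sits just below the 0.68 it reports for human consistency.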