Deep learning helps robots perfect skills

March 7, 2016

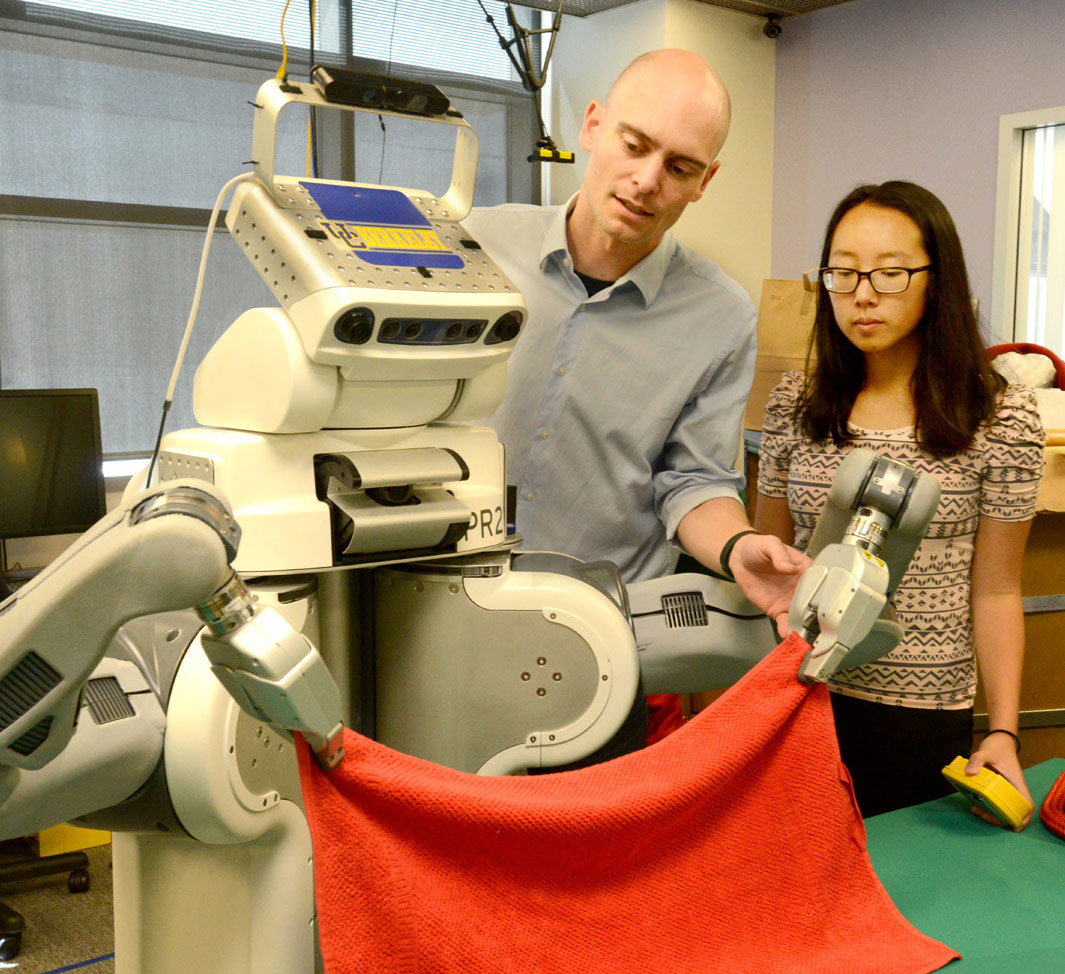

BRETT folds a towel. It uses deep learning to distinguish corners and edges and to judge how wrinkled certain areas are, then matches what it sees against past experience to decide what to do next. (credit: Peg Skorpinski)

BRETT (Berkeley Robot for the Elimination of Tedious Tasks) has learned to improve its performance on household chores by combining deep learning and reinforcement learning, which together give the software controlling the robot's movements moment-to-moment visual and sensory feedback.

For the past 15 years, Berkeley robotics researcher Pieter Abbeel has been looking for ways to make robots learn. In 2010 he and his students programmed BRETT to pick up different-sized towels, figure out their shape, and neatly fold them.

The new deep reinforcement learning strategy opens the way to training robots for increasingly complex tasks. The goal of the research is for robots to generalize from one task to another. A robot that could learn from experience would be far more versatile than one that needs detailed, baked-in instructions for each new task.

Deep learning enables the robot to perceive its immediate environment, including the location and movement of its limbs. Reinforcement learning means improving at a task by trial and error. A robot with these two skills could refine its performance based on real-time feedback.
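To make "improving at a task by trial and error" concrete, here is a minimal sketch of the simplest form of reinforcement learning: an epsilon-greedy learner choosing among a few candidate actions. This is not BRETT's method (the article does not describe the algorithm used); the function name `learn_by_trial_and_error`, the success rates, and the idea of the actions as alternative grasps are all hypothetical, chosen only for illustration.

```python
import random

def learn_by_trial_and_error(success_rates, trials=5000, epsilon=0.1, seed=0):
    """Hypothetical epsilon-greedy learner: estimates how well each action works
    purely from success/failure feedback, without being told the true rates."""
    rng = random.Random(seed)
    n = len(success_rates)
    counts = [0] * n    # how many times each action has been tried
    values = [0.0] * n  # running average reward observed for each action
    for _ in range(trials):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try a random action
        else:
            action = max(range(n), key=lambda a: values[a])  # exploit the best so far
        # Simulated feedback from a hypothetical environment: 1 = success, 0 = failure
        reward = 1.0 if rng.random() < success_rates[action] else 0.0
        counts[action] += 1
        # Incremental update of the mean reward for this action
        values[action] += (reward - values[action]) / counts[action]
    return values, counts

# Three hypothetical grasps that succeed 20%, 50%, and 80% of the time
values, counts = learn_by_trial_and_error([0.2, 0.5, 0.8])
best = max(range(len(values)), key=lambda a: values[a])
print("estimated success rates:", [round(v, 2) for v in values])
print("preferred action:", best)
```

The learner starts knowing nothing, occasionally tries random actions, and shifts toward whichever action has produced the most successes so far. The `epsilon` parameter trades off exploring new options against exploiting what already works.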

Applications for such a skilled robot might range from helping humans with tedious housekeeping chores to assisting in highly detailed surgery. In fact, Abbeel says, “Robots might even be able to teach other robots.” Or humans?
