Getting ‘hallucinating’ robots to arrange your room for you

June 20, 2012 by Amara D. Angelica

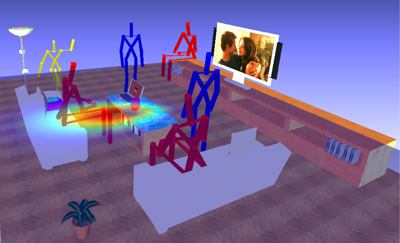

“Hmm, now where would my imaginary human stick figures prefer to sit? Note to self: they like to look at the rectangular object with lots of pixels.” — robot (Credit: Personal Robotics Lab, Cornell)

When we last (virtually) visited the Personal Robotics Lab of Ashutosh Saxena, Cornell assistant professor of computer science, we learned that they’ve taught robots to pick up after you, while you sit around and watch Futurama.

But why stop there in your search for the ultimate slave robot? Now they’ve taught robots to work out where in a room you might stand, sit, or work, and to place objects accordingly by “hallucinating” imaginary people (no word on whether psilocybin is involved).

As we learned the last time, the old way was to simply model relationships between objects: a keyboard goes in front of a monitor, check; a mouse goes next to the keyboard, check, blah blah blah. But what if the tardbot puts the monitor, keyboard and mouse facing the wall?

(Credit: Futurama)

So the researchers decided: instead of beating the robots mercilessly, we’ll teach them a small set of human poses by having them observe 3-D images of rooms with objects in them, and then imagine human stick figures (you), placing these figures in practical relationships with objects and furniture.

Eventually, the bot somehow learns to avoid your wrath. Don’t put a sitting person where there is no chair or couch, doofus! The remote is usually near a human’s reaching arm, NOT floating 10 feet in the freakin’ air! The beer goes on the coffee table, NOT upside down on the couch, dammit!

“Imagine no humans, I wonder if you can…” — future robot song.

Anyway, fast forwarding: the researchers tested their ingenious method using images of living rooms, kitchens and offices from the Google 3-D Warehouse, and later, images of actual offices and apartments. Then they programmed a robot to carry out the predicted placements in actual apartments. Volunteers rated the placement of each object for correctness on a scale of 1 to 5, 1 being, I guess, something like “recycle the damn thing.”

Conclusion: the best results came from combining human context with object-to-object relationships. Well, duh. Fine, fine, now when can I pick up one of them bots at Ikea?
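For the curious, here is a minimal Python sketch of what "combining human context with object-to-object relationships" could look like. It is a toy under stated assumptions, not the Cornell lab's actual model: the room coordinates, the exponential scoring functions, the 0.8-meter reach, and the 50/50 weighting are all made up for illustration.

```python
import math

# Toy living room, positions in meters on a 2-D floor plan.
# Every coordinate, name, and weight here is invented for illustration.
SOFA = (0.0, 0.0)
COFFEE_TABLE = (0.0, 1.0)

# "Hallucinated" people: imagined sitting poses, placed only where there is a seat.
imagined_people = [SOFA]


def distance(a, b):
    """Straight-line distance between two floor positions."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def human_context_score(spot, people, reach=0.8):
    """1.0 if the spot is within arm's reach of some imagined person, decaying beyond it."""
    nearest = min(distance(spot, person) for person in people)
    return math.exp(-max(0.0, nearest - reach))


def object_context_score(spot, anchor):
    """Higher the closer the spot is to a related object (here, the coffee table)."""
    return math.exp(-distance(spot, anchor))


def combined_score(spot, people, anchor, human_weight=0.5):
    """Weighted mix of the human-context and object-to-object scores."""
    human = human_context_score(spot, people)
    objects = object_context_score(spot, anchor)
    return human_weight * human + (1.0 - human_weight) * objects


def best_spot(candidates, people, anchor):
    """Pick the candidate position with the highest combined score."""
    return max(candidates, key=lambda spot: combined_score(spot, people, anchor))


# Hypothetical places the robot could put the remote.
candidates = {
    "sofa cushion": (0.0, 0.5),
    "coffee table": (0.0, 1.0),
    "far corner": (3.0, 3.0),
}
winner = best_spot(candidates.values(), imagined_people, COFFEE_TABLE)
```

In this toy room the remote ends up on the coffee table: close enough to the imagined couch potato and right where the object-to-object rule wants it. The far corner scores badly on both counts, so nothing gets parked where no one can reach it.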

The research was supported by a Microsoft Faculty Fellowship and a gift from Google. I’m guessing a Kinect2 and Google Glass will be involved somehow in the next Personal Robotics Lab research episode. Stay tuned….