Google’s new multilingual Neural Machine Translation System can translate between language pairs even though it has never been taught to do so

November 25, 2016

Google Neural Machine Translation (credit: Google)

Google researchers have announced they have implemented a neural machine translation system in Google Translate that improves translation quality and enables “Zero-Shot Translation” — translation between language pairs never seen explicitly by the system.

For example, in the animation above, the system was trained to translate bidirectionally between English and Japanese and between English and Korean. But the new system can also translate between Japanese and Korean — even though it has never been taught to do so.

Google calls this “zero-shot” translation — shown by the yellow dotted lines in the animation. “To the best of our knowledge, this is the first time this type of transfer learning has worked in machine translation,” the researchers say.

Neural networks for machine translation

The driver was scale: in the last ten years, Google Translate has grown from supporting just a few languages to 103, and it now translates more than 140 billion words every day. Supporting that many languages required building and maintaining many different systems to translate between any two languages, which incurred significant computational cost.

That meant the researchers needed to rethink the technology behind Google Translate.

As a result, the Google Brain Team and Google Translate team announced the first step in September: a new system called Google Neural Machine Translation (GNMT), which learns from millions of examples.

“GNMT reduces translation errors by more than 55%–85% on several major language pairs measured on sampled sentences from Wikipedia and news websites with the help of bilingual human raters,” the researchers note. “However, while switching to GNMT improved the quality for the languages we tested it on, scaling up to all the 103 supported languages presented a significant challenge.”

As explained in a paper, “Google’s Multilingual Neural Machine Translation System: Enabling Zero-Shot Translation” (open access), the researchers then extended the GNMT system so that a single system can translate between multiple languages. “Our proposed architecture requires no change in the base GNMT system,” the authors explain, “instead it uses an additional ‘token’ at the beginning of the input sentence to specify the required target language to translate to.”
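The target-language token can be pictured with a minimal Python sketch. The `<2ko>`-style spelling of the token follows the examples given in the paper; the function name `add_target_token` is hypothetical, and the sketch only covers the input preprocessing step, not Google's actual pipeline (which, per the abstract, also uses a shared wordpiece vocabulary).

```python
def add_target_token(sentence: str, target_lang: str) -> str:
    """Prepend an artificial token that names the target language.

    Only the target language is marked, as in the quoted description.
    The helper name and signature are assumptions made for this sketch.
    """
    return f"<2{target_lang}> {sentence}"


# Inputs for pairs the system was trained on (English to Japanese/Korean).
trained_inputs = [
    add_target_token("Hello, how are you?", "ja"),
    add_target_token("Hello, how are you?", "ko"),
]

# A zero-shot request uses the same mechanism: Japanese input, Korean
# target token, even though no Japanese-Korean pair appeared in training.
zero_shot_input = add_target_token("こんにちは、お元気ですか?", "ko")

print(trained_inputs)
print(zero_shot_input)
```

Because the token is just another item in the input sequence, the encoder, decoder, and attention stay unchanged, which is why the authors can describe the approach as requiring no change to the base system.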

An interlingua

But the success of the zero-shot translation raised another important question: Is the system learning a common representation in which sentences with the same meaning are represented in similar ways regardless of language — i.e. an “interlingua”?

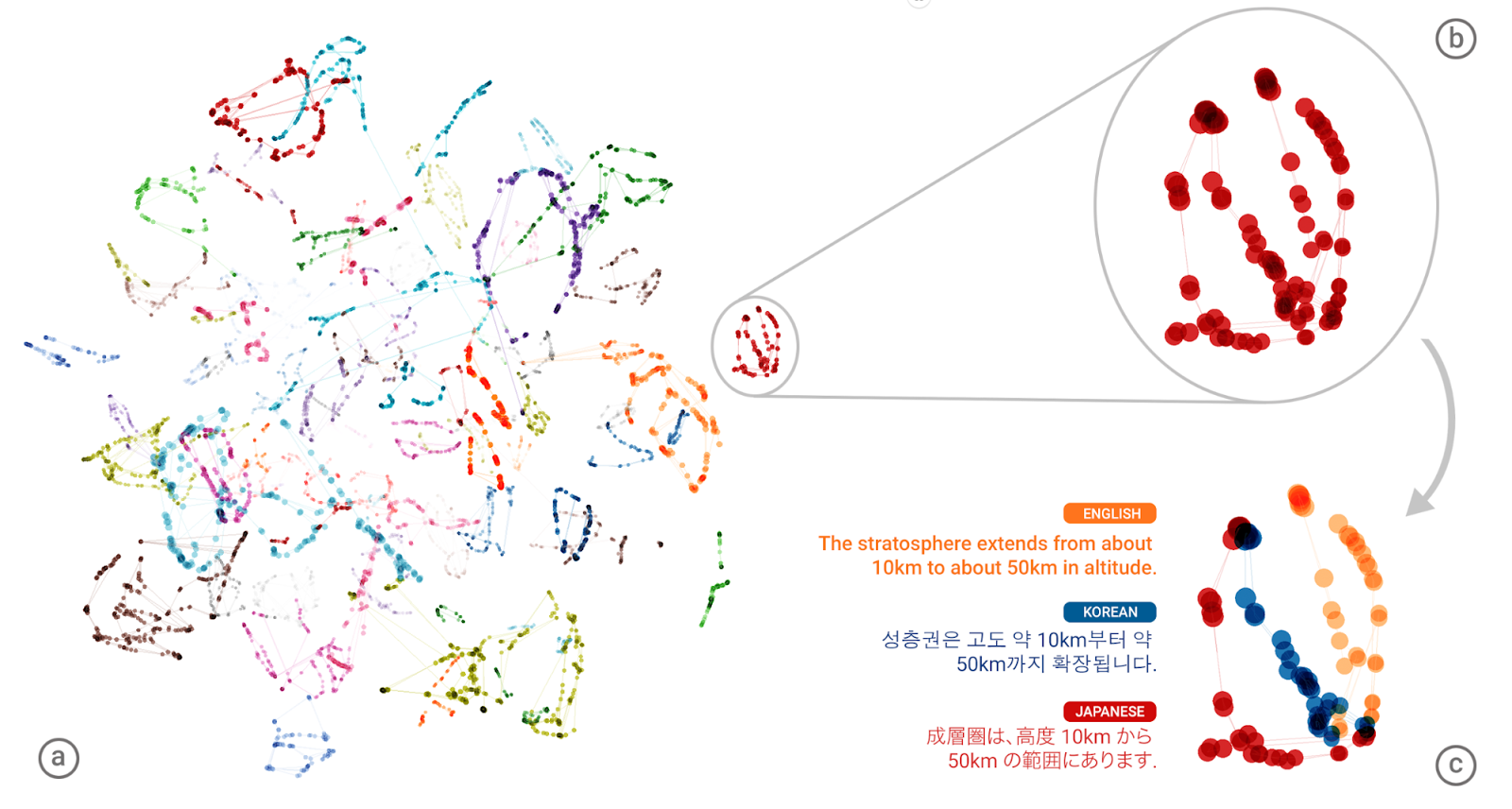

To find out, the researchers used a 3-dimensional representation of internal network data to take a peek into the system as it translated a set of sentences between all possible pairs of languages (Japanese, Korean, and English in this example).

Developing an “interlingua” (credit: Google)

Part (a) of the figure above shows the overall geometry of these translations. The points in this view are colored by meaning: a sentence translated from English to Korean shares the same color as a sentence with the same meaning translated from Japanese to English. From this view we can see distinct groupings of points, each with its own color. Part (b) zooms in on one of the groups, and the colors in part (c) show the different source languages.

“Within a single group, we see a sentence with the same meaning (‘The stratosphere extends from about 10km to about 50km in altitude’), but from three different languages,” the researchers explain. “This means the network must be encoding something about the semantics [meaning] of the sentence, rather than simply memorizing phrase-to-phrase translations. We interpret this as a sign of existence of an interlingua in the network.”
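The clustering idea can also be expressed numerically. The sketch below is not the researchers' method (they inspected a 3-dimensional visualization); it swaps in a simple cosine-similarity comparison. All vectors are made-up toy values, and the second meaning group (`weather`) is invented purely for contrast. If internal representations behave like an interlingua, vectors for the same meaning should be more similar to each other than to vectors for a different meaning, regardless of source language.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Toy stand-ins for internal network vectors, keyed by
# (meaning, source language). These numbers are illustrative only.
vectors = {
    ("stratosphere", "en"): [0.90, 0.10, 0.00],
    ("stratosphere", "ja"): [0.80, 0.20, 0.10],
    ("stratosphere", "ko"): [0.85, 0.15, 0.05],
    ("weather", "en"): [0.10, 0.90, 0.20],
    ("weather", "ja"): [0.00, 0.80, 0.30],
    ("weather", "ko"): [0.05, 0.85, 0.25],
}


def mean_similarity(same_meaning: bool) -> float:
    """Average cosine similarity over all pairs that do (or do not) share a meaning."""
    keys = list(vectors)
    sims = [
        cosine(vectors[a], vectors[b])
        for i, a in enumerate(keys)
        for b in keys[i + 1:]
        if (a[0] == b[0]) == same_meaning
    ]
    return sum(sims) / len(sims)


within = mean_similarity(True)   # same meaning, different source languages
across = mean_similarity(False)  # different meanings

print(f"same meaning: {within:.3f}, different meaning: {across:.3f}")
```

On real data, the vectors would come from the network itself; a large gap between the two averages would be consistent with the grouping by meaning that the figure shows.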

The new Multilingual Google Neural Machine Translation system is running in production today for all Google Translate users, according to the researchers.

Abstract of Google’s Multilingual Neural Machine Translation System: Enabling Zero-Shot Translation

We propose a simple, elegant solution to use a single Neural Machine Translation (NMT) model to translate between multiple languages. Our solution requires no change in the model architecture from our base system but instead introduces an artificial token at the beginning of the input sentence to specify the required target language. The rest of the model, which includes encoder, decoder and attention, remains unchanged and is shared across all languages. Using a shared wordpiece vocabulary, our approach enables Multilingual NMT using a single model without any increase in parameters, which is significantly simpler than previous proposals for Multilingual NMT. Our method often improves the translation quality of all involved language pairs, even while keeping the total number of model parameters constant. On the WMT’14 benchmarks, a single multilingual model achieves comparable performance for English→French and surpasses state-of-the-art results for English→German. Similarly, a single multilingual model surpasses state-of-the-art results for French→English and German→English on WMT’14 and WMT’15 benchmarks respectively. On production corpora, multilingual models of up to twelve language pairs allow for better translation of many individual pairs. In addition to improving the translation quality of language pairs that the model was trained with, our models can also learn to perform implicit bridging between language pairs never seen explicitly during training, showing that transfer learning and zero-shot translation is possible for neural translation. Finally, we show analyses that hints at a universal interlingua representation in our models and show some interesting examples when mixing languages.