Helping Siri hear you at a party

August 14, 2015

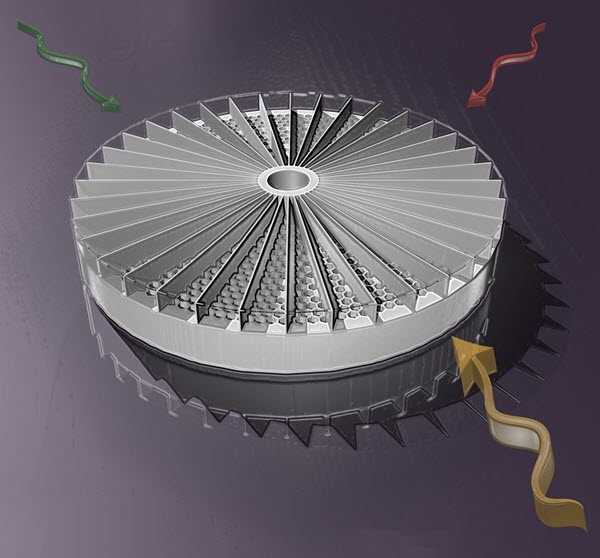

This prototype sensor can separate out simultaneous sounds coming from different directions (credit: Steve Cummer, Duke University)

Duke University engineers have invented a device that emulates the “cocktail party effect” — the remarkable ability of the brain to home in on a single voice in a room with voices coming from multiple directions.

The device uses plastic metamaterials — the combination of natural materials in repeating patterns to achieve unnatural properties — to determine the direction of a sound and extract it from the surrounding background noise.

“We think this could improve the performance of voice-activated devices like smartphones and game consoles while also reducing the complexity of the system,” said Yangbo (Abel) Xie, a PhD student in electrical and computer engineering at Duke and lead author of the paper.

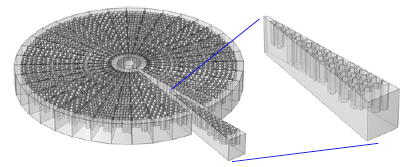

Metamaterial and one fan-like waveguide section, showing the varying resonator cavity depths. For each person speaking, these unique cavities modify the distribution of sound strength across the frequency spectrum, creating a unique directional signature (credit: Yangbo Xie et al./PNAS)

How it works

The 3D-printed proof-of-concept plastic device looks like a pie-shaped honeycomb split into dozens of slices. The depth of the resonator cavities varies within each slice, giving each slice of the honeycomb pie a unique sonic pattern.

“The cavities behave like soda bottles when you blow across their tops,” said Steve Cummer, professor of electrical and computer engineering at Duke. “The amount of soda left in the bottle, or the depth of the cavities in our case, affects the pitch of the sound they make, and this changes the incoming sound in a subtle but detectable way.”
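The soda bottle in Cummer's analogy is a textbook Helmholtz resonator, whose pitch is f = (c / 2π) · sqrt(A / (V · L)) for neck area A, neck length L, and enclosed air volume V. The sketch below assumes that ideal formula (no end corrections) and a hypothetical bottle size; the article does not say how the prototype's cavities are modeled, so this illustrates only the analogy, not the device's actual design.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C

def helmholtz_frequency(neck_area, neck_length, air_volume, c=SPEED_OF_SOUND):
    """Resonant frequency in Hz of an ideal Helmholtz resonator (no end corrections)."""
    return (c / (2 * math.pi)) * math.sqrt(neck_area / (air_volume * neck_length))

# Hypothetical bottle: 2 cm neck diameter, 5 cm neck length, 40 cm^2 body cross-section.
NECK_AREA = math.pi * 0.01 ** 2   # m^2
NECK_LENGTH = 0.05                # m
BODY_AREA = 40e-4                 # m^2

def bottle_pitch(air_depth):
    """Pitch in Hz when the air column above the soda is air_depth metres deep."""
    return helmholtz_frequency(NECK_AREA, NECK_LENGTH, BODY_AREA * air_depth)

# Less soda means a deeper air column, a larger volume V, and a lower pitch.
for depth_cm in (5, 10, 15):
    print(f"{depth_cm} cm of air -> {bottle_pitch(depth_cm / 100):.0f} Hz")
```

With these made-up dimensions the pitch drops from about 306 Hz with 5 cm of air to about 177 Hz with 15 cm, falling as one over the square root of the air volume. That dependence on depth is the knob the varying cavity depths turn.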

When a sound wave reaches the device, the cavities distort it slightly, and the distortion carries a signature specific to the slice of the pie the wave passed over. A microphone picks up the combined sound and sends it to a computer, which separates the jumble of noises based on these unique distortions.
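The article does not spell out the separation algorithm beyond these "unique distortions" (the abstract at the end mentions compressive sensing). A minimal sketch of the idea, under loud assumptions: each slice d has a known power-spectrum signature (column d of a hypothetical matrix `G`), the power spectra of uncorrelated sources simply add at the microphone, and the test sound's spectrum is known, as in the identical-sound trials described next. Finding which few directions explain the single-microphone measurement is then a sparse-recovery problem. Here it is solved by brute force over every set of k directions, which is the exact combinatorial problem that compressive-sensing solvers approximate efficiently. This is a swapped-in illustration, not the Duke team's method, and every number and name in it is hypothetical.

```python
import itertools
import numpy as np

def recover_directions(G, z, k):
    """Exhaustive k-sparse recovery: fit z with every set of k columns of G
    by least squares and keep the set leaving the smallest residual."""
    best_support, best_coef, best_res = None, None, np.inf
    for support in itertools.combinations(range(G.shape[1]), k):
        cols = G[:, list(support)]
        coef, *_ = np.linalg.lstsq(cols, z, rcond=None)
        res = np.linalg.norm(z - cols @ coef)
        if res < best_res:
            best_support, best_coef, best_res = support, coef, res
    return best_support, best_coef

rng = np.random.default_rng(seed=1)
n_bins, n_dirs = 24, 36  # hypothetical: fewer frequency bins than candidate directions

# Hypothetical directional signatures: G[f, d] is the power gain (0.5 to 1.5)
# that slice d applies to frequency bin f.
G = 0.5 + rng.random((n_bins, n_dirs))

# Hypothetical scene: the same known sound arrives from three directions.
true_dirs = [4, 17, 30]
gains = np.array([1.0, 0.8, 0.6])
P = 1.0 + rng.random(n_bins)       # known power spectrum of the test sound
Y = P * (G[:, true_dirs] @ gains)  # microphone power spectrum (assumed additive)

z = Y / P                          # divide out the known source spectrum
support, coef = recover_directions(G, z, k=3)
print(support, np.round(coef, 3))
```

Because there are fewer frequency bins (24) than candidate directions (36), ordinary least squares alone could not single out the sources; the assumption that only three directions are active is what makes the single-sensor measurement solvable. Exhaustive search grows combinatorially with the number of directions, which is why practical systems use greedy or convex compressive-sensing solvers instead.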

The researchers tested the device in multiple trials in an anechoic chamber, simultaneously sending three identical sounds at the sensor from three different directions. The device distinguished between them with 96.7 percent accuracy.

Uses in medical imaging, other applications

While the prototype is six inches wide, the researchers believe it could be scaled down and incorporated into the devices we use on a regular basis.

Once miniaturized, the device could have applications in voice-command electronics and medical sensing devices that use sound waves, like ultrasound imaging, said Xie. “It should also be possible to improve the sound fidelity and increase functionalities for applications like hearing aids and cochlear implants.”

The work was supported by a Multidisciplinary University Research Initiative grant from the Office of Naval Research. Conceivably, the design concept could also be used in hydrophone-based systems to separate underwater sounds, or to isolate gunshots and other battlefield and urban sounds.

The research appeared in the Proceedings of the National Academy of Sciences on August 11.

Abstract of Single-sensor “cocktail party listening” with acoustic metamaterials

Designing a “cocktail party listener” that functionally mimics the selective perception of a human auditory system has been pursued over the past decades. By exploiting acoustic metamaterials and compressive sensing, we present here a single-sensor listening device that separates simultaneous overlapping sounds from different sources. The device with a compact array of resonant metamaterials is demonstrated to distinguish three overlapping and independent sources with 96.67% correct audio recognition. Segregation of the audio signals is achieved using physical layer encoding without relying on source characteristics. This hardware approach to multichannel source separation can be applied to robust speech recognition and hearing aids and may be extended to other acoustic imaging and sensing applications.