How robots can learn from babies

December 7, 2015

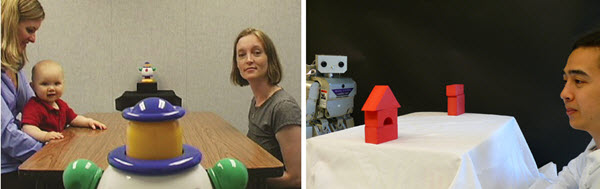

A collaboration between UW developmental psychologists and computer scientists aims to enable robots to learn in the same way that children naturally do. The team used research on how babies follow an adult’s gaze to “teach” a robot to perform the same task. (credit: University of Washington)

Babies learn about the world by exploring how their bodies move in space, grabbing toys, pushing things off tables, and watching and imitating what adults are doing. So instead of laboriously writing code (or moving a robot’s arm or body to show it how to perform an action), why not just let robots learn like babies?

That’s exactly what University of Washington (UW) developmental psychologists and computer scientists have now demonstrated in experiments suggesting that robots can “learn” much like kids: by amassing data through exploration, watching a human do something, and determining how to perform that task on their own.

The new method would allow someone who knows nothing about computer programming to teach a robot by demonstration, for example by showing it how to clean dishes, fold clothes, or do other household chores.

“But to achieve that goal, you need the robot to be able to understand those actions and perform them on their own,” said Rajesh Rao, a UW professor of computer science and engineering and senior author of an open-access paper in the journal PLoS ONE.

In the paper, the UW team developed a new probabilistic model aimed at solving a fundamental challenge in robotics: building robots that can learn new skills by watching people and imitating them. The roboticists collaborated with UW psychology professor and I-LABS co-director Andrew Meltzoff, whose seminal research has shown that children as young as 18 months can infer the goal of an adult’s actions and develop alternate ways of reaching that goal themselves.

In one example, infants saw an adult try to pull apart a barbell-shaped toy, but the adult failed to achieve that goal because the toy was stuck together and his hands slipped off the ends. The infants watched carefully and then decided to use alternate methods — they wrapped their tiny fingers all the way around the ends and yanked especially hard — duplicating what the adult intended to do.

Machine-learning algorithms based on play

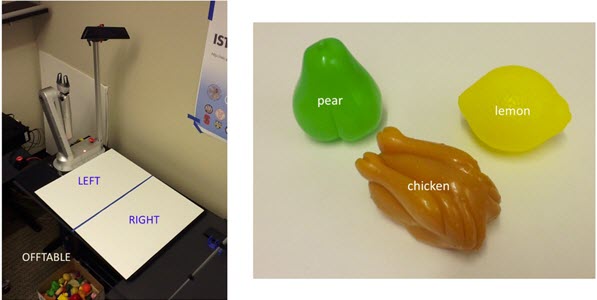

This robot used the new UW model to imitate a human moving toy food objects around a tabletop. By learning which actions worked best with its own geometry, the robot could use different means to achieve the same goal — a key to enabling robots to learn through imitation. (credit: University of Washington)

Children acquire intention-reading skills, in part, through self-exploration that helps them learn the laws of physics and how their own actions influence objects, eventually allowing them to amass enough knowledge to learn from others and to interpret their intentions. Meltzoff thinks that one of the reasons babies learn so quickly is that they are so playful.

“Babies engage in what looks like mindless play, but this enables future learning. It’s a baby’s secret sauce for innovation,” Meltzoff said. “If they’re trying to figure out how to work a new toy, they’re actually using knowledge they gained by playing with other toys. During play they’re learning a mental model of how their actions cause changes in the world. And once you have that model you can begin to solve novel problems and start to predict someone else’s intentions.”

Rao’s team used that infant research to develop machine learning algorithms that allow a robot to explore how its own actions result in different outcomes. Then the robot uses that learned probabilistic model to infer what a human wants it to do and complete the task, and even to “ask” for help if it’s not certain it can.
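The two steps described above, exploring first and then inferring a goal, can be illustrated with a minimal sketch. It is not the model from the paper: the three tabletop regions, the two push actions, the 80 percent success rate of the toy world, and every function name are assumptions made purely for illustration. The robot counts what its own actions do, then applies Bayes’ rule to guess which end position a human was aiming for.

```python
import random
from collections import Counter, defaultdict

POSITIONS = ["left", "middle", "right"]   # hypothetical tabletop regions
ACTIONS = ["push_left", "push_right"]     # hypothetical robot actions


def simulate(position, action, rng):
    """Toy world (an assumption): a push shifts the object one region 80% of the time."""
    if rng.random() >= 0.8:
        return position  # the push slipped and nothing moved
    step = -1 if action == "push_left" else 1
    index = POSITIONS.index(position) + step
    return POSITIONS[max(0, min(index, len(POSITIONS) - 1))]


def explore(trials, rng):
    """Self-exploration: try random actions and count what happens."""
    counts = defaultdict(Counter)
    for _ in range(trials):
        state = rng.choice(POSITIONS)
        action = rng.choice(ACTIONS)
        counts[(state, action)][simulate(state, action, rng)] += 1
    return counts


def transition_prob(counts, state, action, next_state):
    """Estimate P(next_state | state, action) with add-one smoothing."""
    seen = counts[(state, action)]
    return (seen[next_state] + 1) / (sum(seen.values()) + len(POSITIONS))


def action_given_goal(counts, state, goal):
    """Assumed policy: actions are chosen in proportion to their chance of reaching the goal."""
    weights = {a: transition_prob(counts, state, a, goal) for a in ACTIONS}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


def infer_goal(counts, state, observed_next):
    """Posterior over goals after one observed change, with a uniform prior over goals."""
    scores = {}
    for goal in POSITIONS:
        policy = action_given_goal(counts, state, goal)
        scores[goal] = sum(
            policy[a] * transition_prob(counts, state, a, observed_next)
            for a in ACTIONS
        )
    total = sum(scores.values())
    return {goal: s / total for goal, s in scores.items()}


rng = random.Random(0)                 # fixed seed so the run is repeatable
model = explore(3000, rng)             # the robot "plays" with the object
posterior = infer_goal(model, "middle", "right")  # human moved it middle -> right
best_goal = max(posterior, key=posterior.get)
print(best_goal, posterior)
```

In this toy setting the posterior favors the region where the object actually ended up, but it leaves some probability on “middle”, because a slipped push aimed at keeping the object in place would look different from what was observed only part of the time.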

How to follow a human’s gaze

The team tested its robotic model in two different scenarios: a computer simulation experiment in which a robot learns to follow a human’s gaze, and another experiment in which an actual robot learns to imitate human actions involving moving toy food objects to different areas on a tabletop.

In the gaze experiment, the robot learns a model of its own head movements and assumes that the human’s head is governed by the same rules. The robot tracks the beginning and ending points of a human’s head movements as the human looks across the room and uses that information to figure out where the person is looking. The robot then uses its learned model of head movements to fixate on the same location as the human.
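A simplified geometric version of that idea is sketched below. It is an illustration under stated assumptions, not the team’s probabilistic model: the scene is a flat, top-down 2D room, the human’s final head angle is taken as given, and the object names, coordinates, and function names are all hypothetical. The robot applies the same “head angle points at a target” rule to the human that it would apply to itself, picks the object best aligned with the human’s head direction, then computes the head angle it needs to look at that object.

```python
import math


def bearing(from_xy, to_xy):
    """Angle in radians from one point to another in the top-down plane."""
    return math.atan2(to_xy[1] - from_xy[1], to_xy[0] - from_xy[0])


def gaze_target(head_xy, head_yaw, objects):
    """Return the object whose bearing from the head is closest to the head's yaw."""
    def angular_error(name):
        diff = bearing(head_xy, objects[name]) - head_yaw
        # Wrap the difference into [-pi, pi] before taking its size.
        return abs(math.atan2(math.sin(diff), math.cos(diff)))
    return min(objects, key=angular_error)


# Hypothetical scene: positions are made up for illustration.
objects = {"ball": (2.0, 2.0), "cup": (4.0, 0.0), "book": (0.0, 3.0)}
human_head = (0.0, 0.0)
robot_head = (4.0, 4.0)
human_yaw = math.radians(40)  # where the human's head ends up pointing

target = gaze_target(human_head, human_yaw, objects)
robot_yaw = bearing(robot_head, objects[target])  # angle the robot must turn to
print(target, round(math.degrees(robot_yaw), 1))
```

Choosing the nearest bearing rather than requiring an exact match is a design choice for this sketch; it tolerates the fact that a head rarely points precisely at the thing being looked at.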

The team also recreated one of Meltzoff’s tests, which showed that infants who had experience with visual barriers and blindfolds weren’t interested in looking where a blindfolded adult was looking, because they understood the person couldn’t actually see. Once the team enabled the robot to “learn” what the consequences of being blindfolded were, it no longer followed the human’s head movement to look at the same spot.

Smart movements: beyond mimicking

In the second experiment, the team allowed a robot to experiment with pushing or picking up different objects and moving them around a tabletop. The robot then used the model it had learned from that experimentation to imitate a human who moved objects around or cleared everything off the tabletop. Rather than rigidly mimicking the human action each time, the robot sometimes used different means to achieve the same ends.

“If the human pushes an object to a new location, it may be easier and more reliable for a robot with a gripper to pick it up to move it there rather than push it,” said lead author Michael Jae-Yoon Chung, a UW doctoral student in computer science and engineering. “But that requires knowing what the goal is, which is a hard problem in robotics and which our paper tries to address.”
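Once a goal is known, choosing the means can be as simple as comparing how reliable each of the robot’s own actions is for that goal. The sketch below assumes the robot has already estimated success probabilities through its own trials; the numbers, action names, goal names, and the 0.6 confidence threshold are all invented for illustration and do not come from the paper. If no action looks reliable enough, the sketch falls back to asking a human for help.

```python
def choose_action(success_prob, goal, threshold=0.6):
    """Pick the robot's most reliable action for a goal, or ask for help.

    success_prob maps (action, goal) pairs to the robot's own estimated
    probability that the action achieves the goal (hypothetical values).
    """
    candidates = {
        action: p for (action, g), p in success_prob.items() if g == goal
    }
    if not candidates:
        return "ask_for_help"  # the robot has never tried anything for this goal
    best = max(candidates, key=candidates.get)
    return best if candidates[best] >= threshold else "ask_for_help"


# Made-up estimates a gripper robot might have learned by experimenting.
learned = {
    ("push", "object_on_right_side"): 0.55,
    ("pick_and_place", "object_on_right_side"): 0.90,
    ("push", "object_off_table"): 0.40,
    ("pick_and_place", "object_off_table"): 0.30,
}

print(choose_action(learned, "object_on_right_side"))
print(choose_action(learned, "object_off_table"))
```

With these invented numbers, the robot would pick the object up even though the human pushed it, and would ask for help with clearing the table because neither of its actions is reliable enough.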

Though the initial experiments involved learning how to infer goals and imitate simple behaviors, the team plans to explore how such a model can help robots learn more complicated tasks.

“Babies learn through their own play and by watching others,” said Meltzoff, “and they are the best learners on the planet. Why not design robots that learn as effortlessly as a child?”

That raises a question: can babies also learn from robots they’ve taught — in a closed loop? And where might that eventually take education — and civilization?

Abstract of A Bayesian Developmental Approach to Robotic Goal-Based Imitation Learning

A fundamental challenge in robotics today is building robots that can learn new skills by observing humans and imitating human actions. We propose a new Bayesian approach to robotic learning by imitation inspired by the developmental hypothesis that children use self-experience to bootstrap the process of intention recognition and goal-based imitation. Our approach allows an autonomous agent to: (i) learn probabilistic models of actions through self-discovery and experience, (ii) utilize these learned models for inferring the goals of human actions, and (iii) perform goal-based imitation for robotic learning and human-robot collaboration. Such an approach allows a robot to leverage its increasing repertoire of learned behaviors to interpret increasingly complex human actions and use the inferred goals for imitation, even when the robot has very different actuators from humans. We demonstrate our approach using two different scenarios: (i) a simulated robot that learns human-like gaze following behavior, and (ii) a robot that learns to imitate human actions in a tabletop organization task. In both cases, the agent learns a probabilistic model of its own actions, and uses this model for goal inference and goal-based imitation. We also show that the robotic agent can use its probabilistic model to seek human assistance when it recognizes that its inferred actions are too uncertain, risky, or impossible to perform, thereby opening the door to human-robot collaboration.