How the Internet (and sex) amplifies irrational group behavior

April 15, 2013

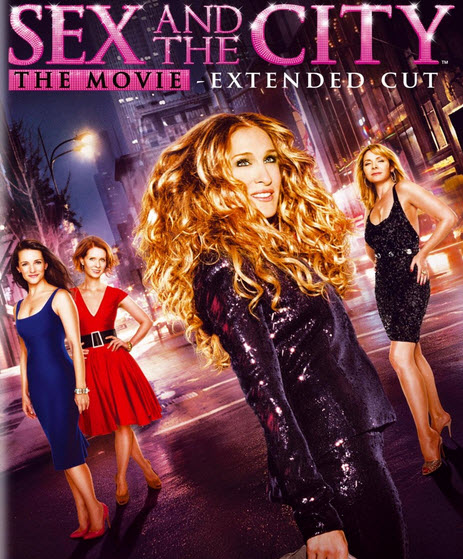

(Credit: New Line Home Video)

New research from the University of Copenhagen combines formal philosophy, social psychology, and decision theory to understand and counter the irrational group behavior that the Internet and social media amplify.

“Group behavior that encourages us to make decisions based on false beliefs has always existed. However, with the advent of the Internet and social media, this kind of behavior is more likely to occur than ever, and on a much larger scale, with possibly severe consequences for the democratic institutions underpinning the information societies we live in,” says professor of philosophy Vincent F. Hendricks at the University of Copenhagen.

He and fellow researchers Pelle G. Hansen and Rasmus Rendsvig analyze a number of social information processes that are enhanced by modern information technology.

Informational cascades and Sex and the City

Curiously, an old book entitled Love Letters of Great Men and Women: From the 18th Century to the Present Day, which in 2007 suddenly climbed the Amazon.com bestseller list, provides a good example of group behavior set in an online context:

“What generated the huge interest in this long forgotten book was a scene in the movie Sex and the City in which the main character Carrie Bradshaw reads a book entitled Love Letters of Great Men — which does not exist. So, when fans of the movie searched for this book, Amazon’s search engine suggested Love Letters of Great Men and Women instead, which made a lot of people buy a book they did not want. Then Amazon’s computers started pairing the book with Sex and the City merchandise, and the old book sold in great numbers,” Vincent F. Hendricks points out.

“This is known as an ‘informational cascade’ in which otherwise rational individuals base their decisions not only on their own private information, but also on the actions of those who act before them. The point is that, in an online context, this can take on massive proportions and result in actions that miss their intended purpose.”
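The mechanism Hendricks describes can be illustrated with a small simulation. The sketch below is not the researchers' model; it assumes a simplified counting rule in which each person sees every earlier decision and ignores their own private signal once earlier buyers outnumber earlier non-buyers (or the reverse) by two or more. The function name `run_cascade` and the threshold of two are illustrative assumptions.

```python
def run_cascade(signals):
    """Simulate sequential decisions under a simplified cascade rule.

    signals: list of booleans, one private signal per person
             (True = "this book is what I want", False = "it is not").
    Returns the list of actions actually taken (True = buy, False = skip).
    """
    actions = []
    for signal in signals:
        # Net lead of earlier buyers over earlier non-buyers.
        lead = sum(1 if action else -1 for action in actions)
        if lead >= 2:
            action = True      # follow the crowd, ignore the private signal
        elif lead <= -2:
            action = False     # follow the crowd, ignore the private signal
        else:
            action = signal    # no clear crowd yet: act on private information
        actions.append(action)
    return actions


# Two early buyers are enough to make everyone after them buy,
# even though the last three people privately doubt the book.
print(run_cascade([True, True, False, False, False]))
# → [True, True, True, True, True]
```

Under these assumptions, the last three buyers act against their own information, which is the sense in which a cascade can "miss its intended purpose."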

Online discussions take place in echo chambers

Buying the wrong book does not have serious consequences for our democratic institutions, but according to Professor Vincent F. Hendricks it exemplifies what may happen when we hand our decision-making power to information technologies and processes. He also points to other social phenomena, such as “group polarization” and “information selection,” that do pose threats to democratic discussion when amplified by online media.

“In group polarization, which is well-documented by social psychologists, an entire group may shift to a more radical viewpoint after a discussion even though the individual group members did not subscribe to this view prior to the discussion. This happens for a number of reasons. One is that group members want to represent themselves in a favorable light in the group by adopting a viewpoint slightly more extreme than the perceived mean.*

“In online forums, this well-known phenomenon is made even more problematic by the fact that discussions take place in settings where group members are fed only the information that fits their worldview, making the discussion forum an echo chamber where group members only hear their own voices,” Vincent F. Hendricks suggests.

Information selection

Companies such as Google and Facebook have designed algorithms intended to filter out irrelevant information, a process known as information selection, so that we are served only content that fits our click history. According to Professor Hendricks, this is a problem from a democratic perspective, because you may never encounter views or arguments online that contradict your worldview.

“If we value democratic discussion and deliberation, we should apply rigorous analysis, from a variety of disciplines, to the workings of these online social information processes as they become increasingly influential in our information societies.”

More info: Hendricks’s Initiative for Information Processing and the Analysis of Democracy.

* This could never happen in KurzweilAI comments and Forums :) — Editor