How to reconstruct from brain images which letter a person was reading

August 24, 2013

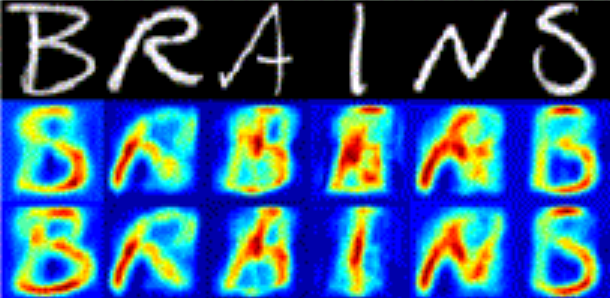

Top: original visual image presented to subjects. Below: two steps in progressive reconstructions from fMRI data. Each letter is predicted using models trained on fMRI data for the remaining letter classes to improve the reconstructions. (Credit: S. Schoenmakers et al./Neuroimage)

Researchers from Radboud University Nijmegen in the Netherlands have succeeded in determining which letter a test subject was looking at.

They did so by analyzing functional magnetic resonance imaging (fMRI) scans of activity in the visual cortex of the brain, using a linear Gaussian mathematical model.

The researchers “taught” the model how 1200 voxels (volumetric pixels) from the brain scans, each measuring 2×2×2 mm, correspond to individual pixels in different versions of handwritten letters.

By combining all the information about the pixels from the voxels, it became possible to reconstruct a rough version of the image viewed by the subject. The result was a fuzzy speckle pattern.
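For readers who want the math, a linear Gaussian model of this kind can be sketched in its generic textbook form. The symbols below are assumptions chosen for illustration and are not taken from the paper: $x$ is the vector of image pixels, $y$ the vector of voxel responses, $B$ a learned weight matrix, $\Sigma$ the noise covariance, and $R$ the covariance of a Gaussian prior over images.

$$
y = B^{\top} x + \varepsilon, \qquad \varepsilon \sim \mathcal{N}(0, \Sigma), \qquad x \sim \mathcal{N}(0, R)
$$

$$
\hat{x} = \left( R^{-1} + B \Sigma^{-1} B^{\top} \right)^{-1} B \Sigma^{-1} y
$$

Under these assumptions, the reconstruction $\hat{x}$ is the posterior mean of the image given the scan: every pixel estimate is a weighted combination of all the voxel responses, which is consistent with a first reconstruction that is recognizable but fuzzy.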

Improving model performance

They then taught the model what letters actually look like. “This improved the recognition of the letters enormously,” said lead researcher Marcel van Gerven. “The model compares the letters to determine which one corresponds most exactly with the speckle image, and then pushes the results of the image towards that letter.”

The result was a close resemblance to the actual letter, a true reconstruction.
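The “compare, then push” idea van Gerven describes can be illustrated with a toy sketch. This is not the researchers' implementation: the 3×3 templates, the function names, and the `strength` parameter are all hypothetical, and a plain squared distance stands in for whatever comparison the actual model uses.

```python
# Toy illustration only: hypothetical 3x3 "letter" templates, flattened row by row.
TEMPLATES = {
    "L": [1, 0, 0,
          1, 0, 0,
          1, 1, 1],
    "T": [1, 1, 1,
          0, 1, 0,
          0, 1, 0],
}


def squared_distance(a, b):
    """Sum of squared pixel differences between two flattened images."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def closest_letter(speckle, templates):
    """Return the name of the template that best matches the speckle image."""
    return min(templates, key=lambda name: squared_distance(speckle, templates[name]))


def push_towards(speckle, template, strength=0.5):
    """Blend the speckle image with a template; strength=0 leaves it unchanged."""
    return [(1 - strength) * s + strength * t for s, t in zip(speckle, template)]


# A made-up fuzzy reconstruction that loosely resembles an "L".
noisy = [0.8, 0.3, 0.1,
         0.7, 0.2, 0.4,
         0.6, 0.9, 0.5]

best = closest_letter(noisy, TEMPLATES)
sharpened = push_towards(noisy, TEMPLATES[best], strength=0.5)
print(best)
print([round(v, 2) for v in sharpened])
```

Here the prior knowledge is simply the set of templates. The blend moves each pixel of the fuzzy image part of the way towards the best-matching letter, so the result sits closer to that letter than the speckle pattern did.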

“Our approach is similar to how we believe the brain itself combines prior knowledge with sensory information. For example, you can recognize the lines and curves in this article as letters only after you have learned to read. And this is exactly what we are looking for: models that show what is happening in the brain in a realistic fashion.

“We hope to improve the models to such an extent that we can also apply them to the working memory or to subjective experiences such as dreams or visualizations. Reconstructions indicate whether the model you have created approaches reality.”

“In our further research we will be working with a more powerful MRI scanner,” said Sanne Schoenmakers, who is working on a thesis about decoding thoughts. “Due to the higher resolution of the scanner, we hope to be able to link the model to more detailed images. We are currently linking images of letters to 1200 voxels in the brain; with the more powerful scanner we will link images of faces to 15,000 voxels.”

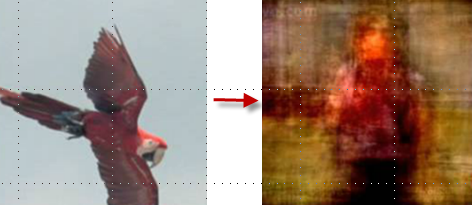

A frame (right) reconstructed from an fMRI image from the brain of a person watching a movie (left) (credit: Shinji Nishimoto et al., Current Biology)

The research builds on 2011 research by University of California, Berkeley scientists, also using fMRI and computational models to decode and reconstruct people’s dynamic visual experiences of viewing movies.