Max More and Ray Kurzweil on the Singularity

February 26, 2002 by Max More, Ray Kurzweil

As technology accelerates over the next few decades and machines achieve superintelligence, we will encounter a dramatic phase transition: the “Singularity.” Will it be a “wall” (a barrier as conceptually impenetrable as the event horizon of a black hole in space), an “AI-Singularity” ruled by super-intelligent AIs, or a gentler “surge” into a posthuman era of agelessness and super-intelligence? Will this meme be hijacked by religious “passive singularitarians” obsessed with a future rapture? Ray Kurzweil and Extropy Institute president Max More debate.

Originally published on KurzweilAI.net February 26, 2002.

Ray: You were one of the earliest pioneers in articulating and exploring issues of the acceleration of technology and transhumanism. What led you to this examination?

Max: This short question is actually an enormous question. A well-rounded answer would take longer than I dare impose on any reader! One short answer is this:

Before my interest in examining accelerating technological progress and issues of transhumanism, I first had a realization. I saw very clearly how limited human beings are in their wisdom, in their intellectual and emotional development, and in their sensory and physical capabilities. I have always felt dissatisfied with those limitations and faults. After an early-teens interest in what I’ll loosely call (with mild embarrassment) “psychic stuff,” as I came to learn more science and critical thinking I ceased to give any credence to psychic phenomena, as well as to any traditional religious views. With those paths to any form of transcendence closed, I realized that transhumanity (as I began to think of it) would only be achieved through science and technology steered by human values.

So, the realization was in two parts: A recognition of the undesirable limitations of human nature. And an understanding that science and technology were essential keys to overcoming human nature’s confines. Of my readings in science, evolutionary theory was especially potent in encouraging me to think in terms of the development of intelligence rather than static Platonic forms. When I taught basic evolutionary theory to college students, I invariably found that about 95% of them had never studied it in school. Most quickly developed some understanding of it in the class, but some found it hard to adjust to a different perspective with so many implications. To me evolutionary thinking seemed natural. It only made it clearer that humanity need not be the pinnacle of evolution.

My drive to understand the issues in a transhumanist manner resulted from a melding of technological progress and philosophical perspective. Even before studying philosophy in the strict sense, I had the same essential worldview that included perpetual progress, practical optimism, and self-transformation. Watching the Apollo 11 moon landing at the age of 5, then all the Apollo launches to follow, excited me tremendously. At that time, space was the frontier to explore. Soon, even before I had finished growing, I realized that the major barrier to crack first was that of human aging and mortality. In addition to tracking progress, from my early teens I started taking whatever reasonable measures I could to extend my life expectancy.

Philosophically, I formed an extropian/transhumanist perspective by incorporating numerous ideas and influences into what many have found to be a coherent framework. Despite disagreeing with much (and not having read all) of Nietzsche’s work, I do have a fondness for certain of his views and the way he expressed them. Most centrally, as a transhumanist, I resonate to Nietzsche’s declaration that “Man is a rope, fastened between animal and overman – a rope over an abyss… What is great in man is that he is a bridge and not a goal.” A bridge, not a goal. That nicely summarizes a transhumanist perspective. We are not perfect. Neither are we to be despised or pitied or to debase ourselves before imaginary perfect beings. We are to see ourselves as a work in progress. Through ambition, intelligence, and a dash of good sense, we will progress from human to something better (according to my values).

Many others have influenced my interest in these ideas. Though not the deepest or clearest thinker, Timothy Leary’s SMI2LE (Space Migration, Intelligence Increase, Life Extension) formula still appeals to me. However, today I find issues such as achieving superlongevity, superintelligence, and self-sculpting abilities to be more urgent. After my earlier years of interest, I particularly grew my thinking by reading people including philosophers Paul Churchland and Daniel Dennett, biologist Richard Dawkins, Hans Moravec, Roy Walford, Marvin Minsky, Vernor Vinge, and most recently Ray Kurzweil, who I think has brought a delightful clarity to many transhumanist issues.

Ray: How do you define the Singularity?

Max: I believe the term “Singularity”, as we are using it these days, was popularized by Vernor Vinge in his 1986 novel Marooned in Realtime. (It appears that the term was first used in something like this sense, but not implying superhuman intelligence, by John von Neumann in the 1950s.) Vinge’s own usage seems to leave an exact definition open to varying interpretations. Certainly it involves an accelerating increase in machine intelligence culminating in a sudden shift to super-intelligence, either through the awakening of networked intelligence or the development of individual AIs. From the human point of view, according to Vinge, this change “will be a throwing away of all the previous rules, perhaps in the blink of an eye”. Since the term means different things to different people, I will give three definitions.

Singularity #1: This Singularity includes the notion of a “wall” or “prediction horizon”–a time horizon beyond which we can no longer say anything useful about the future. The pace of change is so rapid and deep that our human minds cannot sensibly conceive of life post-Singularity. Many regard this as a specific point in time in the future, sometimes estimated at around 2035 when AI and nanotechnology are projected to be in full force. However, the prediction horizon definition does not require such an assumption. The more that progress accelerates, the shorter the distance measured in years that we may see ahead. But as we progress, the prediction horizon, while probably shortening in time, will also move further out. So this definition could be broken into two, one of which insists on a particular date for a prediction horizon, while the other acknowledges a moving horizon. One argument for assigning a point in time is based on the view that the emergence of super-intelligence will be a singular advance, an instantaneous break with all the rules of the past.

Singularity #2: We might call this the AI-Singularity, or Moravec’s Singularity since it most closely resembles the detailed vision of roboticist Hans Moravec. In this Singularity humans have no guaranteed place. The Singularity is driven by super-intelligent AI which immediately follows from human-level AI. Without the legacy hardware of humans, these AIs leave humans behind in a runaway acceleration. In some happier versions of this type of Singularity, the super-intelligent AIs benevolently “uplift” humans to their level by means of uploading.

Singularity #3: Singularity seen as a surge into a transhuman and posthuman era. This view, though different in its emphasis, is compatible with the shifting time-horizon version of Singularity #1. In Singularity as Surge the rate of change need not remotely approach infinity (as a mathematical singularity). In this view, technological progress will continue to accelerate, though perhaps not quite as fast as some projections suggest, rapidly but not discontinuously transforming the human condition. This could be termed a Singularity for two reasons: First, it would be a historically brief phase transition from the human condition to a posthuman condition of agelessness, super-intelligence, and physical, intellectual, and emotional self-sculpting. This dramatic phase transition, while not mathematically instantaneous, will mean an unprecedented break from the past. Second, since the posthuman condition (itself continually evolving) will be so radically different from human life, it will likely be largely if not completely incomprehensible to humans as we are today. Unlike some versions of the Singularity, the Surge/phase transition view allows that people may be at different stages along the path to posthuman at the same time, and that we may become posthuman in stages rather than all at once. For instance, I think it fairly likely that we achieve superlongevity before super-intelligence.

Ray: Do you see a Singularity in the future of human civilization?

Max: I do see a Singularity of the third kind in our future. A historically, if not subjectively, extremely fast phase change from human to transhuman to posthuman appears as a highly likely scenario. I do not see it as inevitable. It will take vast amounts of hard work, intelligence, determination, and some wisdom and luck to achieve. It’s possible that some humans will destroy the race through means such as biological warfare. Or our culture may rebel against change, seduced by religious and cultural urgings for “stability”, “peace” and against “hubris” and “the unknown”.

Although a Singularity as Surge could be stopped or slowed in these and other ways (massive meteorite strike?), I see the most likely scenario as being a posthuman Singularity. This is strongly implied by current accelerating progress in numerous fields including computation, materials science, bioinformatics, and the convergence of infotech, neuroscience, biotech, microtech, and nanotech.

Although I do not see super-intelligence alone as the only aspect of a Singularity, I do see it as a central aspect and driver. I grant that it is entirely possible that super-intelligence will arrive in the form of a deus ex machina, a runaway single-AI super-intelligence. However, my tentative assessment suggests that the Singularity is more likely to arise from one of two other means suggested by Vinge in his 1993 essay[1]. It could result from large networks of computers and their users–some future version of a semantic Web–“waking up” in the form of a distributed super-intelligent entity or community of minds. It could also (not exclusive of the previous scenario) result from increasingly intimate human-computer interfaces, whereby “computer” I loosely include all manner of sensors, processors, and networks. At least in the early stages, and partly in combination with human-computer interfaces, I expect biological human intelligence to be augmented through the biological sciences.

To summarize: I do not expect an instantaneous Singularity, nor one in which humans play no part after the creation of a self-improving human-level AI. I do anticipate a Singularity in the form of a growing surge in the pace of change, leading to a transhuman transition. This phase change will be a historically rapid and deep change in the evolutionary process. This short period will put an end to evolution in thrall to our genes. Biology will become an increasingly vestigial component of our nature. Biological evolution will become ever more suffused with and replaced by technological evolution, until we pass into the posthuman era.

As a postscript to this answer, I want to sound a note of caution. As the near-universal prevalence of religious belief testifies, humans tend to attach themselves, without rational thought, to belief systems that promise some form of salvation, heaven, paradise, or nirvana. In the Western world, especially in millenarian Christianity, millions are attracted to the notion of sudden salvation and of a “rapture” in which the saved are taken away to a better place.

While I do anticipate a Singularity as Surge, I am concerned that the Singularity concept is especially prone to being hijacked by this memeset. This danger especially arises if the Singularity is thought of as occurring at a specific point in time, and even more if it is seen as an inevitable result of the work of others. I fear that many otherwise rational people will be tempted to see the Singularity as a form of salvation, making personal responsibility for the future unnecessary. Already, I see a distressing number of superlongevity advocates who apparently do not exercise or eat healthily, instead firmly hoping that medical technology will cure aging before they die. Clearly this abdication of personal responsibility is not inherent in the Singularity concept. But I do see the concept as an attractor that will draw in those who treat it in this way. The only way I could see this as a good thing is if the Passive Singularitarians (as I will call them) substitute the Singularity for preexisting and much more unreasonable beliefs. I think those of us who speak of the Singularity should be wary of this risk if we value critical thought and personal responsibility. As much as I like Vernor and his thinking, I get concerned reading descriptions of the Singularity such as “a throwing away of all the previous rules, perhaps in the blinking of an eye.” This comes dangerously close to encouraging a belief in a Future Rapture.

Ray: When will the Singularity take place?

Max: I cannot answer this question with any precision. I feel more confident predicting general trends than specific dates. Some trends look very clear and stable, such as the growth in computer power and storage density. But I see enough uncertainties (especially in the detailed understanding of human intelligence) in the breakthroughs needed to pass through a posthuman Singularity to make it impossible to give one date. Many Singularity exponents see several trends in computer power and atomic control over matter reaching critical thresholds around 2030. It does look like we will have computers with hardware as powerful as the human brain by then, but I remain to be convinced that this will immediately lead to superhuman intelligence. I also see a tendency in many projections to take a purely technical approach and to ignore possible economic, political, cultural, and psychological factors that could dampen the advances and their impact. I will make one brief point to illustrate what I mean: Electronic computers have been around for over half a century, and used in business for decades. Yet their effect on productivity and economic growth only became evident in the mid-1990s as corporate organization and business processes finally reformed to make better use of the new technology. Practice tends to lag technology, yet projections rarely allow for this. (This factor also led to many dot-com busts where business models required consumers to change their behavior in major ways.)

Cautions aside, I would be surprised (and, of course, disappointed) if we did not move well into a posthuman Singularity by the end of this century. I think that we are already at the very near edge of the transhuman transition. This will gather speed, and could lead to a Singularity as phase transition by the middle of the century. So, I will only be pinned down to the extent of placing the posthuman Singularity as not earlier than 2020 and probably not later than 2100, with a best guess somewhere around the middle of the century. Since that puts me in my 80s or 90s, I hope I am unduly pessimistic!

Ray: Thanks, Max, for these thoughtful and insightful replies. I appreciate your description of transhumanity as a transcendence to be “achieved through science and technology steered by human values.” In this context, Nietzsche’s “Man is a rope, fastened between animal and overman – a rope over an abyss” is quite pertinent, if we interpret Nietzsche’s “overman” as a reference to transhumanism.

Your observation that the “future rapture” memeset could hijack the concept of the Singularity is discerning, but I would point out that humankind’s innate inclination for salvation is not necessarily irrational. Perhaps we have this inclination precisely to anticipate the Singularity. Maybe it is the Singularity that has been hijacked by irrational belief systems, rather than the other way around. However, I share your antipathy toward passive singularitarianism. If technology is a double-edged sword, then there is the possibility of technology going awry as it surges toward the Singularity, with profoundly disturbing consequences. We do need to keep our eye on the ethical ball.

I don’t agree that a cultural rebellion “seduced by religious and cultural urgings for ‘stability,’ ‘peace,’ and against ‘hubris’ and ‘the unknown’” is likely to derail technological acceleration. Epochal events such as two world wars, the Cold War, and numerous economic, cultural, and social upheavals have failed to make the slightest dent in the pace of the fundamental trends. As I discuss below, recessions, including the Great Depression, register as only slight deviations from the far more profound effect of the underlying exponential growth of the economy, fueled by the exponential growth of information-based technologies.

The primary reason that technology accelerates is that each new stage provides more powerful tools to create the next stage. The same was true of the biological evolution that created the technology-creating species in the first place. Indeed, each stage of evolution adds another level of indirection to its methods, and we can see technology itself as a level of indirection for the biological evolution that resulted in technological evolution.

To put this another way, the ethos of scientific and technological progress is so deeply ingrained in our civilization that halting this process is essentially impossible. Occasional ethical and legal impediments to fairly narrow developments are rather like stones in the river of advancement; progress simply flows around them.

You wrote that it appears that we will have sufficient computer power to emulate the human brain by 2030, but that this development will not necessarily “immediately lead to superhuman intelligence.” The other very important development is the accelerating process of reverse engineering (i.e., understanding the principles of operation of) the human brain. Without repeating my entire thesis here, this area of knowledge is also growing exponentially, and includes increasingly detailed mathematical models of specific neuron types, exponentially growing price-performance of human brain scanning, and increasingly accurate models of entire brain regions. Already, at least two dozen of the several hundred regions in the brain have been satisfactorily reverse engineered and implemented in synthetic substrates. I believe that it is a conservative scenario to expect that the human brain will be fully reverse engineered in a sufficiently detailed way to recreate its mental powers by 2029.

As you and I have discussed on various occasions, I’ve done a lot of thinking over the past few decades about the laws of technological evolution. As I mentioned above, technological evolution is a continuation of biological evolution. So the laws of technological evolution are compatible with the laws of evolution in general.

These laws imply at least a “surge” form of Singularity, as you describe it, during the first half of this century. This can be seen from the predictions for a wide variety of technological phenomena and indicators, including the power of computation, communication bandwidths, technology miniaturization, brain reverse engineering, the size of the economy, the rate of paradigm shift itself, and many others. These models apply to measures of both hardware and software.

Of course, if a mathematical line of inquiry yields counterintuitive results, then it makes sense to check the plausibility of these conclusions in another manner. However, in thinking through how the transformations of the Singularity will actually take place, through distinct periods of transhuman and posthuman development, the predictions of these formulae do make sense to me, in that one can describe each stage and how it is the likely effect of the stage preceding it.

To me, the concept of the Singularity as a “wall” implies a period of infinite change, that is, a mathematical Singularity. If there is a point in time in which change is infinite, then there is an inherent barrier in looking beyond this point in time. It becomes as impenetrable as the event horizon of a black hole in space, in which the density of matter and energy is infinite. The concept of the Singularity as a “surge,” on the other hand, is compatible with the idea of exponential growth. It is the nature of an exponential function that it starts out slowly, then grows quite explosively as one passes what I call the “knee of the curve.” From the surge perspective, the growth rate never becomes literally infinite, but it may appear that way from the limited perspective of someone who cannot follow such enormously rapid change. This perspective can be consistent with the idea that you mention of the prediction horizon “moving further out,” because one of the implications of the Singularity as a surge phenomenon is that humans will enhance themselves through intimate connection with technology thereby increasing our capacity to understand change. So changes that we could not follow today may very well be comprehensible when they occur. I think that it is fair to say that at any point in time the changes that occur will be comprehensible to someone, albeit that the someone may be a superintelligence on the cutting edge of the Singularity.

With the above as an introduction, I thought you would find of interest a dialog that Hans Moravec and I had on the question you raise of whether the Singularity is a “wall” (i.e., “prediction horizon”) or a “surge.” Some of these ideas are best modeled in the language of mathematics, but I will try to put the math in a box, so to speak, as it is not necessary to track through the formulas in order to understand the basic ideas.

First, let me describe the formulas that I showed to Hans.

The following analysis describes the basis of understanding evolutionary change as an exponentially growing phenomenon (a double exponential to be exact). I will describe here the growth of computational power, although the formulas are similar for other exponentially growing, information-based aspects of evolution, including our knowledge of human intelligence, which is a primary source of the software of intelligence.

We are concerned with three variables:

V: Velocity (i.e., power) of computation (measured in Calculations per Second per unit cost)

W: World Knowledge as it pertains to designing and building computational devices

t: Time

As a first order analysis, we observe that computer power is a linear function of the knowledge of how to build computational devices. We also note that knowledge is cumulative, and that the instantaneous increment to knowledge is proportional to computational power. These observations result in the conclusion that computational power grows exponentially over time.

As noted, our initial observations are:

(1) V = C1 * W

(2) W = C2 * Integral (0 to t) V

This gives us:

W = C1 * C2 * Integral (0 to t) W

W = C1 * C2 * C3 ^ (C4 * t)

V = C1 ^ 2 * C2 * C3 ^ (C4 * t)

(Note on notation: a^b means a raised to the b power.)

Simplifying the constants, we get:

V = Ca * Cb ^ (Cc * t)

So this is a formula for “accelerating” (i.e., exponentially growing) returns, a “regular Moore’s Law.”
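To see the feedback loop of equations (1) and (2) at work, the following minimal Python sketch integrates them numerically in differential form, dW/dt = C2 * V with V = C1 * W. It assumes a nonzero starting stock of knowledge W0 (the integral form above leaves the starting value implicit) and illustrative constants C1 = 1, C2 = 0.5; the function name is ours, not part of the original analysis.

```python
import math

def simulate_knowledge(c1, c2, w0, t_end, dt=1e-4):
    """Forward-Euler integration of dW/dt = C2 * V with V = C1 * W.

    Returns W sampled at t = 0, 1, ..., t_end (illustrative sketch).
    """
    w = w0
    samples = [w]
    for _ in range(t_end):
        for _ in range(round(1 / dt)):
            v = c1 * w          # equation (1): power is linear in knowledge
            w += c2 * v * dt    # equation (2) in differential form: knowledge accumulates
        samples.append(w)
    return samples

samples = simulate_knowledge(c1=1.0, c2=0.5, w0=1.0, t_end=10)
# Ratio of successive samples: growth by a constant factor per unit time.
ratios = [later / earlier for earlier, later in zip(samples, samples[1:])]
print([round(r, 4) for r in ratios])
print("exp(C1*C2) =", round(math.exp(1.0 * 0.5), 4))
```

Every ratio comes out (to within the integration error) equal to exp(C1 * C2), which is what a constant-rate exponential, the “regular Moore’s Law,” looks like.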

The data that I’ve gathered shows that there is exponential growth in the rate of exponential growth (we doubled computer power every three years early in the twentieth century, every two years in the middle of the century, and are doubling it every one year now).
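The three doubling times quoted above can be converted into annual growth factors with a one-line formula, 2^(1/doubling time). This short sketch uses only those three stated figures; it does not reproduce the underlying data set, and the function name is hypothetical.

```python
def annual_growth_factor(doubling_time_years):
    """Factor by which computer power multiplies in one year, given its doubling time."""
    return 2 ** (1 / doubling_time_years)

# Doubling times quoted in the text for three periods of the twentieth century.
eras = {"early 1900s": 3.0, "mid 1900s": 2.0, "circa 2000": 1.0}
for era, doubling in eras.items():
    factor = annual_growth_factor(doubling)
    print(f"{era}: x{factor:.3f} per year ({(factor - 1) * 100:.0f}% annual growth)")
```

The annual growth rate rises from roughly 26% to 41% to 100%, which is the sense in which the rate of exponential growth is itself growing.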

The exponentially growing power of technology results in exponential growth of the economy. This can be observed going back at least a century. Interestingly, recessions including the Great Depression can be modeled as a fairly weak cycle on top of the underlying exponential growth. In each case, the economy “snaps back” to where it would have been had the recession / Depression never existed in the first place. We can see even more rapid exponential growth in specific industries tied to the exponentially growing technologies such as the computer industry.

If we factor in another exponential phenomenon, the growing resources for computation, we can see the source of the second level of exponential growth. Not only is each (constant cost) device getting more powerful as a function of W, but the resources deployed for computation are also growing exponentially.

We now have:

N: Expenditures for computation

V = C1 * W (as before)

N = C4 ^ (C5 * t) (Expenditure for computation is growing at its own exponential rate)

W = C2 * Integral(0 to t) (N * V)

As before, world knowledge is accumulating, and the instantaneous increment is proportional to the amount of computation, which equals the resources deployed for computation (N) * the power of each (constant cost) device.

This gives us:

W = C1 * C2 * Integral(0 to t) (C4 ^ (C5 * t) * W)

W = C1 * C2 * (C3 ^ (C6 * t)) ^ (C7 * t)

V = C1 ^ 2 * C2 * (C3 ^ (C6 * t)) ^ (C7 * t)

Simplifying the constants, we get:

V = Ca * (Cb ^ (Cc * t)) ^ (Cd * t)

This is a double exponential — an exponential curve in which the rate of exponential growth is growing at a different exponential rate.
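A numerical check of this model may help. The sketch below writes the knowledge equation in differential form, dW/dt = C2 * N(t) * V with V = C1 * W and N = C4^(C5 * t), and compares a forward-Euler integration with the solution obtained by separating variables, ln(W/W0) = C1 * C2 * (C4^(C5 * t) - 1) / (C5 * ln C4). The constants and the starting value W0 are illustrative assumptions, and the closed form here is our own cross-check rather than the simplified-constants formula given above.

```python
import math

def simulate_knowledge(c1, c2, c4, c5, w0, t_end, dt=1e-4):
    """Forward-Euler integration of dW/dt = C2 * N(t) * V, with V = C1 * W and N = C4^(C5*t)."""
    w, t = w0, 0.0
    for _ in range(round(t_end / dt)):
        n = c4 ** (c5 * t)       # expenditure on computation, itself exponential
        v = c1 * w               # power of each constant-cost device
        w += c2 * n * v * dt     # knowledge grows with total computation N * V
        t += dt
    return w

def exact_knowledge(c1, c2, c4, c5, w0, t):
    """Separation of variables: ln(W/W0) = C1*C2*(C4^(C5*t) - 1) / (C5*ln C4)."""
    return w0 * math.exp(c1 * c2 * (c4 ** (c5 * t) - 1) / (c5 * math.log(c4)))

params = dict(c1=1.0, c2=0.5, c4=2.0, c5=1.0, w0=1.0)   # illustrative values only
results = []
for t in (1, 2, 3):
    # Instantaneous exponential growth rate d(ln W)/dt = C1*C2*N(t): it doubles
    # every 1/C5 time units here, so ln W itself grows exponentially.
    rate = params["c1"] * params["c2"] * params["c4"] ** (params["c5"] * t)
    results.append((t, simulate_knowledge(t_end=t, **params), exact_knowledge(t=t, **params), rate))
for t, numeric, exact, rate in results:
    print(f"t={t}: W numeric={numeric:.4g}, W exact={exact:.4g}, growth rate={rate}")
```

The growth rate column doubles at each step, which is the “exponential curve in which the rate of exponential growth is growing” described above.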

Now, let’s consider some real-world data. My estimate of brain capacity is 100 billion neurons times an average 1,000 connections per neuron (with the calculations taking place primarily in the connections) times 200 calculations per second. Although these estimates are conservatively high, one can find higher and lower estimates. However, even much higher (or lower) estimates by orders of magnitude only shift the prediction by a relatively small number of years.

Considering the data for actual calculating devices and computers during the twentieth century:

CPS/$1K: Calculations Per Second for $1,000

Twentieth century computing data matches:

CPS/$1K = 10^(6.00*((20.40/6.00)^((Year-1900)/100))-11.00)

We can determine the growth rate over a period of time:

Growth Rate = 10^((LOG(CPS/$1K for Current Year) - LOG(CPS/$1K for Previous Year))/(Current Year - Previous Year))
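The fitted curve and the growth-rate definition above translate directly into Python. This is a sketch: the constants 6.00, 20.40, and 11.00 are copied from the fit quoted above, while the function names are ours.

```python
import math

def cps_per_1k(year):
    """Twentieth-century fit: calculations per second per $1,000 in a given year."""
    return 10 ** (6.00 * ((20.40 / 6.00) ** ((year - 1900) / 100)) - 11.00)

def growth_rate(previous_year, current_year):
    """Average annual growth factor of CPS/$1K between two years (LOG is base 10)."""
    log_change = math.log10(cps_per_1k(current_year)) - math.log10(cps_per_1k(previous_year))
    return 10 ** (log_change / (current_year - previous_year))

for year in (1900, 1950, 2000):
    print(year, f"{cps_per_1k(year):.3g} cps/$1K", f"x{growth_rate(year, year + 1):.2f} per year")
```

Because the exponent of 10 is itself an exponential in the year, the annual growth factor printed for 2000 is larger than the one for 1950, which in turn exceeds the one for 1900.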

Human Brain = 100 Billion (10^11) neurons * 1000 (10^3) Connections/Neuron * 200 (2 * 10^2) Calculations Per Second Per Connection = 2 * 10^16 Calculations Per Second

Human Race = 10 Billion (10^10) Human Brains = 2 * 10^26 Calculations Per Second
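The brain and human-race estimates are simple products; the arithmetic can be checked with exact integers:

```python
NEURONS = 10**11                      # 100 billion neurons
CONNECTIONS_PER_NEURON = 10**3        # average connections per neuron
CALCS_PER_SEC_PER_CONNECTION = 200    # calculations per second per connection
HUMANS = 10**10                       # 10 billion human brains

human_brain_cps = NEURONS * CONNECTIONS_PER_NEURON * CALCS_PER_SEC_PER_CONNECTION
human_race_cps = HUMANS * human_brain_cps
print(f"Human Brain: {human_brain_cps:.0e} cps")
print(f"Human Race: {human_race_cps:.0e} cps")
```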

The above analysis provides the following conclusions:

We achieve one Human Brain capability (2 * 10^16 cps) for $1,000 around the year 2023.

We achieve one Human Brain capability (2 * 10^16 cps) for one cent around the year 2037.

We achieve one Human Race capability (2 * 10^26 cps) for $1,000 around the year 2049.

We achieve one Human Race capability (2 * 10^26 cps) for one cent around the year 2059.
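These dates can be reproduced by inverting the twentieth-century fit: solve for the year in which $1,000 (or, scaled by price, one cent) buys the target number of calculations per second. The sketch below also tests the claim that an estimate off by an order of magnitude shifts the prediction by only a few years. The function names are hypothetical, and the sketch inherits all the assumptions of the fit itself.

```python
import math

def cps_per_1k(year):
    """Twentieth-century fit for calculations per second per $1,000 (from the text)."""
    return 10 ** (6.00 * ((20.40 / 6.00) ** ((year - 1900) / 100)) - 11.00)

def year_reached(cps_target, dollars):
    """Invert the fit: (fractional) year in which `dollars` buys `cps_target`."""
    needed_per_1k = cps_target * (1000 / dollars)   # rescale the price to the $1,000 basis
    exponent = (math.log10(needed_per_1k) + 11.00) / 6.00
    return 1900 + 100 * math.log(exponent) / math.log(20.40 / 6.00)

HUMAN_BRAIN = 2e16   # cps
HUMAN_RACE = 2e26    # cps

for label, cps in (("Human Brain", HUMAN_BRAIN), ("Human Race", HUMAN_RACE)):
    for dollars in (1000, 0.01):
        print(f"{label} for ${dollars}: {year_reached(cps, dollars):.1f}")

# Sensitivity: a brain estimate ten times higher moves the $1,000 date by only a few years.
shift = year_reached(10 * HUMAN_BRAIN, 1000) - year_reached(HUMAN_BRAIN, 1000)
print(f"Shift for a 10x higher brain estimate: {shift:.1f} years")
```

Each fractional year falls within the calendar year listed above (for example, 2023.8 for one Human Brain per $1,000), and a tenfold change in the brain estimate moves that date by roughly three years.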

If we factor in the exponentially growing economy, particularly with regard to the resources available for computation (already about a trillion dollars per year), we can see that nonbiological intelligence will be many trillions of times more powerful than biological intelligence by approximately mid century.

Although the above analysis pertains to computational power, a comparable analysis can be made of brain reverse engineering, i.e., knowledge about the principles of operation of human intelligence. There are many different ways to measure this, including mathematical models of human neurons, the resolution, speed, and bandwidth of human brain scanning, and knowledge about the digital-controlled-analog, massively parallel, algorithms utilized in the human brain.

As I mentioned above, we have already succeeded in developing highly detailed models of several dozen of the several hundred regions of the brain, and implementing these models in software, with very successful results. I won’t describe all of this in our dialog here, but I will be reporting on brain reverse engineering in some detail in my next book, The Singularity Is Near. We can view this effort as analogous to the genome project. The effort to understand the information processes in our biological heritage has largely completed the stage of collecting the raw genomic data, is now rapidly gathering the proteomic data, and has made a good start at understanding the methods underlying this information. With regard to the even more ambitious project to understand our neural organization, we are now approximately where the genome project was about ten years ago, but are further along than most people realize. Keep in mind that the brain is the result of chaotic processes (which themselves use a controlled form of evolutionary pruning) described by a genome with very little data (only about 23 million bytes compressed). The analyses I will present in The Singularity Is Near demonstrate that it is quite conservative to expect that we will have a complete understanding of the human brain and its methods, and thereby the software of human intelligence, prior to 2030.

The above is my own analysis, at least in mathematical terms, and backed up by extensive real-world data, of the Singularity as a “surge” phenomenon. This, then, is the conservative view of the Singularity.

Hans Moravec points out that my assumption that computer power grows proportionally with knowledge (i.e., V = C1 * W) is overly pessimistic because an independent innovation (each of which is a linear increment to knowledge) increases the power of the technology in a multiplicative way, rather than an additive way.

In an email to me on February 15, 1999, Hans wrote:

“For instance, one (independent innovation) might be an algorithmic discovery (like log N sorting) that lets you get the same result with half the computation. Another might be a computer organization (like RISC) that lets you get twice the computation with the same number of gates. Another might be a circuit advance (like CMOS) that lets you get twice the gates in a given space. Others might be independent speed-increasing advances, like size-reducing copper interconnects and capacitance-reducing silicon-on-insulator channels. Each of those increments of knowledge more or less multiplies the effect of all of the others, and computation would grow exponentially in their number.”

So if we substitute, as Hans suggests, V = exp(W) rather than V = C1 * W, then the result is that both W and V become infinite at a finite time.

(Note the following omits the constants)

dW/dt = V giving dW/exp(W) = dt

This solves to W = log(-1/t) and V = -1/t

W and V rise very slowly when t<<0, might be mistaken for exponential around t = -1, and have a singularity at t = 0.
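The solution W = log(-1/t) can be confirmed numerically. This sketch integrates dW/dt = exp(W) (constants omitted, as in the text) with a classic fourth-order Runge-Kutta scheme, starting on the exact solution at t = -100 and checking it at points approaching the singularity at t = 0. The checkpoints and step counts are arbitrary choices.

```python
import math

def rk4(f, w, t0, t1, steps):
    """Classic fourth-order Runge-Kutta for an autonomous ODE dW/dt = f(W)."""
    h = (t1 - t0) / steps
    for _ in range(steps):
        k1 = f(w)
        k2 = f(w + h * k1 / 2)
        k3 = f(w + h * k2 / 2)
        k4 = f(w + h * k3)
        w += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return w

# dW/dt = V with V = exp(W), constants omitted as in the text.
checkpoints = [-100.0, -10.0, -1.0, -0.1, -0.01]
w = math.log(-1 / checkpoints[0])            # start on the exact solution W = log(-1/t)
results = []
for t0, t1 in zip(checkpoints, checkpoints[1:]):
    w = rk4(math.exp, w, t0, t1, steps=10_000)
    results.append((t1, w, math.log(-1 / t1)))
for t, numeric, exact in results:
    # V = exp(W) = -1/t grows without bound as t approaches 0 from below.
    print(f"t={t}: W numeric={numeric:.8f}, W exact={exact:.8f}, V={math.exp(numeric):.4g}")
```

W climbs only logarithmically while V = -1/t increases tenfold with each tenfold step toward t = 0, which is the finite-time blow-up described above.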

Hans and I then engaged in a dialog as to whether or not it is more accurate to say that computer power grows exponentially with knowledge (which is suggested by an analysis of independent innovations) (i.e., V = exp (W)), or grows linearly with knowledge (i.e., V = C1 * W) as I had originally suggested. We ended up agreeing that Hans’ original statement that V = exp (W) is too “optimistic,” and that my original statement that V = C1 * W is too “pessimistic.”

We then looked at what is the “weakest” assumption that one could make that nonetheless results in a mathematical singularity. Hans wrote:

“But, of course, a lot of new knowledge steps on the toes of other knowledge, by making it obsolete, or diluting its effect, so the simple independent model doesn’t work in general. Also, simply searching through an increasing amount of knowledge may take increasing amounts of computation. I played with the V=exp(W) assumption to weaken it, and observed that the singularity remains if you assume processing increases more slowly, for instance V = exp(sqrt(W)) or exp(W^(1/4)). Only when V = exp(log(W)) (i.e., = W) does the progress curve subside to an exponential.

Actually, the singularity appears somewhere in the I-would-have-expected tame region between V = W and V = W^2 (!)

Unfortunately the transitional territory between the merely exponential V=W and the singularity-causing V=W^2 is analytically hard to deal with. I assume just before a singularity appears, you get non-computably rapid growth!”
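One way to compare these growth laws side by side: for dW/dt = V(W), the time needed to carry W from 1 up to some large value is the integral of dW / V(W). If that time stays bounded as the target value grows, W reaches infinity in finite time, which is the singularity. The sketch below assumes the constant-free model dW/dt = V(W) starting from W = 1, and `time_to_reach` is a name introduced here. It evaluates the integral numerically with the trapezoid rule over s = ln W.

```python
import math

def time_to_reach(log_v, w_max, w0=1.0, n=20_000):
    """Time for dW/dt = V(W) to carry W from w0 to w_max, i.e. the integral of dW / V(W).

    log_v(s) must return ln V at W = e**s; working in logs avoids overflow for the
    exponential models. Integrates dW / V = exp(s - ln V) ds with the trapezoid rule.
    """
    a, b = math.log(w0), math.log(w_max)
    h = (b - a) / n
    g = lambda s: math.exp(s - log_v(s))
    total = 0.5 * (g(a) + g(b)) + sum(g(a + i * h) for i in range(1, n))
    return total * h

# ln V as a function of s = ln W for growth laws mentioned in the quote (plus W^1.1).
models = {
    "V = W":            lambda s: s,              # merely exponential: no finite limit
    "V = W^1.1":        lambda s: 1.1 * s,        # power law p > 1: limit 1/(p - 1) = 10
    "V = W^2":          lambda s: 2 * s,
    "V = exp(W^(1/4))": lambda s: math.exp(s / 4),
    "V = exp(sqrt(W))": lambda s: math.exp(s / 2),
    "V = exp(W)":       lambda s: math.exp(s),
}

for name, log_v in models.items():
    times = [time_to_reach(log_v, 10.0 ** k) for k in (6, 12, 24)]
    print(f"{name:18}", "  ".join(f"{t:8.4f}" for t in times))
```

In this toy model only V = W keeps needing more time for each further factor of a million in W; every other row settles toward a finite limit, so even exponents only slightly above 1 already produce a singularity.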

Interestingly, if we take my original assumption that computer power grows linearly with knowledge, but add that the resources for computation also grow in the same way, then the total amount of computational power grows as the square of knowledge, and again, we have a mathematical singularity.

(Note the following omits the constants)

V = W*W

also dW/dt = V+1 as before

This solves to W = tan(t) and V = tan(t)^2, which has a singularity at t = pi / 2.
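The separation-of-variables step behind this result can be written out as follows. The initial condition W(0) = 0 is an assumption made here; it is the choice that makes the integration constant vanish.

```latex
\frac{dW}{dt} = V + 1 = W^2 + 1
\;\Longrightarrow\;
\int \frac{dW}{1 + W^2} = \int dt
\;\Longrightarrow\;
\arctan W = t + C

W(0) = 0 \;\Rightarrow\; C = 0, \qquad
W = \tan t, \qquad
V = W^2 = \tan^2 t \;\to\; \infty \quad \text{as } t \to \tfrac{\pi}{2}^{-}
```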

The conclusions that I draw from these analyses are as follows. Even with the “conservative” assumptions, we find that nonbiological intelligence crosses the threshold of matching and then very quickly exceeding biological intelligence (both hardware and software) prior to 2030. We then note that nonbiological intelligence will be able to combine the powers of biological intelligence with the ways in which nonbiological intelligence already excels, in terms of accuracy, speed, and the ability to instantly share knowledge.

Subsequent to the achievement of strong AI, human civilization will go through a period of increasing intimacy between biological and nonbiological intelligence, but this transhumanist period will be relatively brief before it yields to a posthumanist period in which nonbiological intelligence vastly exceeds the powers of unenhanced human intelligence. This, at least, is the conservative view.

The more “optimistic” view is difficult for me to imagine, so I assume that the formulas stop just short of the point at which the result becomes noncomputably large (i.e., infinite). What is interesting in the dialog that I had with Hans above is how easily the formulas can produce a mathematical singularity. Thus the difference between the Singularity as “wall” and the Singularity as “surge” results from a rather subtle difference in our assumptions.

Max: Ray, I know I’m in good company when the “conservative” view means that we achieve superhuman intelligence and a posthuman transition by 2030! I suppose that puts me in the unaccustomed position of being the ultra-conservative. From the regular person’s point of view, the differences between our expectations will seem trivial. Yet I think these critical comparisons are valuable in deciding whether the remaining human future is 25 years or 50 years or more. Differences in these estimations can have profound effects on outlook and on which plans for the future are rational. One example would be whether it makes sense to save heavily to build compound returns or to spend almost everything until the double exponential really turns strongly toward a vertical ascent.

I find your trend analysis compelling and certainly the most comprehensive and persuasive ever developed. Yet I am not quite willing to yield fully to the mathematical inevitability of your argument. History since the Enlightenment makes me wary of all arguments to inevitability, at least when they point to a specific time. Clearly your arguments are vastly more detailed and well-grounded than those of the 18th century proponents of inevitable progress. But I suspect that a range of non-computational factors could dampen the growth curve. The double exponential curve may describe very well the development of new technologies (at least those driven primarily by computation), but not necessarily their full implementation and effects. Numerous world-changing technologies from steel mills to electricity to the telephone to the Internet have taken decades to move from introduction to widespread effects. We could point to the Web to argue that this lag between invention and full adoption is shrinking. I would agree for the most part, yet different examples may tell a different story. Fuel cells were invented decades ago but only now do they seem poised to make a major contribution to our energy supply.

Psychological and cultural factors act as future shock absorbers. I am not sure that models based on the evolution of information technology necessarily take these factors fully into account. In working with businesses to help them deal with change, I see over and over the struggle involved in altering organizational culture and business processes to take advantage of powerful software solutions from supply chain management to customer relationship management (CRM). CRM projects have a notoriously high failure rate, not because the software is faulty but because of poor planning and a failure to re-engineer business processes and employee incentives to fit. I expect we will eventually reach a point where cognitive processes and emotions can be fully understood and modulated and where we have a deep understanding of social processes. These will then cease to act as significant brakes to progress. But major advances in those areas seem likely to come close to the Singularity and so will act as drags until very close. It could be that your math models may overstate early progress toward the Singularity due to these factors. They may also understate the last stages of progress as practice catches up to technology with the liberation of the brain from its historical limitations.

Apart from these human factors, I am concerned that other trends may not present such an optimistic picture of accelerating progress. Computer programming languages and tools have improved over the last few decades, but it seems they improve slowly. Yes, at some point computers will take over most programming and perhaps greatly accelerate the development of programming tools. Or humans will receive hippocampus augmentations to expand working memory. My point is not that we will not reach the Singularity but that different aspects of technology and humanity will advance at different rates, with the slower holding back the faster.

I have no way of formally modeling these potential braking factors, which is why I refrain from offering specific forecasts for a Singularity. Perhaps they will delay the transhuman transition by only a couple of years, or perhaps by 20. I would agree that as information technology suffuses ever more of economy and society, its powerful engines of change will accelerate everything faster and faster as time goes by. Therefore, although I am not sure that your equations will always hold, I do expect actual events to converge on your models the closer we get to the Singularity.

I would like briefly to make two other comments on your reply. First, you suggest that “humankind’s innate inclination for salvation is not necessarily irrational. Perhaps we have this inclination precisely to anticipate the Singularity.” I am not sure how to take this suggestion. A natural reading suggests a teleological interpretation: humans have been given (genetically or culturally) this inclination. If so, who gave us this? Since I do not believe the evidence supports the idea that we are designed beings, I don’t think such a teleological view of our inclination for salvation is plausible. I would also say that I don’t regard this inclination as inherently irrational. The inclination may be a side-effect of the apparently universal human desire to understand and to solve problems. Those who feel helpless to solve certain kinds of problems often want to believe there is a higher power (tax accountant, car mechanic, government, or god) that can solve the problem. I would say such an inclination only becomes irrational when it takes the form of unfounded stories that are taken as literal, explanatory facts rather than symbolic expressions of deep yearnings. That aside, I am curious how you think that we come to have this inclination in order to anticipate the Singularity.

Second, you say that you “don’t agree that a cultural rebellion ‘seduced by religious and cultural urgings for “stability,” “peace,” and against “hubris” and “the unknown”’ are likely to derail technological acceleration.” We really don’t disagree here. If you look again at what I wrote, you can see that I do not think this derailing is likely. More exactly, while I think such derailments are highly likely locally (look at the Middle East, for example), they would have a hard time in today’s world universally stopping or appreciably slowing technological progress. My concern was to challenge the idea that progress is inevitable rather than simply highly likely. This point may seem unimportant if we adopt a position based on overall trends. But it will certainly matter to those left behind, temporarily or permanently, in various parts of the world: The Muslim woman dying in childbirth as her culture refuses her medical attention; a dissident executed for speaking out against the state; or a patient who dies of nerve degeneration or who loses their personality due to amentia because religious conservatives have halted progress in stem cell research. The derailing of progress is likely to be temporary and local, but no less real and potentially deadly for many. A more widespread and enduring throwback, perhaps due to a massively infectious and deadly terrorist attack, surely cannot be ruled out. Recent events have reminded us that the future needs security as well as research.

Normally I do the job of arguing that technological change will be faster than expected. Taking the other side in this dialog has been a stimulating change of pace. While I expect those major events we call the Singularity to come just a little later than you calculate, I strongly hope that I am mistaken and that you are correct. The sooner we master these technologies, the sooner we will conquer aging and death and all the evils that humankind has been heir to.

Ray: It’s tempting indeed to continue this dialog indefinitely, or at least until the Singularity comes around. I am sure that we will do exactly that in a variety of forums. A few comments for the moment, however:

You cite the difference in our future perspective (regarding the time left until the Singularity) as being about 20 years. Of course, from the perspective of human history, let alone evolutionary history, that’s not a very big difference. It’s not clear that we differ by even that much. I’ve projected the date 2029 for a nonbiological intelligence to pass the Turing test. (As an aside, I just engaged in a “long term wager” with Mitchell Kapor to be administered by the “Long Now Foundation” on just this point.) However, the threshold of a machine passing a valid Turing test, although unquestionably a singular milestone, does not represent the Singularity. This event will not immediately alter human identity in such a profound way as to represent the tear in the fabric of history that the term Singularity implies. It will take a while longer for all of these intertwined trends (biotechnology, nanotechnology, computing, communications, miniaturization, brain reverse engineering, virtual reality, and others) to fully mature. I estimate the Singularity at around 2045. You estimated the “posthuman Singularity as [occurring]. . . . with a best guess somewhere around the middle of the century.” So, perhaps, our expectations are close to being about five years apart. Five years will in fact be rather significant in 2045, but even with our mutual high levels of impatience, I believe we will be able to wait that much longer.

I do want to comment on your term “the remaining human future” (being “25 years or 50 years or more”). I would rather consider the post-Singularity period to be one that is “post biological” rather than “post human.” In my view, the other side of the Singularity may properly be considered still human and still infused with (our better) human values. At least that is what we need to strive for. The intelligence we are creating will be derived from human intelligence, i.e., derived from human designs, and from the reverse engineering of human intelligence.

As the beautiful images and descriptions that (your wife) Natasha Vita-More and her collaborators put together (“Radical Body Design ‘Primo 3M+’”) demonstrate, we will gladly move beyond the limitations, not to mention the pain and discomfort, of our biological bodies and brains. As William Butler Yeats wrote, an aging man’s biological body is “but a paltry thing, a tattered coat upon a stick.” Interestingly, Yeats concludes: “Once out of nature I shall never take / My bodily form from any natural thing, / But such a form as Grecian goldsmiths make / Of hammered gold and gold enamelling.” I suppose that Yeats never read Feynman’s treatise on nanotechnology or he would have mentioned carbon nanotubes.

I am concerned that if we refer to a “remaining human future,” this terminology may encourage the perspective that something profound is being lost. I believe that a lot of the opposition to these emerging technologies stems from this uninformed view. I think we are in agreement that nothing of true value needs to be lost.

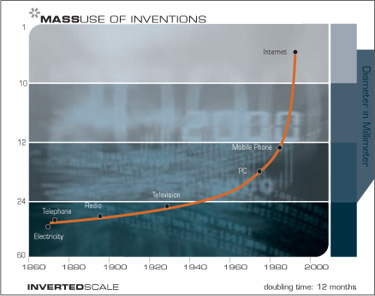

You write that “Numerous world-changing technologies. . . . have taken decades to move from introduction to widespread effects.” But keep in mind that, in accordance with the law of exponential returns, technology adoption rates are accelerating along with everything else. The following chart shows the adoption time of various technologies, measured from invention to adoption by a quarter of the U.S. population:

With regard to technologies that are not information based, the exponent of exponential growth is definitely lower than for computation and communications, but nonetheless positive, and as you point out, fuel cell technologies are poised for rapid growth. As one example of many, I’m involved with one company that has applied MEMS technology to fuel cells. Ultimately we will also see revolutionary changes in transportation from nanotechnology combined with new energy technologies (think microwings).

A common challenge to the feasibility of strong AI, and therefore to the Singularity, is to distinguish between quantitative and qualitative trends. This challenge says, in essence, that perhaps certain brute force capabilities such as memory capacity, processor speed, and communications bandwidths are expanding exponentially, but the qualitative aspects are not.

This is the hardware versus software challenge, and it is an important one. With regard to the price-performance of software, the comparisons in virtually every area are dramatic. Consider speech recognition software as one example of many. In 1985, $5,000 bought you a speech recognition software package that provided a 1,000-word vocabulary, did not provide continuous speech capability, required three hours of training, and had relatively poor accuracy. Today, for only $50, you can purchase a speech recognition software package with a 100,000-word vocabulary that does provide continuous speech capability, requires only five minutes of training, has dramatically improved accuracy, provides natural language understanding ability (for editing commands and other purposes), and offers many other features.
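To put rough numbers on this comparison, the sketch below uses only the figures quoted above. Two assumptions are made here and are not from the text: “today” is taken as 2002 (the publication year), and vocabulary size per dollar is used as a crude price-performance proxy. The metric ignores accuracy, continuous speech and language-understanding features entirely.

```python
import math

# Figures quoted in the text; treating "today" as 2002 is an assumption.
packages = {
    1985: {"price_usd": 5_000, "vocab_words": 1_000, "training_min": 3 * 60},
    2002: {"price_usd": 50, "vocab_words": 100_000, "training_min": 5},
}

def words_per_dollar(pkg):
    """Hypothetical price-performance proxy: vocabulary size per dollar."""
    return pkg["vocab_words"] / pkg["price_usd"]

old, new = packages[1985], packages[2002]
gain = words_per_dollar(new) / words_per_dollar(old)
training_speedup = old["training_min"] / new["training_min"]
years = 2002 - 1985
# Doubling time that would produce this gain if growth had been steadily exponential.
implied_doubling_years = years / math.log2(gain)

print(f"vocabulary per dollar: {gain:,.0f}x")
print(f"training time: {training_speedup:.0f}x shorter")
print(f"implied doubling time: {implied_doubling_years:.2f} years")
```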

What about software development itself? I’ve been developing software myself for forty years, so I have some perspective on this. It’s clear that the growth in productivity of software development has a lower exponent, but it is nonetheless growing exponentially. The development tools, class libraries, and support systems available today are dramatically more effective than those of decades ago. Today I have small teams of just three or four people who achieve objectives in a few months that are comparable to what a team of a dozen or more people could accomplish in a year or more 25 years ago. I estimate the doubling time of software productivity to be approximately six years, which is slower than the doubling time for processor price-performance, which is approximately one year today. However, software productivity is nonetheless growing exponentially.
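The six-year doubling estimate and the team-size anecdote can be cross-checked with simple arithmetic. In this sketch the person-month midpoints (12 people for “a dozen or more,” 12 months for “a year or more,” 3.5 people for “three or four,” 3 months for “a few months”) are assumptions chosen for illustration, and `growth_factor` is a helper introduced here.

```python
def growth_factor(years, doubling_time_years):
    """Multiplicative gain after `years` of steady exponential growth."""
    return 2 ** (years / doubling_time_years)

# Doubling times from the text: ~6 years (software productivity), ~1 year (processor price-performance).
software_25y = growth_factor(25, 6)
processor_per_software_doubling = growth_factor(6, 1)

# Team anecdote in person-months; the midpoints below are assumptions.
then_person_months = 12 * 12
now_person_months = 3.5 * 3
anecdote_ratio = then_person_months / now_person_months

print(f"6-year doubling over 25 years: {software_25y:.1f}x")
print(f"team anecdote: {anecdote_ratio:.1f}x fewer person-months")
print(f"processor gain per software doubling: {processor_per_software_doubling:.0f}x")
```

Under these assumptions the two software estimates land within a factor of about 1.3 of each other, while processor price-performance gains roughly 64-fold over a single software-productivity doubling.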

The most important point to be made here is that we have a specific game plan (i.e., brain reverse engineering) for achieving the software of human-level intelligence in a machine. It’s actually not my view that brain reverse engineering is the only way to achieve strong AI, but this scenario does provide an effective existence proof of a viable path to get there.

If you speak to some of the (thousands of) neurobiologists who are diligently creating detailed mathematical models of the hundreds of types of neurons found in the brain, or who are modeling the patterns of connections found in different regions, you will often encounter the common engineer’s / scientist’s myopia that results from being immersed in the specifics of one aspect of a large challenge. I’ll discuss the challenge here in some detail in the book I’m now working on, but I believe it is a conservative projection to expect that we will have detailed models of the several hundred regions of the brain within about 25 years (we already have impressively detailed models and simulations for a couple dozen such regions). As I alluded to above, only about half of the genome’s 23 million bytes of useful information (i.e., what’s left of the 800 million byte genome after compression) specifies the brain’s initial conditions.
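The genome figures can be reproduced with back-of-the-envelope arithmetic. The sketch assumes a genome length of about 3.2 billion base pairs, an assumption consistent with the 800 million byte figure but not stated in the text, and 2 bits per base (four possible nucleotides). The 23 million byte compressed figure and the “about half” share come from the text.

```python
BASE_PAIRS = 3_200_000_000   # approximate human genome length (assumption for this sketch)
BITS_PER_BASE = 2            # four nucleotides -> 2 bits each

raw_bytes = BASE_PAIRS * BITS_PER_BASE // 8   # uncompressed size
compressed_bytes = 23_000_000                 # "useful information" figure from the text
brain_bytes = compressed_bytes // 2           # "about half" specifies the brain

print(f"raw genome: {raw_bytes / 1e6:.0f} million bytes")
print(f"compression ratio: {raw_bytes / compressed_bytes:.0f}:1")
print(f"brain-related design information: about {brain_bytes / 1e6:.1f} million bytes")
```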

The other “non-computational factors [that] could dampen the growth curve” that you cite are “psychological and cultural factors [acting] as future shock absorbers.” You describe organizations you’ve worked with in which the “culture and business processes” resist change for a variety of reasons. It is clearly the case that many organizations are unable to master change, but ultimately such organizations will not be the ones to thrive.

You write that “different aspects of technology and humanity will advance at different rates, with the slower holding back the faster.” I agree with the first part, but not the second. There’s no question but that different parts of society evolve at different rates. We still have people pushing plows with oxen, but the continued existence of preindustrial societies has not appreciably slowed Intel and other companies in advancing microprocessor design.

In my view, the rigid cultural and religious factors that you eloquently describe end up being like stones in a stream. The water just flows around them. A good case in point is the current stem cell controversy. Although I believe that banning therapeutic cloning represents an ignorant and destructive position, it has had the effect of accelerating workable approaches to converting one type of cell into another. Every cell has the complete genetic code, and we are beginning to understand the protein signaling factors that control differentiation. The holy grail of tissue engineering will be to directly convert one cell into another by manipulating these signaling factors and thereby bypassing fetal tissue and egg cells altogether. We’re not that far from being able to do this, and the current controversy has actually spurred these efforts. Ultimately, these will be superior approaches anyway because egg cells are hard to come by.

I think our differences here are rather subtle, and I agree strongly with your insight that “the derailing of progress is likely to be temporary and local, but no less real and potentially deadly for many.”

On a different note, you ask who gave us this “innate inclination for salvation.” I agree that we’re evolved rather than explicitly designed beings, so we can view this inclination to express “deep yearnings” as representative of our position as the cutting edge of evolution. We are that part of evolution that will lead the Universe to convert its endless masses of dumb matter into sublimely intelligent patterns of mass and energy. So we can view this inclination for transcendence in its evolutionary perspective as a useful survival tool in our ecological niche, a special niche for a species that is capable of modeling and extending its own capabilities.

Finally, I have to strongly endorse your conclusion that “the sooner we master these technologies, the sooner we will conquer aging and death and all the evils that humankind has been heir to.”

Max: Ray, I want to thank you for inviting me into this engaging dialog. Such a vigorous yet well-mannered debate has, I think, helped both of us to detail our views further. Our shared premises and modestly differing conclusions have allowed us to tease out some implicit assumptions. As you concluded, our views amount to a rather small difference considered from the point of view of most people. The size of our divergence in estimations of time until that set of events we call the Singularity will seem large only once we are close to it. However, before then I expect our views to continue converging. What I find especially interesting is how we have reached very similar conclusions from quite different backgrounds. That someone with a formal background originally in economics and philosophy converges in thought with someone with a strong background in science and technology encourages me to favor E. O. Wilson’s view of consilience. I look forward to continuing this dialog with you. It has been a pleasure and an honor.

Ray: Thanks, Max, for sharing your compelling thoughts. The feelings of satisfaction are mutual, and I look forward to continued convergence and consilience.

1 http://www-rohan.sdsu.edu/faculty/vinge/misc/singularity.html