Measuring the human pulse from tiny head movements to help diagnose cardiac disease

June 24, 2013

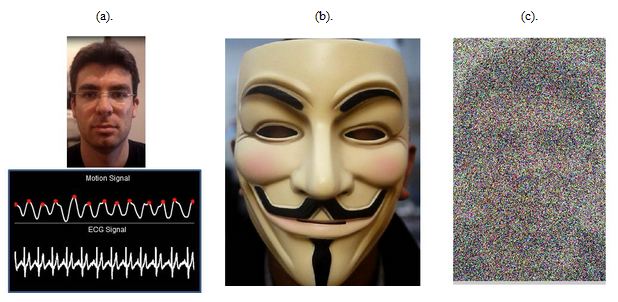

Examples of videos from which MIT researchers were able to extract a pulse signal. (a) a typical view of the face, with an example of the motion signal compared with the signal from an ECG device. (b) a subject wearing a mask. (c) a video of a subject with a significant amount of added white Gaussian noise. (Credit: G. Balakrishnan et al.)

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory have developed a new algorithm that can accurately measure the heart rates of people depicted in ordinary digital video by analyzing imperceptibly small head movements that accompany the rush of blood caused by the heart’s contractions.

In tests, the algorithm gave pulse measurements that were consistently within a few beats per minute of those produced by electrocardiograms (EKGs). It was also able to provide useful estimates of the time intervals between beats, a measurement that can be used to identify patients at risk for cardiac events.
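As a rough illustration of how beat-to-beat intervals relate to a heart rate, the sketch below takes a list of beat times in seconds, computes the gaps between consecutive beats, and converts their mean into beats per minute. The beat times are made-up values and the function names are hypothetical; the article does not describe how the researchers located individual beats.

```python
def inter_beat_intervals(beat_times_s):
    """Return the gaps, in seconds, between consecutive beat times."""
    return [later - earlier for earlier, later in zip(beat_times_s, beat_times_s[1:])]


def mean_heart_rate_bpm(beat_times_s):
    """Convert the mean inter-beat interval into beats per minute."""
    intervals = inter_beat_intervals(beat_times_s)
    if not intervals:
        raise ValueError("need at least two beat times")
    mean_interval = sum(intervals) / len(intervals)
    return 60.0 / mean_interval


# Hypothetical beat times in seconds (illustrative, not measured data).
beats = [0.00, 0.82, 1.61, 2.45, 3.25]
print([round(dt, 2) for dt in inter_beat_intervals(beats)])  # → [0.82, 0.79, 0.84, 0.8]
print(round(mean_heart_rate_bpm(beats), 1))  # → 73.8
```

A single average hides the variation between beats; it is the list of individual intervals that carries the timing information used to flag at-risk patients.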

A video-based pulse-measurement system could be useful for monitoring newborns or the elderly, whose sensitive skin could be damaged by frequent attachment and removal of EKG leads.

“From a medical perspective, I think that the long-term utility is going to be in applications such as looking for bilateral asymmetries and measuring cardiac output, which is used in the diagnosis of several types of heart disease,” says John Guttag, the Dugald C. Jackson Professor of Electrical Engineering and Computer Science, director of MIT’s Data-Driven Medicine Group, and professor of computer science and engineering.

How it works

Using the direction and magnitude of feature-point movement to extract the pulse signal (credit: G. Balakrishnan et al.)

The algorithm uses standard face detection to distinguish the subject’s head from the rest of the image. It then randomly selects 500 to 1,000 distinct points, clustered around the subject’s mouth and nose, and tracks their movement from frame to frame.
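The point-selection and tracking step can be sketched as follows, assuming NumPy, a face bounding box already supplied by some face detector, and point positions already produced by a frame-to-frame tracker (for example, an optical-flow tracker). The sketch only samples points and turns tracked positions into per-point motion signals. The nose-and-mouth sub-region, keeping only the vertical component, and the function names are illustrative assumptions, not details from the article.

```python
import numpy as np


def sample_face_points(face_box, n_points, rng):
    """Randomly sample (x, y) pixel coordinates inside part of a face box.

    face_box is (x, y, width, height) as returned by some face detector.
    The sub-region used here (middle half horizontally, 45%-95% of the
    height) is a hypothetical choice meant to cover the nose and mouth.
    """
    x, y, w, h = face_box
    xs = rng.uniform(x + 0.25 * w, x + 0.75 * w, n_points)
    ys = rng.uniform(y + 0.45 * h, y + 0.95 * h, n_points)
    return np.column_stack([xs, ys])


def vertical_trajectories(tracked_positions):
    """Turn tracked positions into per-point vertical displacement signals.

    tracked_positions has shape (n_frames, n_points, 2) holding (x, y) per
    frame, as a point tracker would produce. Returns (n_frames, n_points):
    each column is one point's vertical offset from its first-frame position.
    """
    p = np.asarray(tracked_positions, dtype=float)
    return p[:, :, 1] - p[0, :, 1]


# Synthetic stand-in for tracker output: 10 s of 30 fps video in which
# every sampled point bobs 0.2 px vertically at 1.2 Hz.
rng = np.random.default_rng(0)
points = sample_face_points((100, 80, 200, 240), 500, rng)
fps = 30.0
t = np.arange(300) / fps
tracked = np.repeat(points[None, :, :], t.size, axis=0)
tracked[:, :, 1] += 0.2 * np.sin(2 * np.pi * 1.2 * t)[:, None]
traj = vertical_trajectories(tracked)
print(traj.shape)  # → (300, 500)
```

Each column of `traj` is then one candidate motion signal for the filtering and decomposition steps that follow.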

Next, it filters out any frame-to-frame movements whose temporal frequency falls outside the range of a normal heartbeat — roughly 0.5 to 5 hertz, or 30 to 300 cycles per minute.
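The frequency window is easy to check: multiplying hertz (cycles per second) by 60 gives cycles per minute, so 0.5 Hz and 5 Hz correspond to 30 and 300 beats per minute. The sketch below, assuming NumPy, pairs that conversion with a simple band-pass filter applied to motion signals. The article does not say which filter the researchers used; zeroing FFT bins outside the band is a deliberately simple stand-in, and the function names are hypothetical.

```python
import numpy as np


def hz_to_bpm(freq_hz):
    """One hertz is one cycle per second, i.e. 60 cycles (beats) per minute."""
    return 60.0 * freq_hz


def fft_bandpass(signals, fps, low_hz=0.5, high_hz=5.0):
    """Keep only frequency content between low_hz and high_hz (inclusive).

    signals: shape (n_frames,) or (n_frames, n_points); filtered along axis 0.
    This is an "ideal" band-pass: FFT, zero the out-of-band bins, inverse FFT.
    """
    x = np.asarray(signals, dtype=float)
    n = x.shape[0]
    spectrum = np.fft.rfft(x, axis=0)
    freqs = np.fft.rfftfreq(n, d=1.0 / fps)
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    spectrum *= keep.reshape((-1,) + (1,) * (x.ndim - 1))
    return np.fft.irfft(spectrum, n=n, axis=0)


print(hz_to_bpm(0.5), hz_to_bpm(5.0))  # → 30.0 300.0

# Synthetic 10 s trace at 30 fps: slow 0.1 Hz drift + 1.2 Hz "pulse" + 8 Hz jitter.
fps = 30.0
t = np.arange(300) / fps
drift = 2.0 * np.sin(2 * np.pi * 0.1 * t)
pulse = 0.3 * np.sin(2 * np.pi * 1.2 * t)
jitter = 0.5 * np.sin(2 * np.pi * 8.0 * t)
filtered = fft_bandpass(drift + pulse + jitter, fps)
print(np.allclose(filtered, pulse))  # → True
```

An FFT mask like this is easy to read but can ring on real, non-periodic recordings; a smoother design such as a Butterworth filter (for example, from SciPy's `scipy.signal` module) is a common alternative.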

Using a technique called principal component analysis (PCA), the algorithm then decomposes the filtered signal into several constituent signals, each representing an aspect of the remaining movement that is uncorrelated with the others.
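A minimal PCA sketch of this step, assuming NumPy and treating each video frame as one observation of all the tracked points' filtered positions. It computes PCA through a singular value decomposition, one standard way to do it; the function name and the default of five components are hypothetical choices, not values taken from the article.

```python
import numpy as np


def principal_components(trajectories, n_components=5):
    """Decompose point trajectories into mutually uncorrelated signals via PCA.

    trajectories: shape (n_frames, n_points); each column is one point's
    (already filtered) motion over time. Returns (signals, variances) where
    signals[:, k] is the projection of every frame onto the k-th principal
    axis and variances[k] is the variance it explains, largest first.
    """
    X = np.asarray(trajectories, dtype=float)
    X = X - X.mean(axis=0)  # center each point's trajectory
    # Thin SVD: X = U @ diag(S) @ Vt; rows of Vt are the principal axes.
    U, S, _ = np.linalg.svd(X, full_matrices=False)
    k = min(n_components, S.size)
    signals = U[:, :k] * S[:k]  # equals X @ Vt[:k].T
    variances = S[:k] ** 2 / (X.shape[0] - 1)
    return signals, variances


# Synthetic example: 200 points mix a 1.2 Hz "pulse" and 0.7 Hz "sway" in
# random proportions, plus a little noise (illustrative data only).
rng = np.random.default_rng(1)
t = np.arange(300) / 30.0
pulse = np.sin(2 * np.pi * 1.2 * t)
sway = np.sin(2 * np.pi * 0.7 * t + 0.3)
X = (np.outer(pulse, rng.uniform(0.5, 1.0, 200))
     + np.outer(sway, rng.uniform(-1.0, 1.0, 200))
     + 0.05 * rng.standard_normal((300, 200)))
signals, variances = principal_components(X, n_components=3)
print(signals.shape)  # → (300, 3)
```

Because each trajectory is centered and the projections onto different principal axes are orthogonal, the returned signals are uncorrelated by construction.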

Of those signals, it selects the one that appears most regular (most periodic) and that falls within the typical frequency band of the human pulse.
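The selection step can be sketched as scoring each candidate signal for periodicity and keeping the best-scoring one, assuming NumPy. The score used here (the share of total spectral power at the strongest in-band frequency plus its first harmonic) is one reasonable definition of "most regular" and is an assumption, since the article does not spell out the measure; the function names are hypothetical. The pulse rate then follows from the dominant frequency via the multiply-by-60 conversion.

```python
import numpy as np


def periodicity(signal, fps, low_hz=0.5, high_hz=5.0):
    """Score how periodic a signal is and report its dominant frequency.

    Score = share of total spectral power at the strongest in-band frequency
    plus its first harmonic (twice that frequency). Returns (score, peak_hz).
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fps)
    in_band = np.flatnonzero((freqs >= low_hz) & (freqs <= high_hz))
    if in_band.size == 0 or power.sum() == 0:
        return 0.0, 0.0
    peak = in_band[np.argmax(power[in_band])]
    harmonic = 2 * peak
    peak_power = power[peak] + (power[harmonic] if harmonic < power.size else 0.0)
    return float(peak_power / power.sum()), float(freqs[peak])


def pick_pulse_signal(signals, fps):
    """Return (column index, pulse rate in beats per minute) of the most periodic column."""
    scored = [periodicity(signals[:, j], fps) for j in range(signals.shape[1])]
    best = max(range(len(scored)), key=lambda j: scored[j][0])
    return best, 60.0 * scored[best][1]


# Synthetic candidates (10 s at 30 fps): noise, a clean 1.2 Hz rhythm, and a
# two-tone mix. Illustrative data only.
rng = np.random.default_rng(2)
fps = 30.0
t = np.arange(300) / fps
candidates = np.column_stack([
    rng.standard_normal(t.size),
    np.sin(2 * np.pi * 1.2 * t) + 0.1 * rng.standard_normal(t.size),
    np.sin(2 * np.pi * 0.8 * t) + np.sin(2 * np.pi * 2.3 * t),
])
best, bpm = pick_pulse_signal(candidates, fps)
print(best, round(bpm, 1))  # → 1 72.0
```

The two-tone column illustrates why regularity matters: it falls inside the pulse band, but its power is split between two unrelated frequencies, so it scores well below the clean rhythm.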