New algorithm will allow for simulating neural connections of entire brain on future exascale supercomputers

March 21, 2018


An international team of scientists has developed an algorithm that represents a major step toward simulating neural connections in the entire human brain.

The new algorithm, described in an open-access paper published in Frontiers in Neuroinformatics, is intended to allow simulation of the human brain’s 100 billion interconnected neurons on supercomputers. The work involves researchers at the Jülich Research Centre, Norwegian University of Life Sciences, RWTH Aachen University, RIKEN, and KTH Royal Institute of Technology.

An open-source neural simulation tool. The algorithm was developed using NEST* (“neural simulation tool”) — open-source simulation software in widespread use by the neuroscientific community and a core simulator of the European Human Brain Project. With NEST, the behavior of each neuron in the network is represented by a small number of mathematical equations, the researchers explain in an announcement.

Since 2014, large-scale simulations of neural networks using NEST have been running on the petascale** K supercomputer at RIKEN and the JUQUEEN supercomputer at the Jülich Supercomputing Centre in Germany to simulate the connections of about one percent of the neurons in the human brain, according to Markus Diesmann, PhD, Director at the Jülich Institute of Neuroscience and Medicine. Those simulations have used a previous version of the NEST algorithm.

Why supercomputers can’t model the entire brain (yet). “Before a neuronal network simulation can take place, neurons and their connections need to be created virtually,” explains senior author Susanne Kunkel of KTH Royal Institute of Technology in Stockholm.

During the simulation, each neuron’s action potentials (short electric pulses) first need to be sent to all of the roughly 100,000 small computers, called nodes, each equipped with a number of processors that do the actual calculations. Each node then checks which of these pulses are relevant for the virtual neurons that exist on that node.
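The broadcast-then-filter step can be modeled in a few lines. This is a toy, single-process sketch, not NEST code: the function names and the 16-neuron network are hypothetical, and it assumes each node marks the source neurons it cares about with one bit per neuron of the whole network.

```python
from typing import Dict, Iterable, List

def make_bitmap(n_neurons: int, sources: Iterable[int]) -> bytearray:
    """One bit for every neuron in the whole network; set bits mark relevant sources."""
    bits = bytearray((n_neurons + 7) // 8)
    for s in sources:
        bits[s // 8] |= 1 << (s % 8)
    return bits

def is_set(bits: bytearray, neuron: int) -> bool:
    """True if this node's bitmap marks the given source neuron as relevant."""
    return bool(bits[neuron // 8] & (1 << (neuron % 8)))

def broadcast_and_filter(spikes: List[int], bitmaps: Dict[int, bytearray]) -> Dict[int, List[int]]:
    """Every node receives every spike, then keeps only the ones its bitmap marks."""
    return {node: [s for s in spikes if is_set(bits, s)] for node, bits in bitmaps.items()}

# Hypothetical 16-neuron network split over two nodes.
N = 16
bitmaps = {0: make_bitmap(N, {1, 5}), 1: make_bitmap(N, {5, 12})}
print(broadcast_and_filter([1, 5, 7, 12], bitmaps))  # {0: [1, 5], 1: [5, 12]}
print(len(bitmaps[0]), "bytes per node, however few sources are relevant")
```

Each node's bitmap has one bit for every neuron in the network, even when only a handful of bits are set, and every node still receives the full spike list.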

This filtering requires one bit of information per processor for every neuron in the whole network. For a network of one billion neurons, a large part of the memory in each node is consumed by this single bit per neuron, and the memory each processor needs for these bits grows with the size of the network. Going beyond 1 percent to simulate the entire human brain would require each processor to have 100 times more memory than in today’s supercomputers.
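A back-of-the-envelope calculation shows why this bookkeeping stops scaling. The sketch assumes exactly one bit per neuron on every processor and ignores all other memory, so the numbers are illustrative rather than figures from the paper.

```python
BITS_PER_BYTE = 8

def bitmap_bytes_per_processor(n_neurons: int) -> int:
    """Bytes each processor spends on a 1-bit-per-neuron table (rounded up)."""
    return (n_neurons + BITS_PER_BYTE - 1) // BITS_PER_BYTE

one_billion = 1_000_000_000
whole_brain = 100_000_000_000  # ~100 billion neurons, as in the text

small = bitmap_bytes_per_processor(one_billion)
large = bitmap_bytes_per_processor(whole_brain)

print(f"1 billion neurons:   {small / 1e6:.0f} MB per processor")
print(f"100 billion neurons: {large / 1e9:.1f} GB per processor")
print(f"growth factor: {large // small}x")
```

The cost is per processor and grows linearly with the network size, so adding more processors does not help. This matches the 100-fold memory increase mentioned above.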

In future exascale** computers, such as the post-K computer planned in Kobe and JUWELS at Jülich*** in Germany, the number of processors per compute node will increase, but the memory per processor and the number of compute nodes will stay the same.

Achieving whole-brain simulation on future exascale supercomputers. That’s where the next-generation NEST algorithm comes in. At the beginning of the simulation, the new NEST algorithm will allow the nodes to exchange information about what data on neuronal activity needs to be sent, and to where. Once this knowledge is available, the exchange of data between nodes can be organized so that a given node receives only the information it actually requires. That will eliminate the need for the additional bit for each neuron in the network.
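The idea of a one-time setup exchange followed by targeted sends can be sketched as follows. This is a toy illustration under stated assumptions, not NEST's actual data structures: the function names and the example network are hypothetical, and a real simulator would perform both phases over MPI.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

def build_routing_table(connections: List[Tuple[int, int]]) -> Dict[int, Set[int]]:
    """Setup phase: for each source neuron, record the nodes hosting its targets."""
    table: Dict[int, Set[int]] = defaultdict(set)
    for source, target_node in connections:
        table[source].add(target_node)
    return dict(table)

def route_spikes(spikes: List[int], table: Dict[int, Set[int]]) -> Dict[int, List[int]]:
    """Simulation phase: deliver each spike only to nodes that registered for it."""
    inbox: Dict[int, List[int]] = defaultdict(list)
    for s in spikes:
        for node in sorted(table.get(s, set())):
            inbox[node].append(s)
    return dict(inbox)

# Hypothetical network: (source neuron, node hosting one of its targets).
connections = [(1, 0), (5, 0), (5, 1), (12, 1)]
table = build_routing_table(connections)
print(route_spikes([1, 5, 7, 12], table))  # {0: [1, 5], 1: [5, 12]}
```

Neuron 7 has no registered targets, so its spike goes nowhere. In a distributed setting, each node would keep only the table rows for its own source neurons. Memory then scales with the connections a node actually has rather than with the total number of neurons.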

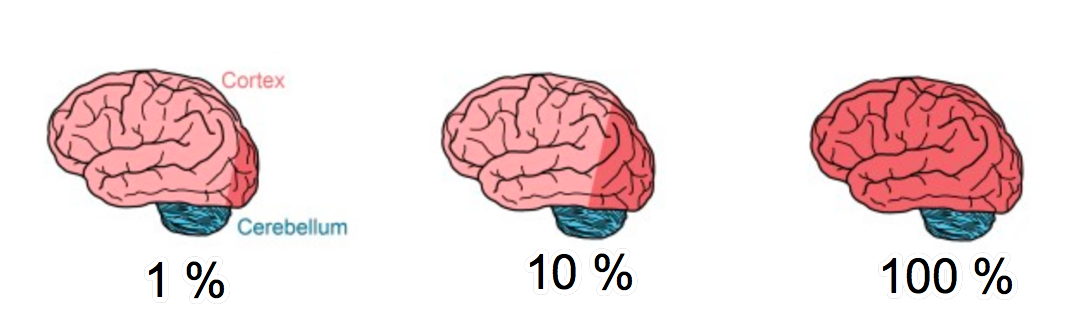

Brain-simulation software, running on a current petascale supercomputer, can only represent about 1 percent of neuron connections in the cortex of a human brain (dark red area of brain on left). Only about 10 percent of neuron connections (center) would be possible on the next generation of exascale supercomputers, which will exceed the performance of today’s high-end supercomputers by 10- to 100-fold. However, a new algorithm could allow for 100 percent (whole-brain-scale simulation) on exascale supercomputers, using the same amount of computer memory as current supercomputers. (credit: Forschungszentrum Jülich, adapted by KurzweilAI)

With memory consumption under control, simulation speed will then become the main focus. For example, a large simulation of 0.52 billion neurons connected by 5.8 trillion synapses running on the supercomputer JUQUEEN in Jülich previously required 28.5 minutes to compute one second of biological time. With the improved algorithm, the time will be reduced to just 5.2 minutes, the researchers calculate.
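The timings quoted above can be restated as a speedup and a "slower than real time" factor. The arithmetic uses only the numbers given in that paragraph.

```python
old_minutes = 28.5   # wall-clock minutes per 1 s of biological time, previous code
new_minutes = 5.2    # projected with the improved algorithm
n_neurons = 0.52e9
n_synapses = 5.8e12

old_rtf = old_minutes * 60   # wall-clock seconds per biological second
new_rtf = new_minutes * 60
speedup = old_minutes / new_minutes
synapses_per_neuron = n_synapses / n_neurons

print(f"old: {old_rtf:.0f}x slower than real time")
print(f"new: {new_rtf:.0f}x slower than real time")
print(f"speedup: {speedup:.1f}x")
print(f"synapses per neuron: {synapses_per_neuron:,.0f}")
```

The result is roughly a 5.5-fold speedup, from about 1,710 to about 312 seconds of computing per second of biological time, for a network with on the order of 11,000 synapses per neuron.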

“The combination of exascale hardware and [forthcoming NEST] software brings investigations of fundamental aspects of brain function, like plasticity and learning, unfolding over minutes of biological time, within our reach,” says Diesmann.

The new algorithm will also make simulations faster on presently available petascale supercomputers, the researchers found.

NEST simulation software update. In one of the next releases of the simulation software by the Neural Simulation Technology Initiative, the researchers will make the new open-source code freely available to the community.

For the first time, researchers will have the computer power available to simulate neuronal networks on the scale of the entire human brain.

Kenji Doya of Okinawa Institute of Science and Technology (OIST) may be among the first to try it. “We have been using NEST for simulating the complex dynamics of the basal ganglia circuits in health and Parkinson’s disease on the K computer. We are excited to hear the news about the new generation of NEST, which will allow us to run whole-brain-scale simulations on the post-K computer to clarify the neural mechanisms of motor control and mental functions,” he says.

* NEST is a simulator for spiking neural network models that focuses on the dynamics, size and structure of neural systems, rather than on the exact morphology of individual neurons. NEST is ideal for networks of spiking neurons of any size, such as models of information processing, e.g., in the visual or auditory cortex of mammals, models of network activity dynamics, e.g., laminar cortical networks or balanced random networks, and models of learning and plasticity.

** Petascale supercomputers operate at petaflop/s (quadrillions, or 10¹⁵, floating-point operations per second). Future exascale supercomputers will operate at exaflop/s (10¹⁸ flop/s). The fastest supercomputer at this time is the Sunway TaihuLight at the National Supercomputing Center in Wuxi, China, operating at 93 petaflop/s.

*** At Jülich, the work is supported by the Simulation Laboratory Neuroscience, a facility of the Bernstein Network Computational Neuroscience at Jülich Supercomputing Centre. Partial funding comes from the European Union Seventh Framework Programme (Human Brain Project, HBP) and the European Union’s Horizon 2020 research and innovation programme, and the Exploratory Challenge on Post-K Computer (Understanding the neural mechanisms of thoughts and its applications to AI) of the Ministry of Education, Culture, Sports, Science and Technology (MEXT) Japan. With their joint project between Japan and Europe, the researchers hope to contribute to the formation of an International Brain Initiative (IBI).


Abstract of Extremely Scalable Spiking Neuronal Network Simulation Code: From Laptops to Exascale Computers

State-of-the-art software tools for neuronal network simulations scale to the largest computing systems available today and enable investigations of large-scale networks of up to 10 % of the human cortex at a resolution of individual neurons and synapses. Due to an upper limit on the number of incoming connections of a single neuron, network connectivity becomes extremely sparse at this scale. To manage computational costs, simulation software ultimately targeting the brain scale needs to fully exploit this sparsity. Here we present a two-tier connection infrastructure and a framework for directed communication among compute nodes accounting for the sparsity of brain-scale networks. We demonstrate the feasibility of this approach by implementing the technology in the NEST simulation code and we investigate its performance in different scaling scenarios of typical network simulations. Our results show that the new data structures and communication scheme prepare the simulation kernel for post-petascale high-performance computing facilities without sacrificing performance in smaller systems.
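The sparsity described in the abstract can be estimated with a short calculation. The sketch makes two simplifying assumptions that do not come from the paper. First, each neuron has about K = 10,000 targets. Second, those targets are scattered uniformly at random over M processes. Under these assumptions, the chance that a given process hosts at least one target of a given neuron is 1 - (1 - 1/M)^K.

```python
import math

def fraction_of_processes_with_targets(k_out: int, n_processes: int) -> float:
    """Probability that a given process holds at least one of a neuron's k_out
    targets, assuming targets are spread uniformly at random over processes."""
    # (1 - 1/M)^K is the chance that all K targets miss this process;
    # log1p/expm1 keep the result accurate when 1/M is tiny.
    return -math.expm1(k_out * math.log1p(-1.0 / n_processes))

K = 10_000  # assumed number of targets per neuron (order of magnitude only)
for m in (100, 10_000, 100_000, 1_000_000):
    f = fraction_of_processes_with_targets(K, m)
    print(f"{m:>9,} processes: {f:.4f} of them need a given neuron's spikes")
```

Once the number of processes greatly exceeds K, almost no process needs any particular neuron's spikes. A table with one bit per neuron on every process is then nearly all zeros, and that is the waste the directed-communication scheme is designed to avoid.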