New supercomputer on a chip ‘sees’ well enough to drive a car someday

September 16, 2010 by Amara D. Angelica

Eugenio Culurciello of Yale’s School of Engineering & Applied Science has developed a supercomputer based on the ventral pathway of the mammalian visual system. Dubbed NeuFlow, the system mimics the visual system’s neural network to quickly interpret the world around it.

The system uses complex vision algorithms developed by Yann LeCun at New York University to run large neural networks for synthetic vision applications. The application Culurciello and LeCun are focusing on is a system that would allow cars to drive themselves. To recognize the objects encountered on the road, such as other cars, people, stoplights, sidewalks, and the road itself, NeuFlow processes tens of megapixel images in real time.

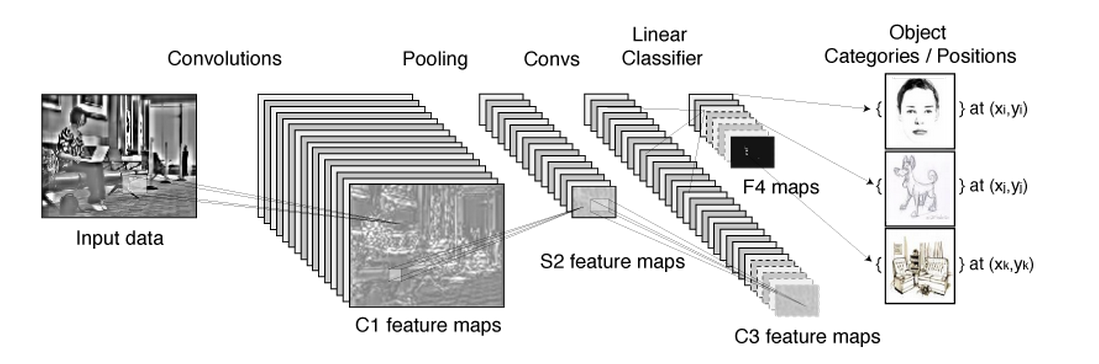

Convolutional neural networks (ConvNets) are multi-stage neural networks that can model the way the brain’s visual processing areas V1, V2, V4, and IT create invariance to size and position in order to identify objects. Each stage is composed of three layers: a filter bank layer, a non-linearity layer, and a feature pooling layer. A typical ConvNet is composed of one, two, or three such 3-layer stages, followed by a classification module. (Yale University)
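The three-layer stage described in the caption can be sketched in plain Python. This is a minimal illustration, not NeuFlow’s or LeCun’s implementation: the use of tanh as the non-linearity, max pooling over non-overlapping 2x2 windows, "valid" correlation with no padding, and all function names are assumptions made for the example.

```python
import math
from typing import List

Image = List[List[float]]


def filter_bank(image: Image, kernels: List[Image]) -> List[Image]:
    """Filter bank layer: correlate the image with each kernel ('valid' mode, no padding)."""
    h, w = len(image), len(image[0])
    maps = []
    for k in kernels:
        kh, kw = len(k), len(k[0])
        out = [
            [
                sum(image[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
                for j in range(w - kw + 1)
            ]
            for i in range(h - kh + 1)
        ]
        maps.append(out)
    return maps


def non_linearity(maps: List[Image]) -> List[Image]:
    """Non-linearity layer: apply tanh element-wise (an assumed choice of function)."""
    return [[[math.tanh(v) for v in row] for row in m] for m in maps]


def max_pool(maps: List[Image], size: int = 2) -> List[Image]:
    """Feature pooling layer: take the max over non-overlapping size x size windows."""
    pooled = []
    for m in maps:
        h, w = len(m) // size, len(m[0]) // size
        pooled.append([
            [
                max(m[i * size + a][j * size + b] for a in range(size) for b in range(size))
                for j in range(w)
            ]
            for i in range(h)
        ])
    return pooled


def convnet_stage(image: Image, kernels: List[Image]) -> List[Image]:
    """One 3-layer stage: filter bank -> non-linearity -> feature pooling."""
    return max_pool(non_linearity(filter_bank(image, kernels)))


# Usage: a 6x6 image with a vertical edge, filtered by a 3x3 vertical-edge kernel.
image = [[0.0] * 3 + [1.0] * 3 for _ in range(6)]
edge_kernel = [[-1.0, 0.0, 1.0] for _ in range(3)]
features = convnet_stage(image, [edge_kernel])  # one 2x2 feature map
```

Pooling shrinks each 4x4 filter response to 2x2, and the edge still produces a strong response whether it falls in the left or right column of a pooling window; that local tolerance to position is what stacking such stages builds on.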

The system is also extremely efficient: it runs more than 100 billion operations per second on only a few watts (less than the power a cell phone uses), accomplishing what takes benchtop computers with multiple graphics processors more than 300 watts.

“One of our first prototypes of this system is already capable of outperforming graphic processors on vision tasks,” Culurciello said.

Culurciello embedded the supercomputer on a single chip, making the system much smaller, yet more powerful and efficient, than full-scale computers. “The complete system is going to be no bigger than a wallet, so it could easily be embedded in cars and other places,” Culurciello said.

Beyond autonomous car navigation, the system could improve robot navigation in dangerous or difficult-to-reach locations, provide 360-degree synthetic vision for soldiers in combat, or, in assisted living settings, monitor motion and call for help should an elderly person fall.

Other collaborators include Clement Farabet (Yale University and New York University), Berin Martini, Polina Akselrod, Selcuk Talay (Yale University) and Benoit Corda (New York University).

Culurciello presented the results Sept. 15 at the High Performance Embedded Computing (HPEC) workshop in Boston.

Bio-inspired object categorization

The researchers are also developing bio-inspired neuromorphic algorithms and neural processing hardware to allow fast categorization of hundreds of objects in real time in multi-megapixel images, videos, and custom event-based cameras. Applications include robotic vision, security, monitoring, assisted living, and remote care of elderly people and patients.

They have used temporal-difference image sensors to recognize objects and people’s postures in real time. The algorithm also works on any regular off-the-shelf camera and image sensor array. It is lightweight and can be implemented on embedded platforms such as sensor networks and cellular phones.
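A temporal-difference sensor reports only the pixels whose brightness changes, so a static background produces no output and later recognition stages have far fewer pixels to examine. The sketch below imitates that behavior in software by differencing two ordinary camera frames. The threshold value, the `Event` format, and the bounding-box step are hypothetical choices for illustration, not the researchers’ published algorithm.

```python
from typing import List, NamedTuple, Optional, Tuple

Frame = List[List[int]]  # grayscale intensities, e.g. 0-255


class Event(NamedTuple):
    row: int
    col: int
    polarity: int  # +1 if the pixel got brighter, -1 if it got darker


def temporal_difference(prev: Frame, curr: Frame, threshold: int = 15) -> List[Event]:
    """Emit an event for every pixel whose intensity changed by more than `threshold`.

    Unchanged pixels (static background) produce no events at all.
    """
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            diff = q - p
            if abs(diff) > threshold:
                events.append(Event(r, c, 1 if diff > 0 else -1))
    return events


def active_region(events: List[Event]) -> Optional[Tuple[int, int, int, int]]:
    """Bounding box (top, left, bottom, right) of all events, or None if nothing moved."""
    if not events:
        return None
    rows = [e.row for e in events]
    cols = [e.col for e in events]
    return min(rows), min(cols), max(rows), max(cols)


# Usage: two 4x4 frames where one pixel brightens and another darkens.
prev = [[100] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
curr[1][2] = 200
curr[2][1] = 50
events = temporal_difference(prev, curr)
box = active_region(events)  # region a recognizer would then classify
```

Only the changed region is handed on, which is one plausible way such a pipeline keeps its computation low enough for phones and sensor nodes.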

The researchers have demonstrated the ultra-low-computation object recognition engine running on an Apple iPhone.

They have also used this algorithm to categorize human postures in real time for assisted living applications. The goal is to monitor elderly people or patients in the comfort of their homes.

More info: Yale University news, publications, Windows PC code, Mac OS X code.