NSF announces ‘big data’ research funding

March 30, 2012

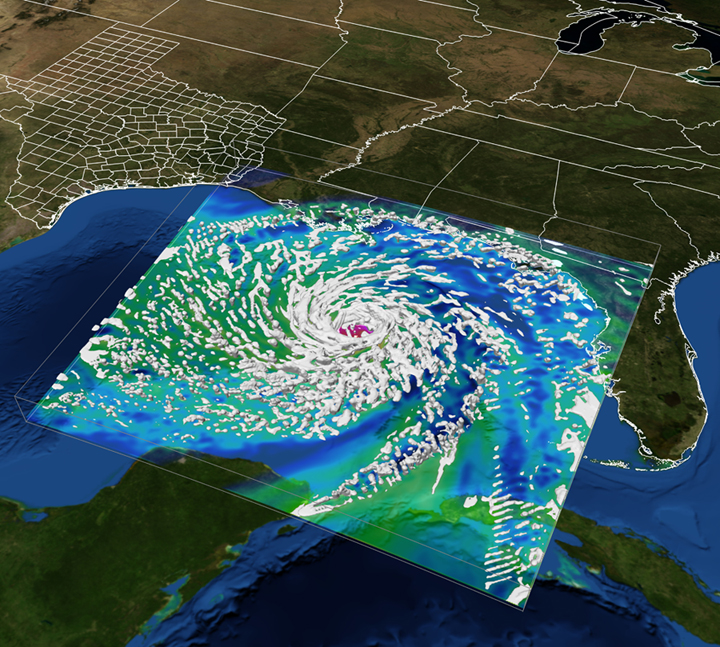

Throughout the 2008 hurricane season, the Texas Advanced Computing Center (TACC) was an active participant in a NOAA research effort to develop next-generation hurricane models. Teams of scientists relied on TACC's Ranger supercomputer to test high-resolution ensemble hurricane models and to track evacuation routes using data streams from the ground and from space. Using up to 40,000 processing cores at once, researchers simulated both global and regional weather models. On-demand access to some of the most powerful hardware in the world enabled real-time, high-resolution ensemble simulations of the storm. This visualization of Hurricane Ike shows the storm developing in the Gulf of Mexico and making landfall on the Texas coast. (Credit: Gregory P. Johnson, Romy Schneider, John Cazes, Karl Schulz, Bill Barth, The University of Texas at Austin; Frank Marks, NOAA; Fuqing Zhang and Yonghui Weng, Pennsylvania State University)

National Science Foundation (NSF) Director Subra Suresh joined other federal science agency leaders to discuss cross-agency big data plans and announce new areas of research funding across disciplines at an event led by the White House Office of Science and Technology Policy in Washington, D.C.

NSF released a Big Data solicitation, “Core Techniques and Technologies for Advancing Big Data Science & Engineering,” jointly with the National Institutes of Health (NIH).

This program will fund research to develop and evaluate new algorithms, statistical methods, technologies, and tools for improved data collection and management, data analytics, and e-science collaboration environments.

NSF also announced a $10 million award under the Expeditions in Computing program to researchers at the University of California, Berkeley.

The team will integrate algorithms, machines, and people to turn data into knowledge and insight. The objective is to develop new scalable machine-learning algorithms and data management tools that can handle large-scale, heterogeneous datasets, as well as novel datacenter-friendly programming models and an improved computational infrastructure.

Cyberinfrastructure Framework for 21st Century Science and Engineering

NSF’s Cyberinfrastructure Framework for 21st Century Science and Engineering, or “CIF21,” is central to the agency's strategic big data efforts. CIF21 will foster the development and implementation of a national cyberinfrastructure for researchers in science and engineering, with the goal of democratizing access to data.

Awards announced include:

- The first round of awards made through EarthCube, an NSF geosciences program under the CIF21 framework, will support the development of community-guided cyberinfrastructure to integrate big data across the geosciences and ultimately change how geosciences research is conducted. Integrating data from disparate locations and sources, with eclectic structures and formats, whether stored or captured in real time, will expedite the delivery of geoscience knowledge.

- A $1.4 million award for a focused research group that brings together statisticians and biologists to develop network models and automatic, scalable algorithms and tools to determine protein structures and biological pathways.

- A $2 million award for a research training group in big data will support training for undergraduates, graduate students, and postdoctoral fellows in using statistical, graphical, and visualization techniques for complex data.