Open-source GPU could push computing power to the next level

January 20, 2016


Binghamton University researchers have developed Nyami, a synthesizable graphics processing unit (GPU) architectural model for general-purpose and graphics-specific workloads, and have run a series of experiments on it to see how different hardware and software configurations affect the circuit’s performance.

Binghamton University computer science assistant professor Timothy Miller said the results will help other scientists make their own GPUs and “push computing power to the next level.”

GPUs are typically found on commercial video or graphics cards inside a computer or gaming console. These specialized circuits are designed to make images appear smoother and more vibrant on a screen. Recently, researchers have been exploring whether GPUs can also be applied to non-graphical computations, such as algorithms that process large chunks of data.

GPU programming model “unfamiliar”

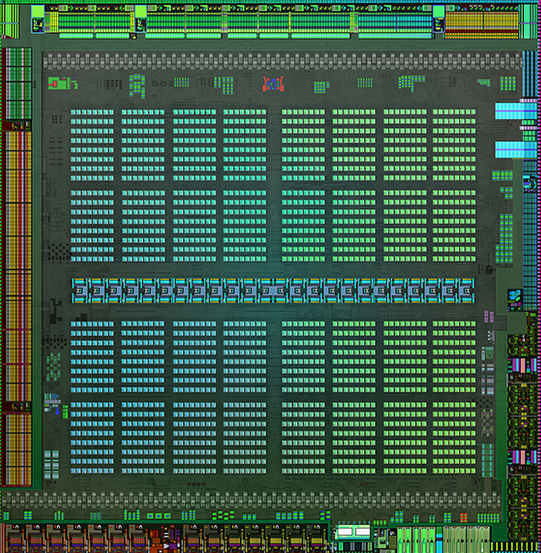

Maxwell, Nvidia’s most powerful GPU architecture (credit: Nvidia)

“In terms of performance per Watt and performance per cubic meter, GPUs can outperform CPUs by orders of magnitude on many important workloads,” the researchers note in an open-access paper by Miller and co-authors presented at the International Symposium on Performance Analysis of Systems and Software (ISPASS). Jeff Bush, director of software engineering at Roku, was lead author.

“The adoption of GPUs into HPC [high-performance computing] has therefore been both a major boost in performance and a shift in how supercomputers are programmed. Unfortunately, this shift has suffered slow adoption because the GPU programming model is unfamiliar to those who are accustomed to writing software for traditional CPUs.”

This slow adoption was a result of GPU manufacturers’ decision to keep their chip specifications secret, said Miller. “That prevented open source developers from writing software that could utilize that hardware. Nyami makes it easier for other researchers to conduct experiments of their own, because they don’t have to reinvent the wheel. With contributions from the ‘open hardware’ community, we can incorporate more creative ideas and produce an increasingly better tool.

“The ramifications of the findings could make processors easier for researchers to work with and explore different design tradeoffs. We can also use [Nyami] as a platform for conducting research that isn’t GPU-specific, like energy efficiency and reliability,” he added.

Abstract of Nyami: a synthesizable GPU architectural model for general-purpose and graphics-specific workloads

Graphics processing units (GPUs) continue to grow in popularity for general-purpose, highly parallel, high-throughput systems. This has forced GPU vendors to increase their focus on general purpose workloads, sometimes at the expense of the graphics-specific workloads. Using GPUs for general-purpose computation is a departure from the driving forces behind programmable GPUs that were focused on a narrow subset of graphics rendering operations. Rather than focus on purely graphics-related or general-purpose use, we have designed and modeled an architecture that optimizes for both simultaneously to efficiently handle all GPU workloads. In this paper, we present Nyami, a co-optimized GPU architecture and simulation model with an open-source implementation written in Verilog. This approach allows us to more easily explore the GPU design space in a synthesizable, cycle-precise, modular environment. An instruction-precise functional simulator is provided for co-simulation and verification. Overall, we assume a GPU may be used as a general-purpose GPU (GPGPU) or a graphics engine and account for this in the architecture’s construction and in the options and modules selectable for synthesis and simulation. To demonstrate Nyami’s viability as a GPU research platform, we exploit its flexibility and modularity to explore the impact of a set of architectural decisions. These include sensitivity to cache size and associativity, barrel and switch-on-stall multithreaded instruction scheduling, and software vs. hardware implementations of rasterization. Through these experiments, we gain insight into commonly accepted GPU architecture decisions, adapt the architecture accordingly, and give examples of the intended use as a GPU research tool.
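To make one of the abstract’s design decisions more concrete, here is a minimal toy sketch contrasting barrel and switch-on-stall multithreaded instruction scheduling. It is not Nyami’s Verilog pipeline. It assumes a hypothetical single-issue core where ALU instructions take one cycle, every memory load has the same fixed latency, and switching threads in switch-on-stall mode costs a hypothetical `switch_penalty` of cycles. The function names `barrel` and `switch_on_stall` are illustrative only.

```python
from typing import List

# Hypothetical toy model of two thread-scheduling policies; NOT Nyami's design.
ALU, LOAD = "alu", "load"


def _can_issue(threads, pcs, ready_at, tid, cycle):
    """A thread can issue if it has instructions left and is not waiting."""
    return pcs[tid] < len(threads[tid]) and ready_at[tid] <= cycle


def barrel(threads: List[List[str]], mem_latency: int) -> int:
    """Strict round-robin: cycle c may only issue from thread c % N.
    If that thread is stalled on a load (or finished), the slot is wasted.
    Returns the cycle at which every instruction's result is available."""
    n = len(threads)
    pcs, ready_at = [0] * n, [0] * n
    cycle = 0
    remaining = sum(len(t) for t in threads)
    while remaining:
        tid = cycle % n
        if _can_issue(threads, pcs, ready_at, tid, cycle):
            op = threads[tid][pcs[tid]]
            pcs[tid] += 1
            remaining -= 1
            ready_at[tid] = cycle + (mem_latency if op == LOAD else 1)
        cycle += 1
    return max(ready_at)


def switch_on_stall(threads: List[List[str]], mem_latency: int,
                    switch_penalty: int = 1) -> int:
    """Keep issuing from the current thread until it cannot issue
    (stalled on a load or finished), then switch to the next ready thread,
    paying switch_penalty cycles (assumed pipeline refill cost)."""
    n = len(threads)
    pcs, ready_at = [0] * n, [0] * n
    cycle, cur = 0, 0
    remaining = sum(len(t) for t in threads)
    while remaining:
        if _can_issue(threads, pcs, ready_at, cur, cycle):
            op = threads[cur][pcs[cur]]
            pcs[cur] += 1
            remaining -= 1
            ready_at[cur] = cycle + (mem_latency if op == LOAD else 1)
            cycle += 1
            continue
        # Current thread cannot issue: search the other threads in order.
        for step in range(1, n):
            tid = (cur + step) % n
            if _can_issue(threads, pcs, ready_at, tid, cycle):
                cur = tid
                cycle += switch_penalty
                break
        else:
            cycle += 1  # no other thread is ready: idle for one cycle
    return max(ready_at)


if __name__ == "__main__":
    loads = [[LOAD, ALU], [LOAD, ALU]]
    print("barrel:", barrel(loads, mem_latency=3))
    print("switch-on-stall:", switch_on_stall(loads, mem_latency=3))
```

In this toy model, barrel scheduling never pays a switch cost but wastes the issue slots of stalled or finished threads, while switch-on-stall uses any ready thread but pays for each switch. Varying the thread count, load latency, and instruction mix shows that neither policy wins everywhere, which is the kind of trade-off a configurable research model like Nyami is meant to let researchers measure on real hardware descriptions.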