Poison attacks against machine learning

July 23, 2012

New results indicate that it may be easier than we thought to make a learning program learn the wrong things by feeding it deliberately misleading data, a “poison attack,” I Programmer reports.

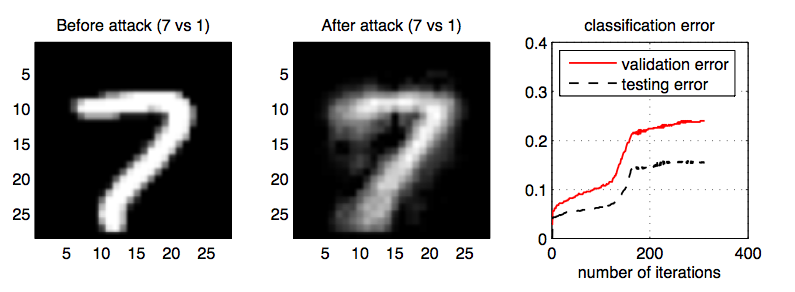

Three researchers, Battista Biggio (Italy), Blaine Nelson and Pavel Laskov (Germany), have found a way to feed a Support Vector Machine (SVM) data specially designed to increase its error rate as much as possible using only a few bogus data points.

SVMs are fairly simple learning devices that use examples to make classifications or decisions. They are used in security settings to detect abnormal behavior, such as fraud and credit card use anomalies, and even to weed out spam. SVMs learn by being shown examples of the sorts of things they are supposed to detect, and they can keep learning from new examples while doing their job.
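For reference, the standard soft-margin linear SVM (the textbook formulation, not anything specific to this study) learns a weight vector $\mathbf{w}$ and bias $b$ from labelled examples $(\mathbf{x}_i, y_i)$, $i = 1, \dots, n$, with $y_i \in \{-1, +1\}$, by solving the problem below. Here $C > 0$ trades a wide margin against training errors, and a new input $\mathbf{x}$ is classified by the sign of $\mathbf{w}^\top\mathbf{x} + b$.

$$
\min_{\mathbf{w},\,b}\;\; \frac{1}{2}\lVert\mathbf{w}\rVert^2 \;+\; C\sum_{i=1}^{n}\max\bigl(0,\; 1 - y_i(\mathbf{w}^\top\mathbf{x}_i + b)\bigr)
$$

Each training point contributes one hinge term, so a single added point $(\mathbf{x}_c, y_c)$ adds $C\max\bigl(0,\, 1 - y_c(\mathbf{w}^\top\mathbf{x}_c + b)\bigr)$ to the objective and can shift the learned boundary; that is the lever a poisoning attack pulls. Kernel SVMs follow the same idea with the inner product replaced by a kernel function.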

The approach assumes that the attacker knows the learning algorithm being employed and has access to the original training data, or to simulated data that resembles it.
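The threat model above can be illustrated with a small, self-contained Python sketch. It is not the researchers' algorithm: their attack optimizes where each poison point goes, whereas the hypothetical `greedy_poison` below simply tries every point on a fixed grid (with either label), retrains a copy of the victim, and keeps whichever point raises the error on a held-out validation set the most. The toy linear SVM (`train_svm`, fitted by plain subgradient descent), the synthetic two-blob data and all parameter values are illustrative assumptions.

```python
import random

# Illustrative sketch only: a toy linear SVM plus a hypothetical greedy
# poisoning attacker. Not the method from the Biggio/Nelson/Laskov paper.


def make_data(n, seed):
    """Synthetic 2-D data: label +1 near (1, 1), label -1 near (-1, -1)."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        y = 1 if i % 2 == 0 else -1  # alternate labels so classes are balanced
        x = (y + rng.gauss(0, 0.8), y + rng.gauss(0, 0.8))
        data.append((x, y))
    return data


def train_svm(data, lam=0.01, epochs=100, lr=0.5):
    """Linear soft-margin SVM: minimize lam/2 * ||w||^2 + mean hinge loss
    by full-batch subgradient descent. Deterministic for a given dataset."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(data)
    for t in range(1, epochs + 1):
        gw = [lam * w[0], lam * w[1]]  # gradient of the regularizer
        gb = 0.0
        for (x1, x2), y in data:
            if y * (w[0] * x1 + w[1] * x2 + b) < 1:  # inside margin: hinge active
                gw[0] -= y * x1 / n
                gw[1] -= y * x2 / n
                gb -= y / n
        step = lr / t ** 0.5  # decaying step size
        w = [w[0] - step * gw[0], w[1] - step * gw[1]]
        b -= step * gb
    return w, b


def error_rate(model, data):
    """Fraction of points whose sign(w.x + b) disagrees with the label."""
    w, b = model
    wrong = sum(1 for (x1, x2), y in data if y * (w[0] * x1 + w[1] * x2 + b) <= 0)
    return wrong / len(data)


def greedy_poison(train, val, candidates, budget):
    """Hypothetical attacker who knows the learner (train_svm) and the
    training set: each round, retrain with every candidate point added and
    keep the one that raises validation error the most."""
    poison = []
    best_err = error_rate(train_svm(train), val)
    for _ in range(budget):
        best_pt = None
        for pt in candidates:
            err = error_rate(train_svm(train + poison + [pt]), val)
            if err > best_err:
                best_err, best_pt = err, pt
        if best_pt is None:  # no candidate makes the model worse; stop early
            break
        poison.append(best_pt)
    return poison, best_err


train = make_data(40, seed=1)
val = make_data(40, seed=2)
grid = [-3.0, -1.5, 0.0, 1.5, 3.0]
candidates = [((a, c), y) for a in grid for c in grid for y in (1, -1)]

clean_err = error_rate(train_svm(train), val)
poison, poisoned_err = greedy_poison(train, val, candidates, budget=3)
print(f"clean validation error: {clean_err:.3f}")
print(f"after {len(poison)} poison point(s): {poisoned_err:.3f}")
```

Because this attacker only accepts a point that strictly increases validation error, the poisoned error can never fall below the clean one; how far it rises depends on the data, the grid and the budget. Greedy search is used here only because it is easy to read; it costs one full retraining per candidate per round.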

They found that their method had a surprisingly large impact on the performance of the SVMs tested. They also point out that an attacker might be able to steer the induced errors toward particular types of misclassification. For example, a spammer could send some poisoned data so as to evade detection in the future.