‘Robo Brain’ will teach robots everything from the Internet

August 26, 2014

Robo Brain logo (credit: Saxena Lab)

Robo Brain is currently downloading and processing about 1 billion images, 120,000 YouTube videos, and 100 million how-to documents and appliance manuals, all being translated and stored in a robot-friendly format.

The reason: to serve as helpers in our homes, offices and factories, robots will need to understand how the world works and how the humans around them behave.

Robotics researchers like Ashutosh Saxena, assistant professor of computer science at Cornell University, and his associates at Cornell's Personal Robotics Lab have been teaching robots these things one at a time (KurzweilAI has covered this work in four articles over the last two years).

Robotic arm placing an object in a specific location (credit: Saxena Lab)

For example, they have taught robots how to find your keys, pour a drink, put away dishes, and when not to interrupt two people having a conversation.

Now it’s all being automated, cloudified, and crowdsourced.

"If a robot encounters a situation it hasn't seen before, it can query Robo Brain in the cloud," said Saxena.

Saxena and colleagues at Cornell, Stanford and Brown universities and the University of California, Berkeley, say Robo Brain will process images to pick out the objects in them, and by connecting images and video with text, it will learn to recognize objects and how they are used, along with human language and behavior.

If a robot sees a coffee mug, it can learn from Robo Brain not only that it’s a coffee mug, but also that liquids can be poured into or out of it, that it can be grasped by the handle, and that it must be carried upright when it is full, as opposed to when it is being carried from the dishwasher to the cupboard.
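To make the coffee-mug example concrete, here is a minimal Python sketch, assuming knowledge is stored as simple (subject, relation, object) triples and looked up by object name. The triple format, the relation names, and the `build_index` and `query` helpers are hypothetical illustrations, not Robo Brain's actual schema or API.

```python
from collections import defaultdict

# Hypothetical facts about a coffee mug, written as (subject, relation, object) triples.
# The relation names are illustrative only, not Robo Brain's real schema.
KNOWLEDGE = [
    ("coffee_mug", "is_a", "container"),
    ("coffee_mug", "can_pour_into", "liquid"),
    ("coffee_mug", "can_pour_out_of", "liquid"),
    ("coffee_mug", "grasp_at", "handle"),
    ("coffee_mug", "carry_when_full", "upright"),
]


def build_index(triples):
    """Group (relation, object) pairs by subject for fast lookup."""
    index = defaultdict(list)
    for subject, relation, obj in triples:
        index[subject].append((relation, obj))
    return index


def query(index, subject):
    """Return a relation -> object mapping of everything recorded about `subject`."""
    # .get avoids inserting an empty entry into the defaultdict for unknown subjects.
    return dict(index.get(subject, []))


index = build_index(KNOWLEDGE)
facts = query(index, "coffee_mug")
print(facts["grasp_at"])         # handle
print(facts["carry_when_full"])  # upright
```

A robot that has recognized a mug can then ask where to grasp it or how to carry it without having learned those facts itself; an unknown object simply returns an empty mapping.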

RSS2014: 07/16 15:00-16:00 Early Career Spotlight: Ashutosh Saxena (Cornell): Robot Learning

Structured deep learning

Saxena described the project at the 2014 Robotics: Science and Systems Conference, July 12–16 in Berkeley, and has launched a website for the project at http://robobrain.me.

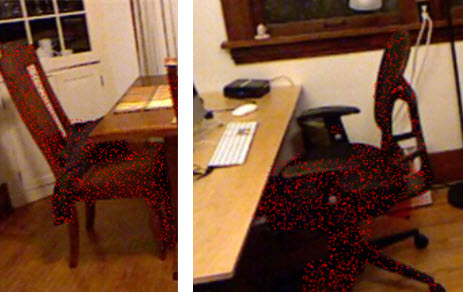

Semantic labeling: Chair has the following appearance in the image (credit: Saxena et al.)

The system employs what computer scientists call “structured deep learning,” where information is stored in many levels of abstraction. An easy chair is a member of the class of chairs, and going up another level, chairs are furniture.

Robo Brain knows that chairs are something you can sit on, but that a human can also sit on a stool, a bench or the lawn.
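The levels of abstraction and the "something you can sit on" knowledge can be sketched as an "is a" hierarchy plus an affordance attached at the right level. This is a minimal illustration, assuming a hand-written hierarchy; the `IS_A` and `SITTABLE` tables and the helper functions are hypothetical, not taken from Robo Brain.

```python
# Hypothetical "is a" links: each concept points to its more general parent class.
IS_A = {
    "easy_chair": "chair",
    "chair": "furniture",
    "stool": "furniture",
    "bench": "furniture",
    "table": "furniture",
    "lawn": "outdoor_surface",
}

# Hypothetical affordance: concepts a human can sit on.
SITTABLE = {"chair", "stool", "bench", "lawn"}


def ancestors(concept):
    """Walk up the is-a chain from a concept to its most general class."""
    chain = [concept]
    while chain[-1] in IS_A:
        chain.append(IS_A[chain[-1]])
    return chain


def can_sit_on(concept):
    """A concept is sittable if it, or any class above it, is sittable."""
    return any(c in SITTABLE for c in ancestors(concept))


print(ancestors("easy_chair"))  # ['easy_chair', 'chair', 'furniture']
print(can_sit_on("easy_chair"))  # True, inherited from "chair"
print(can_sit_on("table"))       # False: being furniture is not enough
```

Attaching "sittable" to chairs rather than to furniture lets an easy chair inherit it while a table does not, and the lawn carries the affordance even though it is not furniture at all.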

A robot’s computer brain stores what it has learned in a form mathematicians call a Markov model, which can be represented graphically as a set of points connected by lines (formally called nodes and edges).

The nodes could represent objects, actions or parts of an image, and each one is assigned a probability: a measure of how much it can vary and still be correct.
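One common form of such a graphical model is a pairwise Markov random field, where each node holds a label and each edge scores how well two labels fit together. The sketch below assumes that form, with one object node and one action node joined by a single edge; the labels and all potential values are invented for illustration and do not come from Robo Brain.

```python
from itertools import product

OBJECT_LABELS = ["mug", "bowl"]
ACTION_LABELS = ["pour", "grasp_handle"]

# Unary potentials: how strongly (hypothetical) image evidence supports each label.
UNARY_OBJECT = {"mug": 0.6, "bowl": 0.4}
UNARY_ACTION = {"pour": 0.5, "grasp_handle": 0.5}

# Pairwise potential on the object-action edge: compatibility of each label pair.
PAIRWISE = {
    ("mug", "pour"): 1.0,
    ("mug", "grasp_handle"): 2.0,
    ("bowl", "pour"): 1.0,
    ("bowl", "grasp_handle"): 0.1,  # bowls rarely have handles
}


def joint_distribution():
    """P(object, action), proportional to the product of unary and pairwise potentials."""
    scores = {
        (obj, act): UNARY_OBJECT[obj] * UNARY_ACTION[act] * PAIRWISE[(obj, act)]
        for obj, act in product(OBJECT_LABELS, ACTION_LABELS)
    }
    z = sum(scores.values())  # partition function normalizes the scores
    return {labels: s / z for labels, s in scores.items()}


dist = joint_distribution()
best = max(dist, key=dist.get)
print(best)  # ('mug', 'grasp_handle')
```

Brute-force enumeration is only feasible for a toy graph like this one; with many nodes, approximate inference methods are normally used instead.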

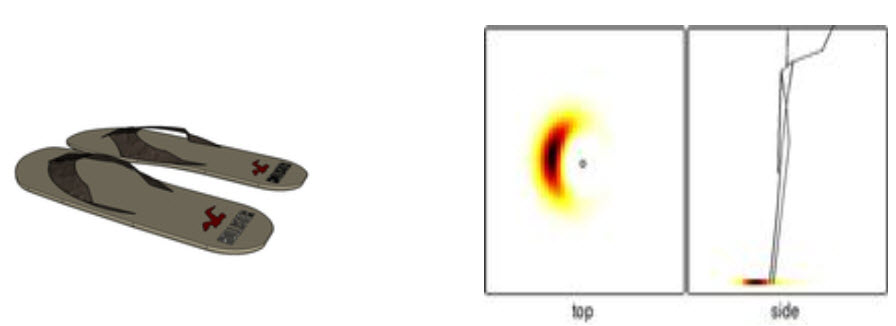

Object Affordance: the position of a standing human while using a shoe is distributed as heatmap_12 (credit: Saxena Lab)

In searching for knowledge, a robot's brain builds its own chain of nodes and looks for one in the knowledge base that matches within those limits. "The Robo Brain will look like a gigantic, branching graph with abilities for multi-dimensional queries," said Aditya Jami, a visiting researcher at Cornell, who designed the large-scale database for the brain. It may end up looking something like a chart of relationships between Facebook friends, but on the scale of the Milky Way galaxy.
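The "match within those limits" idea can be sketched with a deliberately simplified model: linear chains rather than a branching graph, where each stored node has a label, a numeric value and a tolerance. The `Node` fields, the tolerance rule and the example data are all hypothetical assumptions for illustration, not Robo Brain's actual query mechanism.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    label: str
    value: float      # hypothetical numeric attribute, e.g. a grasp height in cm
    tolerance: float  # how far a query value may drift and still count as a match


def nodes_match(wanted: Node, stored: Node) -> bool:
    """Labels must agree and the value must lie within the stored node's tolerance."""
    return wanted.label == stored.label and abs(wanted.value - stored.value) <= stored.tolerance


def find_matches(query_chain, knowledge_base):
    """Return every stored chain that matches the query chain node by node."""
    return [
        chain for chain in knowledge_base
        if len(chain) == len(query_chain)
        and all(nodes_match(q, s) for q, s in zip(query_chain, chain))
    ]


# Hypothetical knowledge base of two chains about a mug.
KNOWLEDGE_BASE = [
    [Node("mug", 10.0, 2.0), Node("grasp", 5.0, 1.0)],
    [Node("mug", 10.0, 2.0), Node("pour", 0.0, 0.5)],
]

# The robot's own observation: values are slightly off, but within tolerance.
QUERY = [Node("mug", 11.0, 0.0), Node("grasp", 5.5, 0.0)]

print(find_matches(QUERY, KNOWLEDGE_BASE))  # only the first (grasp) chain matches
```

A real multi-dimensional graph query would also have to follow branches and combine probabilities, but the core test, "same kind of node, close enough in value", is the same.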

Like a human learner, Robo Brain will have teachers, thanks to crowdsourcing. The Robo Brain website will display things the brain has learned, and visitors will be able to make additions and corrections.

The project is supported by the National Science Foundation, the Office of Naval Research, the Army Research Office, Google, Microsoft, Qualcomm, the Alfred P. Sloan Foundation and the National Robotics Initiative, whose goal is to advance robotics to help make the United States competitive in the world economy.