Robot learns to use tools by ‘watching’ YouTube videos

January 2, 2015

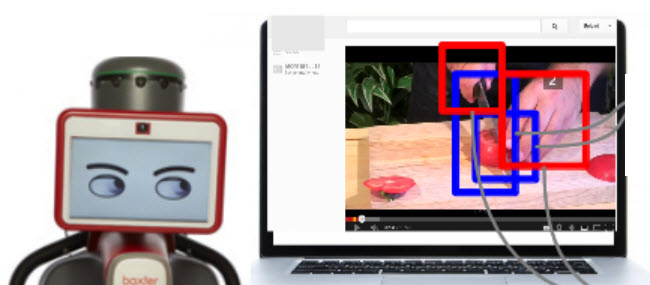

Robot watches videos to detect objects and how to grasp them. “Hmmm, so that’s how to hold and slice a soft spherical red object by holding a slicing-tool with a ‘power small diameter’ type grip, while avoiding damage to my gripper hand. Got it.” (Credit: Yezhou Yang et al.)

Imagine a self-learning robot that can enrich its knowledge about fine-grained manipulation actions (such as preparing food) simply by “watching” demo videos. That’s the idea behind a new robot-training system based on recent developments in “deep neural networks” for computer vision, developed by researchers at the University of Maryland and NICTA in Australia.

The system aims to improve on previous automated robot-training systems such as Robobrain, discussed on KurzweilAI last August. Robobrain, which has been downloading and processing about 1 billion images, 120,000 YouTube videos, and 100 million how-to documents and appliance manuals, is designed to deduce where and how to grasp an object from its appearance.

The problem with Robobrain (and other automated robot-training systems): there’s a large variation in exactly where (which frames) the action appears in the videos, and without 3D information, it’s hard for the robot to infer what part of the image to model and how to associate a specific action such as a hand movement (cracking an egg on a dish, for example) with a specific object (“hmm, did the human just crack the egg on the dish, or did it break open magically from an arm movement, or do spheroid objects spontaneously implode?”).

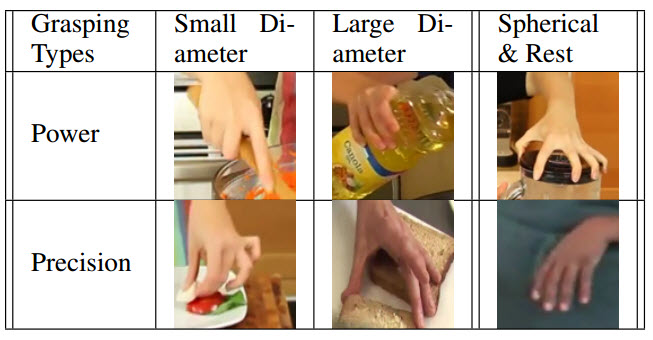

Object grasping types (credit: Yezhou Yang et al.)

The researchers took a crack, if you will, at this problem by developing a deep-learning system that uses object recognition (it’s an egg) and grasping-type recognition (it’s using a “precision large diameter” grasping type) and can also learn what tool to use and how to grasp it.

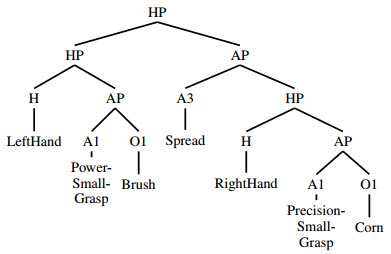

Buttering corn 101 for robots (credit: Yezhou Yang et al.)

Their system handles all of that with recognition modules based on a “convolutional neural network” (CNN), which also predicts the most likely action to take using language derived by mining a large database. The robot also needs to understand the hierarchical and recursive structure of an action. For that, the researchers used a parsing module based on a manipulation action grammar.
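As a rough illustration of how recognition scores and language statistics could be combined to pick an action, here is a minimal Python sketch. It is not the researchers’ code: the labels, the probability tables, and the `most_likely_action` helper are all hypothetical, standing in for CNN outputs and for statistics mined from text.

```python
from typing import Dict, Tuple

# Hypothetical recognizer outputs (made-up numbers, not from the paper).
tool_probs: Dict[str, float] = {"knife": 0.8, "spoon": 0.2}
object_probs: Dict[str, float] = {"tomato": 0.6, "bowl": 0.4}

# Hypothetical P(action | tool, object), as might be estimated from text.
action_given_pair: Dict[Tuple[str, str], Dict[str, float]] = {
    ("knife", "tomato"): {"cut": 0.9, "stir": 0.1},
    ("knife", "bowl"): {"cut": 0.3, "stir": 0.7},
    ("spoon", "tomato"): {"cut": 0.2, "stir": 0.8},
    ("spoon", "bowl"): {"cut": 0.1, "stir": 0.9},
}


def most_likely_action(tools, objects, actions):
    """Return (action, tool, object, score) with the highest joint score.

    The score is P(tool) * P(object) * P(action | tool, object), which
    assumes the two recognizers are independent of each other.
    """
    best = None
    for (tool, obj), dist in actions.items():
        for action, p_action in dist.items():
            score = tools.get(tool, 0.0) * objects.get(obj, 0.0) * p_action
            if best is None or score > best[3]:
                best = (action, tool, obj, score)
    return best


result = most_likely_action(tool_probs, object_probs, action_given_pair)
print(result[:3])
```

With these made-up numbers, the confident “knife” and “tomato” detections combine with the strong knife-tomato-cut association, so “cut” wins even though “stir” is more likely for several other pairings.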

Most likely parse tree generated for the buttering-corn clip (credit: Yezhou Yang et al.)

The system will be presented Jan. 29 at the AAAI conference in Austin (open-access paper is available online).

Hat tip: “Spikosauropod”

Abstract of Robot Learning Manipulation Action Plans by “Watching” Unconstrained Videos from the World Wide Web

In order to advance action generation and creation in robots beyond simple learned schemas we need computational tools that allow us to automatically interpret and represent human actions. This paper presents a system that learns manipulation action plans by processing unconstrained videos from the World Wide Web. Its goal is to robustly generate the sequence of atomic actions of seen longer actions in video in order to acquire knowledge for robots. The lower level of the system consists of two convolutional neural network (CNN) based recognition modules, one for classifying the hand grasp type and the other for object recognition. The higher level is a probabilistic manipulation action grammar based parsing module that aims at generating visual sentences for robot manipulation. Experiments conducted on a publicly available unconstrained video dataset show that the system is able to learn manipulation actions by “watching” unconstrained videos with high accuracy.
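To make the idea of grammar-based parsing more concrete, here is a small Python sketch of a probabilistic parser that picks the most likely tree for a short sequence of recognized symbols. It is only an illustration under stated assumptions: the toy grammar, its rule probabilities, the symbol names (`HP`, `AP`, `H`, `A`, `G`, `O`), and the `best_parse` helper are invented for this example and are not the grammar used in the paper. The search is a standard CKY-style dynamic program.

```python
from collections import defaultdict

# Hypothetical toy grammar in binary form: (left, right) -> [(parent, prob)].
BINARY = {
    ("H", "AP"): [("HP", 1.0)],   # hand phrase = hand + action phrase
    ("A", "O"): [("AP", 0.6)],    # action phrase = action + object
    ("G", "O"): [("AP", 0.4)],    # action phrase = grasp + object
}

# Hypothetical lexical rules: token -> [(symbol, prob)].
LEXICAL = {
    "RightHand": [("H", 1.0)],
    "Cut": [("A", 1.0)],
    "PowerGrasp": [("G", 1.0)],
    "Tomato": [("O", 1.0)],
}


def best_parse(tokens, root="HP"):
    """Return (probability, tree) for the best parse, or None if none exists."""
    n = len(tokens)
    chart = defaultdict(dict)  # (start, end) -> {symbol: (prob, tree)}
    for i, tok in enumerate(tokens):
        for sym, p in LEXICAL.get(tok, []):
            chart[(i, i + 1)][sym] = (p, (sym, tok))
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):  # try every split point
                for lsym, (lp, ltree) in chart[(i, k)].items():
                    for rsym, (rp, rtree) in chart[(k, j)].items():
                        for parent, p in BINARY.get((lsym, rsym), []):
                            prob = p * lp * rp
                            current = chart[(i, j)].get(parent, (0.0, None))
                            if prob > current[0]:
                                chart[(i, j)][parent] = (prob, (parent, ltree, rtree))
    return chart[(0, n)].get(root)


parse = best_parse(["RightHand", "Cut", "Tomato"])
print(parse)
```

In a fuller system, the lexical probabilities would presumably come from the recognition modules rather than being fixed at 1.0, so that uncertain detections lower the score of any tree that depends on them.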