Skull echoes could become the new passwords for augmented-reality glasses

May 2, 2016

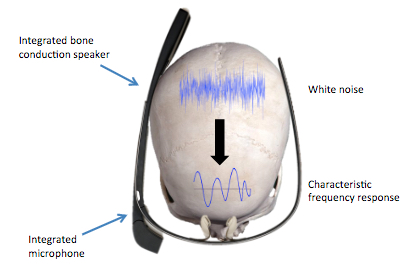

SkullConduct uses the bone conduction speaker and microphone integrated into some augmented-reality glasses, and treats the characteristic frequency response of an audio signal sent through the user’s skull as a biometric. (credit: Stefan Schneegass et al./Proc. ACM SIGCHI Conference on Human Factors in Computing Systems)

German researchers have developed a biometric system called SkullConduct that uses bone conduction of sound through the user’s skull for secure user identification and authentication on augmented-reality glasses, such as Google Glass, Meta 2, and HoloLens.

SkullConduct uses the microphone already built into many of these devices and adds electronics (such as a chip) to analyze the frequency response of sound after it travels through the user’s skull. The researchers, at the University of Stuttgart, Saarland University, and Max Planck Institute for Informatics, found that individual differences in skull anatomy result in highly person-specific frequency responses that can be used as a biometric.

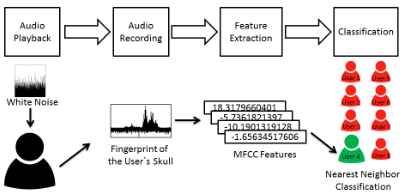

The recognition pipeline used to authenticate users: (1) white noise is played back using the bone conduction speaker, (2) the user’s skull influences the signal in a characteristic way, (3) MFCC features are extracted, and (4) a 1NN (nearest-neighbor) algorithm is used for classification. (credit: Stefan Schneegass et al./Proc. ACM SIGCHI Conference on Human Factors in Computing Systems)

The system combines Mel Frequency Cepstral Coefficient (MFCC) features, a feature-extraction method used in automatic speech recognition, with a computationally lightweight 1NN (one-nearest-neighbor) classifier that can run directly on the augmented-reality device.
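The four pipeline steps can be sketched in a few lines of plain Python. This is an illustrative toy, not the researchers' implementation: the "skull" is stood in for by a hypothetical two-tap FIR filter per user, and the sample rate, frame length, filter counts, user names, per-frame averaging, and Euclidean distance are all assumptions chosen to keep the example small. The classifier is the 1NN (nearest-neighbor) method named in the paper's abstract.

```python
import cmath
import math
import random

def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def power_spectrum(frame):
    """Naive DFT power spectrum (bins 0..N/2) of a Hamming-windowed frame."""
    n = len(frame)
    windowed = [x * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)))
                for i, x in enumerate(frame)]
    spectrum = []
    for k in range(n // 2 + 1):
        s = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(windowed))
        spectrum.append(abs(s) ** 2 / n)
    return spectrum

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters spaced evenly on the mel scale."""
    max_mel = hz_to_mel(sample_rate / 2)
    # n_filters + 2 edge points, expressed as fractional FFT bin positions
    edges = [mel_to_hz(max_mel * i / (n_filters + 1)) * n_fft / sample_rate
             for i in range(n_filters + 2)]
    bank = []
    for j in range(1, n_filters + 1):
        lo, mid, hi = edges[j - 1], edges[j], edges[j + 1]
        row = []
        for k in range(n_fft // 2 + 1):
            if lo < k <= mid:
                row.append((k - lo) / (mid - lo))
            elif mid < k < hi:
                row.append((hi - k) / (hi - mid))
            else:
                row.append(0.0)
        bank.append(row)
    return bank

def mfcc(frame, sample_rate, n_filters=12, n_coeffs=8):
    """Step 3: log mel-band energies followed by a DCT-II."""
    spectrum = power_spectrum(frame)
    bank = mel_filterbank(n_filters, len(frame), sample_rate)
    log_energy = [math.log(sum(w * p for w, p in zip(row, spectrum)) + 1e-10)
                  for row in bank]
    return [sum(e * math.cos(math.pi * c * (m + 0.5) / n_filters)
                for m, e in enumerate(log_energy))
            for c in range(n_coeffs)]

def mean_mfcc(signal, sample_rate, frame_len=128):
    """Average the per-frame MFCC vectors into one template (an assumption)."""
    frames = [signal[i:i + frame_len]
              for i in range(0, len(signal) - frame_len + 1, frame_len)]
    vectors = [mfcc(f, sample_rate) for f in frames]
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def simulate_recording(taps, n_samples, rng):
    """Steps 1-2: white noise shaped by a hypothetical per-user FIR filter."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    return [sum(t * noise[i - d] for d, t in enumerate(taps) if i - d >= 0)
            for i in range(n_samples)]

def nearest_neighbor(query, enrolled):
    """Step 4: return (label, distance) of the closest enrolled template."""
    return min(((label, math.dist(query, template))
                for label, template in enrolled), key=lambda pair: pair[1])

SAMPLE_RATE = 8000  # assumed for illustration
rng = random.Random(0)
# Hypothetical users: one low-pass-like and one high-pass-like "skull".
skulls = {"alice": [1.0, 0.9], "bob": [1.0, -0.9]}
enrolled = [(name, mean_mfcc(simulate_recording(taps, 2048, rng), SAMPLE_RATE))
            for name, taps in skulls.items()]

# A fresh recording from "bob" should land closest to bob's template.
probe = mean_mfcc(simulate_recording(skulls["bob"], 2048, rng), SAMPLE_RATE)
label, distance = nearest_neighbor(probe, enrolled)
print(label, round(distance, 3))
```

Identification picks the closest enrolled template. For authentication, the same distance would instead be compared against a threshold for the claimed identity.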

The researchers also conducted a controlled experiment with ten participants, which showed that the skull-based frequency response serves as a robust biometric, even when the device is taken off and put back on multiple times. The system identified users with 97.0% accuracy and authenticated them with an equal error rate of 6.9%.
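The 6.9% figure is what the paper's abstract calls an equal error rate: the operating point at which the rate of impostors wrongly accepted equals the rate of genuine users wrongly rejected. A minimal sketch of how such a number can be computed from match distances follows; the distance values are invented purely for illustration and are not data from the study, and the function names are hypothetical.

```python
def far_frr(genuine, impostor, threshold):
    """False accept rate and false reject rate at a distance threshold.

    A probe is accepted when its distance to the claimed template
    is at or below the threshold.
    """
    far = sum(d <= threshold for d in impostor) / len(impostor)
    frr = sum(d > threshold for d in genuine) / len(genuine)
    return far, frr

def equal_error_rate(genuine, impostor):
    """Sweep candidate thresholds and return (eer, threshold) where
    FAR and FRR are closest to each other."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, (far + frr) / 2)
    return best[2], best[1]

# Made-up distances: genuine attempts tend to be closer than impostor ones.
genuine_distances = [0.8, 1.0, 1.1, 1.3, 2.4]
impostor_distances = [1.2, 2.0, 2.2, 2.6, 3.0]

eer, threshold = equal_error_rate(genuine_distances, impostor_distances)
print(f"EER = {eer:.1%} at threshold {threshold}")  # EER = 20.0% at threshold 1.3
```

Lowering the threshold trades false accepts for false rejects, and raising it does the reverse. The equal error rate summarizes that trade-off in a single number.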

It’s not as accurate as the CEREBRE biometric system (see You can now be identified by your ‘brainprint’ with 100% accuracy), but it’s low-cost and portable, and it doesn’t require a complex system or extensive user testing.

Abstract of SkullConduct: Biometric User Identification on Eyewear Computers Using Bone Conduction Through the Skull

Secure user identification is important for the increasing number of eyewear computers but limited input capabilities pose significant usability challenges for established knowledge-based schemes, such as passwords or PINs. We present SkullConduct, a biometric system that uses bone conduction of sound through the user’s skull as well as a microphone readily integrated into many of these devices, such as Google Glass. At the core of SkullConduct is a method to analyze the characteristic frequency response created by the user’s skull using a combination of Mel Frequency Cepstral Coefficient (MFCC) features as well as a computationally light-weight 1NN classifier. We report on a controlled experiment with 10 participants that shows that this frequency response is person-specific and stable – even when taking off and putting on the device multiple times – and thus serves as a robust biometric. We show that our method can identify users with 97.0% accuracy and authenticate them with an equal error rate of 6.9%, thereby bringing biometric user identification to eyewear computers equipped with bone conduction technology.