System predicts 85 percent of cyber attacks using input from human experts

April 25, 2016

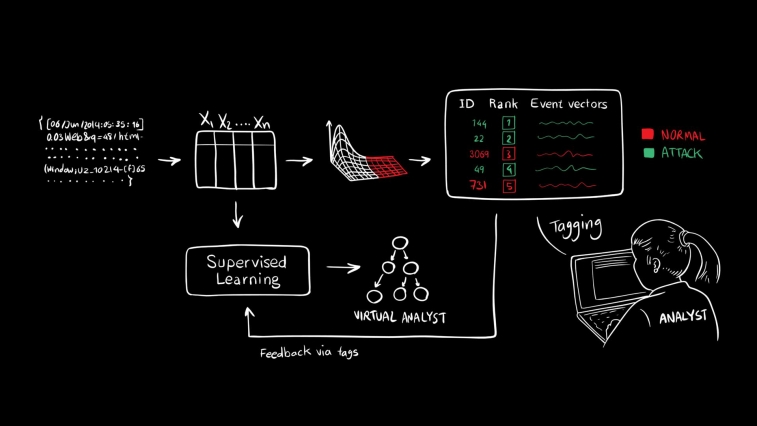

AI2 combs through data and detects suspicious activity using unsupervised machine learning. It then presents this activity to human analysts, who confirm which events are actual attacks, and incorporates that feedback into its models for the next set of data. (credit: Kalyan Veeramachaneni/MIT CSAIL)

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the machine-learning startup PatternEx have developed an AI platform called AI2 that predicts cyber attacks significantly better than existing systems by continuously incorporating input from human experts. (The name AI2 refers to merging AI with “analyst intuition”: rules created by living experts.)

The team showed that AI2 can detect 85 percent of attacks, about three times better than previous benchmarks, while also reducing the number of false positives by a factor of 5. The system was tested on 3.6 billion pieces of data known as “log lines,” which were generated by millions of users over a period of three months.
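To make the two headline numbers concrete, here is a minimal sketch of how a detection rate and a false-positive count can be computed for one batch of flagged events. It assumes that "detection rate" means the share of true attacks that were flagged (recall); the article does not spell out the exact definition, and the function names and event IDs are made up for illustration.

```python
def detection_rate(flagged, attacks):
    """Share of true attacks that appear among the flagged events (recall)."""
    attacks = set(attacks)
    if not attacks:
        return 0.0
    return len(attacks & set(flagged)) / len(attacks)


def false_positives(flagged, attacks):
    """Number of flagged events that were not actual attacks."""
    return len(set(flagged) - set(attacks))


# Toy event IDs, invented for this example only.
flagged_events = {1, 2, 3, 4}
true_attacks = {2, 4, 7, 9}
rate = detection_rate(flagged_events, true_attacks)
wasted_reviews = false_positives(flagged_events, true_attacks)
```

In this toy batch, two of the four real attacks were flagged and two of the four flagged events were false alarms. A system can look better on one number while getting worse on the other, which is why the article reports both.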

To predict attacks, AI2 combs through data and detects suspicious activity by clustering the data into meaningful patterns using unsupervised (automatic, no human help) machine learning. It then presents this activity to human analysts, who confirm which events are actual attacks, and incorporates that feedback into its models for the next set of data.

“You can think about the system as a virtual analyst,” says CSAIL research scientist Kalyan Veeramachaneni, who developed AI2 with Ignacio Arnaldo, a chief data scientist at PatternEx and a former CSAIL postdoc. “It continuously generates new models that it can refine in as little as a few hours, meaning it can improve its detection rates significantly and rapidly.”

Creating cybersecurity systems that merge human- and computer-based approaches is tricky, partly because of the challenge of manually labeling cybersecurity data for the algorithms. For example, let’s say you want to develop a computer-vision algorithm that can identify objects with high accuracy. Labeling data for that is simple: Just enlist a few human volunteers to label photos as either “objects” or “non-objects,” and feed that data into the algorithm.

But for a cybersecurity task, the average person on a crowdsourcing site like Amazon Mechanical Turk simply doesn’t have the skillset to apply labels like “DDoS” or “exfiltration attacks,” says Veeramachaneni. “You need security experts.” That opens up another problem: Experts are busy and expensive, so an effective machine-learning system has to be able to improve itself without overwhelming its human overlords.

Merging methods

AI2’s secret weapon is that it fuses together three different unsupervised-learning methods, and then shows the top events to analysts for them to label. It then builds a supervised model that it can constantly refine through what the team calls a “continuous active learning system.”
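The idea of fusing several unsupervised detectors and surfacing only the top events can be sketched roughly as follows. The article does not name the three methods, so the scorers here (z-score, median absolute deviation, nearest-neighbor gap) are simple stand-ins, and rank averaging is one plausible fusion rule, not necessarily the one AI2 uses. All function names are hypothetical.

```python
from statistics import mean, median, pstdev


def zscore_scores(values):
    """Distance from the mean in units of standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [abs(v - mu) / sigma if sigma else 0.0 for v in values]


def mad_scores(values):
    """Distance from the median in units of median absolute deviation."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    return [abs(v - med) / mad if mad else 0.0 for v in values]


def nearest_neighbor_scores(values):
    """Gap to the closest other value; isolated points score high."""
    return [
        min(abs(v - w) for j, w in enumerate(values) if j != i)
        for i, v in enumerate(values)
    ]


def to_ranks(scores):
    """Map raw scores to ranks in [0, 1] so detectors become comparable."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    for position, index in enumerate(order):
        ranks[index] = position / (len(scores) - 1)
    return ranks


def fused_scores(values):
    """Average the per-detector ranks (assumed fusion rule)."""
    detectors = (zscore_scores, mad_scores, nearest_neighbor_scores)
    rank_lists = [to_ranks(detector(values)) for detector in detectors]
    return [mean(column) for column in zip(*rank_lists)]


def top_events(values, k):
    """Indices of the k most anomalous values, most anomalous first."""
    scores = fused_scores(values)
    return sorted(range(len(values)), key=lambda i: scores[i], reverse=True)[:k]
```

For example, `top_events([10, 11, 9, 10, 12, 95, 10, 11], 1)` picks index 5, the value 95, because every detector ranks it highest. The sketch needs at least two values. Converting scores to ranks before averaging keeps a detector with a large numeric range from drowning out the others.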

Specifically, on day one of its training, AI2 picks the 200 most abnormal events and gives them to the expert. As it improves over time, it identifies more and more of the events as actual attacks, meaning that in a matter of days, the analyst may only be looking at 30 or 40 events a day.
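The daily feedback loop described above might look something like this sketch. On the first day there are no labels, so events are ranked by a plain outlier score; once the analyst has confirmed at least one attack and one benign event, a supervised score takes over. The nearest-centroid model, the two-dimensional feature points, and the `analyst` callback are all assumptions made for illustration and are not taken from the AI2 paper.

```python
from math import dist
from statistics import mean


def centroid(points):
    """Coordinate-wise mean of a list of equal-length tuples."""
    return tuple(mean(axis) for axis in zip(*points))


def run_day(batch, labeled, analyst, budget):
    """Show the analyst the `budget` highest-priority events and store labels.

    `labeled` is a list of (point, is_attack) pairs that grows across days.
    Returns the events the analyst confirmed as attacks today.
    """
    attacks = [p for p, is_attack in labeled if is_attack]
    benign = [p for p, is_attack in labeled if not is_attack]
    if attacks and benign:
        # Supervised stage: prefer events close to known attacks
        # and far from known benign activity.
        attack_center, benign_center = centroid(attacks), centroid(benign)

        def priority(point):
            return dist(point, benign_center) - dist(point, attack_center)
    else:
        # Unsupervised stage: prefer events far from the bulk of today's data.
        center = centroid(batch)

        def priority(point):
            return dist(point, center)

    queue = sorted(batch, key=priority, reverse=True)[:budget]
    confirmed = []
    for point in queue:
        is_attack = analyst(point)  # human judgment, simulated in tests
        labeled.append((point, is_attack))
        if is_attack:
            confirmed.append(point)
    return confirmed
```

A caller would keep one `labeled` list and call `run_day` once per batch, passing a review budget such as the 200 events mentioned above. Because the supervised score is rebuilt from all labels collected so far, every analyst decision influences which events are queued the next day.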

“This paper brings together the strengths of analyst intuition and machine learning, and ultimately drives down both false positives and false negatives,” says Nitesh Chawla, the Frank M. Freimann Professor of Computer Science at the University of Notre Dame. “This research has the potential to become a line of defense against attacks such as fraud, service abuse and account takeover, which are major challenges faced by consumer-facing systems.”

The team says that AI2 can scale to billions of log lines per day, transforming the pieces of data on a minute-by-minute basis into different “features,” or discrete types of behavior that are eventually deemed “normal” or “abnormal.”
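As a rough illustration of turning log lines into minute-by-minute features, the sketch below groups lines by user and minute and derives a few behavioral counts. The log format (`timestamp user ip status`) and the three features are invented for this example; the article does not describe AI2's actual log schema or feature set.

```python
from collections import defaultdict
from datetime import datetime


def minute_features(log_lines):
    """Aggregate raw log lines into per-user, per-minute behavioral features."""
    buckets = defaultdict(lambda: {"requests": 0, "failures": 0, "ips": set()})
    for line in log_lines:
        # Hypothetical format: ISO timestamp, user, source IP, HTTP status.
        timestamp, user, ip, status = line.split()
        minute = datetime.fromisoformat(timestamp).replace(second=0, microsecond=0)
        bucket = buckets[(user, minute)]
        bucket["requests"] += 1
        # Count 4xx and 5xx responses as failures.
        bucket["failures"] += status.startswith(("4", "5"))
        bucket["ips"].add(ip)
    return {
        key: {
            "requests": b["requests"],
            "failure_rate": b["failures"] / b["requests"],
            "distinct_ips": len(b["ips"]),
        }
        for key, b in buckets.items()
    }


sample_lines = [
    "2016-04-25T10:15:02 alice 10.0.0.1 200",
    "2016-04-25T10:15:40 alice 10.0.0.2 401",
    "2016-04-25T10:16:05 alice 10.0.0.1 200",
    "2016-04-25T10:15:59 bob 10.0.0.9 500",
]
features = minute_features(sample_lines)
```

Each resulting feature row, such as a user's request count, failure rate, and number of distinct IP addresses within one minute, is the kind of record an outlier detector could then score as normal or abnormal.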

“The more attacks the system detects, the more analyst feedback it receives, which, in turn, improves the accuracy of future predictions,” Veeramachaneni says. “That human-machine interaction creates a beautiful, cascading effect.”

Veeramachaneni presented a paper about the system at last week’s IEEE International Conference on Big Data Security in New York City.

MITCSAIL | AI2: an AI-driven predictive cybersecurity platform

Abstract of AI2: Training a big data machine to defend

We present an analyst-in-the-loop security system, where analyst intuition is put together with state-of-the-art machine learning to build an end-to-end active learning system. The system has four key features: a big data behavioral analytics platform, an ensemble of outlier detection methods, a mechanism to obtain feedback from security analysts, and a supervised learning module. When these four components are run in conjunction on a daily basis and are compared to an unsupervised outlier detection method, detection rate improves by an average of 3.41×, and false positives are reduced fivefold. We validate our system with a real-world data set consisting of 3.6 billion log lines. These results show that our system is capable of learning to defend against unseen attacks.