The 21st Century: a Confluence of Accelerating Revolutions

May 15, 2001 by Ray Kurzweil

In this keynote given at the 8th Annual Foresight Conference, Raymond Kurzweil discusses exponential trends in various technologies, and the double-edged sword accelerating technologies represent.

Originally presented November 2000. Published on KurzweilAI.net May 15, 2001.

It’s a pleasure to be here. I speak quite a bit about the future. It’s enjoyable to speak to a group that actually understands something about the technology and believes in the future.

I want to start off by sharing some views about the nature of technology trends. I was talking with Rob Freitas about his very compelling book, Nanomedicine, which I think will emerge ultimately as a classic book, much like Drexler’s book, establishing some of the foundation for the feasibility of an important technological concept. A lot of the scenarios that I talk about are dependent on the marriage of nanotechnology and biological systems which Rob Freitas has explored.

As Rob and I were talking, he, J. Storrs Hall, and Ralph Merkle were chiding me for the conversation I had with Bill Joy in a bar in Lake Tahoe, which, I guess, started Bill on a course that he’s still on. And I do want to address the issues that Bill Joy has raised.

Another person at that bar room conversation was John Searle, the philosopher. I thought, actually, that some of the interactions I had with John would end up being more controversial. But, not as many people are interested in the controversy about consciousness. Although I’ll touch on that as well, the nature of consciousness, and whether consciousness is limited to biological entities and so forth.

Now, Bill Joy and I are very often paired as the pessimist and the optimist, respectively, and I do take issue with Bill Joy’s conclusions. Particularly, I feel that his prescription for relinquishing broad areas of technology like nanotechnology is unrealistic, unfeasible, not desirable, and basically totalitarian.

But, if there’s more than just Bill and I on a panel, I very often end up defending Bill on the basic feasibility of the scenarios that he describes. The panel rarely gets beyond that point once somebody attacks the feasibility of the kinds of technological capabilities that Bill describes and worries about.

We were on a panel at Harvard a few weeks ago, and a Nobel Prize winning molecular scientist said, “Oh, we’re not going to see nanotechnology self-replicating devices for a hundred years. So, therefore, these scenarios are nothing to be concerned about.”

I said, “Actually, that’s not a bad estimate, with the amount of technical progress that we need to make to achieve that particular technological milestone at today’s rate of progress.” But, we are accelerating the rate of progress and, in fact, a lot of people are quick to agree to that. This is something that I’ve studied now for the last 20 years. And I can show you a lot of data about that.

According to my models, we’re actually doubling the rate of paradigm shift, or the rate of technical progress, every decade. So, we’ll see a hundred years of progress at today’s rate of progress in the next 20 or 25 years, because the nature of exponential growth is so explosive, particularly when you get to the knee of the curve. Exponential trends start out flat and stay near zero for a very long time, which is why nobody notices them. Then suddenly, they explode seemingly out of nowhere and get very powerful. And we’re now entering that knee of the curve. This field of nanotechnology is one example of that. But, there’s a whole confluence of revolutions, all of which are governed by what I call The Law of Accelerating Returns, which is exponential growth and exponential acceleration.

The very rate of progress itself is an example of this law. If you figure out the implications of doubling the rate of progress every decade, you’ll see that we will experience 20,000 years of technical progress at today’s rate of progress in the 21st century, not 100 years’ worth of progress. And that makes a huge difference.
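The arithmetic behind this claim can be sketched in a few lines of Python. The functions below are illustrative and are not Kurzweil’s actual model: `equivalent_years` assumes the rate of progress doubles smoothly every decade, and `equivalent_years_stepwise` assumes each decade runs at the rate reached at its end. Under those assumptions a century works out to roughly 15,000 to 20,000 years of progress at today’s rate, the same order of magnitude as the figure quoted in the talk.

```python
import math


def equivalent_years(span_years, doubling_period=10.0):
    """Years of progress, measured at today's rate, accumulated over
    span_years when the rate doubles every doubling_period years
    (continuous model: integral of 2**(t / doubling_period))."""
    k = math.log(2) / doubling_period
    return (math.exp(k * span_years) - 1.0) / k


def equivalent_years_stepwise(decades):
    """Same idea, but each decade is assumed to run at the rate reached
    at its end (2x today's rate in decade 1, 4x in decade 2, ...)."""
    return sum(10 * 2 ** d for d in range(1, decades + 1))


if __name__ == "__main__":
    print(round(equivalent_years(100)))    # continuous model, 21st century
    print(equivalent_years_stepwise(10))   # stepwise model, 21st century
```

The gap between the two results shows how sensitive such estimates are to modeling choices, which is one reason to read the talk’s number as an order-of-magnitude claim.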

A lot of people are quick to agree, “Oh, yeah, things are accelerating,” but relatively few serious observers or commentators about the future really truly internalize the implications of this. Otherwise thoughtful people will very often say, “Oh, that’s going to take 100 years.” Or, “We won’t see that for 300 years.” Those estimates do not reflect how much change we’ll see in those kinds of timeframes if you accept the exponential rate of change. But, if you have a linear view of the future–and I call that the intuitive linear view–then things look very different.

In centuries past, people didn’t even accept the fact that things were changing, because things for them had been pretty much as they were for their parents, and things in the present were very much like the past. And they expected the future to be the same. Today, it’s generally accepted that things are changing, that technology is driving change. But, very few people really internalize the implications of exponential growth.

We really only saw 25 years of progress at today’s rate of progress in the 20th century because we’ve been accelerating up to this point. The 21st century will be almost a thousand times greater in technical change. Of course, the 20th century was pretty dramatic as it is.

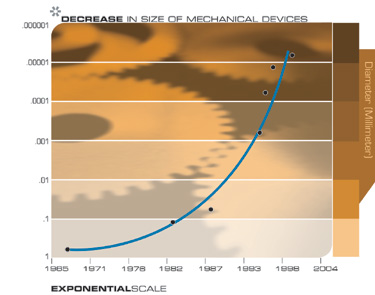

I want to share with you what I’ve explored in terms of technical change in different fields, some of which bears on the field of nanotechnology. I see this area of investigation less as a sharply defined field and more as the inevitable end result of another pervasive exponential trend, which is the trend toward miniaturization. That trend also goes back hundreds of years; but again, people didn’t notice it because it was so slow. Now it’s reaching the knee of the curve in terms of exponential growth and making things smaller. We’re currently shrinking technology by a factor of 5.6 per linear dimension per decade–pretty much the same figure in both electronic and mechanical systems.

And that gets us to nanotechnology ranges, which are not very well defined. I mean, how many nanometers is still considered nanotechnology? But, the speed at which technology is shrinking gets us there within a couple of decades, two or three decades, depending on where you draw the line. And that’s another reason why the relinquishment of broad areas like nanotechnology, which is Bill Joy’s prescription, is utterly unfeasible, or unrealistic, because it’s really not one field; it’s really the end result of thousands of very diverse projects which are incrementally making things a bit smaller, at a very gradual and continuous rate.
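As a rough check on the “two or three decades” estimate, the sketch below applies the shrink factor of 5.6 per linear dimension per decade quoted in the talk. The starting feature size of 180 nm and the two candidate thresholds (10 nm and 1 nm) are assumptions chosen only for illustration; they do not come from the talk.

```python
import math

SHRINK_FACTOR_PER_DECADE = 5.6  # per linear dimension, as quoted in the talk


def decades_to_reach(start_nm, target_nm, factor=SHRINK_FACTOR_PER_DECADE):
    """Decades needed to shrink a linear dimension from start_nm to
    target_nm if it shrinks by `factor` every decade."""
    return math.log(start_nm / target_nm) / math.log(factor)


if __name__ == "__main__":
    # Hypothetical starting point: 180 nm features (an assumption).
    for target in (10.0, 1.0):
        print(target, round(decades_to_reach(180.0, target), 2))
```

Where the line for “nanotechnology” is drawn changes the answer by more than a decade, which matches the speaker’s point that the range is not sharply defined.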

I’d like to explore some of those trends with you, and then put them together in some scenarios and discuss the kinds of impact this will have, because I see it as very pervasive. Technology is getting more and more intimate. It’s already expanding the reach and capability of the human civilization. And, ultimately, I think we’ll expand the capabilities of human beings themselves. By the end of the century, I don’t think there will be a clear distinction between you and a machine.

Most people today have a concept of a machine as something much more brittle, much less subtle, much less emotional or capable than human beings. But, that’s because the machines we interact with are still on the order of a million times simpler than the human brain and body. But, that won’t remain the case, particularly as we succeed in reverse engineering human systems; and, we’re well along that path. I’ll talk a little bit about that, later.

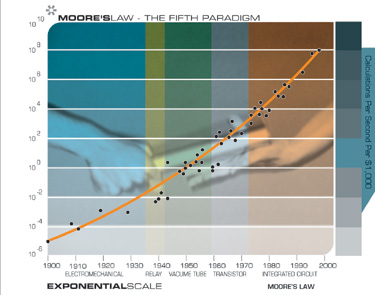

So, as we combine this field of nanotechnology with some of the other very powerful exponential trends, it will change the nature of most things, including what it means to be human. This exploration really started by my reflecting on Moore’s Law. And very often when I speak, particularly to educated lay audiences, I’ll ask how many people have heard of Moore’s Law. Even in non-technical audiences, I’ve stopped doing that, because pretty much everybody has heard of it.

But, four or five years ago, even in the technical audiences, only a scattering of hands would go up. So, there’s certainly been increasing recognition of that trend. But, that trend is not the only exponential trend. I began to think, “Why is computation growing exponentially?” I mean, Moore’s Law literally means the shrinking of transistors, so it is an example of miniaturization. It’s actually approximately every 24 months that there are twice as many transistors on an integrated circuit. That results in a quadrupling of computer power, because you can put twice as many on the chip, and they run twice as fast because the electrons have less distance to travel. This has been going on since integrated circuits were first introduced.
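The quadrupling argument can be written out as a small calculation. This is only a sketch of the reasoning as stated in the talk: it assumes transistor count doubles every 24 months and that clock speed scales by the same factor, which is a simplification. The function names are hypothetical.

```python
def transistor_factor(years, doubling_years=2.0):
    """Growth in transistors per chip after `years`."""
    return 2.0 ** (years / doubling_years)


def computing_power_factor(years, doubling_years=2.0):
    """Growth in computing power, assuming (as the talk does) that speed
    improves by the same factor as transistor count."""
    density = transistor_factor(years, doubling_years)
    speed = transistor_factor(years, doubling_years)  # assumed equal to density gain
    return density * speed


if __name__ == "__main__":
    print(computing_power_factor(2))    # one 24-month generation
    print(computing_power_factor(10))   # five generations
```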

Is this a special field? Why do we have exponential growth in computers? Is there something more profound going on? Or, is it, as Randy Isaac said, “…just a set of industry expectations now. We’re kind of used to it, so we know what we need to target two years out, four years out, in order to remain competitive and it becomes a self fulfilling prophecy.”

This actually becomes quite significant and relevant in terms of the question of whether exponential growth in computing will outlive Moore’s Law. Because increasingly, it’s being recognized that Moore’s Law, as a specific paradigm, is going to run out of steam in somewhere between 10 and 18 years. And now there is the consensus that by that time, the key features will be some reasonable number of atoms and then, they wouldn’t be able to shrink them anymore. The basic conclusion is, okay, that will be it.

So, I began to think about this, and have been thinking about this for 20 years. The book I wrote in 1990, The Age of Intelligent Machines, reflected on some of those implications. The first thing I realized is that Moore’s Law was not the first, but actually the fifth paradigm to produce exponential growth in computing. I know these charts are kind of small; you don’t really have to see the details. They all kind of go like this. (Laughter, as Ray mimics Ramona)

This goes back to the beginning of the 20th century. I put 49 famous computers on a graph–and this is an exponential graph. Most of the charts I’ll show you are exponential graphs, where a straight line means exponential growth. This chart goes back to the machines that were used in the 1890 census, which was the first time that data processing equipment was used in a census.

I’ve since then put a lot more computers on this chart. It just fills it in, but it’s the same trend. The first thing you notice is that the exponential growth of computing didn’t start with Moore’s Law. It goes back a hundred years. Also, Moore’s Law is the fifth paradigm. And, every time one paradigm ran out of steam, another paradigm was ready to pick up the pace. We had relay-based computers, say, in 1940, with the Robinson machine, which cracked the Germans’ Enigma code. It created a real dilemma for Winston Churchill because he got the information about which cities were to be bombed. He was told, for example, that Coventry was to be bombed, and he refused to warn them, because that would have tipped off the Germans that their seemingly impregnable code had been cracked.

Next, there was the vacuum tube computer, like the one that predicted the election of Eisenhower. NASA used transistor based machines in the 1960’s in the first space launches; I think they still use them. And there, at the upper right, is the PC you just bought your nephew for Christmas.

Every time a paradigm ran out of steam, another one stepped in. They were making vacuum tubes smaller. They finally couldn’t make them any smaller and still keep the vacuum. And then transistors came along, which had already been in development. Transistors are not just small vacuum tubes; it’s a completely different approach.

It’s clear to me, and I’m sure to many of you, where the sixth paradigm will come in. It will come from molecular computing. We live in a three dimensional world. Our brain uses a very bulky, inefficient form of information processing–I mean, it’s a different paradigm than the computers we’re used to; it is electro-chemical, it is digital controlled analog, it’s not simply parallel, and the neurons are very bulky structures. Most of the complexity has to do with maintaining their life processes.

They’re very slow. The reset time is typically on the order of five milliseconds, so they can do maybe 200 calculations per second in a neural connection, which is where most of the processing takes place. But, they’re organized in a massively parallel way in three dimensions. They get their very prodigious powers from that organization. We have about a hundred billion neurons, about a thousand connections from one neuron to another, so it’s a hundred trillion connections, a hundred trillion-fold parallelism. And multiplied by typically 200 calculations per second, it’s 20 million billion calculations per second, or 20 billion MIPS.
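The estimate above is straightforward multiplication, spelled out here with the figures given in the talk. Treating one neural “calculation” as one instruction when converting to MIPS is an assumption made only for the unit conversion; the constant and function names are hypothetical.

```python
NEURONS = 100_000_000_000          # about a hundred billion
CONNECTIONS_PER_NEURON = 1_000     # about a thousand
RESET_TIME_MS = 5                  # typical reset time quoted in the talk


def calcs_per_connection_per_second(reset_time_ms=RESET_TIME_MS):
    """One calculation per reset interval."""
    return 1_000 // reset_time_ms


def brain_calcs_per_second():
    """Connections times calculations per connection per second."""
    connections = NEURONS * CONNECTIONS_PER_NEURON
    return connections * calcs_per_connection_per_second()


def to_mips(calcs_per_second):
    """Convert to millions of instructions per second, assuming one
    calculation counts as one instruction."""
    return calcs_per_second // 1_000_000


if __name__ == "__main__":
    total = brain_calcs_per_second()
    print(total)           # 20 million billion
    print(to_mips(total))  # 20 billion MIPS
```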

And that’s conservatively high. We’re beginning to reverse engineer some portions of the human brain, and there’s been some very impressive work done in the auditory system. We are starting to understand the algorithms. And, we’re able to replicate them with far fewer computes than that theoretical calculation would imply.

By putting things in three dimensions we can keep that trend going actually well into the 21st century. And there are quite a few very interesting three dimensional computing technologies being explored.

We were talking last night about nanotubes. We have some challenges, but we do know how to do computing with nanotubes, and small electrical circuits have been created using nanotubes. They’re very strong, as you know. If we could build them in three dimensional lattices, they could have structural properties and computing properties. And if you figure out the theoretical potential of nanotubes, a one inch cube would have at least a million times the processing power of the human brain. There are at least a dozen different molecular computing initiatives. And I hear about new ones on a regular basis.

But, back to the exponential growth of computing. Generally, as one paradigm died, the other paradigms were already being experimented with. There were many competing projects. And it’s when the exponential growth of one paradigm ran out of steam that the other one took over and was able to continue the exponential growth.

Another interesting thing is that this is not a straight line in this chart; it’s another exponential. I have a mathematical model of why that takes place. All of these trends actually have double exponentials. The first exponential, in general terms, occurs because we get more and more powerful tools from one generation of technology, which enables us to create the next generation more quickly, and with greater capability. We work on technology problems in the exponential domain. We don’t try to add things linearly; we try to make things twice as powerful. We try to increase things by a multiple.

The second exponential really comes from the slowly exponentially growing resources for a particular type of technology. And since we are in early stages of this field, you can see that already, the resources for nanotechnology and for shrinking technology are growing exponentially. They have been for computing as well, which is why you see that slow second exponent there. We doubled the computing power every three years at the beginning of the century, every two years in the middle of the century. We’re now doubling it–Well, actually, two or three years ago it was every year; it’s now down to maybe every 11 months that we are doubling computing power.
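To make the shrinking doubling times concrete, the sketch below converts each doubling period mentioned above into an equivalent annual growth factor. It is plain arithmetic on the talk’s figures; the function name is hypothetical.

```python
def annual_growth_factor(doubling_months):
    """Growth per year implied by a given doubling time in months."""
    return 2.0 ** (12.0 / doubling_months)


if __name__ == "__main__":
    # Doubling times quoted in the talk: 3 years, 2 years, 1 year, 11 months.
    for months in (36, 24, 12, 11):
        print(months, round(annual_growth_factor(months), 3))
```

A falling doubling time means the annual growth factor itself keeps rising, which is what the “double exponential” refers to.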

We are increasing technology exponentially in many different fields. It’s not limited to computing power. It’s an inherent property of technology. Technology is an evolutionary process, and an evolutionary process grows exponentially. We certainly saw that in biological evolution. As I’ll show you at the end, there is a very clear indication that technological evolution is a continuation of the biological process that created the first technology-creating species in the first place.

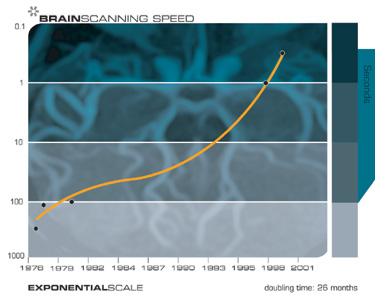

This chart shows brain scanning resolution, which has been growing exponentially. There’s already technology that can see inter-neuronal features with very high resolution, provided the scanning tip is near the tissues being scanned. Later, I’ll talk about a way in which we can combine nanotechnology, using some of the principles that Rob Freitas has talked about, to scan the brain from inside, which is how we ultimately will get a full brain scan.

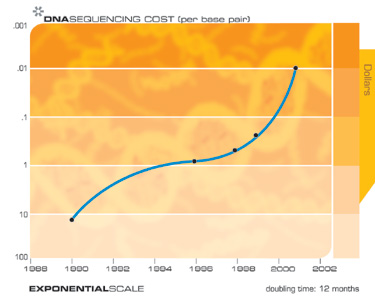

This chart shows genome sequencing: When the Genome Project was first announced 15 years ago it was considered a fringe project. There were many critics who pointed out that at the rate we could scan a genome, it would take 10,000 years to finish the project, but it actually finished a little bit ahead of schedule.

As you can see on this chart, we went from a cost of over $10 per base pair to under a penny per base pair in 10 years.
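A thousandfold cost reduction in ten years implies that the cost per base pair halved about once a year. The sketch below works that out from the two bounds quoted above; since the talk gives them only as “over $10” and “under a penny,” the result is an upper bound on the halving time. The function name is hypothetical.

```python
import math


def halving_time_years(start_cost, end_cost, span_years):
    """Average time for cost to halve, given start and end costs over a
    span and assuming a constant exponential decline."""
    halvings = math.log2(start_cost / end_cost)
    return span_years / halvings


if __name__ == "__main__":
    # $10 down to $0.01 per base pair over 10 years (bounds from the talk).
    print(round(halving_time_years(10.0, 0.01, 10.0), 2))
```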

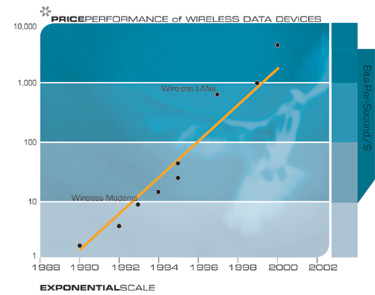

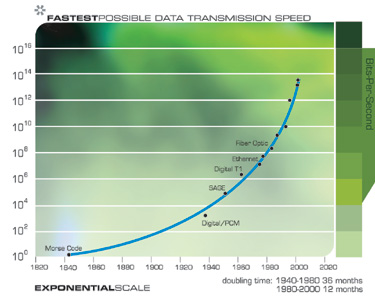

And the speed has been growing exponentially. The growth of telecommunications is well known. And this is not just a manifestation of Moore’s Law; this has to do with exponential growth in technologies other than packing more transistors on an integrated circuit. Developments in wireless communication, fiber optics, and other forms of communication technology are also growing exponentially.

And here, as I’m getting enough points on these graphs, you can actually see the stacked S curves. People very often challenge me and say, “Well, exponential growth can’t go on forever,” like rabbits in Australia; they eventually hit a wall because they run out of resources; they can’t grow exponentially anymore. And that is true for any particular paradigm. Like rabbits in Australia, they grow exponentially for a while, but in such small numbers you don’t notice it. Then there’s an explosion where exponential growth really takes off. And then they hit a wall and you get this sort of stretched out S curve, which is called a sigmoid. But, it’s a paradigm shift, shifting to some other paradigm or innovation, that keeps the law of accelerating returns and the exponential growth going.
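The idea that a sequence of saturating S curves can add up to ongoing exponential growth can be illustrated with a toy model. Everything in the sketch below is hypothetical: it uses logistic curves, gives each successive paradigm ten times the ceiling of the previous one, and spaces their midpoints ten time units apart. It is not fitted to any of the data shown in the talk.

```python
import math


def logistic(t, ceiling, midpoint, steepness=1.0):
    """One S curve: slow start, rapid growth, then saturation at `ceiling`."""
    return ceiling / (1.0 + math.exp(-steepness * (t - midpoint)))


def stacked_s_curves(t, paradigms=5, spacing=10.0, gain=10.0):
    """Sum of successive S curves. Paradigm i saturates at gain**i and
    reaches half of that ceiling at t = spacing * i."""
    return sum(logistic(t, gain ** i, spacing * i) for i in range(paradigms))


if __name__ == "__main__":
    # Sample the total at each paradigm's midpoint.
    for t in range(0, 41, 10):
        print(t, round(stacked_s_curves(t), 2))
```

Each individual curve flattens out, yet the sampled total grows by roughly a factor of ten per step, which would plot as a near-straight line on a logarithmic chart.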

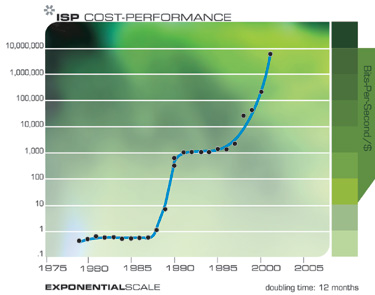

If you get enough points on the graph you can actually see these stacked up S curves. I’m getting an update now in the computational realm to actually see these small S curves every time we’ve had a paradigm shift. And here you can see that in this chart of ISP cost performance.

This is an interesting one because when I was writing The Age of Intelligent Machines in the late 1980s, I felt we would see an explosion of the Internet around the mid-1990s. This was very clear to me because I could see that the data was developing quite predictably.

These trends are predictable in the exponential domain. But, we don’t experience technology in the exponential domain; we experience it in the linear domain. This is how people have experienced the Internet. When the numbers are 10,000 doubling to 20,000 doubling to 40,000, scientists and the general public don’t notice it. But, when it gets to 40 million or 80 million people, then it becomes quite noticeable. Seemingly, out of nowhere, the Internet exploded in the mid-1990s, but it was quite predictable from the exponential chart.
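The contrast between the exponential and linear views can be seen by listing the doublings. The sketch below counts how many doublings separate 10,000 users from 40 million, using the example numbers from the talk; the function names are hypothetical.

```python
import math


def doublings_between(start, target):
    """Number of doublings needed to grow from start to target."""
    return math.log2(target / start)


def doubling_series(start, steps):
    """Values after 0, 1, ..., steps doublings."""
    return [start * 2 ** i for i in range(steps + 1)]


if __name__ == "__main__":
    print(round(doublings_between(10_000, 40_000_000), 2))
    # In linear terms, most of the growth arrives in the last few steps.
    print(doubling_series(10_000, 12))
```

Viewed on a logarithmic scale the twelve steps are evenly spaced; viewed linearly, the first nine or so are barely visible next to the last three.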

Wireless–same thing. Memory is a different manifestation of Moore’s Law, although, actually magnetic data storage is different; that’s more of a material science technology. But that’s also been growing exponentially.

If you take the model and project it out through the 21st century in terms of computation, computer technology is currently somewhere between an insect and a mouse brain, but not as well organized as an insect or a mouse brain, and not very good at pattern recognition. But, we are learning some of the chaotic processes that mammalian brains use for pattern recognition. That’s actually my field. The highly parallel architectures–and actually analog architectures–will be ideal for that. In theory, you can do anything in a digital domain that you can do in the analog domain because you can simulate analog processes to any desired level of accuracy.

But, it takes a lot more transistors, by a factor of maybe a thousand. It takes thousands of transistors to do a multiply digitally. You can do an analog multiply, in theory, with just a couple of transistors. Maybe you need some to set it up. But, it’s on the order of a thousand times fewer transistors for digital controlled analog processes. Particularly for things like neural nets and self-organizing paradigms, it is a very good and efficient engineering tradeoff, which is what biological evolution arrived at in the design of mammalian brains.

We’ll get to at least the raw capacity of the human brain by around 2020. By 2050, a thousand dollars of computing will equal all biological human brains on earth. That might be off by a couple of years. But, the 21st century won’t be wanting for computational resources. And I’ll show you a few implications outside of pure technologies.

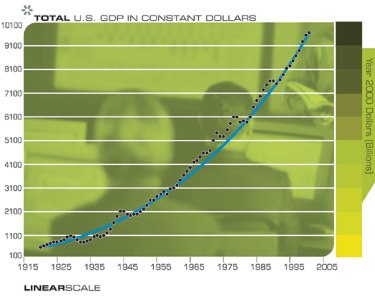

Our economy is driven by the power of technology. It’s been growing exponentially as well. I mean, Republicans, Democrats, recessions, you almost don’t see these in the trends. The economy just grows exponentially. The events that you can actually notice on this chart are the Depression and then the post-World War II reconstruction.

This chart is on a linear scale, and you can see a little more clearly the recessions; but they’re a relatively minor cycle built on basically an exponential growth. And it’s also true on a per capita basis, so this is not a function of growth in population.

And it’s also double exponential. The growth rate itself has been growing exponentially. It’s been very clear for the last 10 years. The models that are used, which I’ve looked at in some detail, by the Fed and by other policymakers, have all of these old economy factors of labor, capital investment, energy prices, and don’t model at all things like MIPS, megabytes and bandwidth, and the growth of information and knowledge, intellectual property, all of which are growing exponentially. And all of which are increasingly driving the economy. But, I won’t get further into that.

And, it affects education. We’ve been eliminating jobs at the bottom of the skill ladder for 200 years through increasing automation, and adding new jobs at the top of the skill ladder. The skill ladder has been moving up, and we’ve been growing. This chart shows that there has been growth at an exponential rate in education in constant dollars and per capita.

Even human longevity has been growing exponentially. In the 18th century, we added a few days to human life expectancy every year. In the 19th century we added a few weeks every year. We’re now adding about 120 days every year to human life expectancy. And there’s a consensus, at least among some of us, that within 10 years, from the revolutions in genomics and proteomics, rational drug design, cloning, and other biotechnology capabilities, we’ll be adding more than a year every year to human life expectancy.

So, if you can hang in there a little while longer, you actually will get to see how dramatic the 21st century will be.

Miniaturization: a trend that is quite relevant to this conference. We’ve been shrinking technology. These are exponential charts of electronic technology, private computers, and private mechanical devices. This is why I don’t see nanotechnology as just one sharply defined field–as if Drexler defined these capabilities, and now this field is only about building Drexlerian machines.

We’re really getting there through this pervasive, and actually very old trend. But a trend again that starts out very slowly, and then flows exponentially into smaller and smaller realms. And we’ll ultimately get to Drexlerian type machines, not because we’re building them directly, but because that’s the inevitable end result of this pervasive trend toward miniaturization. What Drexler showed was the feasibility of technology at those scales. I don’t believe we’ll actually stop at nanoscales, either.

And finally, I talked about the intuitive-linear perspective of history versus the historical exponential view. It’s just remarkable to me how many otherwise thoughtful scientists and observers and would-be prognosticators just assume a linear view of history and assume that things will carry on at today’s rate of progress, even though they’ve been around long enough to see that the rate of progress today is much faster than it was 10 years ago.

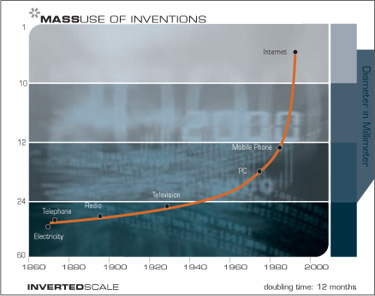

This chart shows the adoption rate of different technologies, as defined by adoption by a quarter of the American population.

This is a particularly interesting chart, because it’s a double exponential. The first paradigm shift was the creation of primitive cells and, shortly after that, the first stages of DNA. It took billions of years. Then, in the Cambrian Explosion, things moved a lot faster and the various body plans were devised in only tens of millions of years. By the time mammals came around, paradigm shifts were down to tens of millions of years, and for primates, millions of years. Homo sapiens evolved in hundreds of thousands of years. And at that point, the cutting edge of technology, technology to create more and more intelligent processes, really shifted from biological evolution to technological evolution by the first technology-creating species. Actually, there were a few such species, but there was only room for one surviving species in that ecological niche.

The first paradigm shifts in technology took tens of thousands of years–stone tools, fire, the wheel. A thousand years ago, a paradigm shift only took a few hundred years. In the 19th century, more was accomplished than in the previous eight centuries. During the first 20 years of the 20th century, we accomplished more than in the entire 19th century. Today a paradigm shift only takes a few years’ time. The World Wide Web didn’t exist 10 years ago; it really exploded in 1993, 1994. I first heard of it in 1994.

Sometimes people look at the data behind this chart and say, “Well, wait a second, this particular body plan didn’t take 10 million years; that took 40 million years. And the web wasn’t seven years; that went back to the ARPANET and that was 15 years.” But, this doesn’t change the data; you still get a straight line. The Cambrian Explosion didn’t take place in 10 years. And the web didn’t take 50 million years.

The acceleration of these evolutionary processes and technology as a continuation of evolution by other means is a very powerful, inexorable trend.

Let’s now talk about some of the implications of putting these trends together. I think by the end of this decade, computers as we know them will disappear. And that’s one of the steps along the way in miniaturization. We’ll have images written on our retinas by our eyeglasses. These are all technologies you can see today. They’re not ready for prime time. But, we’ll walk around with images written to our retinas from our eyeglasses and contact lenses. We’ll have a very high bandwidth connection to the Internet at all times, wirelessly. The electronics for all of that and all the computation will be so tiny they’ll be in your eyeglasses, woven into your clothing. You won’t be carrying around even Palm Pilot devices.

Computing will disappear into the environment. It won’t be inside our bodies yet for most people, but it will be very close and intimate. Going to a website will mean entering a shared virtual reality environment, at least encompassing the visual and auditory senses. So, for a meeting such as this, or just when getting together with someone else, you can be in a shared virtual reality environment, which might overlay real reality, or it might replace real reality, at least for the visual and auditory sense.

And increasingly, going to a website will mean choosing some virtual reality environment that may have some different properties than real reality.

The technology becomes particularly interesting as we go inside the body. We’ve already started that process. We actually are using devices throughout our bodies; virtually every biological system has some early stages of the use of artificial technologies. Particularly the brain–I have a friend who is profoundly deaf, but I can actually talk to him on the phone now because of an implant. There was a dramatic demonstration by a French doctor, Dr. Benabid, a few years ago. Parkinson’s patients were wheeled into a room. The doctor could turn implanted devices on and off from outside by radio control. When he had the device turned off, they were in a very rigid state common in advanced Parkinson’s patients. When he turned the machine on, they seemingly came alive, with these implants replacing the function of the particular cells that had been destroyed.

We’re increasingly using early stages of neuroimplants. And finding, in fact, that we can model and replace and simulate what specific brain areas do.

Sometimes people question whether or not it’s really feasible to reverse engineer the human brain. I think by 2030, we will have all the raw data. I’ll explain in a moment how I think we can get that. But, the question then comes, once you have the data, can you really make sense of it? Or, are we inherently, as human beings, incapable of understanding the organization of our own brains?

The reality is that the reverse engineering process is very hot on the heels of the availability of the data, neuron models and brain interconnection data. The brain is not one big tabula rasa or one big neural net. There are hundreds of specialized regions. In each region there are different types of wiring or interconnections. The neurons are structured differently. And they’re optimized for very different kinds of functions.

Lloyd Watts has developed a detailed model of the auditory system, or a significant portion of the auditory system, that shows five parallel pathways. And, he has identified about 20 different brain regions, which each do different types of processing, trying to identify the location of sounds in three dimensions, and there’s a different way of doing it left to right and up and down. The model is trying to separate different sounds that are coming from different sources, and identifying what they are.

Such modeling is used extensively in speech recognition. We’ve actually only been using the results of modeling two of those five pathways; some of the other pathways actually would help us, for example, to eliminate background noise by being able to identify a particular person’s voice coming from a particular location and eliminating other voices coming from other locations.

But, Lloyd has a very detailed model including the way in which the information is encoded in the inter-neuronal signals at each stage. And, he has implemented that model. The model does the same sorts of things with the same acuity as the human auditory system. It’s very impressive work. It also demonstrates that these simulations require on the order of 100 or 1,000 times less computation than the theoretical potential of these neurons. But, it also shows the feasibility of taking the detailed models coming from neuro-biology, and the detailed scans that are coming from brain scanning, and putting them together in very intricate models of the human brain. The human brain is not one organ. It’s really hundreds of specialized information processing organs.

We’ll get the brain scanning data, I think ultimately by scanning the brain from the inside, and Rob Freitas’ book will help us in this process. As we get to the third decade of this century, the 2020’s, we will be sending little devices inside our bloodstream. They will all be on a local area network, and they can be scanning from inside. As Rob points out, many of these will have medical applications.

We already have technology that, if you put the scanning probe right near the neuron, can see it with extremely high resolution, almost good enough today for what we need to accurately scan these internal connections. But, you have to be right near the neural features. So, if you send nano scanning devices through the capillaries of the brain, which by definition travel next to every neural feature, and send billions of them, with various ways of having them transmit the information and compiling it back, you can get very detailed brain scans from inside. At least, that’s one way of doing it.

Another way, of course, is to do a destructive scan, which is feasible today. There’s one condemned killer who actually agreed to have his brain scanned. You can see the 10 billion bytes of his brain on the internet. There’s also a 25 billion byte female companion up there as well.

A project at Carnegie Mellon is now scanning a mouse brain with 100 nanometer resolution, which is almost good enough for these applications. Destructive scanning actually is good enough also to give us the raw data from which to do the reverse engineering that will enable us to understand how the human brain works.

We already use these very early results. For example, in the mid-1990’s, some detailed models became available for the early stages of auditory processing in at least two of the five parallel pathways. We (at Kurzweil Applied Intelligence, Inc.) implemented those in speech recognition. Our competitors, Dragon and IBM, did as well, in the front end of speech recognition. And it definitely improves the quality of speech recognition. With these more detailed models now becoming available, we’ll be able to do an even better job of dealing with things like background noise.

So, as these models become available from reverse engineering the human brain and its neural processes, there’s a very rapid progression from the availability of the data, which is neuron models and interconnection data, to engineering models, to working simulations, to applying that information in the creation of more intelligent machines. We’re far from really understanding how the whole human brain fits together and works today. But because of the acceleration of technology, because of the ability to make things smaller, and ultimately to send them into the brain where we can get a very close look at how it works, we’ll have very good ideas and very accurate models by 2030 of how the brain works, which will give us great insight into ourselves, into human intelligence, and into the nature of human emotion.

Emotion, by the way, is not some kind of side issue that distracts us from the real capability of human intelligence. Emotion and our ability to recognize emotion and respond appropriately to it, is the most complex, richest, deepest, most subtle thing that we do. It’s a real exemplar of human intelligence. If we understand that better, we’ll have better insight into ourselves, we’ll have better insight into human dysfunction, and we’ll be able to create more and more intelligent machines.

Another scenario using these nanobots in 2030 is to send them through the bloodstream to take up positions around nerves and neurons within the brain; the communication to the neurons is actually wireless. So, if you send these nanobots and have them take their positions by all the nerve fibers coming from all of our senses, you can provide full immersion, shared virtual reality environments from within, encompassing all of the senses.

If you want to be in real reality, they just sit there and do nothing; if you want to enter virtual reality, they shut down the signals coming from your real senses, replace them with the signals that would be appropriate for the virtual environment. And then you can be an actor in the virtual environment. You can decide to move your hand in front of your face. Your real hand doesn’t move. It instead moves your virtual hand.

And you can see it, you can hear it. You can have other people in your shared virtual environment who also see you do those things. And you can have any kind of interaction with anyone, from business negotiations to sensual encounters, in virtual reality encompassing all of the senses. And these can also involve other neurological correlates of secondary reactions to our sensory experiences.

In my book, I talk about an open brain surgery where a young girl was conscious during the surgery. When they stimulated a specific spot in her brain, she began to laugh. They assumed, well, they must be triggering some kind of involuntary laugh reflex. But they quickly discovered that no, they actually were triggering the perception of humor. Whenever they stimulated this spot, she just found everything hilarious. Her typical comment was, “You guys are just so funny standing there.” And everything was a riot.

There are neurological correlates of our secondary experiences, from sexual pleasure to emotional reactions (some of which may be more complex than just stimulating a specific spot). Certainly things like fear and humor can be detected, and can also be triggered.

So, we’ll have people, rather than just sending out their grainy images as they do today from webcams in their living rooms, sending out their full stream of sensory experiences, as well as their emotional overlays. You can experience someone else’s life, à la John Malkovich, with these experience beamers circa 2030. Most of those experiences probably won’t be that interesting, so you might want to go to the archive where more interesting experiences will be saved.

This will be an important way in which we can get together and communicate. Certainly meetings like this, or any kind of interaction between two or more people, can be in shared environments. And we’re going to start down that path this decade with devices that are not inside our bodies and brains but are nonetheless very close to our bodies, as the technology gets more and more personal.

These nanobots can also expand our brain. Since they are communicating wirelessly, they can create a new connection, a virtual connection. The computing or thinking that takes place in our brain is in the interneuronal connections. A connection is not a simple wire. There are various complex things that happen to the signals. But, those can be modeled, and have been modeled quite successfully in a number of cases, showing the feasibility of this.

If we have two nanobots communicating wirelessly, they can create a new internal connection. So, now, instead of having 100 trillion connections in your brain, you can have 100 trillion and one. That won’t do you much good. And none of the connections are all that important. There’s no chief executive officer neuron or connection. Our brains are different than computers.

If you go open up your PC and cut one of the wires, you’ll probably break it. But, if you break any of the internal connections in your brain, or even thousands of them, it has absolutely no effect. It’s much more holographic in the sense that it is the patterns of information that are important. So, adding one connection won’t do any good, but you could double the connections and ultimately have another 100 trillion connections. That will make a major difference.

If you look at the trends and see the kind of capabilities that the technology will provide, you can see how we can expand human intelligence. We can multiply the capacity of our brains many-fold, ultimately by factors of thousands or millions. By the end of the century, it’s actually by factors of trillions. When you meet somebody in 2040, typically a person of biological origin, they will have thinking processes going on inside their brain that are biological as well as non-biological.

However, the biological thinking is flat. I mean, the human race right now, under 10 billion people, has approximately 10 to the 26th power calculations per second. And that’s a generous estimate. And that’s going to still be 10 to the 26th calculations per second at the end of the century.

Non-biological thinking is growing exponentially. It’s under that figure by a factor of more than a million; but it’s growing. It will cross over before 2030. And by the end of the century, non-biological thinking will be trillions of times more powerful than biological thinking. And people say, “Okay, it will be very powerful, but it won’t have the subtlety and the suppleness and the richness of human intelligence; they’ll just be extremely fast calculators.” But, that’s not true.

Because the knowledge, the richness, the intelligence of human intelligence is going to be understood and reverse engineered and utilized by these non-biological forms of thinking.
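As a rough check on the arithmetic behind these figures, here is a minimal sketch. It assumes a starting year of 2000 (the talk was given in November 2000), takes the gap as exactly a factor of a million, and assumes steady exponential growth with a single doubling time; none of these simplifications is stated in the talk, and the function names are illustrative only.

```python
import math

def doublings_needed(factor: float) -> float:
    """Number of doublings required to grow by the given factor."""
    return math.log2(factor)

def max_doubling_time(factor: float, years: float) -> float:
    """Longest doubling time (in years) that still achieves `factor` within `years`."""
    return years / doublings_needed(factor)

# Assumptions for illustration (not figures from the talk beyond the rough magnitudes):
START_YEAR = 2000       # assumed starting point
CROSSOVER_YEAR = 2030   # "It will cross over before 2030"
END_YEAR = 2100         # "by the end of the century"
GAP = 1e6               # non-biological thinking is under by "more than a million"
LEAD = 1e12             # "trillions of times more powerful"

crossover_doublings = doublings_needed(GAP)
crossover_doubling_time = max_doubling_time(GAP, CROSSOVER_YEAR - START_YEAR)
lead_doublings = doublings_needed(LEAD)
lead_doubling_time = max_doubling_time(LEAD, END_YEAR - CROSSOVER_YEAR)

print(f"Doublings to close a million-fold gap: {crossover_doublings:.1f}")
print(f"Doubling time needed to cross over by 2030: {crossover_doubling_time:.2f} years")
print(f"Doublings for a trillion-fold lead: {lead_doublings:.1f}")
print(f"Doubling time needed from 2030 to 2100: {lead_doubling_time:.2f} years")
```

Under these assumptions, closing a million-fold gap takes about 20 doublings, so crossing over within 30 years requires a doubling time of roughly a year and a half or less. A gap larger than a million, as the talk suggests, would require somewhat faster doubling.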

I’ll make one last point, and hopefully we will have a few minutes for questions. There are some natural advantages of non-biological intelligence. Machines can share their knowledge. If I spend years learning French, I can’t download that knowledge to you. We don’t have quick downloading ports on the patterns of information in our brains.

But, machines can share their knowledge. We spend years training one computer to understand human speech (and it learns because we’re using chaotic methods, self-organizing methods like neural nets and Markov models and genetic algorithms). As it learns the material, it’s developing a pattern.

Now, if you want your personal computer to understand you and speak to you, you don’t have to spend years training it; you can just load the pattern that we evolved in our research computer by loading the program. We don’t have the ways of doing that with biological intelligence. But, with non-biological intelligence, we’ll be able to do that. And, non-biological intelligence is much faster. Our electronics is already 10 million times faster than the ponderous speed of our internal connections.

And the accuracy of memory is far greater in computers. I mean, we’re hard pressed to remember a handful of phone numbers, whereas machines can remember billions of things quite accurately. If you combine those advantages with a real reverse engineering, and harness the subtlety and strength of pattern recognition that human intelligence represents, that will be a very formidable combination.

Thank you very much.

Question & Answer:

Q: You have been not merely an entrepreneur, an innovator, an engineer, but really an artist of technology. And I don’t want anyone to think that what I have to say is an attack on your character. (laughter)

You have done a tremendous amount of work here documenting the trends and making the case very convincingly for the overall trend in technology that you have perceived. And I generally share your perception, although I might argue with some of your timetables and so forth. As you’ve said, that’s not really important. What is important is the overall trends, the overall direction.

I myself work in technology. I’m a graduate student doing work in quantum computing. I’ve read your book, The Age of Spiritual Machines. I’ve also read a great deal of other material from what is a very large and growing cult, but known basically as extropians, trans-humanists–basically, technology worshippers. And you are now the most notable, most articulate, and I would say the leading exponent of that point of view.

Your message is not primarily technological, I think, although you’ve done such a good job here today with outlining the technical trends. But really, it is a moral and philosophical message that you’ve brought to the public. And I have to say I’m very pleased to have the opportunity to tell you here in front of this audience what I think of that.

In terms of a philosophy, a moral philosophy, it is the nadir, it is the new low, the new depths of depravity. Hitler only wanted to exterminate certain groups of people, but you have advocated the extermination or the gradual extinction, the elimination of the entire human race and its replacement by something else, technology.

Now, you have not proposed any death camps, you have not proposed the use of force; rather you use seductive language, and this notion of man/machine convergence and so on. By these means, by these gradual means, you propose that technology shall replace the human race. This is the most hideous notion that has ever been proposed in human history.

RK: Well, first I’d say, don’t shoot the messenger. But, I think you forcefully articulate a legitimate and, I think, fundamentally important point. And the question is whether what I’m talking about amounts to a reductionist point of view where I’m saying that people are just machines, or whether I’m really elevating the concept of machines to something profound.

I think the technology moves forward in incremental steps, each of which makes sense, and guides us along a certain path. And it’s not that we’re going to suddenly wake up in 2050 and realize that we’ve suddenly altered humanity in this profound way. We’re going to get there one step at a time.

Now, the technology for example, is not one field. Bill Joy’s idea of “let’s just shut down nanotechnology, we’ll just shut those couple of government labs and close down Zyvex and other companies” won’t work. It’s a pervasive trend of technology, to make things smaller.

We’ve been expanding the capabilities of the human civilization by amplifying our ability, including our direct personal ability, through technology. These trends are going to get more and more powerful. In my view, this is still the human civilization. And this is what it will look like as we go through this century. Of course, the story of the century hasn’t been written yet, and there are some dangers. And I think the dangers that Bill Joy has talked about are real, but I don’t think relinquishment is a viable or responsible direction to move in.

Q: Why not have humans maintain control of technology, instead of allowing technology to control human destiny?

RK: Well, let me finish. I think we are in control of it. I think, as we make technology smaller, to put it inside our clothing, inside our bodies and brains, which we’re doing already today, we make those decisions one step at a time. And my own view is that this will enhance what we value about humanity. And we’re making human civilization more capable, and more of what we value as human beings.

I really think it comes down to what I said earlier: the nature of the word “machines.” If you take what most people think of the word machines, and say we’re going to make human beings into machines, it is as depressing a vision as you describe. However, if you have a different view, that technology is really an expression of humanity, that we’re going to increase the power of our own ability, particularly at the cutting edge of human intelligence, which is art and music and emotional expression, that those things are going to become richer, and that we’re going to become more of what we value in humanity, then it’s a profoundly positive vision. I see it as the next step of evolution.

It’s not so much something I’m advocating; it is something that I think is going to happen. And we do need to guide it. The difference though between biological evolution and technological evolution is that biological evolution has been called “the blind watchmaker.” I don’t like that term because it’s insensitive to blind people. Call it “a mindless watchmaker,” whereas technology is guided by a thoughtful thesis. We do have the responsibility to guide this in ways that emphasize our human values, despite the fact that we don’t have an absolute consensus on what those are. We could talk a long time about this, but that’s my view.

Q: With this last time remaining, I’m interested in hearing what your conversation about consciousness with John Searle was about. And, I think, perhaps related to that is your talk about nanobots entering the body and triggering or turning off the functions of certain neurons. I’ll be in trouble for putting it quite this way, but–where is the locus of meaning? Where is any kind of meaning–and I don’t mean that to be a broadly philosophical question–and where is the bottom line of the experience? How do you internalize that? Once those nanobots are in the bloodstream–the status of the nanobot changes.

In a sensual experience, perhaps the relativity isn’t as significant as, say, in a business experience where people might want to have a bottom line accomplished. But, first, I guess if you could briefly talk about Searle’s position and your position on the issue of consciousness.

RK: Well, another profoundly important question. The locus of meaning is maybe a little bit different than the issue of consciousness. In my mind, meaning and some of the higher level attributes that human beings deal with are emergent properties of very profound subtle patterns of activity that take place in our brains.

And it’s really the output of the human brain. As I said before, the ability to recognize and respond appropriately to emotion is the most subtle thing we do. It’s not some side effect of human intelligence. I think any entity as complex as the human brain is going to necessarily have emotions, at least as a behavior.

But, your real question gets at trying to separate or focus on the difference between emotion and the ability to deal with it as an objective phenomenon, and what we actually experience. It’s one thing to look at this girl’s brain as some kind of machine: you trigger it, she starts laughing, and you deal with it as a cause and effect. And it’s another thing that she’s actually experiencing humor, or we experience fear or passion or any of the other things we experience. The nature of human consciousness.

John Searle says that I’m a reductionist, but I think that he’s a reductionist. In my mind it’s a deep mystery, not a scientific question. There is no scientific machine into which you can slide something and a light will go on, conscious or not conscious. We will have entities in 2030, 2040 that claim to be conscious. We have them today, but they’re not very convincing. But, in 2040, they’ll claim to be conscious and they’ll be very convincing. They’ll have all the subtle, emotional, and rich kinds of behaviors, the kind that convince us that other people are conscious.

But, some people will say, “Well, no, that entity is not conscious because it doesn’t squirt neurotransmitters, it’s not biological.” And there’s no way to resolve those questions. You can argue about it. Look inside its brain. It’s got the feedback loops and it’s modeling the environment, and it’s just as complex and rich as a human being. In fact, it’s a copy of a human brain, modified in certain ways.

But, no, it’s not biological, it can’t be conscious. There’s no absolute way we can resolve that. We have a consensus with each other that other human beings who don’t appear to be unconscious are conscious. But, as you go outside of shared human experience, that consensus breaks down. We argue about animals. That’s at the root of the animal rights issue. Are animals really experiencing the emotions they seem to be experiencing?

When we say that they do, even that’s a human-centric reaction because we’ve seen animals acting in a way that reminds us of human emotions. I think my cat is conscious, but other people don’t agree because they haven’t met my cat. But, this issue will be even more contentious when we get to non-biological entities, whose behavior is even closer to human behavior than that of animals.

But, there’s no absolute way to identify or to measure consciousness. It comes down to the basic difference between objective and subjective. Science is about objective measurement and the deductions we can make from that. And subjectivity is another word for consciousness. And there’s really no way to enter the subjective experience of another entity.

Now, how will we resolve this question? Well, we’ll resolve it politically. These machines will be so intelligent and so subtle, they’ll be able to convince most people that they’re conscious. If we don’t believe them, they’ll get mad at us. So, we’ll probably end up believing them. But, that’s a political prediction, not a real philosophical statement.

Searle says no, we can measure consciousness, and we’re going to discover some specific biological process in the brain that causes consciousness.

However, there’s no actual conception; if anything, consciousness is an emergent property of the supremely complex subtle patterns that take place in our brain. But, even that, in my view, can’t be absolutely measured in an objective way. In my view, Searle is a reductionist, thinking that it’s just some physical process. He actually says that consciousness is just another biological property and compares computer intelligence to computer simulation of digestion, and we’ll discover what the process of consciousness is. And then we’ll know that something is conscious.

So, I pointed out, “Well, if we discover that, why can’t we build a machine that has the same frame?”