This low-power chip could make speech recognition practical for tiny devices

March 17, 2017

MIT researchers have built a low-power chip specialized for automatic speech recognition. Whereas a cellphone running speech-recognition software might require about 1 watt of power, the new chip requires between 0.2 and 10 milliwatts, depending on the number of words it has to recognize: at least 100 times less.

According to Anantha Chandrakasan, the Vannevar Bush Professor of Electrical Engineering and Computer Science at MIT, whose group developed the new chip, that could translate to a power savings of 90 to 99 percent. Savings on that scale would make voice control practical for wearables (especially watches, earbuds, and glasses, where speech recognition is essential) and for other simple electronic devices used in the “internet of things” (IoT), including ones that must harvest energy from their environments or go months between battery charges.
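The power comparison above comes down to two simple ratios against the roughly 1 W phone baseline. The short Python sketch below works them out from the quoted figures only. Because it compares chip power alone, it yields savings of 99 percent or more; the 90 to 99 percent range quoted above is an estimate for real-world use that this arithmetic does not reproduce.

```python
# Chip-level power comparison using only the figures quoted in the article.
PHONE_ASR_WATTS = 1.0               # "about 1 watt" for a phone running ASR
CHIP_WATTS_RANGE = (0.2e-3, 10e-3)  # 0.2 mW to 10 mW, depending on vocabulary size

def reduction_factor(baseline_w: float, chip_w: float) -> float:
    """How many times less power the chip draws than the baseline."""
    return baseline_w / chip_w

def savings_percent(baseline_w: float, chip_w: float) -> float:
    """Power saved relative to the baseline, as a percentage."""
    return 100.0 * (1.0 - chip_w / baseline_w)

for chip_w in CHIP_WATTS_RANGE:
    print(f"{chip_w * 1e3:.1f} mW: "
          f"{reduction_factor(PHONE_ASR_WATTS, chip_w):,.0f}x less power, "
          f"{savings_percent(PHONE_ASR_WATTS, chip_w):.2f}% saved")
```

At 10 mW this gives a 100x reduction (99 percent saved); at 0.2 mW it gives 5,000x (99.98 percent saved).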

A typical speech-recognition neural network is too big to fit in a chip’s onboard memory. That is a problem, because fetching data from off-chip memory takes much more energy than retrieving it from local storage. So the MIT researchers’ design concentrates on minimizing the amount of data that the chip has to retrieve from off-chip memory.
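A toy energy model shows why the design focuses on off-chip traffic. Total read energy is the number of on-chip bits times the on-chip cost per bit, plus the number of off-chip bits times the off-chip cost per bit. The Python sketch below uses hypothetical costs (0.1 pJ/bit on-chip, 10 pJ/bit off-chip), a hypothetical 8 Mbit of weight data, and a hypothetical 4x compression ratio. None of these numbers come from the MIT design.

```python
# Toy memory-energy model. All per-bit costs and data sizes are HYPOTHETICAL
# placeholders, chosen only so that off-chip reads cost far more than on-chip
# reads; they are not measurements of the MIT chip.

ON_CHIP_PJ_PER_BIT = 0.1    # hypothetical on-chip SRAM read cost (pJ/bit)
OFF_CHIP_PJ_PER_BIT = 10.0  # hypothetical off-chip read cost (pJ/bit)

def read_energy_pj(on_chip_bits: int, off_chip_bits: int) -> float:
    """Total read energy in picojoules for the given on-/off-chip traffic."""
    return on_chip_bits * ON_CHIP_PJ_PER_BIT + off_chip_bits * OFF_CHIP_PJ_PER_BIT

WEIGHT_BITS = 8_000_000     # hypothetical weight data the workload must read
COMPRESSION = 4             # hypothetical compression ratio for off-chip storage

# Strategy A: read every weight bit directly from off-chip memory.
naive_pj = read_energy_pj(on_chip_bits=0, off_chip_bits=WEIGHT_BITS)

# Strategy B: stream a compressed copy from off-chip memory and decode it into
# a small on-chip buffer that the arithmetic reads from.
# (Decoding and buffer-write energy are ignored in this toy model.)
buffered_pj = read_energy_pj(on_chip_bits=WEIGHT_BITS,
                             off_chip_bits=WEIGHT_BITS // COMPRESSION)

print(f"A: {naive_pj / 1e6:.1f} uJ, B: {buffered_pj / 1e6:.1f} uJ")
```

Under these placeholder numbers, strategy B needs about 20.8 µJ against 80 µJ for strategy A. It performs more reads in total, but the expensive off-chip term shrinks fourfold.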

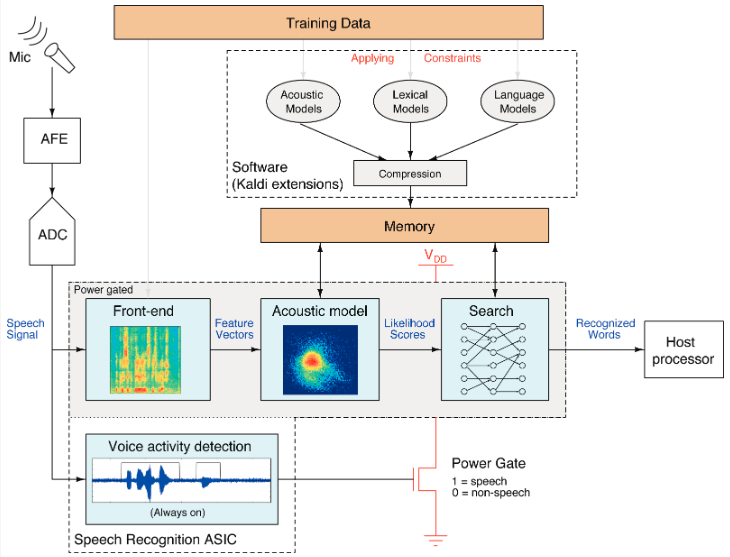

The new MIT speech-recognition chip uses an SRAM memory chip (instead of MLC flash, which requires more energy per bit); deep neural networks (a first in a standalone hardware speech recognizer), optimized for power consumption as low as 3.3 mW through limited network widths and quantized, sparse weight matrices; and other methods. The chip supports vocabularies of up to 145,000 words in real time. A simple “voice activity detection” circuit monitors ambient noise to determine whether it might be speech rather than just a rise in energy. (credit: Michael Price et al./MIT)
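The caption credits part of the savings to “quantized, sparse weight matrices,” which shrink the data that must be fetched from memory. The plain-Python sketch below illustrates that general idea. It assumes magnitude pruning, uniform symmetric quantization, and compressed sparse row (CSR) storage, which are common textbook choices and not a description of the MIT chip’s actual weight format. The pruning threshold, bit width, and example matrix are hypothetical.

```python
# Generic sketch: magnitude pruning + uniform symmetric quantization + CSR
# storage. NOT the MIT chip's documented weight format; the threshold and
# bit width used below are hypothetical.
from typing import List, Tuple

def compress(weights: List[List[float]], threshold: float, bits: int
             ) -> Tuple[List[int], List[int], List[int], float]:
    """Drop weights with |w| < threshold; quantize the rest to signed integer codes."""
    max_abs = max(abs(w) for row in weights for w in row)
    levels = 2 ** (bits - 1) - 1            # largest code magnitude, e.g. 7 for 4 bits
    scale = max_abs / levels if max_abs > 0 else 1.0
    values: List[int] = []                  # quantized codes of the kept weights
    col_idx: List[int] = []                 # column of each stored code
    row_ptr: List[int] = [0]                # start offset of each row in `values`
    for row in weights:
        for j, w in enumerate(row):
            if abs(w) >= threshold:         # magnitude pruning
                values.append(round(w / scale))
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr, scale

def sparse_matvec(values, col_idx, row_ptr, scale, x):
    """Compute y = W x touching only the stored (unpruned) weights."""
    y = []
    for r in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[k] * x[col_idx[k]]  # integer codes times activations
        y.append(acc * scale)                 # rescale once per output
    return y

def storage_bits(n_rows, n_cols, nnz, bits, ptr_bits=32):
    """Return (CSR bits, dense float32 bits) for a matrix of this shape."""
    idx_bits = max(1, (n_cols - 1).bit_length())
    sparse = nnz * (bits + idx_bits) + (n_rows + 1) * ptr_bits
    dense = n_rows * n_cols * 32
    return sparse, dense

W = [[0.9, -0.02, 0.0, 0.4],
     [0.01, -0.7, 0.05, 0.0],
     [0.0, 0.3, -0.8, 0.03]]
values, col_idx, row_ptr, scale = compress(W, threshold=0.1, bits=4)
x = [1.0, 1.0, 1.0, 1.0]
print(values, col_idx, row_ptr)
print(sparse_matvec(values, col_idx, row_ptr, scale, x))
print(storage_bits(3, 4, len(values), bits=4))
```

In this tiny example, 5 of 12 weights survive as 4-bit codes, and the matrix-vector product stays within about 0.05 of the dense result. The row-pointer overhead dominates at this size; the storage advantage grows with larger, sparser matrices.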

The new chip was presented in February at the 2017 International Solid-State Circuits Conference (ISSCC).

The research was funded through the Qmulus Project, a joint venture between MIT and Quanta Computer, and the chip was prototyped through the Taiwan Semiconductor Manufacturing Company’s University Shuttle Program.

Abstract of A Scalable Speech Recognizer with Deep-Neural-Network Acoustic Models and Voice-Activated Power Gating

The applications of speech interfaces, commonly used for search and personal assistants, are diversifying to include wearables, appliances, and robots. Hardware-accelerated automatic speech recognition (ASR) is needed for scenarios that are constrained by power, system complexity, or latency. Furthermore, a wakeup mechanism, such as voice activity detection (VAD), is needed to power gate the ASR and downstream system. This paper describes IC designs for ASR and VAD that improve on the accuracy, programmability, and scalability of previous work.
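The wakeup mechanism described in the abstract can be pictured as a duty-cycling calculation. The VAD runs on every audio frame, and the ASR draws power only while the VAD reports speech. The Python sketch below assumes per-frame VAD decisions are already available. It adds a hypothetical “hangover” of a couple of frames so that recognition is not cut off mid-word, and it uses made-up power figures (0.02 mW for the VAD, 5 mW for the ASR). It is not the circuit described in the paper.

```python
# Hypothetical model of voice-activated power gating: an always-on VAD wakes
# the ASR only for frames it flags as speech, plus a short hangover. All power
# figures and the hangover length are illustrative assumptions.
from typing import List

def gate_frames(vad_flags: List[bool], hangover: int) -> List[bool]:
    """Return per-frame ASR-enable signals derived from VAD decisions."""
    enabled, remaining = [], 0
    for is_speech in vad_flags:
        if is_speech:
            remaining = hangover          # re-arm the hangover counter
            enabled.append(True)
        elif remaining > 0:
            remaining -= 1                # keep ASR on briefly after speech ends
            enabled.append(True)
        else:
            enabled.append(False)         # ASR power-gated off
    return enabled

def average_power_mw(enabled: List[bool], p_vad_mw: float, p_asr_mw: float) -> float:
    """VAD power is paid on every frame; ASR power only on enabled frames."""
    if not enabled:
        return p_vad_mw
    duty = sum(enabled) / len(enabled)
    return p_vad_mw + duty * p_asr_mw

# Made-up 20-frame trace: a 3-frame utterance and a 1-frame utterance.
flags = [False] * 6 + [True] * 3 + [False] * 6 + [True] * 1 + [False] * 4
enabled = gate_frames(flags, hangover=2)
print(sum(enabled), "of", len(enabled), "frames run the ASR")
print(f"{average_power_mw(enabled, p_vad_mw=0.02, p_asr_mw=5.0):.2f} mW average")
```

In this trace the ASR runs on 8 of 20 frames, so the average is 0.02 + 0.4 × 5 = 2.02 mW, compared with 5.02 mW if the recognizer were always on. The fraction of time that speech is actually present dominates the result.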