Why doesn’t my phone understand me yet?

January 13, 2016

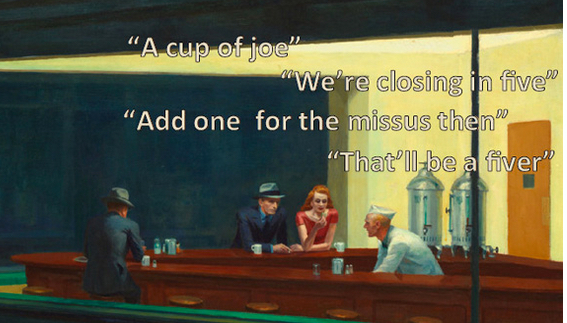

A computer would have a hard time understanding this conversation, but humans get it immediately. That’s because human communicators share a conceptual space or common ground that enables them to quickly interpret a situation. (credit for Edward Hopper’s Nighthawks artwork: the Art Institute of Chicago)

Because machines can’t develop a shared understanding of the people, place and situation (often including a long social history) that is key to human communication, say University of California, Berkeley, postdoctoral fellow Arjen Stolk and his Dutch colleagues.

In other words, machines don’t consider the context of a conversation the way people do.

The word “bank,” for example, would be interpreted one way if you’re holding a credit card but a different way if you’re holding a fishing pole, says Stolk. “Without context, making a ‘V’ with two fingers could mean victory, the number two, or ‘these are the two fingers I broke.’”

Stolk argues that scientists and engineers should focus more on the contextual aspects of mutual understanding, basing his argument on experimental evidence from brain scans that humans achieve nonverbal mutual understanding using unique computational and neural mechanisms. Some of the studies Stolk has conducted suggest that a breakdown in mutual understanding is also behind social disorders such as autism.

Brain scans pinpoint site for “meeting of minds”

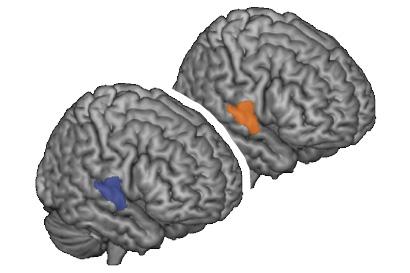

As two people conversing rely more and more on previously shared concepts, the same area of their brains, the right superior temporal gyrus, becomes more active (blue is activity in communicator, orange is activity in interpreter). This suggests that the region is key to mutual understanding, as people continually update their shared sense of the conversation’s context. (credit: Arjen Stolk, UC Berkeley)

To explore how brains achieve mutual understanding, Stolk created a game that requires two players to communicate the rules to each other solely by game movements, without talking or even seeing one another, eliminating the influence of language or gesture. He then scanned both players with fMRI (functional magnetic resonance imaging) as they communicated nonverbally with one another via computer.

He found that the same regions of the brain — located in the poorly understood right temporal lobe, just above the ear — became active in both players during attempts to communicate the rules of the game. Critically, the superior temporal gyrus of the right temporal lobe maintained a steady, baseline activity throughout the game but became more active when one player suddenly understood what the other player was trying to communicate. The brain’s right hemisphere is more involved in abstract thought and social interactions than the left hemisphere.

“These regions in the right temporal lobe increase in activity the moment you establish a shared meaning for something, but not when you communicate a signal,” Stolk said. “The better the players got at understanding each other, the more active this region became.”

This means that both players are building a similar conceptual framework in the same area of the brain, constantly testing one another to make sure their concepts align, and updating only when new information changes that mutual understanding. The results were reported in 2014 in the Proceedings of the National Academy of Sciences.

“It is surprising,” said Stolk, “that for both the communicator, who has static input while she is planning her move, and the addressee, who is observing dynamic visual input during the game, the same region of the brain becomes more active over the course of the experiment as they improve their mutual understanding.”

Statistical reasoning vs. human reasoning

Robots and computers, on the other hand, converse based on a statistical analysis of a word’s meaning, Stolk said. If you usually use the word “bank” to mean a place to cash a check, then that will be the assumed meaning in a conversation, even when the conversation is about fishing.

“Apple’s Siri focuses on statistical regularities, but communication is not about statistical regularities,” he said. “Statistical regularities may get you far, but it is not how the brain does it. In order for computers to communicate with us, they would need a cognitive architecture that continuously captures and updates the conceptual space shared with their communication partner during a conversation.”

Hypothetically, such a dynamic conceptual framework would allow computers to resolve the intrinsically ambiguous communication signals produced by a real person, including drawing upon information stored years earlier.
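The contrast Stolk draws can be illustrated with a toy sketch. It is not how Siri or any real assistant works, nor a model from Stolk’s studies; the sense labels, cue words and usage counts below are all hypothetical, chosen only to mirror the “bank” example. A frequency-only interpreter always returns the historically commonest sense, while a simple running record of the conversation lets context override that default.

```python
from collections import Counter

# Hypothetical sense inventory: cue words loosely tied to each sense of "bank".
SENSE_CUES = {
    "financial institution": {"credit", "card", "check", "cash", "loan"},
    "river edge": {"fishing", "pole", "river", "boat", "trout"},
}

# Hypothetical usage history: how often each sense was meant in the past.
PRIOR_COUNTS = Counter({"financial institution": 9, "river edge": 1})


def most_frequent_sense():
    """Frequency-only baseline: always pick the historically commonest sense."""
    return PRIOR_COUNTS.most_common(1)[0][0]


class SharedContext:
    """Toy running record of the words exchanged so far in a conversation."""

    def __init__(self):
        self.seen = Counter()

    def update(self, utterance):
        # Lowercase and strip simple punctuation before counting words.
        words = (w.strip(".,!?'\"").lower() for w in utterance.split())
        self.seen.update(w for w in words if w)

    def interpret(self):
        # Score each sense by how often its cue words appeared in the conversation.
        scores = {
            sense: sum(self.seen[cue] for cue in cues)
            for sense, cues in SENSE_CUES.items()
        }
        best = max(scores, key=scores.get)
        # With no contextual evidence at all, fall back on the frequency prior.
        return best if scores[best] > 0 else most_frequent_sense()


conversation = SharedContext()
conversation.update("I brought my fishing pole.")
conversation.update("Meet me at the bank.")

print(most_frequent_sense())     # financial institution
print(conversation.interpret())  # river edge
```

Even this sketch only counts words, so it remains a statistical shortcut; the architecture Stolk describes would have to track concepts shared between the two partners, not just the signals they exchange.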

Stolk’s studies have pinpointed other brain areas critical to mutual understanding. In a 2014 study, he used brain stimulation to disrupt a rear portion of the temporal lobe and found that it is important for integrating incoming signals with knowledge from previous interactions.

A later study found that in patients with damage to the frontal lobe (the ventromedial prefrontal cortex), decisions to communicate are no longer fine-tuned to stored knowledge about an addressee. Both studies could explain why such patients appear socially awkward in everyday interactions.

“Most cognitive neuroscientists focus on the signals themselves, on the words, gestures and their statistical relationships, ignoring the underlying conceptual ability that we use during communication and the flexibility of everyday life,” he said. “Language is very helpful, but it is a tool for communication, it is not communication per se. By focusing on language, you may be focusing on the tool, not on the underlying mechanism, the cognitive architecture we have in our brain that helps us to communicate.”

Stolk and his colleagues discuss the importance of conceptual alignment for mutual understanding in an opinion piece appearing Jan. 11 in the journal Trends in Cognitive Sciences, in hopes of moving the study of communication to a new level.

Stolk’s co-authors are Ivan Toni of the Donders Institute for Brain, Cognition and Behavior at Radboud University in the Netherlands, where the studies were conducted, and Lennart Verhagen of the University of Oxford.

Abstract of Conceptual Alignment: How Brains Achieve Mutual Understanding

We share our thoughts with other minds, but we do not understand how. Having a common language certainly helps, but infants’ and tourists’ communicative success clearly illustrates that sharing thoughts does not require signals with a pre-assigned meaning. In fact, human communicators jointly build a fleeting conceptual space in which signals are a means to seek and provide evidence for mutual understanding. Recent work has started to capture the neural mechanisms supporting those fleeting conceptual alignments. The evidence suggests that communicators and addressees achieve mutual understanding by using the same computational procedures, implemented in the same neuronal substrate, and operating over temporal scales independent from the signals’ occurrences.

Trends

State-of-the-art artificial agents such as the virtual assistants on our phones are powered by associative deep-learning algorithms. Yet those agents often make communicative errors that, if made by real people, would lead us to question their mental capacities.

We argue that these communicative errors are a consequence of focusing on the statistics of the signals we use to understand each other during communicative interactions.

Recent empirical work aimed at understanding our communicative abilities is showing that human communicators share concepts, not signals.

The evidence shows that communicators and addressees achieve mutual understanding by using the same computational procedures, implemented in the same neuronal substrate, and operating over temporal scales independent from the signals’ occurrences.