Brain responses to androids in the ‘uncanny valley’

July 15, 2011

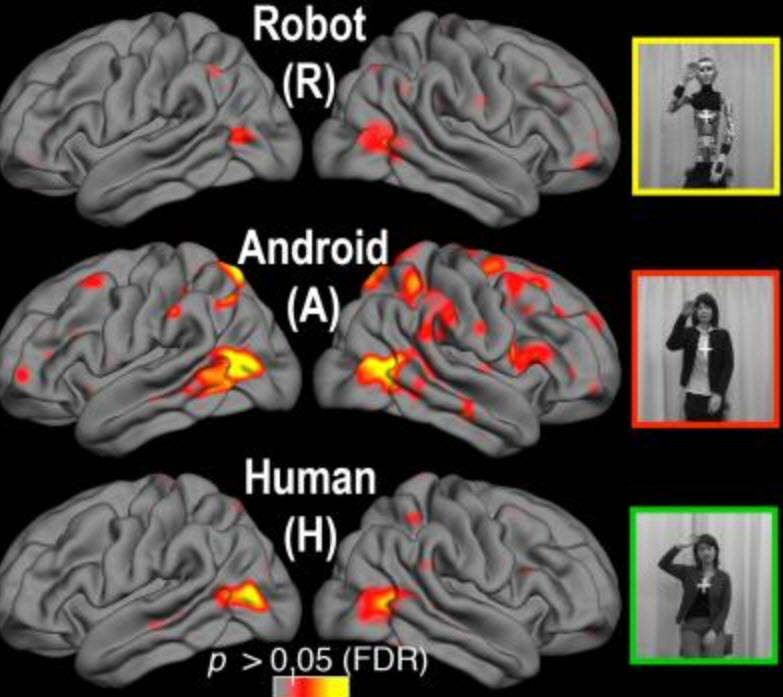

Brain response to videos of a robot, android and human (credit: Ayse Saygin, UC San Diego)

In an in-depth study of the “uncanny valley” phenomenon, an international team of researchers led by the University of California, San Diego has imaged the brains of people viewing videos of an “uncanny” android (compared to videos of a human and a robot-looking robot).

The term “uncanny valley” refers to the drop in likeability of an artificial agent, such as a robot, when it becomes too humanlike.

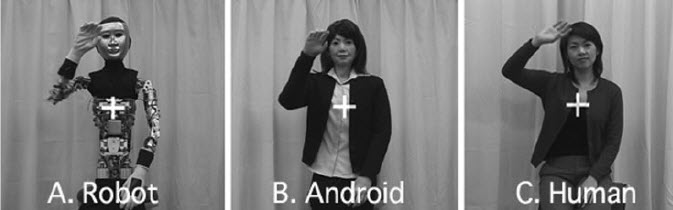

The researchers showed subjects 12 videos of the Japanese Repliee Q2 robot performing such ordinary actions as waving, nodding, taking a drink of water, and picking up a piece of paper from a table.

The subjects were also shown videos of the same actions performed by the human on whom the android was modeled, and by a stripped version of the android, skinned to its underlying metal joints and wiring so that its mechanics were exposed and it could no longer be mistaken for a human.

The researchers set up three conditions: (1) a human with biological appearance and movement; (2) a robot with mechanical appearance and mechanical movement; and (3) a human-seeming android with exactly the same mechanical movement as the robot.

Androids cause brain to light up

(Credit: Ayse Saygin, UC San Diego)

The researchers found the biggest difference in brain response was during the android condition, with differences in the parietal cortex, on both sides of the brain — specifically in the areas that connect the part of the brain’s visual cortex that processes bodily movements with the section of the motor cortex thought to contain mirror neurons (neurons also known as “monkey-see, monkey-do neurons” or “empathy neurons”).

Based on their interpretation of the fMRI results, the researchers say they saw, in essence, evidence of a mismatch. The brain “lit up” when the human-like appearance of the android and its robotic motion “didn’t compute.”

In other words, if it looks human and moves like a human, we are OK with that. If it looks like a robot and acts like a robot, we are OK with that, too; our brains have no difficulty processing the information.

The trouble arises when — contrary to a lifetime of expectations — appearance and motion are at odds, the researchers said.

Ref.: A. P. Saygin, T. Chaminade, H. Ishiguro, J. Driver, C. Frith, The thing that should not be: predictive coding and the uncanny valley in perceiving human and humanoid robot actions, Social Cognitive and Affective Neuroscience, 2011; DOI: 10.1093/scan/nsr025