Humanoid robot learns language like a baby [updated 6/15/2012]

June 14, 2012

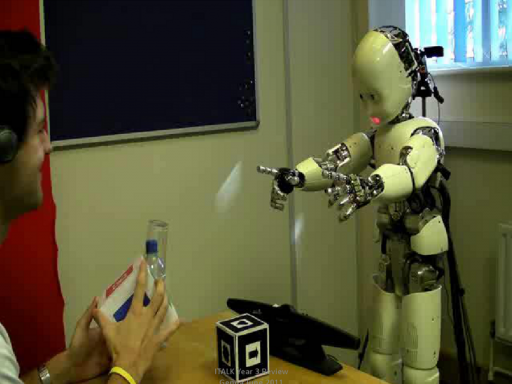

The scenario for the human-robot dialogue (credit: Caroline Lyon, Chrystopher L. Nehaniv, Joe Saunders/PLoS ONE)

With the help of human instructors, a robot has learned to talk like a human infant, picking up the names of simple shapes and colors, reports Wired Science.

“Our work focuses on early stages analogous to some characteristics of a human child of about 6 to 14 months, the transition from babbling to first word forms,” wrote computer scientists led by Caroline Lyon of the University of Hertfordshire.

Named DeeChee, the robot is an iCub, a three-foot-tall open-source humanoid machine designed to resemble a baby. The resemblance isn't merely aesthetic; it serves a functional purpose. Many researchers think certain cognitive processes are shaped by the bodies in which they occur: a brain in a vat would think and learn very differently from a brain in a body.

This field of study is called embodied cognition, and in DeeChee's case it applies to learning the building blocks of language, a process that in humans is shaped by an exquisite sensitivity to how frequently particular sounds occur.

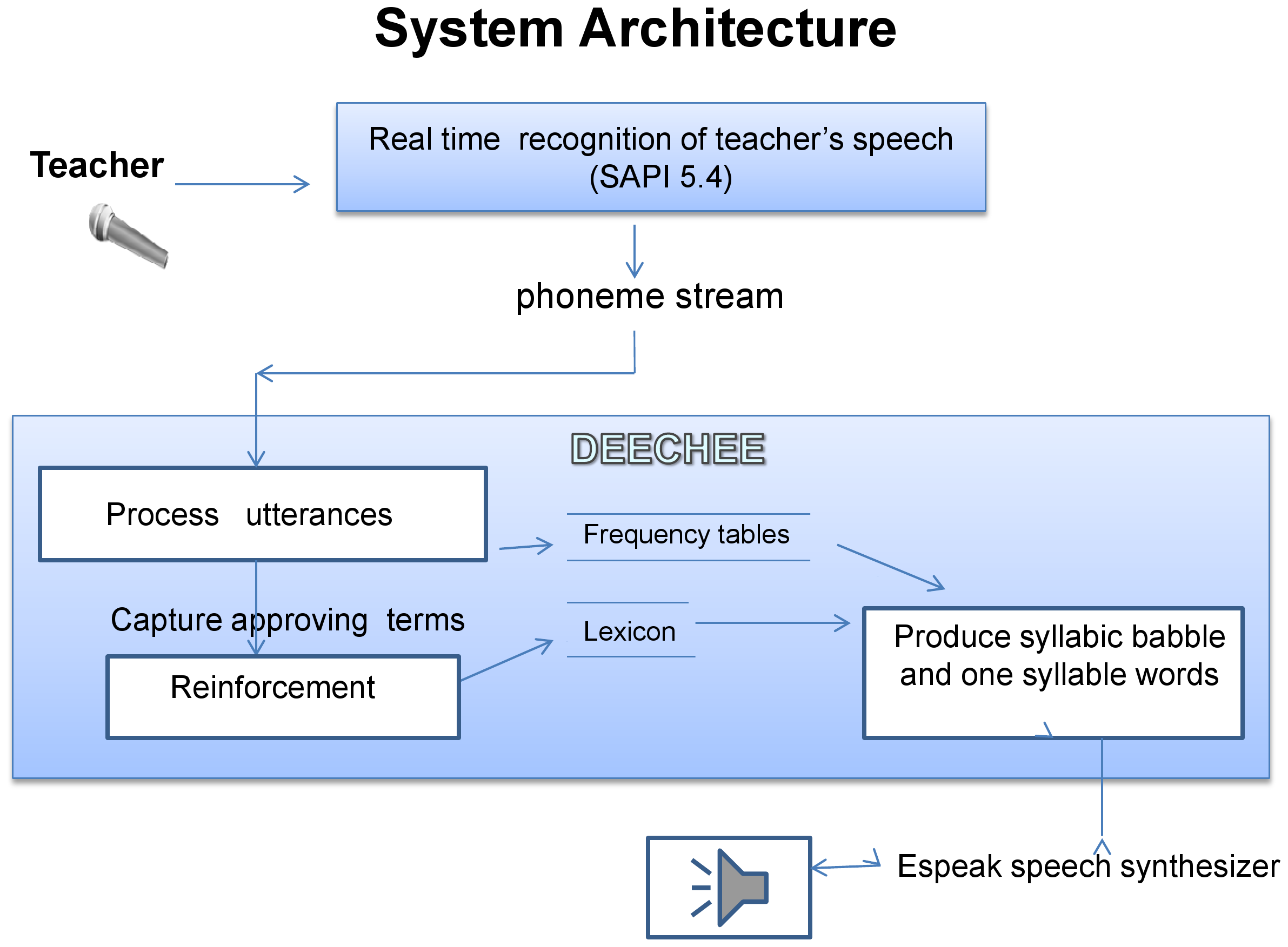

Overview of the system architecture (credit: Caroline Lyon, Chrystopher L. Nehaniv, Joe Saunders/PLoS ONE)

Using DeeChee also allowed the researchers to quantify in detail the transition from babble to recognizable word forms, drawing statistical links between how often sounds occurred in the instructors' speech and the robot's performance. Those links might eventually inform research on human learning.
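To make the idea of frequency-driven learning concrete, here is a toy Python sketch. It is not the researchers' system: the syllable inventory, the instructor utterances, and the simple count-based reinforcement rule are all hypothetical. It only illustrates how babble that starts out uniform can drift toward the syllables a learner hears most often.

```python
import random
from collections import Counter

# Hypothetical syllable inventory; the real system's phonetic units differ.
INVENTORY = ["ba", "da", "ka", "red", "box", "sta", "ta"]


def initial_weights(inventory):
    """Start every syllable with weight 1, i.e. uniformly random babble."""
    return Counter({s: 1 for s in inventory})


def hear(weights, utterance):
    """Add one unit of weight each time the instructor uses a known syllable.

    Syllables outside the learner's inventory are ignored.
    """
    for syllable in utterance:
        if syllable in weights:
            weights[syllable] += 1


def babble(weights, n, rng):
    """Sample n syllables in proportion to their current weights."""
    syllables = list(weights)
    return rng.choices(syllables, weights=[weights[s] for s in syllables], k=n)


if __name__ == "__main__":
    rng = random.Random(0)
    w = initial_weights(INVENTORY)
    # Hypothetical instructor utterances, already split into syllables.
    for utterance in [["red", "box"], ["a", "red", "sta"], ["red", "box"]]:
        hear(w, utterance)
    print(w.most_common(2))  # [('red', 4), ('box', 3)]
    print(babble(w, 8, rng))  # babble now favors 'red' and 'box'
```

Sampling in proportion to raw counts is the simplest possible choice and is used here only for illustration; the study's actual phonetic processing and learning mechanism are described in the PLoS ONE paper.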

“This paper fascinated me because I’m a big fan of Tomasello’s constructivist theory of language acquisition, which is one of the key inspirations they cite for their work,” artificial general intelligence expert Dr. Ben Goertzel told KurzweilAI. “I think that having machines learn language in an embodied and social context is the right thing to do. So, I do think this work is going in a great direction, broadly speaking.

“However, the limitations of the particular techniques and results reported here are also worth carefully noting…. What the AI is learning here is, basically, to recognize individual words in streams of sounds. This is interesting and valuable, but obviously, in itself, it doesn't get you very far toward general language learning. They've done this using statistical reinforcement learning methods, using well-known methods that seem pretty obviously capable of doing this sort of thing. So there's nothing really revolutionary here scientifically, though of course it's wonderful to see that they've gotten this working.

“Obviously, one key question is whether the methods they’ve used to do this will be extensible in future to more advanced aspects of language learning. According to my own scientific intuition, they probably will not be thus extensible. I think very different methods will be needed to make more advanced language learning work, within the embodied social language learning context that these researchers have (IMO correctly) chosen. But the researchers involved may have a different intuition about the potential of their particular methods.”

Ref.: Caroline Lyon, Chrystopher L. Nehaniv, Joe Saunders, "Interactive Language Learning by Robots: The Transition from Babbling to Word Forms," PLoS ONE, 2012, DOI: 10.1371/journal.pone.0038236 (open access)

Update 6/15/2012: Added comments by iCub developer Jürgen Schmidhuber, Director of the Swiss Artificial Intelligence Lab IDSIA, and by artificial general intelligence expert Ben Goertzel.