Machines will achieve human-level intelligence in the 2028 to 2150 range: poll

April 26, 2011

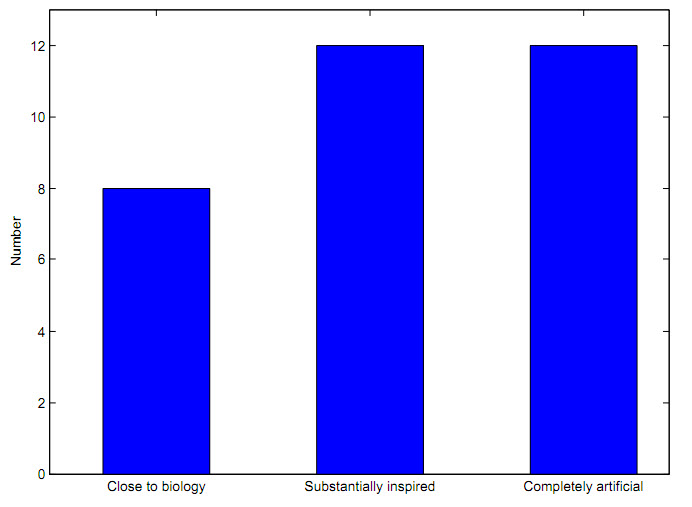

How similar will machine intelligence be to human intelligence? (credit: A. Sandberg & N. Bostrom/Future of Humanity Institute)

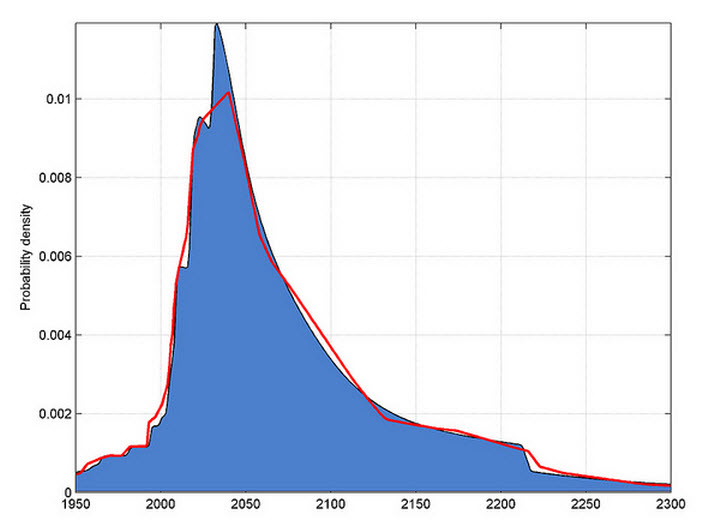

Machines will achieve human-level intelligence by 2028 (median estimate: 10% chance), by 2050 (median estimate: 50% chance), or by 2150 (median estimate: 90% chance), according to an informal poll at the Future of Humanity Institute (FHI) Winter Intelligence conference on machine intelligence in January.
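To read intermediate years off these three median estimates, one rough option is to interpolate linearly between them. The sketch below does only that. The straight-line assumption and the names `QUANTILES` and `prob_by_year` are illustrative and are not taken from the report, whose own plots use skew Gaussian and triangular fits.

```python
# Median survey estimates quoted above: (year, cumulative probability).
QUANTILES = [(2028, 0.10), (2050, 0.50), (2150, 0.90)]


def prob_by_year(year, points=QUANTILES):
    """Approximate the cumulative probability of human-level AI by `year`.

    Assumption: probability grows linearly between the quoted points.
    This is NOT the fitting method used in the report.
    Returns None outside the range covered by the quoted estimates.
    """
    if year < points[0][0] or year > points[-1][0]:
        return None
    # Walk consecutive pairs of points and interpolate within the matching one.
    for (y0, p0), (y1, p1) in zip(points, points[1:]):
        if y0 <= year <= y1:
            return p0 + (p1 - p0) * (year - y0) / (y1 - y0)


if __name__ == "__main__":
    for y in (2028, 2039, 2050, 2100, 2150):
        print(y, round(prob_by_year(y), 2))
```

Under this assumption, 2039 (halfway between 2028 and 2050) comes out at roughly a 30% chance and 2100 at roughly 70%. These figures are artifacts of the interpolation, not survey results.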

“Human‐level machine intelligence, whether due to a de novo AGI (artificial general intelligence) or biologically inspired/emulated systems, has a macroscopic probability to occurring mid‐century,” the report authors, Dr. Anders Sandberg and Dr. Nick Bostrom, both researchers at FHI, found.

“This development is more likely to occur from a large organization than as a smaller project. The consequences might be potentially catastrophic, but there is great disagreement and uncertainty about this — radically positive outcomes are also possible.”

Other findings:

- Industry, academia and the military are the types of organizations most likely to first develop a human‐level machine intelligence.

- The responses to “How positive or negative are the ultimate consequences of the creation of a human‐level (and beyond human‐level) machine intelligence likely to be?” were bimodal, with more weight given to extremely good and extremely bad outcomes.

- Of the 32 responses to “How similar will the first human‐level machine intelligence be to the human brain?”, 8 rated “very biologically inspired machine intelligence” the most likely, 12 chose “brain‐inspired AGI,” and 12 chose “entirely de novo AGI.”

- Most participants were only mildly confident of an eventual win by IBM’s Watson over human contestants in the “Jeopardy!” contest.

Probability density of human-level AI by date -- the blue represents skew Gaussian fits, the red represents triangular fits; previous dates are artifacts (credit: Anders Sandberg)

“This survey was merely an informal polling of an already self‐selected group, so the results should be taken with a large grain of salt,” the authors advise. “The small number of responses, the presence of visiting groups with presumably correlated views, the simple survey design and the limitations of the questionnaire all contribute to make this of limited reliability and validity.”

“While the validity is questionable, the results are consistent with earlier surveys,” Sandberg told KurzweilAI. “The kind of people who respond to this tend to think mid-century human-level AI is fairly plausible, with a tail towards the far future. Opinions on the overall effect were not divided but bimodal: it will likely be really good or really bad, not something in between.”

Brent Allsop, a Senior Software Engineer at 3M, has started a “Human Level AI Milestone?” topic on Canonizer (a consensus-building open survey system) to encourage public participation on one question from the survey: “Can you think of any milestone such that if it were ever reached you would expect human‐level machine intelligence to be developed within five years thereafter?”

Ref.: Sandberg, A. and Bostrom, N. (2011): Machine Intelligence Survey, Technical Report #2011‐1, Future of Humanity Institute, Oxford University: pp. 1‐12. URL: www.fhi.ox.ac.uk/reports/2011‐1.pdf