MIT deep-learning system autonomously learns to identify objects

May 14, 2015

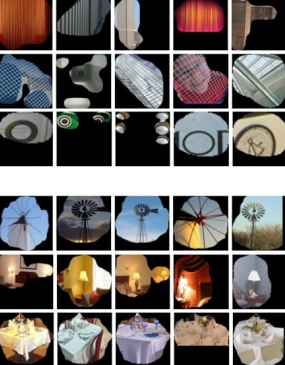

The first layers of a neural network trained to classify scenes seem to be tuned to geometric patterns of increasing complexity, but the higher layers appear to be picking out particular classes of objects (credit: the researchers)

MIT researchers have discovered that a deep-learning system designed to recognize and classify scenes has also learned how to recognize individual objects.

Last December, at the Annual Conference on Neural Information Processing Systems, MIT researchers announced the compilation of the world’s largest database of images labeled according to scene type, with 7 million entries. Using a machine-learning technique known as “deep learning,” they trained a scene classifier on that database. It was the most successful scene classifier yet, between 25 and 33 percent more accurate than its best predecessor.

The new discovery implies that scene-recognition and object-recognition systems could work in concert or could be mutually reinforcing.

The MIT researchers presented the new findings at the recent International Conference on Learning Representations in an open-access paper.

“Deep learning works very well, but it’s very hard to understand why it works — what is the internal representation that the network is building,” says Antonio Torralba, an associate professor of computer science and engineering at MIT and a senior author on the new paper.

“It could be that the representations for scenes are parts of scenes that don’t make any sense, like corners or pieces of objects. But it could be that it’s objects: To know that something is a bedroom, you need to see the bed; to know that something is a conference room, you need to see a table and chairs. That’s what we found, that the network is really finding these objects.”

After the MIT researchers’ network had processed millions of input images, readjusting its internal settings as it went, it was about 50 percent accurate at labeling scenes. By comparison, human beings are only 80 percent accurate, since they can disagree about high-level scene labels. But the researchers didn’t know how their network was doing what it was doing.

The MIT researchers identified the 60 images that produced the strongest response in each unit of their network; then, to avoid biasing the results, they sent the collections of images to paid workers on Amazon’s Mechanical Turk crowdsourcing site, whom they asked to identify commonalities among the images.
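The image-selection step can be illustrated with a minimal sketch. It is not the researchers’ code. It assumes each unit’s response to an image has already been reduced to a single number (for a convolutional unit, for instance, the maximum over its feature map), an assumption the article does not confirm. The function name `top_images_per_unit` and the random stand-in data are hypothetical.

```python
import numpy as np

def top_images_per_unit(activations, k=60):
    """Return, for each unit, the indices of the k images with the strongest response.

    activations: array of shape (num_images, num_units) holding one scalar
    response per image and unit (assumed precomputed; hypothetical layout).
    """
    activations = np.asarray(activations)
    k = min(k, activations.shape[0])
    # Sort image indices by descending response, independently for each unit.
    order = np.argsort(-activations, axis=0, kind="stable")
    # Keep the k strongest images and put units on the first axis.
    return order[:k].T  # shape: (num_units, k)

# Synthetic stand-in for real network responses: 1,000 images, 8 units.
rng = np.random.default_rng(0)
acts = rng.random((1000, 8))
top = top_images_per_unit(acts, k=60)
```

Each row of `top` lists the images that would be grouped and shown to annotators for one unit, strongest response first.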

Beyond category

“The first layer, more than half of the units are tuned to simple elements — lines, or simple colors,” Torralba says. “As you move up in the network, you start finding more and more objects. And there are other things, like regions or surfaces, that could be things like grass or clothes.”

According to the assessments by the Mechanical Turk workers, about half of the units at the top of the network are tuned to particular objects. “The other half, either they detect objects but don’t do it very well, or we just don’t know what they are doing,” Torralba says. “They may be detecting pieces that we don’t know how to name. Or it may be that the network hasn’t fully converged, fully learned.”

In ongoing work, the researchers are starting from scratch and retraining their network on the same data sets, to see if it consistently converges on the same objects, or whether it can randomly evolve in different directions that still produce good predictions. They’re also exploring whether object detection and scene detection can feed back into each other, to improve the performance of both. “But we want to do that in a way that doesn’t force the network to do something that it doesn’t want to do,” Torralba says.

Abstract of Learning Deep Features for Scene Recognition using Places Database

Scene recognition is one of the hallmark tasks of computer vision, allowing definition of a context for object recognition. Whereas the tremendous recent progress in object recognition tasks is due to the availability of large datasets like ImageNet and the rise of Convolutional Neural Networks (CNNs) for learning high-level features, performance at scene recognition has not attained the same level of success. This may be because current deep features trained from ImageNet are not competitive enough for such tasks. Here, we introduce a new scene-centric database called Places with over 7 million labeled pictures of scenes. We propose new methods to compare the density and diversity of image datasets and show that Places is as dense as other scene datasets and has more diversity. Using CNN, we learn deep features for scene recognition tasks, and establish new state-of-the-art results on several scene-centric datasets. A visualization of the CNN layers’ responses allows us to show differences in the internal representations of object-centric and scene-centric networks.

Abstract of Object Detectors Emerge in Deep Scene CNNs

With the success of new computational architectures for visual processing, such as convolutional neural networks (CNN), and access to image databases with millions of labeled examples (e.g., ImageNet, Places), the state of the art in computer vision is advancing rapidly. One important factor for continued progress is to understand the representations that are learned by the inner layers of these deep architectures. Here we show that object detectors emerge from training CNNs to perform scene classification. As scenes are composed of objects, the CNN for scene classification automatically discovers meaningful object detectors, representative of the learned scene categories. With object detectors emerging as a result of learning to recognize scenes, our work demonstrates that the same network can perform both scene recognition and object localization in a single forward-pass, without ever having been explicitly taught the notion of objects.