A neuromorphic-computing ‘roadmap’

April 22, 2014

Professor Jennifer Hasler displays a field programmable analog array (FPAA) board that includes an integrated circuit with biologically based neuron structures for power-efficient calculation (credit: Rob Felt)

Electrical engineers at the Georgia Institute of Technology have published a roadmap that details innovative analog-based techniques that they believe could make it possible to build a practical neuromorphic (brain-inspired) computer while minimizing energy requirements.

“A configurable analog-digital system can be expected to have a power efficiency improvement of up to 10,000 times compared to an all-digital system,” said Jennifer Hasler, a professor in the Georgia Tech School of Electrical and Computer Engineering (ECE) and a pioneer in using analog techniques for neuromorphic computing.

The roadmap was published in the journal Frontiers in Neuroscience (open access).

“To my knowledge, this is the first time a detailed neuromorphic roadmap has been attempted,” said Hasler. “We describe specific computational techniques [that] could offer real progress in neuromorphic systems.”

Unlike digital computing, in which computers can address many different applications by processing different software programs, analog circuits have traditionally been hard-wired to address a single application. For example, cell phones use energy-efficient analog circuits for a number of specific functions, including capturing the user’s voice, amplifying incoming voice signals, and controlling battery power.

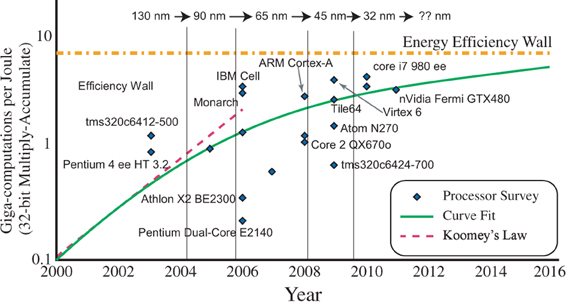

Plots of computational efficiency for digital multiply-accumulate (MAC) operations (credit: Jennifer Hasler and Bo Marr/Front. Neurosci.)

Because analog devices do not have to process binary codes as digital computers do, they can be both faster and much less power-hungry. Yet traditional analog circuits are limited because they’re built for a specific application, such as processing signals or controlling power. They lack the flexibility of digital devices that can run software, and they’re vulnerable to signal disturbances, or noise.

But in recent years, Hasler has developed a new approach to analog computing, in which silicon-based analog integrated circuits take over many of the functions now performed by familiar digital integrated circuits. These analog chips can be quickly reconfigured to provide a range of processing capabilities, in a manner that resembles conventional digital techniques in some ways.

Hasler and her research group have developed devices called field programmable analog arrays (FPAA). Like field programmable gate arrays (FPGA), which are digital integrated circuits that are ubiquitous in modern computing, the FPAA can be reconfigured after it’s manufactured, hence the term “field-programmable.”

In the paper, the researchers discuss ways to scale energy efficiency, performance and size to eventually achieve large-scale neuromorphic systems. “A major concept here is that we have to first build smaller systems capable of a simple representation of one layer of human brain cortex,” Hasler said. “When that system has been successfully demonstrated, we can then replicate it in ways that increase its complexity and performance.”

Among neuromorphic computing’s major hurdles are the communication issues involved in networking integrated circuits in ways that could replicate human cognition. In their paper, Hasler and co-author Bo Marr emphasize local interconnectivity to reduce complexity.