NVIDIA opens up CUDA platform, helping accelerate the path to exascale computing

December 15, 2011

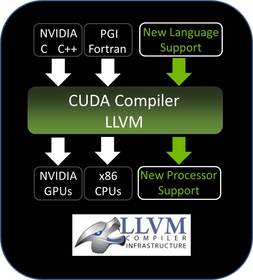

NVIDIA has announced that it will provide the source code for its new LLVM-based CUDA compiler to academic researchers and software-tool vendors. This will make it easier for them to add GPU support to additional programming languages and to run parallel CUDA applications on alternative processor architectures. The move should help accelerate the path to exascale computing.

LLVM is a widely used open-source compiler infrastructure whose modular design makes it easy to add support for new programming languages and processor architectures. It is used by many leading companies, including Adobe, Apple, Cray, and Electronic Arts.

“Opening up the CUDA platform is a significant step,” said Sudhakar Yalamanchili, professor at Georgia Institute of Technology and lead of the Ocelot project, which maps software written in CUDA C to different processor architectures. “The future of computing is heterogeneous, and the CUDA programming model provides a powerful way to maximize performance on many different types of processors, including AMD GPUs and Intel x86 CPUs.”

By releasing the source code for the CUDA compiler and its intermediate representation (IR) format, NVIDIA is giving researchers more flexibility to map the CUDA programming model to other architectures and is furthering the development of next-generation, higher-performance computing platforms.

Early access to the CUDA compiler source code is available to qualified academic researchers and software-tool developers.

More info: CUDA web site.
