Quadriplegic shapes robot hand with her thoughts

December 18, 2014

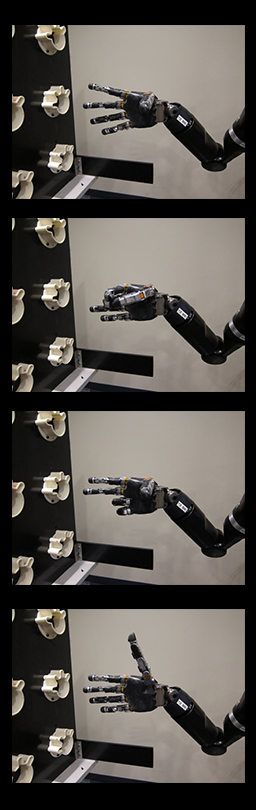

Controlling a robot arm with her thoughts, quadriplegic shapes the hand into four positions (credit: Journal of Neural Engineering/IOP Publishing)

In an experiment, a woman with quadriplegia shaped the almost-human hand of a robot arm with just her thoughts, directing it to pick up big and small boxes, a ball, an oddly shaped rock, and fat and skinny tubes. The result shows that brain-computer interface technology has the potential to improve the function and quality of life of those unable to use their own arms.

The findings by researchers at the University of Pittsburgh School of Medicine, published (open-access) online Tuesday in the Journal of Neural Engineering, describe for the first time 10-dimension brain control of a prosthetic device in which the trial participant used the arm and hand to reach, grasp, and place a variety of objects.

“Our project has shown that we can interpret signals from neurons with a simple computer algorithm to generate sophisticated, fluid movements that allow the user to interact with the environment,” said senior investigator Jennifer Collinger, Ph.D., assistant professor, Department of Physical Medicine and Rehabilitation (PM&R), Pitt School of Medicine, and research scientist for the VA Pittsburgh Healthcare System.

The four robot positions: fingers spread, scoop, pinch, and thumb up (credit: Journal of Neural Engineering/IOP Publishing)

In February 2012, two small electrode grids, each with 96 tiny contact points, were surgically implanted in the regions of trial participant Jan Scheuermann’s left motor cortex that would normally control her right arm and hand movement.

Each electrode point picked up signals from an individual neuron, which were then relayed to a computer to identify the firing patterns associated with particular observed or imagined movements, such as raising or lowering the arm, or turning the wrist.

That “mind-reading” was used to direct the movements of a prosthetic arm developed by Johns Hopkins Applied Physics Laboratory.

Within a week of the surgery, Ms. Scheuermann could reach in and out, left and right, and up and down with the arm to achieve 3D control. Before three months had passed, she could also flex the wrist back and forth, move it from side to side, and rotate it clockwise and counter-clockwise, as well as grip objects, adding up to 7D control. Those findings were published in The Lancet in 2012.

“In the next part of the study, described in this new paper, Jan mastered 10D control, allowing her to move the robot hand into different positions while also controlling the arm and wrist,” said Michael Boninger, M.D., professor and chair, PM&R, and director of the UPMC Rehabilitation Institute.

To bring the total of arm and hand movements to 10, the simple pincer grip was replaced by four hand shapes: finger abduction, in which the fingers are spread out; scoop, in which the last fingers curl in; thumb opposition, in which the thumb moves outward from the palm; and a pinch of the thumb, index and middle fingers.

As before, Ms. Scheuermann watched animations and imagined the movements while the team recorded the signals her brain was sending in a process called calibration. Then, they used what they had learned to read her thoughts so she could move the hand into the various positions.
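The calibrate-then-decode idea can be illustrated with a toy model. The Python sketch below is not the study's decoder. It assumes, purely for illustration, that each recorded unit's firing rate is a linear function of a 10-dimensional intended movement, and it uses synthetic, noiseless data. All names and numbers in it (`calibrate`, `decode`, `N_UNITS`, the 200 calibration samples) are hypothetical.

```python
import random

N_DIMS = 10    # 3 translation + 3 orientation + 4 hand shape
N_UNITS = 40   # hypothetical number of recorded units


def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def least_squares(x_rows, y):
    """Return w minimizing ||X w - y||^2 via the normal equations."""
    k = len(x_rows[0])
    xtx = [[sum(r[i] * r[j] for r in x_rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(x_rows, y)) for i in range(k)]
    return solve(xtx, xty)


def calibrate(velocities, rates):
    """Fit rate_u = baseline_u + weights_u . velocity for every unit."""
    design = [[1.0] + v for v in velocities]
    n_units = len(rates[0])
    return [least_squares(design, [r[u] for r in rates]) for u in range(n_units)]


def decode(tuning, rate):
    """Estimate the intended movement from one vector of firing rates."""
    weights = [w[1:] for w in tuning]
    residual = [r - w[0] for r, w in zip(rate, tuning)]
    return least_squares(weights, residual)


# Synthetic "ground truth" tuning: [baseline, 10 directional weights] per unit.
rng = random.Random(0)
true_tuning = [[rng.uniform(5, 20)] + [rng.gauss(0, 1) for _ in range(N_DIMS)]
               for _ in range(N_UNITS)]


def simulate_rates(v):
    """Noiseless firing rates the toy units would produce for movement v."""
    return [w[0] + sum(wi * vi for wi, vi in zip(w[1:], v)) for w in true_tuning]


# Calibration: the movements are known (as when watching an animation).
calib_v = [[rng.uniform(-1, 1) for _ in range(N_DIMS)] for _ in range(200)]
calib_r = [simulate_rates(v) for v in calib_v]
tuning = calibrate(calib_v, calib_r)

# Decoding: recover an unseen intended movement from firing rates alone.
intended = [rng.uniform(-1, 1) for _ in range(N_DIMS)]
decoded = decode(tuning, simulate_rates(intended))
max_error = max(abs(a - b) for a, b in zip(intended, decoded))
print(f"largest decoding error: {max_error:.2e}")
```

Because the synthetic data are noiseless and exactly linear, the decoded movement matches the intended one up to floating-point error. Real recordings are noisy and change over time, which is one reason calibration conditions matter in practice.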

“Jan used the robot arm to grasp more easily when objects had been displayed during the preceding calibration, which was interesting,” said co-investigator Andrew Schwartz, Ph.D., professor of Neurobiology, Pitt School of Medicine. “Overall, our results indicate that highly coordinated, natural movement can be restored to people whose arms and hands are paralyzed.”

The project was funded by the Defense Advanced Research Projects Agency, the Department of Veterans Affairs, and the UPMC Rehabilitation Institute.

Abstract of Ten-dimensional anthropomorphic arm control in a human brain-machine interface: difficulties, solutions, and limitations

Objective. In a previous study we demonstrated continuous translation, orientation and one-dimensional grasping control of a prosthetic limb (seven degrees of freedom) by a human subject with tetraplegia using a brain-machine interface (BMI). The current study, in the same subject, immediately followed the previous work and expanded the scope of the control signal by also extracting hand-shape commands from the two 96-channel intracortical electrode arrays implanted in the subject’s left motor cortex.

Approach. Four new control signals, dictating prosthetic hand shape, replaced the one-dimensional grasping in the previous study, allowing the subject to control the prosthetic limb with ten degrees of freedom (three-dimensional (3D) translation, 3D orientation, four-dimensional hand shaping) simultaneously.

Main results. Robust neural tuning to hand shaping was found, leading to ten-dimensional (10D) performance well above chance levels in all tests. Neural unit preferred directions were broadly distributed through the 10D space, with the majority of units significantly tuned to all ten dimensions, instead of being restricted to isolated domains (e.g. translation, orientation or hand shape). The addition of hand shaping emphasized object-interaction behavior. A fundamental component of BMIs is the calibration used to associate neural activity to intended movement. We found that the presence of an object during calibration enhanced successful shaping of the prosthetic hand as it closed around the object during grasping.

Significance. Our results show that individual motor cortical neurons encode many parameters of movement, that object interaction is an important factor when extracting these signals, and that high-dimensional operation of prosthetic devices can be achieved with simple decoding algorithms. ClinicalTrials.gov Identifier: NCT01364480.