Researchers watch video images people are seeing, decoded from their fMRI brain scans in near-real-time

October 27, 2017

Purdue Engineering researchers have developed a system that can show what people are seeing in real-world videos, decoded from their fMRI brain scans. This advanced new form of “mind-reading” technology could lead to new insights into brain function and to more advanced AI systems.

The work builds on pioneering research at UC Berkeley’s Gallant Lab, which in 2011 created a computer program that translated fMRI brain-activity patterns into images that loosely mirrored a series of images being viewed.

The new system goes further: it decodes moving images that subjects see in videos, and does so in near-real-time. The researchers were also able to determine the subjects’ interpretations of the images they saw (for example, interpreting an image as a person or thing) and could even reconstruct a version of the original images that the subjects saw.

Deep-learning AI system for watching what the brain sees

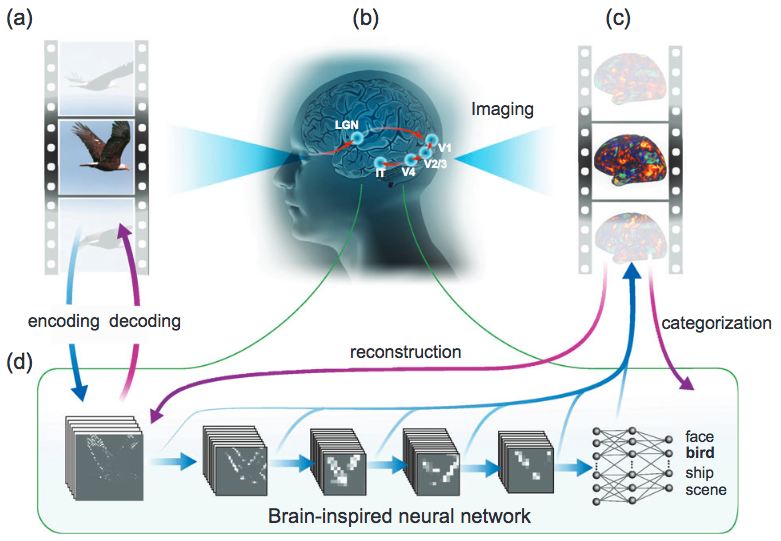

Watching in near-real-time what the brain sees. Visual information generated by a video (a) is processed in a cascade from the retina through the thalamus (LGN area) to several levels of the visual cortex (b), detected from fMRI activity patterns (c) and recorded. A powerful deep-learning technique (d) then models this detected cortical visual processing. Called a convolutional neural network (CNN), this model transforms every video frame into multiple layers of features, ranging from orientations and colors (the first visual layer) to high-level object categories (face, bird, etc.) in semantic (meaning) space (the eighth layer). The trained CNN model can then be used to reverse this process, reconstructing the original videos — even creating new videos that the CNN model had never watched. (credit: Haiguang Wen et al./Cerebral Cortex)
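As a rough illustration of what a low-level "first layer" feature looks like, the sketch below applies one hand-written orientation filter to a toy grayscale frame. It is not the network, the weights, or the code used in the study; the filter `VERTICAL_EDGE`, the toy `frame`, and the helper names are all assumptions made up for this example.

```python
# Illustrative only: a single hand-written edge filter, not the CNN from the study.

def conv2d(image, kernel):
    """Valid 2-D cross-correlation of a grayscale image with a small kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(feature_map):
    """Keep positive responses and set negative ones to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]

# Hypothetical 3x3 filter that responds to vertical edges (dark left, bright right).
VERTICAL_EDGE = [
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
]

# Toy 4x6 frame: dark on the left half, bright on the right half.
frame = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(4)]

feature_map = relu(conv2d(frame, VERTICAL_EDGE))
for row in feature_map:
    print(row)
```

The response is largest where the filter window straddles the dark-to-bright boundary and zero in uniform regions. A real CNN learns many such filters and stacks further layers on top of them, which is how it gets from orientations and colors to object categories.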

The researchers acquired 11.5 hours of fMRI data from each of three female subjects, who watched 972 video clips, including clips showing people or animals in action and nature scenes.

To decode the fMRI data, the researchers pioneered the use of a deep-learning technique called a convolutional neural network (CNN). The trained CNN model was able to accurately decode the fMRI blood-flow data to identify specific image categories (such as the face, bird, ship, and scene examples in the above figure). The researchers could compare (in near-real-time) the viewed video images side-by-side with the computer’s visual interpretation of what the person’s brain saw.
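One way to picture the final categorization step is as a nearest-prototype lookup: once the fMRI signal has been decoded into a vector in a semantic feature space, the category whose reference vector is most similar is chosen. The article does not describe the study's actual classifier, so the sketch below is an assumption throughout: the cosine-similarity rule, the three-dimensional `PROTOTYPES`, and the `decoded_from_fmri` values are invented for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def categorize(decoded, prototypes):
    """Return the label whose prototype is most similar to the decoded vector."""
    return max(prototypes, key=lambda label: cosine(decoded, prototypes[label]))

# Hypothetical semantic-space prototypes for categories named in the figure.
PROTOTYPES = {
    "face": [1.0, 0.0, 0.0],
    "bird": [0.0, 1.0, 0.0],
    "ship": [0.0, 0.0, 1.0],
}

# Made-up decoder output for a single time point of one scan.
decoded_from_fmri = [0.2, 0.9, 0.1]

label = categorize(decoded_from_fmri, PROTOTYPES)
print(label)
```

Running this labeling step on each incoming fMRI volume would give a stream of category guesses that could be shown next to the video frames, in the spirit of the side-by-side comparison described above.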

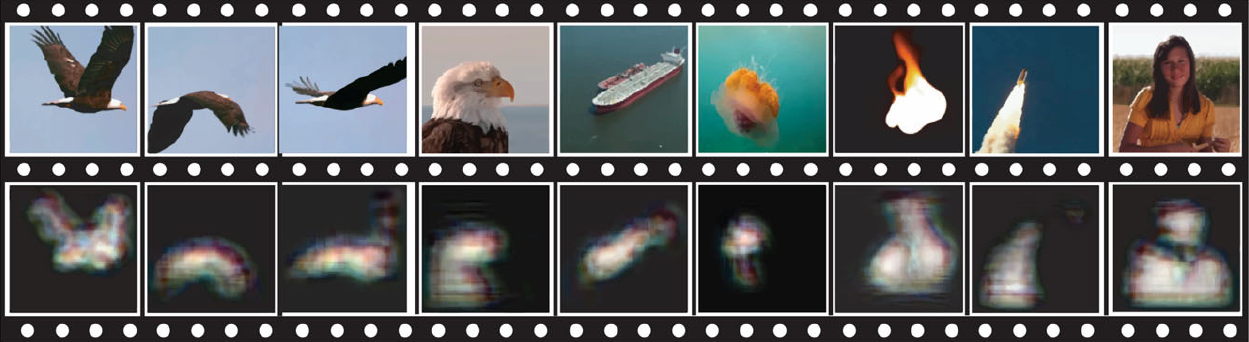

Reconstruction of a dynamic visual experience in the experiment. The top row shows the example movie frames seen by one subject; the bottom row shows the reconstruction of those frames based on the subject’s cortical fMRI responses to the movie. (credit: Haiguang Wen et al./Cerebral Cortex)

The researchers were also able to figure out how certain locations in the visual cortex were associated with specific information a person was seeing.

Decoding how the visual cortex works

CNNs have been used to recognize faces and objects, and to study how the brain processes static images and other visual stimuli. But the new findings represent the first time CNNs have been used to see how the brain processes videos of natural scenes. This is “a step toward decoding the brain while people are trying to make sense of complex and dynamic visual surroundings,” said doctoral student Haiguang Wen.

Wen was first author of a paper describing the research, appearing online Oct. 20 in the journal Cerebral Cortex.

“Neuroscience is trying to map which parts of the brain are responsible for specific functionality,” Wen explained. “This is a landmark goal of neuroscience. I think what we report in this paper moves us closer to achieving that goal. Using our technique, you may visualize the specific information represented by any brain location, and screen through all the locations in the brain’s visual cortex. By doing that, you can see how the brain divides a visual scene into pieces, and re-assembles the pieces into a full understanding of the visual scene.”

The researchers were also able to use models trained with data from one human subject to predict and decode the brain activity of a different human subject, a process called “cross-subject encoding and decoding.” This finding is important because it demonstrates the potential for broad applications of such models to study brain function, including in people with visual deficits.
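The idea of cross-subject encoding can be sketched with a toy example: fit a model that predicts one voxel's response from a stimulus feature using subject A's data, then check how well its predictions correlate with subject B's measured response to the same stimuli. This is a minimal sketch under stated assumptions, not the study's method. It assumes a single feature and a simple least-squares line, and all the numbers (`feature`, `subject_a`, `subject_b`) are made up.

```python
import math

def fit_line(x, y):
    """Ordinary least-squares slope and intercept for one feature and one voxel."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

# Hypothetical stimulus feature over five time points (same clips for both subjects).
feature = [0.0, 1.0, 2.0, 3.0, 4.0]
# Made-up responses of a matching voxel in two different subjects.
subject_a = [0.1, 0.9, 2.1, 2.9, 4.0]
subject_b = [0.3, 1.2, 1.8, 3.1, 3.9]

# Train on subject A only, then evaluate the predictions against subject B.
slope, intercept = fit_line(feature, subject_a)
predicted_b = [slope * f + intercept for f in feature]
r = pearson(predicted_b, subject_b)
print(round(slope, 2), round(r, 3))
```

A high correlation on the held-out subject is the kind of evidence that would suggest a model transfers across people. In practice this would involve many features, many voxels, and some way of aligning brain locations between subjects, none of which this sketch attempts.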

The research has been funded by the National Institute of Mental Health. The work is affiliated with the Purdue Institute for Integrative Neuroscience. Data reported in this paper are also publicly available at the Laboratory of Integrated Brain Imaging website.

UPDATE Oct. 28, 2017 — Additional figure added, comparing the original images and those reconstructed from the subject’s cortical fMRI responses to the movie; subhead revised to clarify the CNN function. Two references also added.

Abstract of Neural Encoding and Decoding with Deep Learning for Dynamic Natural Vision

Convolutional neural network (CNN) driven by image recognition has been shown to be able to explain cortical responses to static pictures at ventral-stream areas. Here, we further showed that such CNN could reliably predict and decode functional magnetic resonance imaging data from humans watching natural movies, despite its lack of any mechanism to account for temporal dynamics or feedback processing. Using separate data, encoding and decoding models were developed and evaluated for describing the bi-directional relationships between the CNN and the brain. Through the encoding models, the CNN-predicted areas covered not only the ventral stream, but also the dorsal stream, albeit to a lesser degree; single-voxel response was visualized as the specific pixel pattern that drove the response, revealing the distinct representation of individual cortical location; cortical activation was synthesized from natural images with high-throughput to map category representation, contrast, and selectivity. Through the decoding models, fMRI signals were directly decoded to estimate the feature representations in both visual and semantic spaces, for direct visual reconstruction and semantic categorization, respectively. These results corroborate, generalize, and extend previous findings, and highlight the value of using deep learning, as an all-in-one model of the visual cortex, to understand and decode natural vision.