UCSD spinoffs create lab-quality portable 64-channel BCI headset

January 13, 2016

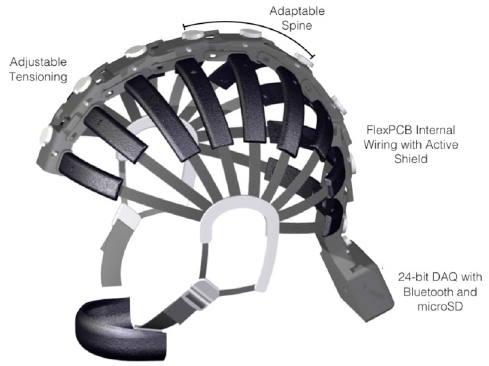

A dry-electrode, portable 64-channel wearable EEG headset (credit: Jacobs School of Engineering/UC San Diego)

The first dry-electrode, portable 64-channel wearable brain-computer interface (BCI) has been developed by bioengineers and cognitive scientists associated with the UC San Diego Jacobs School of Engineering.

The system is comparable to state-of-the-art equipment found in research laboratories, but adds portability, which allows for tracking brain states throughout the day and augmenting the brain’s capabilities, the researchers say. Current BCI devices either require gel-based electrodes or have fewer than 64 channels.

The dry EEG sensors are easier to apply than wet sensors, while still providing high-density/low-noise brain activity data, according to the researchers. The headset includes a Bluetooth transmitter, eliminating the usual array of wires. The system also includes a sophisticated software suite for data interpretation and analysis for applications including research, neuro-feedback, and clinical diagnostics.

Cognionics HD-72 64-channel mobile EEG system (credit: Cognionics)

“This is going to take neuroimaging to the next level by deploying on a much larger scale,” including use in homes and even while driving, said Mike Yu Chi, a Jacobs School alumnus and CTO of Cognionics who led the team that developed the headset.

The researchers also envision a future when neuroimaging can be used to bring about new therapies for neurological disorders. “We will be able to prompt the brain to fix its own problems,” said Gert Cauwenberghs, a bioengineering professor at the Jacobs School and a principal investigator on a National Science Foundation grant. “We are trying to get away from invasive technologies, such as deep brain stimulation and prescription medications, and instead start up a repair process by using the brain’s synaptic plasticity.”

“In 10 years, using a brain-machine interface might become as natural as using your smartphone is today,” said Tim Mullen, a UC San Diego alumnus, lead author on the study and a former researcher at the Swartz Center for Computational Neuroscience at UC San Diego.

The researchers, from the Jacobs School of Engineering and the Institute for Neural Computation at UC San Diego, detailed their findings in an article in the Special Issue on Wearable Technologies published recently in IEEE Transactions on Biomedical Engineering.

EEG headset

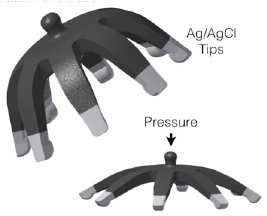

The innovative dry electrodes developed by the researchers eliminate the complexity and mess of affixing gel electrodes. By using silver/silver chloride tips, along with associated electronics and special headset mechanical construction, the dry electrodes reduce electrical noise and can be conveniently used through hair. (credit: Tim R. Mullen et al./IEEE Transactions on Biomedical Engineering)

For this vision of the future to become a reality, sensors will need to become not only wearable but also comfortable, and algorithms for data analysis will need to be able to cut through noise to extract meaningful data. The EEG headset developed by Chi and his team has an octopus-like shape, in which each arm is elastic, so that it fits on many different kinds of head shapes. The sensors at the end of each arm are designed to make optimal contact with the scalp while adding as little noise in the signal as possible.

The researchers spent four years perfecting the sensors’ materials. The sensors are designed to work through a subject’s hair. The material allows the sensors to remain flexible and durable while still conducting high-quality signals, thanks to a silver/silver-chloride coating. The design includes shielding against interference from electrical equipment and other electronics. The researchers also developed sensors intended for direct application to the scalp.

Software and data analysis

In the study, the data that the headset captured were analyzed with software developed by Mullen and Christian Kothe, another former researcher at the Swartz Center for Computational Neuroscience.

First, brain signals needed to be separated from noise in the EEG data. The tiny electrical currents originating from the brain are often contaminated by high-amplitude artifacts generated when subjects move, speak, or even blink. The researchers designed an algorithm that separates the EEG data, in real time, into different components that are statistically unrelated to one another.

The algorithm then compares these components with clean data obtained, for instance, when a subject is at rest. Components that look abnormal relative to the clean data are labeled as noise and discarded. “The algorithm attempts to remove as much of the noise as possible while preserving as much of the brain signal as possible,” said Mullen.
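The general idea of comparing components against clean calibration data can be sketched in a few lines of Python. This is a simplified illustration, not the researchers' algorithm: it assumes principal component analysis as the way to obtain uncorrelated components, and the function names (`fit_calibration`, `clean_window`), the `cutoff` of 5 standard deviations, and the synthetic data are all hypothetical choices made for the example.

```python
import numpy as np

def fit_calibration(clean, cutoff=5.0):
    """Learn uncorrelated components and amplitude limits from clean data.

    clean: array of shape (channels, samples), e.g. recorded at rest.
    cutoff: hypothetical limit, in standard deviations of the clean data.
    """
    mean = clean.mean(axis=1, keepdims=True)
    # Eigenvectors of the covariance matrix give uncorrelated components (PCA).
    eigvals, eigvecs = np.linalg.eigh(np.cov(clean - mean))
    thresholds = cutoff * np.sqrt(np.maximum(eigvals, 1e-12))
    return mean, eigvecs, thresholds

def clean_window(window, mean, eigvecs, thresholds):
    """Zero out components whose RMS amplitude exceeds the calibrated limit."""
    comps = eigvecs.T @ (window - mean)          # project onto components
    rms = np.sqrt((comps ** 2).mean(axis=1))     # amplitude of each component
    keep = rms <= thresholds                     # normal vs. abnormal
    comps[~keep] = 0.0                           # discard abnormal components
    return eigvecs @ comps + mean, keep          # back to channel space

# Synthetic demo: 4 channels of noise stand in for clean resting EEG.
rng = np.random.default_rng(0)
calibration = rng.standard_normal((4, 2000))
mean, eigvecs, thresholds = fit_calibration(calibration)

# A new window with a large blink-like pulse. For simplicity the pulse is
# placed exactly along one calibrated component, which real artifacts are not.
window = rng.standard_normal((4, 200))
blink = 50.0 * np.sin(np.linspace(0.0, np.pi, 200))
contaminated = window + np.outer(eigvecs[:, -1], blink)

cleaned, keep = clean_window(contaminated, mean, eigvecs, thresholds)
print("components kept:", keep)
```

In this toy setup only the component carrying the pulse is discarded, and a window without artifacts passes through unchanged. A real-time system would apply the same test to short overlapping windows as the data stream in.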

The researchers were also able to track, in real time, how signals from different areas of the brain interact with one another, building an ever-changing network map of brain activity. They then used machine learning to connect specific network patterns in brain activity to cognition and behavior.
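A changing network map of this kind can be illustrated with a much simpler measure than the one used in the study. The sketch below assumes plain sliding-window correlation between source signals, which is undirected, whereas the paper's abstract describes a directed (effective) connectivity measure. The function name `sliding_connectivity`, the window sizes, and the synthetic signals are hypothetical.

```python
import numpy as np

def sliding_connectivity(signals, win, step):
    """Return one correlation matrix per time window.

    signals: array of shape (sources, samples).
    win, step: window length and hop size, in samples.
    """
    n_samples = signals.shape[1]
    maps = [
        np.corrcoef(signals[:, start:start + win])
        for start in range(0, n_samples - win + 1, step)
    ]
    return np.stack(maps)  # shape: (windows, sources, sources)

# Synthetic demo: three independent sources, except that source 1 starts
# following source 0 halfway through the recording.
rng = np.random.default_rng(1)
sources = rng.standard_normal((3, 1000))
sources[1, 500:] = sources[0, 500:] + 0.1 * rng.standard_normal(500)

maps = sliding_connectivity(sources, win=250, step=250)
for i, m in enumerate(maps):
    print(f"window {i}: coupling between source 0 and source 1 = {m[0, 1]:.2f}")
```

The coupling between the first two sources is near zero in the early windows and close to 1 in the later ones, which is the kind of time-varying pattern a classifier could then be trained to associate with behavior.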

Future plans

Mullen’s start-up, Qusp, has developed NeuroScale, a cloud-based software platform that provides continuous real-time interpretation of brain and body signals through an Internet application programming interface (API). The goal is to enable brain-computer interface and advanced signal processing methods to be easily integrated with various everyday applications and wearable devices.

“A Holy Grail in our field is to track meaningful changes in distributed brain networks at the ‘speed of thought’,” Mullen said. “We’re closer to that goal, but we’re not quite there yet.”

Cognionics is selling the headset to research groups, especially for use in neuro-feedback. Next steps include improving the headset’s performance while subjects are moving. The device can reliably capture signals while subjects walk but less so during more strenuous activities such as running. Electronics also need improvement to function for longer time periods — days and even weeks instead of hours.

The ultimate goal is to get the headset into the clinic to help diagnose a range of conditions, such as strokes and seizures, says Chi.

The researchers’ development and testing projects have been funded in part by a five-year Emerging Frontiers in Research and Innovation grant from the National Science Foundation, as well as by DARPA and the Army Research Laboratory (Aberdeen, MD) Collaborative Technology Alliance (CTA).

Abstract of Real-time neuroimaging and cognitive monitoring using wearable dry EEG

Goal: We present and evaluate a wearable high-density dry-electrode EEG system and an open-source software framework for online neuroimaging and state classification. Methods: The system integrates a 64-channel dry EEG form factor with wireless data streaming for online analysis. A real-time software framework is applied, including adaptive artifact rejection, cortical source localization, multivariate effective connectivity inference, data visualization, and cognitive state classification from connectivity features using a constrained logistic regression approach (ProxConn). We evaluate the system identification methods on simulated 64-channel EEG data. Then, we evaluate system performance, using ProxConn and a benchmark ERP method, in classifying response errors in nine subjects using the dry EEG system. Results: Simulations yielded high accuracy (AUC = 0.97 ± 0.021) for real-time cortical connectivity estimation. Response error classification using cortical effective connectivity [short-time direct directed transfer function (sdDTF)] was significantly above chance with similar performance (AUC) for cLORETA (0.74 ± 0.09) and LCMV (0.72 ± 0.08) source localization. Cortical ERP-based classification was equivalent to ProxConn for cLORETA (0.74 ± 0.16) but significantly better for LCMV (0.82 ± 0.12). Conclusion: We demonstrated the feasibility for real-time cortical connectivity analysis and cognitive state classification from high-density wearable dry EEG. Significance: This paper is the first validated application of these methods to 64-channel dry EEG. This study addresses a need for robust real-time measurement and interpretation of complex brain activity in the dynamic environment of the wearable setting. Such advances can have broad impact in research, medicine, and brain-computer interfaces. The pipelines are made freely available in the open-source SIFT and BCILAB toolboxes.
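For readers unfamiliar with the AUC figures quoted in the abstract: the area under the ROC curve equals the probability that a classifier scores a randomly chosen positive example (here, a response error) higher than a randomly chosen negative one, so 0.5 corresponds to chance and 1.0 to perfect separation. The following is a minimal sketch of that definition in Python; the function name `auc` and the toy labels and scores are illustrative and are not taken from the study.

```python
def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    labels: 1 for the positive class (e.g. error trial), 0 for the negative.
    scores: classifier outputs, higher meaning "more likely positive".
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one example of each class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))

# Toy example: one of the four positive/negative pairs is ranked wrongly.
print(auc([1, 0, 1, 0], [0.9, 0.8, 0.4, 0.1]))  # prints 0.75
```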