2014 Nobel Prize in Physiology or Medicine awarded for ‘inner GPS’ research

October 6, 2014

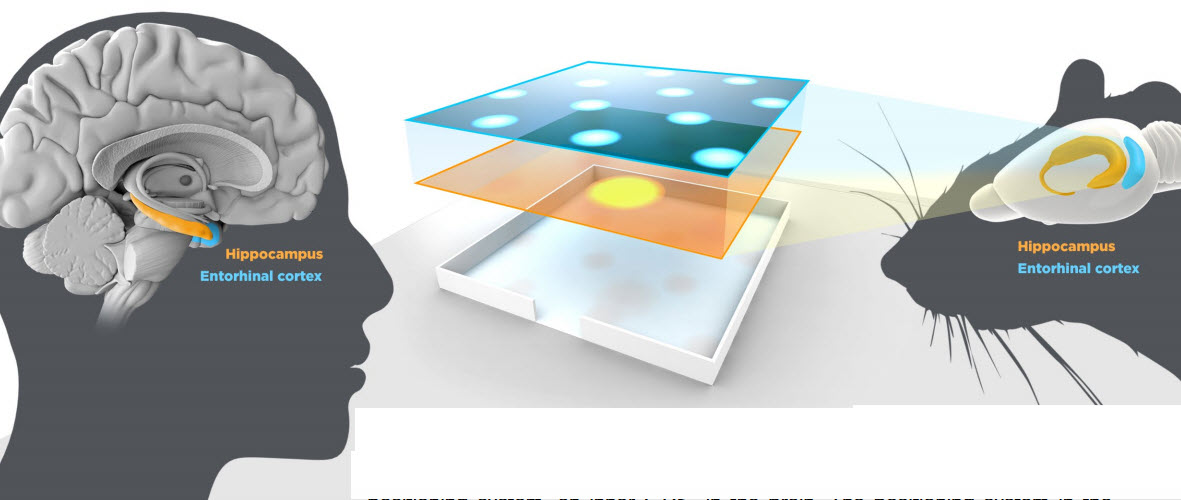

Grid cells, together with other cells in the entorhinal cortex of the brain that recognize the direction of the head and the border of the room, form networks with the place cells in the hippocampus. This circuitry constitutes a comprehensive positioning system in the brain. The positioning system in the human brain appears to have components similar to those of the rat brain. (Credit: Mattias Karlén/The Nobel Committee for Physiology or Medicine)

The 2014 Nobel Prize in Physiology or Medicine has just been awarded with one half to John O’Keefe and the other half jointly to May-Britt Moser and Edvard I. Moser for the discovery of a positioning system in the brain.

This “inner GPS” makes it possible to orient ourselves in space, demonstrating a cellular basis for higher cognitive function. According to the Nobel Committee for Physiology or Medicine:

“Grid cells” (left) combine with “Place cells” (right) to form an “inner GPS” (credit: Mattias Karlén/The Nobel Committee for Physiology or Medicine)

John O’Keefe discovered, in 1971, that certain nerve cells in the brain were activated when a rat assumed a particular place in the environment. Other nerve cells were activated at other places. He proposed that these “place cells” build up an inner map of the environment. Place cells are located in a part of the brain called the hippocampus.

May-Britt and Edvard I. Moser discovered in 2005 that other nerve cells in a nearby part of the brain, the entorhinal cortex, were activated when the rat passed certain locations. Together, these locations formed a hexagonal grid, each “grid cell” reacting in a unique spatial pattern. Collectively, these grid cells form a coordinate system that allows for spatial navigation.
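To make the hexagonal pattern concrete, here is a minimal sketch of a common idealized model of a single grid cell: three cosine gratings oriented 60 degrees apart are summed, which yields firing peaks on a hexagonal lattice. This is a textbook-style illustration, not the analysis used by the Mosers; the function name `grid_cell_rate` and the parameters `spacing` and `phase` are assumptions made for this example.

```python
import numpy as np

def grid_cell_rate(x, y, spacing=0.5, phase=(0.0, 0.0)):
    """Idealized firing rate in [0, 1] of one grid cell at position (x, y).

    spacing: distance between neighboring firing fields (hypothetical units).
    phase:   (x, y) offset of the lattice, different for each grid cell.
    """
    # Wave number chosen so that neighboring peaks are `spacing` apart.
    k = 4.0 * np.pi / (np.sqrt(3.0) * spacing)
    total = 0.0
    # Three plane waves whose directions differ by 60 degrees.
    for angle in np.radians([0.0, 60.0, 120.0]):
        projection = (x - phase[0]) * np.cos(angle) + (y - phase[1]) * np.sin(angle)
        total = total + np.cos(k * projection)
    # The sum ranges from -1.5 to 3; rescale it to the interval [0, 1].
    return (total / 3.0 + 0.5) / 1.5
```

In this toy model, cells with different `phase` values peak at different locations, so a population of them can act as the kind of coordinate system described above.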

Human eyes + rat brain + place recognition algorithm → smart navigating robot

Bringing the research up to date, robotic-vision expert Michael Milford from the Queensland University of Technology (QUT) Science and Engineering Faculty has developed a “place recognition algorithm” that allows robots to navigate intelligently, unrestricted by high-density buildings or tunnels.

To do that, he asked the question: what would happen if you combined the eyes of a human with a rat brain, both in a robot brain? He calls it a “very Frankenstein type of project” (or maybe The Island of Doctor Moreau?).

Michael Milford with one of the all-terrain robots to benefit from brain-inspired modeling (credit: Queensland University of Technology)

“A rodent’s spatial memory is strong but [it] has very poor vision, while humans can easily recognize where they are because of eyesight,” he explained. “We have existing software algorithms in robots to model the human and rat brain. We’ll plug in the two pieces of software together on a robot moving around in an environment and see what happens.”

What happens was just revealed in the journal Philosophical Transactions of the Royal Society B.

Spaced out

Milford said the research would also study how the human brain’s ability to recognize familiar places degrades. “The brain’s spatial navigation capabilities degrade early in diseases like Alzheimer’s,” he said.

Milford, who is chief investigator at the QUT-based headquarters of the Australian Research Council Centre of Excellence in Robotic Vision, said place-recognition technology, a key component of navigation, has been limited to date.

“GPS only works in open outdoor areas, lasers are expensive, and cameras are highly sensitive; but in contrast, nature has evolved superb navigation systems.”

Smarter cars too

He said the research project could also have benefits for manufacturing, environmental management — and presumably for autonomous vehicles.

The interdisciplinary research project involves collaborations between QUT and the University of Queensland, and other institutions, including Harvard, Boston, and Antwerp universities. Milford was awarded a $676,174 Australian Research Council Future Fellowship to support the study.

Queensland University of Technology | Superhuman robot navigation with a Frankenstein model…and why I think it’s a great idea

Abstract of Condition-Invariant, Top-Down Visual Place Recognition

In this paper we present a novel, condition-invariant place recognition algorithm inspired by recent discoveries in human visual neuroscience. The algorithm combines intolerant but fast low resolution whole image matching with highly tolerant, sub-image patch matching processes. The approach does not require prior training and works on single images, alleviating the need for either a velocity signal or image sequence, differentiating it from current state of the art methods. We conduct an exhaustive set of experiments evaluating the relationship between place recognition performance and computational resources using part of the challenging Alderley sunny day – rainy night dataset, which has only been previously solved by integrating over 320 frame long image sequences. We achieve recall rates of up to 51% at 100% precision, matching places that have undergone drastic perceptual change while rejecting match hypotheses between highly aliased images of different places. Human trials demonstrate the performance is approaching human capability. The results provide a new benchmark for single image, condition-invariant place recognition.
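The two-stage idea in this abstract (a fast but intolerant whole-image comparison, followed by a slower, more tolerant patch comparison) can be illustrated with a rough sketch. This is not the paper's algorithm: the block-average downsampling, the mean-absolute-difference score, the per-patch shift search, and every function name and parameter below are assumptions chosen only to show the coarse-to-fine structure.

```python
import numpy as np

def downsample(img, factor):
    """Block-average a 2-D grayscale image by an integer factor."""
    h, w = img.shape
    h, w = h - h % factor, w - w % factor
    blocks = img[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

def normalize(a):
    """Zero-mean, unit-variance scaling to reduce sensitivity to lighting."""
    return (a - a.mean()) / (a.std() + 1e-9)

def whole_image_distance(query, ref, factor=8):
    """Stage 1 (assumed form): cheap comparison of low-resolution images."""
    q = normalize(downsample(query, factor))
    r = normalize(downsample(ref, factor))
    return np.abs(q - r).mean()

def patch_distance(query, ref, patch=16, max_shift=4):
    """Stage 2 (assumed form): each patch may shift independently by a few pixels."""
    h, w = query.shape
    total, count = 0.0, 0
    for y in range(max_shift, h - patch - max_shift + 1, patch):
        for x in range(max_shift, w - patch - max_shift + 1, patch):
            q = normalize(query[y:y + patch, x:x + patch])
            best = np.inf
            for dy in range(-max_shift, max_shift + 1):
                for dx in range(-max_shift, max_shift + 1):
                    r = normalize(ref[y + dy:y + dy + patch, x + dx:x + dx + patch])
                    best = min(best, np.abs(q - r).mean())
            total += best
            count += 1
    return total / count

def recognize_place(query, references, shortlist=3):
    """Return the index of the reference image that best matches the query."""
    coarse = [whole_image_distance(query, r) for r in references]
    candidates = np.argsort(coarse)[:shortlist]   # keep only the best coarse matches
    fine = {int(i): patch_distance(query, references[i]) for i in candidates}
    return min(fine, key=fine.get)
```

The design point is cost: the expensive patch search runs only on the few candidates that survive the cheap whole-image pass. A real system would also need a threshold for rejecting every candidate, which this sketch omits.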

Abstract of Mapping a Suburb With a Single Camera Using a Biologically Inspired SLAM System

This paper describes a biologically inspired approach to vision-only simultaneous localization and mapping (SLAM) on ground-based platforms. The core SLAM system, dubbed RatSLAM, is based on computational models of the rodent hippocampus, and is coupled with a lightweight vision system that provides odometry and appearance information. RatSLAM builds a map in an online manner, driving loop closure and relocalization through sequences of familiar visual scenes. Visual ambiguity is managed by maintaining multiple competing vehicle pose estimates, while cumulative errors in odometry are corrected after loop closure by a map correction algorithm. We demonstrate the mapping performance of the system on a 66 km car journey through a complex suburban road network. Using only a web camera operating at 10 Hz, RatSLAM generates a coherent map of the entire environment at real-time speed, correctly closing more than 51 loops of up to 5 km in length.
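The abstract's point about odometry error being corrected after loop closure can be shown with a toy example. The sketch below dead-reckons a 2-D path from step lengths and headings, then spreads the accumulated end-point error linearly along the path once the vehicle is known to have returned to its start. This linear correction is a deliberately simple stand-in and is not RatSLAM's map correction algorithm; `integrate_odometry` and `close_loop` are hypothetical names for this illustration.

```python
import numpy as np

def integrate_odometry(steps, headings_deg):
    """Dead-reckon 2-D positions from per-step distances and headings (degrees)."""
    steps = np.asarray(steps, dtype=float)
    headings = np.radians(np.asarray(headings_deg, dtype=float))
    deltas = np.column_stack((steps * np.cos(headings), steps * np.sin(headings)))
    # Start at the origin and accumulate each displacement.
    return np.vstack(([0.0, 0.0], np.cumsum(deltas, axis=0)))

def close_loop(path):
    """Assume the last pose revisits the first; distribute the drift along the path."""
    error = path[-1] - path[0]
    # Weight 0 at the start (left untouched) rising to 1 at the end (fully corrected).
    weights = np.linspace(0.0, 1.0, len(path))[:, None]
    return path - weights * error
```

For example, driving a square with a small steady heading bias leaves the raw path open at the end; after `close_loop`, the final pose coincides with the first, and the intermediate poses shift in proportion to how far along the path they lie.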

Abstract of Principles of goal-directed spatial robot navigation in biomimetic models

Mobile robots and animals alike must effectively navigate their environments in order to achieve their goals. For animals goal-directed navigation facilitates finding food, seeking shelter or migration; similarly robots perform goal-directed navigation to find a charging station, get out of the rain or guide a person to a destination. This similarity in tasks extends to the environment as well; increasingly, mobile robots are operating in the same underwater, ground and aerial environments that animals do. Yet despite these similarities, goal-directed navigation research in robotics and biology has proceeded largely in parallel, linked only by a small amount of interdisciplinary research spanning both areas. Most state-of-the-art robotic navigation systems employ a range of sensors, world representations and navigation algorithms that seem far removed from what we know of how animals navigate; their navigation systems are shaped by key principles of navigation in ‘real-world’ environments including dealing with uncertainty in sensing, landmark observation and world modelling. By contrast, biomimetic animal navigation models produce plausible animal navigation behaviour in a range of laboratory experimental navigation paradigms, typically without addressing many of these robotic navigation principles. In this paper, we attempt to link robotics and biology by reviewing the current state of the art in conventional and biomimetic goal-directed navigation models, focusing on the key principles of goal-oriented robotic navigation and the extent to which these principles have been adapted by biomimetic navigation models and why.