A brain-computer interface for controlling an exoskeleton

August 18, 2015

A volunteer calibrating the exoskeleton brain-computer interface (credit: © Korea University/TU Berlin)

Scientists at Korea University and TU Berlin have developed a brain-computer interface (BCI) that controls a lower-limb exoskeleton for gait assistance by decoding specific signals from the user’s brain.

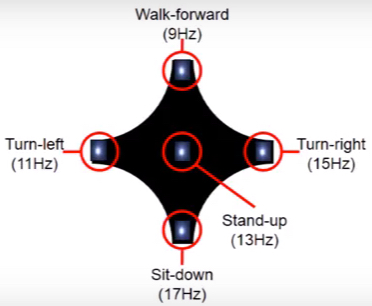

LEDs flickering at five different frequencies code for five different commands (credit: Korea University/TU Berlin)

Using an electroencephalogram (EEG) cap, the system allows users to move forward, turn left and right, sit, and stand, simply by staring at one of five flickering light-emitting diodes (LEDs).

Each of the five LEDs flickers at a different frequency, and each frequency corresponds to one of five movements. When the user focuses their attention on a specific LED, the flickering light generates a visual evoked potential in the EEG signal at that LED’s frequency. A computer identifies this frequency and commands the exoskeleton to move accordingly (forward, left, right, stand, sit).
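To make the idea concrete, here is a minimal Python sketch of frequency-based command detection. It is not the researchers' method: the paper's abstract names canonical correlation analysis with k-nearest neighbors, whereas this stand-in simply measures single-channel signal power at each candidate flicker frequency and picks the strongest. The frequency values, the command mapping, the sampling rate, and the simulated signal are all assumptions made for illustration; the article does not state the real ones.

```python
import math
import random

# Hypothetical frequency-to-command mapping (Hz); the real values are not given in the article.
COMMANDS = {"forward": 9.0, "left": 11.0, "right": 13.0, "stand": 15.0, "sit": 17.0}


def band_power(signal, freq_hz, sample_rate_hz):
    """Power of `signal` at one frequency, found by projecting onto a sine and a cosine."""
    sin_sum = 0.0
    cos_sum = 0.0
    for n, x in enumerate(signal):
        phase = 2.0 * math.pi * freq_hz * n / sample_rate_hz
        sin_sum += x * math.sin(phase)
        cos_sum += x * math.cos(phase)
    return (sin_sum ** 2 + cos_sum ** 2) / len(signal) ** 2


def detect_command(signal, commands, sample_rate_hz):
    """Return the command whose flicker frequency carries the most power."""
    return max(commands, key=lambda name: band_power(signal, commands[name], sample_rate_hz))


def simulate_eeg(target_hz, sample_rate_hz=250, seconds=2.0, noise_std=1.5, seed=0):
    """Toy single-channel 'EEG': a unit sine at the attended frequency plus Gaussian noise."""
    rng = random.Random(seed)
    n_samples = int(sample_rate_hz * seconds)
    return [
        math.sin(2.0 * math.pi * target_hz * n / sample_rate_hz + 0.7) + rng.gauss(0.0, noise_std)
        for n in range(n_samples)
    ]


if __name__ == "__main__":
    eeg = simulate_eeg(target_hz=13.0)
    print(detect_command(eeg, COMMANDS, sample_rate_hz=250))
```

In this toy setup the attended 13 Hz flicker stands out even though the noise is larger than the signal, because the projection averages the noise down over the two-second window. A real system would work on multi-channel EEG and contend with the exoskeleton's electrical noise described below.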


The results are published in an open-access paper today (August 18) in the Journal of Neural Engineering.

“A key problem in designing such a system is that exoskeletons create lots of electrical ‘noise,’” explains Klaus Muller, an author of the paper. “The EEG signal [from the brain] gets buried under all this noise, but our system is able to separate out the EEG signal and the frequency of the flickering LED within this signal.”

“People with amyotrophic lateral sclerosis (ALS) (motor neuron disease) or spinal cord injuries face difficulties communicating or using their limbs,” he said. This system could let them walk again, he believes. He suggests that the control system could be added on to existing BCI devices, such as OpenBCI devices.

In experiments with 11 volunteers, participants needed only a few minutes of training to operate the system. Because of the flickering LEDs, they were carefully screened for epilepsy before taking part in the research. The researchers are now working to reduce the “visual fatigue” associated with longer-term use.

Abstract of A lower limb exoskeleton control system based on steady state visual evoked potentials

Objective. We have developed an asynchronous brain–machine interface (BMI)-based lower limb exoskeleton control system based on steady-state visual evoked potentials (SSVEPs).

Approach. By decoding electroencephalography signals in real-time, users are able to walk forward, turn right, turn left, sit, and stand while wearing the exoskeleton. SSVEP stimulation is implemented with a visual stimulation unit, consisting of five light emitting diodes fixed to the exoskeleton. A canonical correlation analysis (CCA) method for the extraction of frequency information associated with the SSVEP was used in combination with k-nearest neighbors.

Main results. Overall, 11 healthy subjects participated in the experiment to evaluate performance. To achieve the best classification, CCA was first calibrated in an offline experiment. In the subsequent online experiment, our results exhibit accuracies of 91.3 ± 5.73%, a response time of 3.28 ± 1.82 s, an information transfer rate of 32.9 ± 9.13 bits/min, and a completion time of 1100 ± 154.92 s for the experimental parcour studied.

Significance. The ability to achieve such high quality BMI control indicates that an SSVEP-based lower limb exoskeleton for gait assistance is becoming feasible.
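As a rough sanity check on the reported figures, the sketch below computes an information transfer rate from the abstract's mean accuracy and mean response time. It assumes the widely used Wolpaw formula, bits per selection = log2(N) + P·log2(P) + (1 − P)·log2((1 − P)/(N − 1)), with N = 5 commands; the abstract does not say which definition the authors used, and the function names here are illustrative.

```python
import math


def wolpaw_bits_per_selection(n_classes, accuracy):
    """Bits conveyed per selection under the Wolpaw ITR formula (assumed, not confirmed by the paper)."""
    if not 0.0 < accuracy <= 1.0:
        raise ValueError("accuracy must be in (0, 1]")
    bits = math.log2(n_classes) + accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        # Errors are assumed to be spread evenly over the remaining classes.
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_classes - 1))
    return bits


def itr_bits_per_minute(n_classes, accuracy, seconds_per_selection):
    """Scale bits per selection by the number of selections made per minute."""
    return wolpaw_bits_per_selection(n_classes, accuracy) * 60.0 / seconds_per_selection


if __name__ == "__main__":
    # Mean values taken from the abstract: 5 commands, 91.3% accuracy, 3.28 s response time.
    print(round(itr_bits_per_minute(5, 0.913, 3.28), 1))
```

Plugging in the mean values gives roughly 31.5 bits/min, close to but not the same as the reported 32.9 bits/min. One plausible reason for the gap is that the paper averages per-subject rates rather than computing a single rate from the averaged accuracy and response time, but that is a guess.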