A machine-learning system that trains itself by surfing the web

December 8, 2016

MIT researchers have designed a new machine-learning system that can learn by itself to extract text information for statistical analysis when available data is scarce.

This new “information extraction” system turns machine learning on its head. It works like humans do. When we run out of data in a study (say, differentiating between fake and real news), we simply search the Internet for more data, and then we piece the new data together to make sense out of it all.

That differs from most machine-learning systems, which are fed as many training examples as possible to increase the chances that the system will be able to handle difficult problems by matching new inputs against patterns found in the training data.

“In information extraction, traditionally, in natural-language processing, you are given an article and you need to do whatever it takes to extract correctly from this article,” says Regina Barzilay, the Delta Electronics Professor of Electrical Engineering and Computer Science and senior author of a new paper presented at the recent Association for Computational Linguistics’ Conference on Empirical Methods in Natural Language Processing.

“That’s very different from what you or I would do. When you’re reading an article that you can’t understand, you’re going to go on the web and find one that you can understand.”

Confidence boost from web data

A machine-learning system determines whether it has enough data by assigning each of its classifications a confidence score, a measure of the statistical likelihood that the classification is correct, given the patterns discerned in the training data. If the score is too low, additional training data is required.
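As a rough illustration of a confidence score, the sketch below turns a classifier's raw scores into probabilities with a softmax and treats the top probability as the confidence. The labels, the raw scores, and the 0.8 threshold are all made-up assumptions for the example; the article does not say how the researchers' system computes or thresholds its scores.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_with_confidence(scores, labels):
    """Return the most likely label and its probability (the confidence)."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


# Hypothetical threshold, labels, and scores, chosen only for illustration.
CONFIDENCE_THRESHOLD = 0.8

label, confidence = classify_with_confidence(
    [2.0, 0.5, 0.1], ["shooter", "victim", "other"]
)
needs_more_data = confidence < CONFIDENCE_THRESHOLD
print(label, round(confidence, 2), needs_more_data)
```

Here the top label wins with a probability of roughly 0.73, which falls below the assumed threshold, so the classification would be flagged as needing more evidence.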

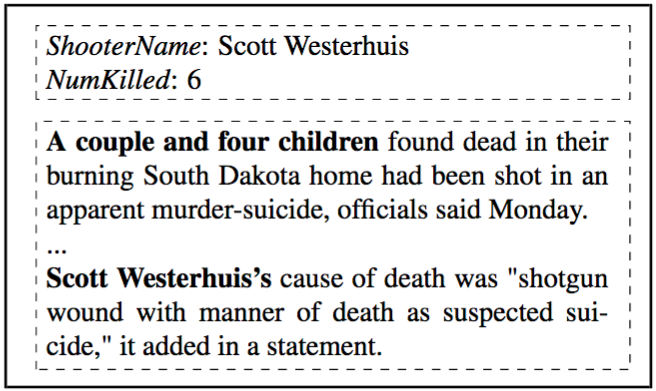

Fig. 1. Sample news article on a shooting case. Note how the article contains both the name of the shooter and the number of people killed, but both pieces of information require complex extraction schemes to recover. (credit: Karthik Narasimhan et al.)

In the real world, obtaining that data is not always easy. For example, the researchers note in the paper that the news article excerpt in Fig. 1 “does not explicitly mention the shooter (Scott Westerhuis), but instead refers to him as a suicide victim. Extraction of the number of fatally shot victims is similarly difficult as the system needs to infer that ‘A couple and four children’ means six people. Even a large annotated training set may not provide sufficient coverage to capture such challenging cases.”

Instead, with the researchers’ new information-extraction system, if the confidence score is too low, the system automatically generates a web search query designed to pull up texts likely to contain the data it’s trying to extract. It then attempts to extract the relevant data from one of the new texts and reconciles the results with those of its initial extraction. If the confidence score remains too low, it moves on to the next text pulled up by the search string, and so on.
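The loop described above can be sketched in a few lines of Python. This is a minimal sketch under heavy assumptions, not the researchers' implementation: `toy_extract`, `toy_search`, `toy_query`, the 0.8 threshold, and the sample sentences are all hypothetical stand-ins, and `reconcile` simply keeps the more confident value, whereas the real system learns its query and reconciliation strategies.

```python
import re
from typing import Callable, Iterable, Optional, Tuple

# An extraction is a (value, confidence) pair; value is None when nothing was found.
Extraction = Tuple[Optional[str], float]


def reconcile(current: Extraction, candidate: Extraction) -> Extraction:
    """Placeholder reconciliation: keep whichever extraction is more confident."""
    return candidate if candidate[1] > current[1] else current


def extract_with_web_evidence(
    article: str,
    extract: Callable[[str], Extraction],
    search: Callable[[str], Iterable[str]],
    make_query: Callable[[str], str],
    threshold: float = 0.8,  # hypothetical cutoff
) -> Extraction:
    best = extract(article)
    if best[1] >= threshold:
        return best  # confident enough; no search needed
    # Otherwise walk through the search results one text at a time.
    for document in search(make_query(article)):
        best = reconcile(best, extract(document))
        if best[1] >= threshold:
            break
    return best


# --- Toy stand-ins (assumptions, for illustration only) ---
def toy_extract(text: str) -> Extraction:
    """A 'basic extractor' that only understands one explicit phrasing."""
    match = re.search(r"\b(\w+) people were killed", text)
    return (match.group(1), 0.9) if match else (None, 0.1)


def toy_search(query: str) -> Iterable[str]:
    """Pretend web search returning two fixed, made-up texts."""
    return [
        "Police are still investigating the cause of the fire.",
        "Authorities said six people were killed in the shooting.",
    ]


def toy_query(article: str) -> str:
    """Pretend query builder: the first five words of the article."""
    return " ".join(article.split()[:5])


original = "A couple and four children were found dead in their burning home."
result = extract_with_web_evidence(original, toy_extract, toy_search, toy_query)
print(result)  # ('six', 0.9)
```

The toy extractor cannot infer a count from "a couple and four children," so the loop falls back on the second search result, which states the number explicitly.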

The system learns how to generate search queries, gauge the likelihood that a new text is relevant to its extraction task, and determine the best strategy for fusing the results of multiple attempts at extraction.

Testing the new information-extraction system

The MIT researchers say they tested their system with two information-extraction tasks. In each case, the system was trained on about 300 documents. One task was focused on collecting and analyzing data on mass shootings in the U.S. (an essential resource for any epidemiological study of the effects of gun-control measures).

“We collected data from the Gun Violence archive, a website tracking shootings in the United States,” the authors note. “The data contains a news article on each shooting and annotations for (1) the name of the shooter, (2) the number of people killed, (3) the number of people wounded, and (4) the city where the incident took place.”

The other task was the collection of similar data on instances of food contamination. The researchers used the Foodshield EMA database, “documenting adulteration incidents since 1980.” The researchers extracted food type, type of contaminant, and location.

For the mass-shootings task, the researchers asked the system to extract from websites (such as news articles, as in Fig. 1) the name of the shooter, the location of the shooting, the number of people wounded, and the number of people killed.

The goal was to find other documents that contain the information sought, expressed in a form that a basic extractor can “understand.”

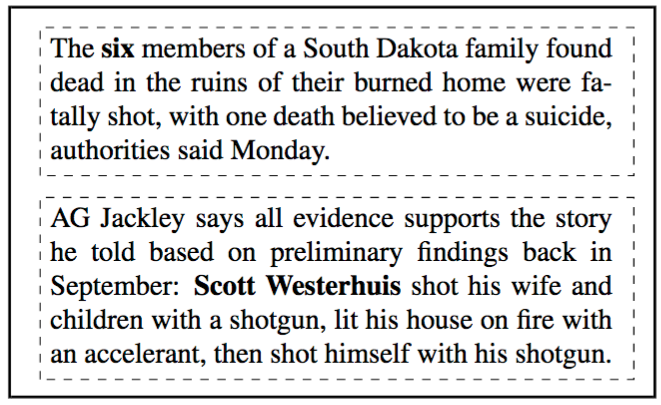

Fig. 2. Two other articles on the same shooting case. The first article clearly mentions that six people were killed. The second one portrays the shooter in an easily extractable form. (credit: Karthik Narasimhan et al.)

For instance, Figure 2 shows two other articles describing the same event in which the entities of interest — the number of people killed and the name of the shooter — are expressed explicitly. That simplifies things.

From those articles, the system learned clusters of search terms that tended to be associated with the data items it was trying to extract. For instance, the names of mass shooters were correlated with terms like “police,” “identified,” “arrested,” and “charged.” During training, for each article the system was asked to analyze, it pulled up, on average, another nine or 10 news articles from the web.

The researchers then compared their system’s performance to that of several extractors trained using more conventional machine-learning techniques. For every data item extracted in both tasks, the new system outperformed its predecessors, usually by about 10 percent.

“The challenges … lie in (1) performing event coreference (retrieving suitable articles describing the same incident) and (2) reconciling the entities extracted from these different documents,” the authors note in the paper. “We address these challenges using a Reinforcement Learning (RL) approach that combines query formulation, extraction from new sources, and value reconciliation.”

UPDATE Dec. 8, 2016 — Added sources for test data.

Abstract of Improving Information Extraction by Acquiring External Evidence with Reinforcement Learning

Most successful information extraction systems operate with access to a large collection of documents. In this work, we explore the task of acquiring and incorporating external evidence to improve extraction accuracy in domains where the amount of training data is scarce. This process entails issuing search queries, extraction from new sources and reconciliation of extracted values, which are repeated until sufficient evidence is collected. We approach the problem using a reinforcement learning framework where our model learns to select optimal actions based on contextual information. We employ a deep Q-network, trained to optimize a reward function that reflects extraction accuracy while penalizing extra effort. Our experiments on two databases – of shooting incidents, and food adulteration cases – demonstrate that our system significantly outperforms traditional extractors and a competitive meta-classifier baseline.
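To make the idea of a reward that "reflects extraction accuracy while penalizing extra effort" concrete, here is one possible shape for such a function. It is an illustrative assumption rather than the formula from the paper: the reward is the change in the fraction of correctly extracted fields, minus a fixed cost per step. The field names and the 0.05 penalty are hypothetical, and the deep Q-network that would be trained against this reward is not shown.

```python
def accuracy(extracted: dict, gold: dict) -> float:
    """Fraction of gold fields whose extracted value matches exactly."""
    return sum(extracted.get(field) == value for field, value in gold.items()) / len(gold)


def step_reward(previous: dict, current: dict, gold: dict,
                step_penalty: float = 0.05) -> float:
    """Accuracy gained by the latest step, minus a fixed cost for taking it."""
    return accuracy(current, gold) - accuracy(previous, gold) - step_penalty


# Hypothetical annotated example and two successive extraction states.
gold = {"shooter": "Scott Westerhuis", "killed": 6}
before = {"shooter": None, "killed": None}
after = {"shooter": None, "killed": 6}

helpful_step = step_reward(before, after, gold)  # found one of two fields
wasted_step = step_reward(after, after, gold)    # nothing new was learned
print(round(helpful_step, 2), round(wasted_step, 2))
```

Under this sketch, a step that fills in a correct value earns a positive reward, while a step that changes nothing costs the penalty, which nudges a learner toward stopping once further searches stop paying off.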