Google machine-learning system is first to defeat professional Go player

January 27, 2016

Go is played on a grid of black lines (usually 19×19). Game pieces, called stones, are played on the line intersections. (credit: Goban1/Wikipedia)

A deep-learning computer system called AlphaGo, created by Google’s DeepMind team, has defeated reigning three-time European Go champion Fan Hui 5 games to 0, the Google Research blog reported today (Jan. 27). It is the first time a computer program has ever beaten a professional Go player, a feat previously thought to be at least a decade away.

“AlphaGo uses general machine-learning techniques to allow it to improve itself, just by watching and playing games,” according to David Silver and Demis Hassabis of Google DeepMind. Using a vast collection of more than 30 million Go moves from expert players, DeepMind researchers trained their system to play Go on its own.

To achieve that, AlphaGo, as described in a paper published today in Nature, combines a state-of-the-art tree search with two deep neural networks, each containing many layers with millions of neuron-like connections. That capacity is needed to deal with Go’s vast search space, which is more than a googol (10¹⁰⁰) times larger than that of chess; a googol alone is greater than the number of atoms in the universe.
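A back-of-the-envelope way to see why Go’s search space dwarfs that of chess is to count move sequences as roughly b^d, where b is the number of legal moves per turn (breadth) and d is the length of a game (depth). The sketch below assumes the commonly quoted approximations b ≈ 35, d ≈ 80 for chess and b ≈ 250, d ≈ 150 for Go, and compares the two in log space. It is an order-of-magnitude estimate only, not an exact count of positions.

```python
import math


def log10_sequences(breadth: float, depth: float) -> float:
    """log10 of breadth**depth, a rough count of possible move sequences."""
    # Working with logarithms keeps the numbers small enough to print and compare.
    return depth * math.log10(breadth)


chess = log10_sequences(35, 80)    # about 10**123.5 sequences (assumed b, d)
go = log10_sequences(250, 150)     # about 10**359.7 sequences (assumed b, d)
ratio_exponent = go - chess        # log10 of (Go sequences / chess sequences)

print(f"chess: ~10^{chess:.0f}  go: ~10^{go:.0f}  ratio: ~10^{ratio_exponent:.0f}")
print("more than a googol (10^100) times larger:", ratio_exponent > 100)
```

Under this crude model the ratio comes out around 10^236, comfortably beyond a googol, although the model ignores repeated positions and the wide variation in real game lengths.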

“We first trained [one of the two networks] on 30 million moves from games played by human experts, until it could predict the human move 57% of the time …. But our goal is to beat the best human players, not just mimic them,” Silver and Hassabis said. “To do this, AlphaGo learned to discover new strategies for itself, by playing thousands of games between its neural networks, and gradually improving them using a trial-and-error process known as reinforcement learning.”
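The two training stages in this quote can be illustrated with a toy softmax policy over three candidate moves in a single position. Everything here is hypothetical: the expert moves, the win probabilities, and the `play_out` function (a stand-in for finishing a game against another copy of the network) are invented for illustration, and AlphaGo’s real policy network is a deep convolutional network over whole board positions. Stage 1 raises the log-probability of the move an expert played (supervised learning); stage 2 samples a move, observes a win (+1) or loss (-1), and scales the same gradient by that outcome, a REINFORCE-style policy-gradient update.

```python
import math
import random


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def grad_log_prob(logits, move):
    """Gradient of log softmax(logits)[move] w.r.t. the logits: one-hot minus probs."""
    probs = softmax(logits)
    return [(1.0 if i == move else 0.0) - p for i, p in enumerate(probs)]


def supervised_step(logits, expert_move, lr):
    """Stage 1: make the expert's move more likely (maximize its log-likelihood)."""
    g = grad_log_prob(logits, expert_move)
    return [w + lr * gi for w, gi in zip(logits, g)]


def reinforce_step(logits, rng, play_out, lr):
    """Stage 2: sample a move, observe outcome z (+1 win, -1 loss), step along z * grad."""
    probs = softmax(logits)
    move = rng.choices(range(len(logits)), weights=probs)[0]
    z = play_out(move)
    g = grad_log_prob(logits, move)
    return [w + lr * z * gi for w, gi in zip(logits, g)]


# Hypothetical toy position: experts mostly play move 0, but move 2 wins more often.
rng = random.Random(0)
expert_moves = [0] * 8 + [1] * 2
win_prob = [0.4, 0.2, 0.9]


def play_out(move):
    """Hypothetical stand-in for finishing a game after `move`; returns +1 or -1."""
    return 1.0 if rng.random() < win_prob[move] else -1.0


logits = [0.0, 0.0, 0.0]
for _ in range(50):                      # stage 1: imitate the expert moves
    for m in expert_moves:
        logits = supervised_step(logits, m, lr=0.05)
imitation_probs = softmax(logits)

for _ in range(3000):                    # stage 2: learn from game outcomes
    logits = reinforce_step(logits, rng, play_out, lr=0.1)
final_probs = softmax(logits)

print("after imitation:      ", [round(p, 2) for p in imitation_probs])
print("after policy gradient:", [round(p, 2) for p in final_probs])
```

Imitation alone leaves the toy policy favoring the experts’ habitual move 0; the outcome-weighted updates then shift probability toward move 2, which wins more often in this setup. That is the sense in which reinforcement learning lets a system go beyond mimicking its training data.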

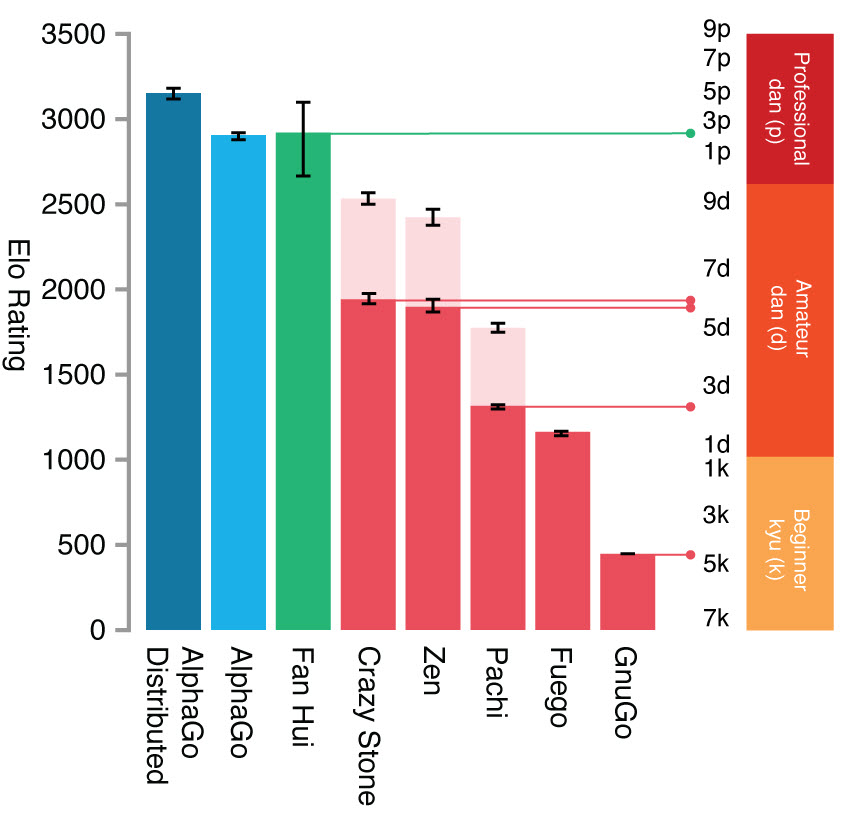

This figure from the Nature article shows the Elo rating (a 230-point gap corresponds to a 79% probability of winning) and approximate rank of AlphaGo (both the single-machine and distributed versions), European champion Fan Hui (a professional 2-dan), and the strongest other Go programs, evaluated over thousands of games. Pale pink bars show the performance of the other programs when given a four-move head start. (credit: David Silver et al./Nature)
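The caption’s rating-to-probability conversion can be checked with the standard Elo logistic formula, P = 1 / (1 + 10^(-D/400)), where D is the rating gap. This sketch assumes that conventional 400-point scale (the paper’s exact rating methodology may differ in detail); with D = 230 it gives roughly 0.79, consistent with the 79% figure.

```python
def elo_win_probability(rating_gap: float) -> float:
    """Expected score (win probability, ignoring draws) for the player rated
    `rating_gap` points higher, under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400.0))


p = elo_win_probability(230)
print(f"230-point gap -> {p:.1%} expected win rate")
```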

AlphaGo’s next challenge will be a match against Lee Sedol, the top Go player in the world over the past decade. The match will take place this March in Seoul, South Korea.

“While games are the perfect platform for developing and testing AI algorithms quickly and efficiently, ultimately we want to apply these techniques to important real-world problems,” the researchers say. “Because the methods we have used are general purpose, our hope is that one day they could be extended to help us address some of society’s toughest and most pressing problems, from climate modelling to complex disease analysis.”

Google DeepMind | Ground-breaking AlphaGo masters the game of Go

Abstract of Mastering the game of Go with deep neural networks and tree search

The game of Go has long been viewed as the most challenging of classic games for artificial intelligence owing to its enormous search space and the difficulty of evaluating board positions and moves. Here we introduce a new approach to computer Go that uses ‘value networks’ to evaluate board positions and ‘policy networks’ to select moves. These deep neural networks are trained by a novel combination of supervised learning from human expert games, and reinforcement learning from games of self-play. Without any lookahead search, the neural networks play Go at the level of state-of-the-art Monte Carlo tree search programs that simulate thousands of random games of self-play. We also introduce a new search algorithm that combines Monte Carlo simulation with value and policy networks. Using this search algorithm, our program AlphaGo achieved a 99.8% winning rate against other Go programs, and defeated the human European Go champion by 5 games to 0. This is the first time that a computer program has defeated a human professional player in the full-sized game of Go, a feat previously thought to be at least a decade away.
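The abstract’s search algorithm can be sketched, under heavy simplification, at a single node: each candidate move carries a prior P(s, a) from the policy network, a visit count N(s, a), and a mean value Q(s, a). Selection picks the move maximizing Q plus an exploration bonus that is proportional to the prior and shrinks as the move is visited (a PUCT-style rule), and each leaf is scored by mixing a value-network estimate with a rollout result using a weight λ. This is a root-only sketch rather than a full tree search, and the move names, priors, evaluations, `c_puct = 1.0`, and `lam = 0.5` are hypothetical values chosen for illustration, not necessarily AlphaGo’s settings.

```python
import math
from dataclasses import dataclass


@dataclass
class Edge:
    prior: float            # P(s, a): policy-network probability for this move
    visits: int = 0         # N(s, a): how many simulations went through this move
    value_sum: float = 0.0  # sum of leaf evaluations backed up through this move

    @property
    def q(self) -> float:
        # Q(s, a): mean evaluation; unvisited moves start at 0 (illustrative choice)
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(edges: dict[str, Edge], c_puct: float = 1.0) -> str:
    """Return argmax_a Q(s,a) + u(s,a); u grows with the prior and shrinks with visits."""
    total = sum(e.visits for e in edges.values())

    def score(e: Edge) -> float:
        u = c_puct * e.prior * math.sqrt(total) / (1 + e.visits)
        return e.q + u

    return max(edges, key=lambda move: score(edges[move]))


def leaf_value(value_net: float, rollout: float, lam: float = 0.5) -> float:
    """Mix a value-network estimate with a fast rollout outcome: (1-lam)*v + lam*z."""
    return (1 - lam) * value_net + lam * rollout


def backup(edge: Edge, value: float) -> None:
    """Record one simulation's result on the edge it passed through."""
    edge.visits += 1
    edge.value_sum += value


# Hypothetical root position with three candidate moves and made-up evaluations.
edges = {"D4": Edge(prior=0.6), "Q16": Edge(prior=0.3), "K10": Edge(prior=0.1)}
value_net = {"D4": -0.2, "Q16": 0.4, "K10": 0.0}
rollout = {"D4": 0.0, "Q16": 0.6, "K10": -1.0}

for _ in range(200):
    move = select_move(edges)
    backup(edges[move], leaf_value(value_net[move], rollout[move]))

best = max(edges, key=lambda m: edges[m].visits)
print({m: e.visits for m, e in edges.items()}, "->", best)
```

Because the bonus decays with visits, the search soon concentrates its simulations on Q16, whose mixed evaluation is best, while still trying the high-prior D4 a number of times. Returning the most-visited move mirrors the paper’s description of how AlphaGo picks its move at the root.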