Head-mounted cameras could help robots understand social interactions

December 17, 2012

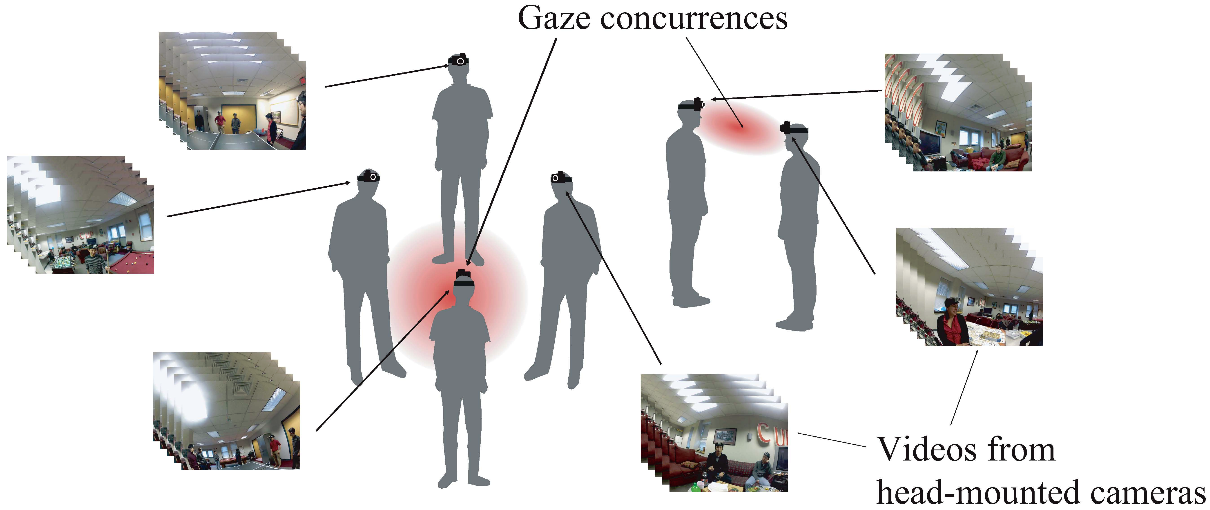

A method to reconstruct 3D gaze concurrences from videos taken by head-mounted cameras (credit: Hyun Soo Park, Eakta Jain, and Yaser Sheikh)

Robots (and some people) have trouble understanding what’s going on in a social setting.

But that understanding may become essential for robots designed to interact with humans, so researchers at Carnegie Mellon University’s Robotics Institute have developed a method for detecting where people’s gazes intersect.

The researchers tested the method using groups of people with head-mounted video cameras. By noting where their gazes converged in three-dimensional space, the researchers could determine if they were listening to a single speaker, interacting as a group, or even following the bouncing ball in a ping-pong game.

The system thus uses crowdsourcing to provide subjective information about social groups that would otherwise be difficult or impossible for a robot to ascertain.

The researchers’ algorithm for determining “social saliency” could ultimately be used to evaluate a variety of social cues, such as the expressions on people’s faces or body movements, or data from other types of visual or audio sensors.

“In the future, robots will need to interact organically with people and to do so they must understand their social environment, not just their physical environment,” said Hyun Soo Park, a Ph.D. student in mechanical engineering, who worked on the project with Yaser Sheikh, assistant research professor of robotics, and Eakta Jain of Texas Instruments, who holds a Ph.D. in robotics.

Though head-mounted cameras are still unusual, police officers, soldiers, search-and-rescue personnel, and even surgeons are among those who have begun to wear body-mounted cameras. Head-mounted systems, such as those integrated into eyeglass frames, are poised to become more common. Even if person-mounted cameras don’t become ubiquitous, Sheikh noted that these cameras someday might be used routinely by people who work in cooperative teams with robots.

A party for robots?

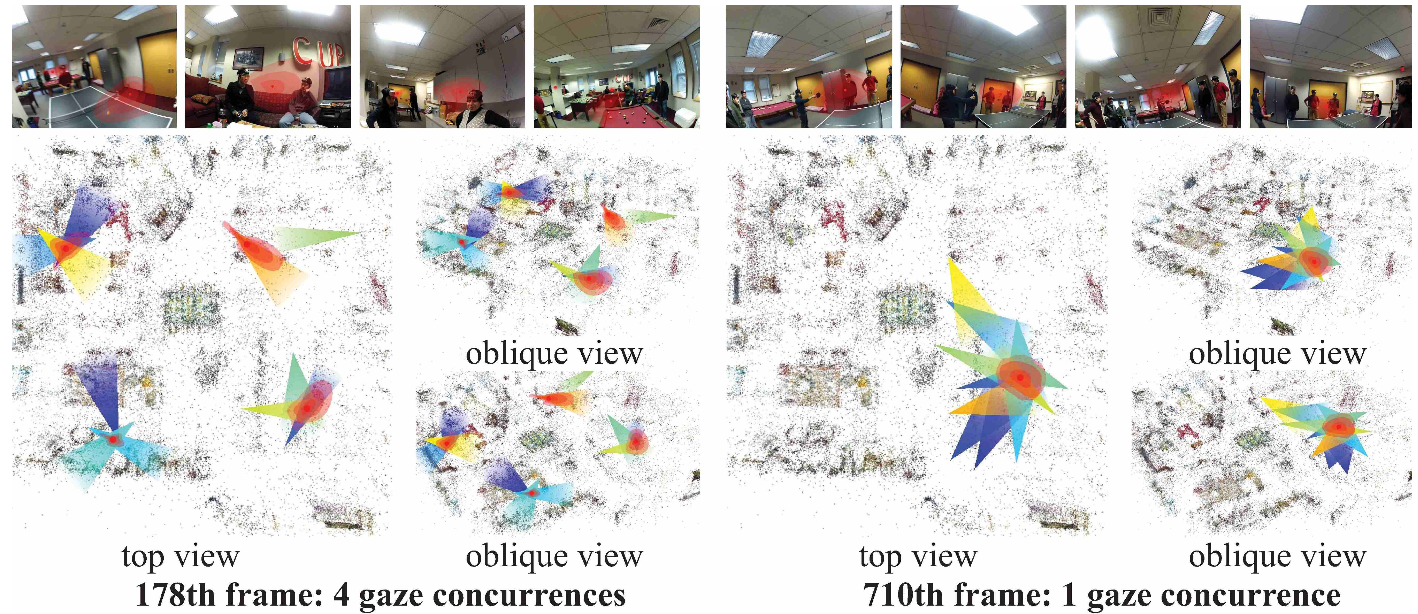

Gaze concurrences for the party scene reconstructed. 11 head-mounted cameras were used to capture the scene. Top row: images with the re-projection of the gaze concurrences, bottom row: rendering of the 3D gaze concurrences with cone-shaped gaze models. (Credit: Hyun Soo Park, Eakta Jain, and Yaser Sheikh)

The technique was tested in three real-world settings: a meeting involving two work groups, a musical performance, and a party in which participants played pool and ping-pong and chatted in small groups.

The head-mounted cameras provided precise data about what people were looking at in social settings. The algorithm developed by the research team was able to automatically estimate the number and 3D position of “gaze concurrences” — positions where the gazes of multiple people intersected.
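To make the idea of a “gaze concurrence” concrete, here is a minimal sketch, not the researchers’ algorithm. It assumes each person’s gaze can be treated as an infinite 3D line with a known head position and viewing direction, and it finds the single point that lies closest to all of those lines in the least-squares sense. The function name `closest_point_to_gaze_lines` and the sample positions are hypothetical, chosen only for illustration; the method described in the article also estimates how many concurrences exist, which this sketch does not attempt.

```python
import numpy as np


def closest_point_to_gaze_lines(origins, directions):
    """Return the 3D point minimizing the summed squared distance to a set of lines.

    origins:    (n, 3) array of head positions (hypothetical input).
    directions: (n, 3) array of gaze directions; they need not be unit length.
    Assumes at least two of the directions are not parallel.
    """
    origins = np.asarray(origins, dtype=float)
    directions = np.asarray(directions, dtype=float)
    # Normalize each gaze direction to unit length.
    directions = directions / np.linalg.norm(directions, axis=1, keepdims=True)

    A = np.zeros((3, 3))
    b = np.zeros(3)
    for o, d in zip(origins, directions):
        # Projector onto the plane orthogonal to the gaze direction d.
        P = np.eye(3) - np.outer(d, d)
        A += P
        b += P @ o
    # Solve the normal equations sum(P_i) x = sum(P_i o_i).
    return np.linalg.solve(A, b)


# Illustrative data: three people all looking at the point (1, 1, 1).
target = np.array([1.0, 1.0, 1.0])
heads = np.array([[0.0, 0.0, 0.0], [4.0, 0.0, 0.0], [0.0, 4.0, 0.0]])
gazes = target - heads

print(closest_point_to_gaze_lines(heads, gazes))  # close to the shared target
```

With noisy real-world gaze estimates the lines would not meet exactly, and the least-squares point serves as a compromise location. Handling several simultaneous concurrences, as in the party scene, would require grouping the gazes first, which is beyond this sketch.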

The researchers were surprised by the level of detail they were able to detect. In the party setting, for instance, the algorithm didn’t just indicate that people were looking at the ping-pong table; the gaze concurrence video actually shows the flight of the ball as it bounces and is batted back and forth.

That finding suggests another possible application for monitoring gaze concurrence: player-level views of ball games. Park said if basketball players all wore head-mounted cameras, for instance, it might be possible to reconstruct the game, not from the point of view of a single player, but from a collective view of the players as they all keep their eyes on the ball.

Another potential use is the study of social behavior, such as group dynamics and gender interactions, and research into behavioral disorders, such as autism, according to the researchers.

Other possible future uses (with algorithm changes) might include real-time automated analysis of a crime or terrorism scene where multiple people are recording video and broadcasting it live (to USTREAM, for example); post-event reconstruction; use as an adjunct to face-reading technology; and operational group training. — Ed.