How robots aided by deep learning could help autism therapists

June 29, 2018

MIT Media Lab (no sound) | Intro: Personalized Machine Learning for Robot Perception of Affect and Engagement in Autism Therapy. This is an example of a therapy session augmented with SoftBank Robotics’ humanoid robot NAO and deep-learning software. The 35 children with autism who participated in this study ranged in age from 3 to 13. They reacted in various ways to the robots during their 35-minute sessions — from looking bored and sleepy in some cases to jumping around the room with excitement, clapping their hands, and laughing or touching the robot.

Robots armed with personalized “deep learning” software could help therapists interpret behavior and personalize therapy for autistic children, while making the therapy more engaging and natural. That’s the conclusion of a study by an international team of researchers at MIT Media Lab, Chubu University, Imperial College London, and the University of Augsburg.*

Children with autism-spectrum conditions often have trouble recognizing the emotional states of people around them — distinguishing a happy face from a fearful face, for instance. So some therapists use a kid-friendly robot to demonstrate those emotions and to engage the children in imitating the emotions and responding to them in appropriate ways.

Personalized autism therapy

The MIT research team realized that deep learning would help the therapy robots perceive the children’s behavior more naturally, the researchers report in a Science Robotics paper.

Personalization is especially important in autism therapy, according to the paper’s senior author, Rosalind Picard, PhD, a professor at MIT who leads research in affective computing: “If you have met one person with autism, you have met one person with autism,” she said, citing a famous adage.

“Computers will have emotional intelligence by 2029”… by which time, machines will “be funny, get the joke, and understand human emotion.” — Ray Kurzweil

“The challenge of using AI [artificial intelligence] that works in autism is particularly vexing, because the usual AI methods require a lot of data that are similar for each category that is learned,” says Picard, in explaining the need for deep learning. “In autism, where heterogeneity reigns, the normal AI approaches fail.”

How personalized robot-assisted therapy for autism would work

Robot-assisted therapy** for autism often works something like this: A human therapist shows a child photos or flash cards of different faces meant to represent different emotions, to teach them how to recognize expressions of fear, sadness, or joy. The therapist then programs the robot to show these same emotions to the child, and observes the child as she or he engages with the robot. The child’s behavior provides valuable feedback that the robot and therapist need to go forward with the lesson.

“Therapists say that engaging the child for even a few seconds can be a big challenge for them. [But] robots attract the attention of the child,” says lead author Ognjen Rudovic, PhD, a postdoctoral fellow at the MIT Media Lab. “Also, humans change their expressions in many different ways, but the robots always do it in the same way, and this is less frustrating for the child because the child learns in a very structured way how the expressions will be shown.”

SoftBank Robotics | The researchers used NAO humanoid robots in this study. Almost two feet tall and resembling an armored superhero or a droid, NAO conveys different emotions by changing the color of its eyes, the motion of its limbs, and the tone of its voice.

However, this type of therapy would work best if the robot could also smoothly interpret the child’s own behavior during the therapy, such as whether the child is excited or paying attention, according to the researchers. To test this assertion, researchers at the MIT Media Lab and Chubu University developed a personalized deep learning network that helps robots estimate the engagement and interest of each child during these interactions, they report.**

The researchers built a personalized framework that could learn from data collected on each individual child. They captured video of each child’s facial expressions, head and body movements, poses, and gestures; audio recordings; and data on heart rate, body temperature, and skin sweat response from a monitor on the child’s wrist.

Most of the children in the study reacted to the robot “not just as a toy but related to NAO respectfully, as if it was a real person,” said Rudovic, especially during storytelling, where the therapists asked how NAO would feel if the children took the robot for an ice cream treat.

In the study, the researchers found that the robots’ perception of the children’s responses agreed with assessments by human experts with a high correlation score of 60 percent, the scientists report.*** (It can be challenging for human observers to reach high levels of agreement about a child’s engagement and behavior. Their correlation scores are usually between 50 and 55 percent, according to the researchers.)
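To make the agreement figure concrete, here is a minimal sketch of how a correlation between a robot's estimates and human ratings could be computed. The article does not say which correlation measure the researchers used, so Pearson correlation is assumed here purely for illustration, and all rating values are invented.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant sequences")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical engagement ratings on a continuous scale (not study data).
human_ratings = [0.1, 0.4, 0.5, 0.9, 0.7]
robot_estimates = [0.2, 0.3, 0.6, 0.8, 0.5]

agreement = pearson(human_ratings, robot_estimates)
print(f"robot vs. human agreement: {agreement:.2f}")
```

A value near 1 would mean the robot's estimates rise and fall with the human ratings; a value near 0 would mean no linear relationship.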

Ref.: Science Robotics (open-access). Source: MIT

* The study was funded by grants from the Japanese Ministry of Education, Culture, Sports, Science and Technology; Chubu University; and the European Union’s HORIZON 2020 grant (EngageME).

** A deep-learning system uses hierarchical, multiple layers of data processing to improve its tasks, with each successive layer amounting to a slightly more abstract representation of the original raw data. Deep learning has been used in automatic speech and object-recognition programs, making it well-suited for a problem such as making sense of the multiple features of the face, body, and voice that go into understanding a more abstract concept such as a child’s engagement.
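The layered processing described in this note can be sketched as a tiny two-layer network. This is only an illustration under stated assumptions: the three input features, the layer sizes, and the hand-picked weights are all hypothetical, whereas a real network would learn its weights from data and be far larger.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer followed by a tanh nonlinearity."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical raw features scaled to 0..1:
# [face movement, voice pitch, heart rate]
raw = [0.8, 0.6, 0.4]

# Arbitrary illustrative weights (not learned, not from the study).
w1 = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
b1 = [0.0, 0.1]
w2 = [[1.0, -1.0]]
b2 = [0.0]

hidden = dense(raw, w1, b1)            # first, lower-level representation
engagement = dense(hidden, w2, b2)[0]  # single, more abstract output score
print(hidden, engagement)
```

Each call to `dense` turns its input into a new representation, so stacking calls mirrors the idea of successive layers becoming more abstract.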

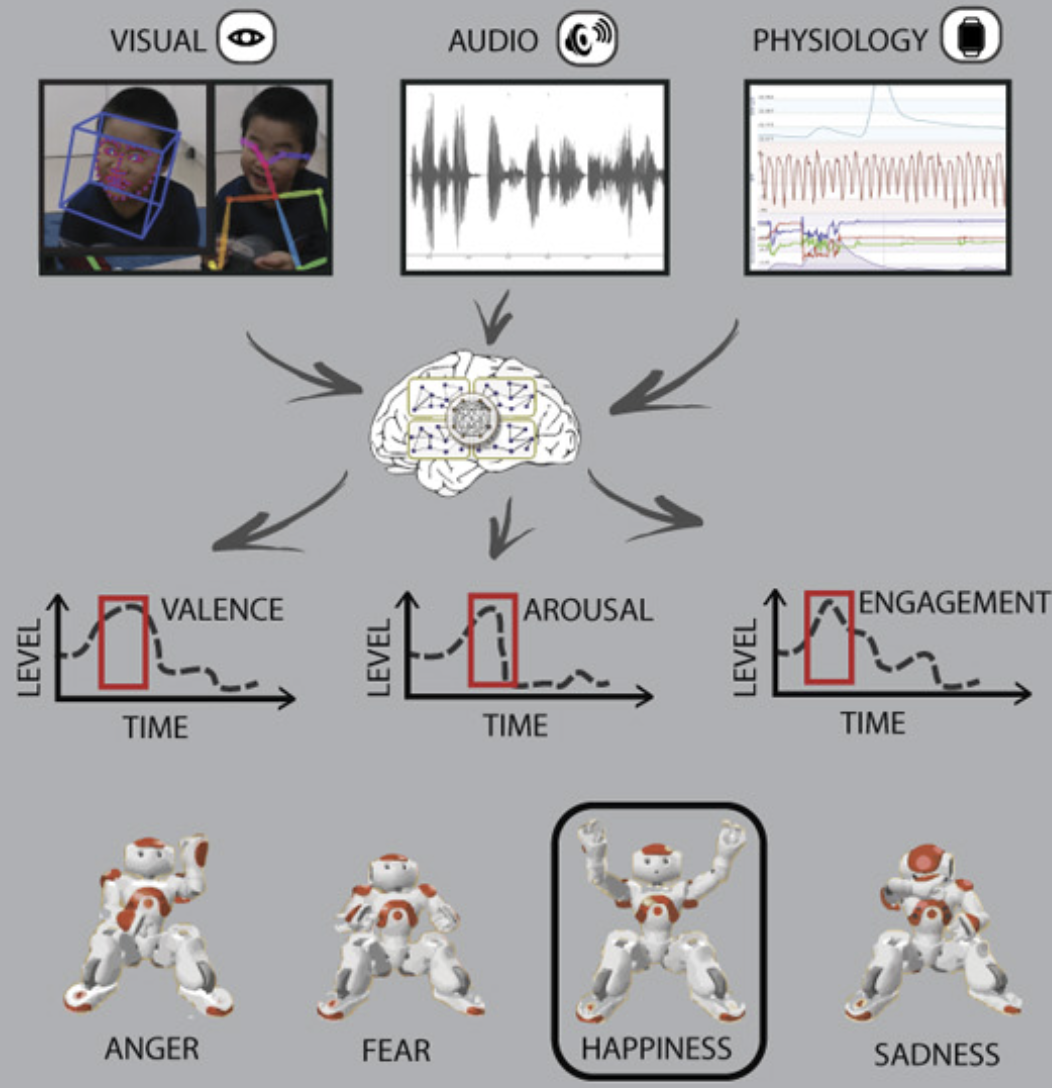

Overview of the key stages (sensing, perception, and interaction) during robot-assisted autism therapy.

Data from three modalities (audio, visual, and autonomic physiology) were recorded using unobtrusive audiovisual sensors and sensors worn on the child’s wrist, providing the child’s heart rate, skin-conductance (EDA), body temperature, and accelerometer data. The focus of this work is the robot perception, for which we designed the personalized deep learning framework that can automatically estimate levels of the child’s affective states and engagement. These can then be used to optimize the child-robot interaction and monitor the therapy progress (see Interpretability and utility). The images were obtained by using SoftBank Robotics software for the NAO robot. (credit: Ognjen Rudovic et al./Science Robotics)

“In the case of facial expressions, for instance, what parts of the face are the most important for estimation of engagement?” Rudovic says. “Deep learning allows the robot to directly extract the most important information from that data without the need for humans to manually craft those features.”

The robots’ personalized deep learning networks were built from layers of these video, audio, and physiological data, along with information about the child’s autism diagnosis and abilities, culture, and gender. The researchers then compared the networks’ estimates of the children’s behavior with estimates from five human experts, who coded the children’s video and audio recordings on a continuous scale to determine how pleased or upset, how interested, and how engaged the child seemed during the session.

*** Trained on these personalized data coded by the humans, and tested on data not used in training or tuning the models, the networks significantly improved the robot’s automatic estimation of the child’s behavior for most of the children in the study, beyond what would be estimated if the network combined all the children’s data in a “one-size-fits-all” approach, the researchers found. Rudovic and colleagues were also able to probe how the deep learning network made its estimations, which uncovered some interesting cultural differences between the children. “For instance, children from Japan showed more body movements during episodes of high engagement, while in Serbs large body movements were associated with disengagement episodes,” Rudovic notes.
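The contrast between personalized and “one-size-fits-all” models can be illustrated with a toy example. This sketch is not the researchers' deep network: it swaps in simple one-variable least-squares lines, and the two children and their (movement, engagement) values are invented so that body movement signals engagement for one child and disengagement for the other.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = a * x + b; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    a = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    return a, mean_y - a * mean_x


def mse(model, xs, ys):
    """Mean squared error of a fitted line on the given data."""
    a, b = model
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: x = body movement, y = engagement rating.
children = {
    "child_a": {"train": ([0.1, 0.3, 0.5, 0.7], [0.2, 0.4, 0.6, 0.8]),
                "test": ([0.2, 0.6], [0.3, 0.7])},
    "child_b": {"train": ([0.1, 0.3, 0.5, 0.7], [0.8, 0.6, 0.4, 0.2]),
                "test": ([0.2, 0.6], [0.7, 0.3])},
}

# One-size-fits-all: a single model trained on everyone's data combined.
pooled_x = [x for c in children.values() for x in c["train"][0]]
pooled_y = [y for c in children.values() for y in c["train"][1]]
pooled_model = fit_line(pooled_x, pooled_y)

# Personalized: one model per child, each scored on that child's held-out data.
pooled_mse = {}
personalized_mse = {}
for name, data in children.items():
    own_model = fit_line(*data["train"])
    pooled_mse[name] = mse(pooled_model, *data["test"])
    personalized_mse[name] = mse(own_model, *data["test"])
    print(name, "pooled:", pooled_mse[name], "personalized:", personalized_mse[name])
```

Because the two children's patterns point in opposite directions, the pooled line averages them away, while each per-child line tracks its own child's held-out data closely.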