How to control a robotic arm with your mind — no implanted electrodes required

December 14, 2016

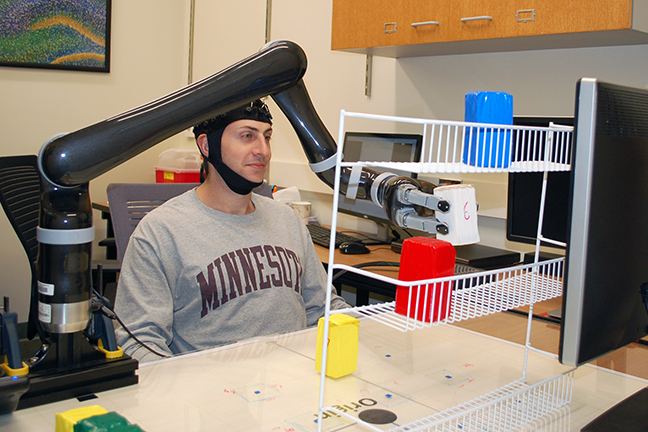

Research subjects at the University of Minnesota fitted with a specialized noninvasive EEG brain cap were able to move a robotic arm in three dimensions just by imagining moving their own arms (credit: University of Minnesota College of Science and Engineering)

Researchers at the University of Minnesota have achieved a “major breakthrough” that allows people to control a robotic arm in three dimensions, using only their minds. The research has the potential to help millions of people who are paralyzed or have neurodegenerative diseases.

The open-access study is published online today in Scientific Reports, a Nature research journal.

College of Science and Engineering, UMN | Noninvasive EEG-based control of a robotic arm for reach and grasp tasks

“This is the first time in the world that people can operate a robotic arm to reach and grasp objects in a complex 3D environment, using only their thoughts without a brain implant,” said Bin He, a University of Minnesota biomedical engineering professor and lead researcher on the study. “Just by imagining moving their arms, they were able to move the robotic arm.”

The noninvasive technique, based on a brain-computer interface (BCI) using electroencephalography (EEG), records the weak electrical activity of the subjects’ brains through a specialized, high-tech EEG cap fitted with 64 electrodes. A computer then converts the “thoughts” into actions using advanced signal processing and machine learning.

An example of an implanted electrode array, allowing for a patient to control a robot arm with her thoughts (credit: UPMC)

The system solves problems with previous BCI systems. Early efforts used invasive electrode arrays implanted in the cortex to control robotic arms, or the patient’s own arm using neuromuscular electrical stimulation. Such implanted systems carry a risk of post-surgical complications and infection, are difficult to keep working over time, and are impractical for broad use.

An EEG-based device that allows an amputee to grasp with a bionic hand, powered only by his thoughts (credit: University of Houston)

More recently, noninvasive EEG has been used. It doesn’t require risky, expensive surgery, and scalp electrodes are easy and fast to attach. For example, in 2015, University of Houston researchers developed an EEG-based system that allows users to grasp objects, including a water bottle and a credit card. In that work, an amputee using a high-tech bionic hand fitted to his stump grasped the selected objects 80 percent of the time.

Other EEG-based systems for patients have included ones capable of controlling a lower-limb exoskeleton and a thought-controlled robotic exoskeleton for the hand. However, these systems have not been suitable for multi-dimensional control of a robotic arm, which would allow the patient to reach, grasp, and move an object in three-dimensional (3D) space.

Full 3D control of a robotic arm by just thinking, no implants

The new University of Minnesota EEG BCI system was developed to enable such natural, unimpeded movements of a robotic arm in 3D space, such as picking up a cup, moving it around on a table, and drinking from it. This is similar to what the robot arm controlled by implanted electrodes does in the photo above, in which a patient is served a chocolate bar.*

The new technology basically works in the same way as the robot system using implanted electrodes. It’s based on the motor cortex, the area of the brain that governs movement. When humans move, or think about a movement, neurons in the motor cortex produce tiny electric currents. Thinking about a different movement activates a new assortment of neurons, a phenomenon confirmed by cross-validation using functional MRI in He’s previous study. In the new study, the researchers sorted out the possible movements, using advanced signal processing.
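As a rough illustration of how imagined movement could be turned into a command, the sketch below compares mu-band (roughly 8 to 13 Hz) power between two scalp channels, one over each hemisphere's motor cortex. This is a generic approach used in many EEG-based BCIs, not the signal processing reported in the study; the function names, band limits, channel arrangement, and synthetic signals are all assumptions made for illustration.

```python
import numpy as np


def band_power(signal, fs, low=8.0, high=13.0):
    """Mean spectral power of `signal` between `low` and `high` Hz.

    The 8-13 Hz default is an assumed mu-rhythm band, not a value
    taken from the study.
    """
    signal = np.asarray(signal, dtype=float)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    power = np.abs(np.fft.rfft(signal)) ** 2 / len(signal)
    mask = (freqs >= low) & (freqs <= high)
    return power[mask].mean()


def horizontal_command(left_hemi, right_hemi, fs):
    """Hypothetical left/right command in [-1, 1].

    Imagining a right-arm movement typically lowers mu power over the
    left hemisphere, so a positive value is read here as "move right".
    """
    p_left = band_power(left_hemi, fs)
    p_right = band_power(right_hemi, fs)
    return (p_right - p_left) / (p_right + p_left)


# Synthetic one-second recordings at an assumed 250 Hz sampling rate.
fs = 250
t = np.arange(fs) / fs
left_channel = 0.2 * np.sin(2 * np.pi * 10 * t)   # suppressed mu rhythm
right_channel = 1.0 * np.sin(2 * np.pi * 10 * t)  # resting mu rhythm

command = horizontal_command(left_channel, right_channel, fs)
print(f"horizontal command: {command:+.2f}")
```

A real system would work with many electrodes, noisy data, and a classifier or regression model trained per subject; the normalized difference is used here only because it keeps the output bounded and easy to read.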

User controls flight of a 3D virtual helicopter using brain waves (credit: Bin He/University of Minnesota)

This robotic-arm research builds upon He’s research published in 2011, in which subjects were able to fly a virtual quadcopter using noninvasive EEG technology, and later research allowing for flying a physical quadcopter.

The next step of He’s research will be to further develop this BCI technology, using a brain-controlled robotic prosthetic limb attached to a person’s body for patients who have had a stroke or are paralyzed.

The University of Minnesota study was funded by the National Science Foundation (NSF), the National Center for Complementary and Integrative Health, National Institute of Biomedical Imaging and Bioengineering, and National Institute of Neurological Disorders and Stroke of the National Institutes of Health (NIH), and the University of Minnesota’s MnDRIVE (Minnesota’s Discovery, Research and InnoVation Economy) Initiative funded by the Minnesota Legislature.

* Eight healthy human subjects completed the experimental sessions of the study wearing the EEG cap. Subjects gradually learned to control a robotic arm in 3D space by imagining moving their own arms without actually moving them. They started by learning to control a virtual cursor on a computer screen and then learned to control a robotic arm to reach and grasp objects in fixed locations on a table. Eventually, they were able to move the robotic arm to reach and grasp objects in random locations on a table and move objects from the table to a three-layer shelf by only thinking about these movements.

All eight subjects could control a robotic arm to pick up objects in fixed locations with an average success rate above 80 percent and move objects from the table onto the shelf with an average success rate above 70 percent.

Abstract of Noninvasive Electroencephalogram Based Control of a Robotic Arm for Reach and Grasp Tasks

Brain-computer interface (BCI) technologies aim to provide a bridge between the human brain and external devices. Prior research using non-invasive BCI to control virtual objects, such as computer cursors and virtual helicopters, and real-world objects, such as wheelchairs and quadcopters, has demonstrated the promise of BCI technologies. However, controlling a robotic arm to complete reach-and-grasp tasks efficiently using non-invasive BCI has yet to be shown. In this study, we found that a group of 13 human subjects could willingly modulate brain activity to control a robotic arm with high accuracy for performing tasks requiring multiple degrees of freedom by combination of two sequential low dimensional controls. Subjects were able to effectively control reaching of the robotic arm through modulation of their brain rhythms within the span of only a few training sessions and maintained the ability to control the robotic arm over multiple months. Our results demonstrate the viability of human operation of prosthetic limbs using non-invasive BCI technology.
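The abstract's "combination of two sequential low dimensional controls" can be pictured as a small state machine: one stage steers the arm in a plane, and a second stage uses a single control dimension to lower it for the grasp. The sketch below is one possible reading of that idea, not the study's implementation; the class, stage names, gain, tolerance, and units are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ArmState:
    x: float = 0.0
    y: float = 0.0
    z: float = 1.0          # height above the table, arbitrary units
    stage: str = "reach"    # "reach" (2D) -> "grasp" (1D) -> "done"


def step(state, cmd_a, cmd_b, target_xy, tol=0.1, gain=0.5):
    """Advance the arm by one decoded command pair (each in [-1, 1]).

    Stage "reach": both commands move the arm in the horizontal plane.
    Stage "grasp": only cmd_a is used, and it changes the height.
    """
    if state.stage == "reach":
        state.x += gain * cmd_a
        state.y += gain * cmd_b
        dx = state.x - target_xy[0]
        dy = state.y - target_xy[1]
        if (dx * dx + dy * dy) ** 0.5 <= tol:
            state.stage = "grasp"   # hand is above the object
    elif state.stage == "grasp":
        state.z = max(0.0, state.z + gain * cmd_a)
        if state.z == 0.0:
            state.stage = "done"    # hand has reached table height
    return state


# Hypothetical session: one planar move, then two downward moves.
arm = ArmState()
target = (0.5, 0.0)
step(arm, 1.0, 0.0, target)    # reach stage: move over the target
step(arm, -1.0, 0.0, target)   # grasp stage: lower halfway
step(arm, -1.0, 0.0, target)   # grasp stage: lower to the table
print(arm)
```

Splitting the task this way means the user never has to control more than two dimensions at once, while the arm still ends up positioned in all three.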