Memory capacity of brain is 10 times more than previously thought

January 20, 2016

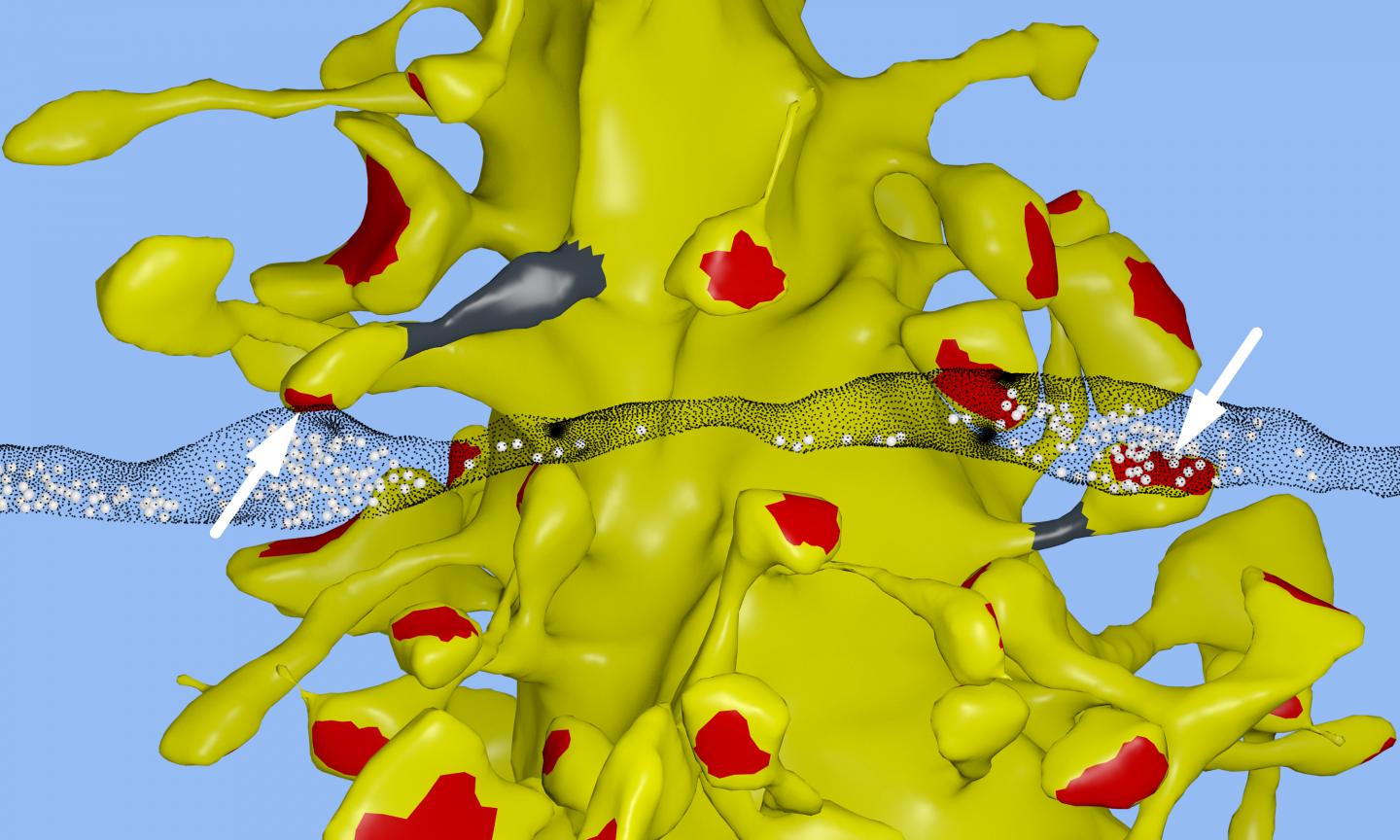

In a computational reconstruction of brain tissue in the hippocampus, Salk and UT-Austin scientists found the unusual occurrence of two synapses from the axon of one neuron (translucent black strip) forming onto two spines on the same dendrite of a second neuron (yellow). Separate terminals from one neuron’s axon are shown in synaptic contact with two spines (arrows) on the same dendrite of a second neuron in the hippocampus. The spine head volumes, synaptic contact areas (red), neck diameters (gray) and number of presynaptic vesicles (white spheres) of these two synapses are almost identical. (credit: Salk Institute)

Salk researchers and collaborators have achieved critical insight into the size of neural connections, putting the memory capacity of the brain far higher than common estimates. The new work also answers a longstanding question as to how the brain is so energy efficient, and could help engineers build computers that are incredibly powerful but also conserve energy.

“This is a real bombshell in the field of neuroscience,” says Terry Sejnowski, Salk professor and co-senior author of the paper, which was published in eLife. “We discovered the key to unlocking the design principle for how hippocampal neurons function with low energy but high computation power. Our new measurements of the brain’s memory capacity increase conservative estimates by a factor of 10 to at least a petabyte (1 quadrillion or 10¹⁵ bytes), in the same ballpark as the World Wide Web.”

“When we first reconstructed every dendrite, axon, glial process, and synapse* from a volume of hippocampus the size of a single red blood cell, we were somewhat bewildered by the complexity and diversity amongst the synapses,” says Kristen Harris, co-senior author of the work and professor of neuroscience at the University of Texas, Austin. “While I had hoped to learn fundamental principles about how the brain is organized from these detailed reconstructions, I have been truly amazed at the precision obtained in the analyses of this report.”

10 times more discrete sizes of synapses discovered

The Salk team, while building a 3D reconstruction of rat hippocampus tissue (the memory center of the brain), noticed something unusual. In some cases, a single axon from one neuron formed two synapses reaching out to a single dendrite of a second neuron, signifying that the first neuron seemed to be sending a duplicate message to the receiving neuron.

At first, the researchers didn’t think much of this duplication, which occurs about 10 percent of the time in the hippocampus. But Tom Bartol, a Salk staff scientist, had an idea: if they could measure the difference between two very similar synapses such as these, they might glean insight into synaptic sizes, which so far had only been classified in the field as small, medium and large.

To do this, the researchers used electron microscopy and computational algorithms they had developed to image rat brain tissue and reconstruct its connectivity, shapes, volumes and surface areas at nanometer-scale resolution.

The scientists expected the synapses would be roughly similar in size, but were surprised to discover the synapses were nearly identical.

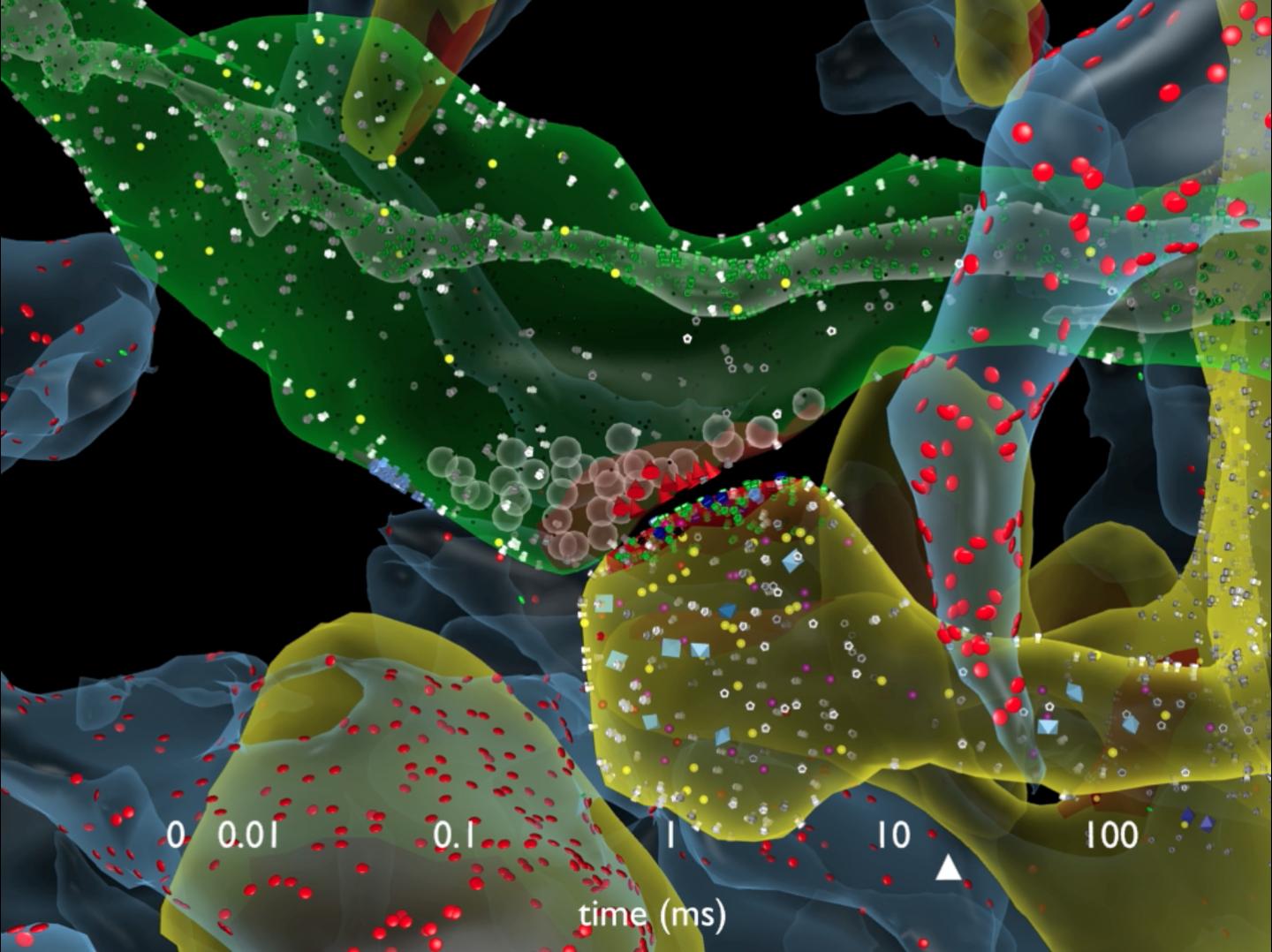

Salk scientists computationally reconstructed brain tissue in the hippocampus to study the sizes of connections (synapses). The larger the synapse, the more likely the neuron will send a signal to a neighboring neuron. The team found that there are actually 26 discrete sizes that can change over a span of a few minutes, meaning that the brain has a far greater capacity for storing information than previously thought. Pictured here is a synapse between an axon (green) and dendrite (yellow). (credit: Salk Institute)

“We were amazed to find that the difference in the sizes of the pairs of synapses was very small, on average only about eight percent. No one thought it would be such a small difference. This was a curveball from nature,” says Bartol.

Because the memory capacity of neurons is dependent upon synapse size, this eight percent difference turned out to be a key number the team could then plug into their algorithmic models of the brain to measure how much information could potentially be stored in synaptic connections.

It was known before that the range in sizes between the smallest and largest synapses was a factor of 60 and that most are small.

But armed with the knowledge that synapses could differ in increments as small as eight percent across that 60-fold range, the team determined there could be about 26 categories of synapse sizes, rather than just a few.
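The article does not spell out how a 60-fold range and an eight percent difference combine into about 26 sizes. The sketch below shows one simple way to make that arithmetic concrete, under assumptions that are ours rather than the paper's: each distinguishable size occupies a bin whose width is about two standard deviations (2 × 8 percent), and the bins tile the 60-fold range on a multiplicative scale. The function name `count_distinguishable_sizes` and the `separation_sds` parameter are hypothetical.

```python
import math


def count_distinguishable_sizes(size_range: float, cv: float, separation_sds: float = 2.0) -> int:
    """Estimate how many distinguishable sizes fit in a multiplicative range.

    Illustrative assumption (not taken from the paper): each distinguishable
    size occupies a bin whose upper edge is (1 + separation_sds * cv) times
    its lower edge, and the bins tile the range without overlapping.
    """
    step = 1.0 + separation_sds * cv
    # Number of whole bins of ratio `step` that fit between the smallest and largest size.
    return math.floor(math.log(size_range) / math.log(step))


if __name__ == "__main__":
    # 60-fold size range and ~8 percent variability, both from the article.
    print(count_distinguishable_sizes(60, 0.08))  # prints 27
```

Under these assumptions the estimate is 27, close to the reported figure of 26. Small changes to the assumed bin width shift the count by one or two, so this is a plausibility check rather than a reproduction of the paper's method.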

“Our data suggests there are 10 times more discrete sizes of synapses than previously thought,” says Bartol. In computer terms, 26 sizes of synapses correspond to about 4.7 “bits” of information. Previously, it was thought that the brain was capable of just one to two bits for short and long memory storage in the hippocampus.
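The “bits” figure is the base-2 logarithm of the number of distinguishable states. A minimal sketch follows, assuming each of the 26 sizes is equally likely; that is the case in which 26 states carry exactly log₂ 26 bits, and unequal usage of the sizes would lower the average information per synapse. The helper name `bits_for_states` is hypothetical.

```python
import math


def bits_for_states(n_states: int) -> float:
    """Bits needed to pick one of n equally likely states (log base 2)."""
    return math.log2(n_states)


if __name__ == "__main__":
    # 26 synapse sizes reported by the study.
    print(f"26 sizes -> {bits_for_states(26):.1f} bits")  # prints 4.7
    # The earlier estimate of 1 to 2 bits corresponds to only 2 to 4 sizes.
    for bits in (1, 2):
        print(f"{bits} bit(s) -> {2 ** bits} sizes")
```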

“This is roughly an order of magnitude of precision more than anyone has ever imagined,” says Sejnowski.

What makes this precision puzzling is that hippocampal synapses are notoriously unreliable. When a signal travels from one neuron to another, it typically activates that second neuron only 10 to 20 percent of the time.

“We had often wondered how the remarkable precision of the brain can come out of such unreliable synapses,” says Bartol. One answer, it seems, is in the constant adjustment of synapses, averaging out their success and failure rates over time. The team used their new data and a statistical model to find out how many signals it would take a pair of synapses to get to that eight percent difference.

The researchers calculated that for the smallest synapses, about 1,500 signaling events (roughly 20 minutes) are needed to change their size and strength, while for the largest synapses only a couple hundred signaling events (1 to 2 minutes) cause a change.
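The article does not describe the team’s statistical model. As a hedged illustration only, a simple binomial model (our assumption, not necessarily the paper’s) shows why unreliable synapses need many events to reach eight percent precision: if each signal succeeds independently with probability p, the observed success rate after n events has a coefficient of variation of √((1 − p)/(np)). Solving for n at 8 percent gives the number of events needed. The function name and the success probabilities below are hypothetical.

```python
import math


def events_needed(p_success: float, target_cv: float = 0.08) -> int:
    """Events needed for the observed success rate to reach a target precision.

    Assumed toy model: each event is an independent Bernoulli trial that
    succeeds with probability p_success. After n events the success rate
    has standard deviation sqrt(p * (1 - p) / n), so its coefficient of
    variation is sqrt((1 - p) / (n * p)). Solving CV = target_cv for n
    gives the value returned here (rounded up).
    """
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must be in (0, 1]")
    n = (1.0 - p_success) / (p_success * target_cv ** 2)
    return math.ceil(n)


if __name__ == "__main__":
    # 0.1 lies in the article's 10-20 percent range; 0.5 is an assumed value
    # for a larger, more reliable synapse.
    for p in (0.1, 0.5):
        print(f"p = {p:.1f}: about {events_needed(p)} events")
```

In this toy model a synapse that succeeds 10 percent of the time needs about 1,400 events, while one that succeeds half the time needs about 160, the same general range as the figures above.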

“This means that every 2 or 20 minutes, your synapses are going up or down to the next size. The synapses are adjusting themselves according to the signals they receive,” says Bartol.

“Our prior work had hinted at the possibility that spines and axons that synapse together would be similar in size, but the reality of the precision is truly remarkable and lays the foundation for whole new ways to think about brains and computers,” says Harris. “The work resulting from this collaboration has opened a new chapter in the search for learning and memory mechanisms.” Harris adds that the findings suggest more questions to explore: for example, whether similar rules apply to synapses in other regions of the brain, and how those rules differ during development and as synapses change during the initial stages of learning.

“The implications of what we found are far-reaching,” adds Sejnowski. “Hidden under the apparent chaos and messiness of the brain is an underlying precision to the size and shapes of synapses that was hidden from us.”

A model for energy-efficient computers

The findings also offer a valuable explanation for the brain’s surprising efficiency. The waking adult brain runs on only about 20 watts of continuous power, about as much as a very dim light bulb. The Salk discovery could help computer scientists build powerful and ultraprecise, yet energy-efficient, computers, particularly ones that employ “deep learning” and artificial neural nets, techniques capable of sophisticated learning and analysis tasks such as speech recognition, object recognition and translation.

“This trick of the brain absolutely points to a way to design better computers,” says Sejnowski. “Using probabilistic transmission turns out to be as accurate and require much less energy for both computers and brains.”
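To make “probabilistic transmission” concrete, here is a toy simulation that we supply for illustration; it is not the researchers’ model or any specific hardware design, and it does not model energy use. A value between 0 and 1 is sent as a stream of unreliable all-or-nothing events, each firing with probability equal to the value, and the receiver recovers the value by averaging. The estimation error shrinks roughly as 1/√n. The function name `transmit` is hypothetical.

```python
import random


def transmit(value: float, n_events: int, rng: random.Random) -> float:
    """Send `value` (in [0, 1]) as n all-or-nothing events and average them.

    Each event independently "fires" with probability `value`, like an
    unreliable synapse; the receiver's estimate is the fraction that fired.
    """
    fired = sum(1 for _ in range(n_events) if rng.random() < value)
    return fired / n_events


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the run is repeatable
    true_value = 0.15  # within the 10-20 percent success range described above
    for n in (10, 100, 1_000, 10_000):
        estimate = transmit(true_value, n, rng)
        print(f"n = {n:>6}: estimate = {estimate:.3f}, error = {abs(estimate - true_value):.3f}")
```

Each individual event is unreliable, yet the averaged estimate becomes precise as the number of events grows, which is the intuition behind trading per-signal reliability for repeated cheap signals.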

Other authors on the paper were Cailey Bromer of the Salk Institute; Justin Kinney of the McGovern Institute for Brain Research; and Michael A. Chirillo and Jennifer N. Bourne of the University of Texas, Austin.

The work was supported by the NIH and the Howard Hughes Medical Institute.

* Our memories and thoughts are the result of patterns of electrical and chemical activity in the brain. A key part of the activity happens when branches of neurons, much like electrical wire, interact at certain junctions, known as synapses. An output ‘wire’ (an axon) from one neuron connects to an input ‘wire’ (a dendrite) of a second neuron. Signals travel across the synapse as chemicals called neurotransmitters to tell the receiving neuron whether to convey an electrical signal to other neurons. Each neuron can have thousands of these synapses with thousands of other neurons. Synapses are still a mystery, though their dysfunction can cause a range of neurological diseases. Larger synapses — with more surface area and vesicles of neurotransmitters — are stronger, making them more likely to activate their surrounding neurons than medium or small synapses.

UPDATE 1/22/2016 “in the same ballpark as the World Wide Web” removed; appears to be inaccurate. The Internet Archive, a subset of the Web, currently stores 50 petabytes, for example.


Abstract of Nanoconnectomic upper bound on the variability of synaptic plasticity

Information in a computer is quantified by the number of bits that can be stored and recovered. An important question about the brain is how much information can be stored at a synapse through synaptic plasticity, which depends on the history of probabilistic synaptic activity. The strong correlation between size and efficacy of a synapse allowed us to estimate the variability of synaptic plasticity. In an EM reconstruction of hippocampal neuropil we found single axons making two or more synaptic contacts onto the same dendrites, having shared histories of presynaptic and postsynaptic activity. The spine heads and neck diameters, but not neck lengths, of these pairs were nearly identical in size. We found that there is a minimum of 26 distinguishable synaptic strengths, corresponding to storing 4.7 bits of information at each synapse. Because of stochastic variability of synaptic activation the observed precision requires averaging activity over several minutes.