Proteins remember the past to predict the future

October 5, 2012

The most efficient machines remember what has happened to them, and use that memory to predict what the future holds.

That is the conclusion of a theoretical study by Susanne Still, a computer scientist at the University of Hawaii at Manoa, and her colleagues, and it should apply equally to “machines” ranging from molecular enzymes to computers, Nature News reports. The finding could help to improve scientific models such as those used to study climate change.

Information that provides clues about the future state of the environment is useful, because it enables the machine to ‘prepare’ — to adapt to future circumstances, and thus to work as efficiently as possible. “My thinking is inspired by dance, and sports in general, where if I want to move more efficiently then I need to predict well,” says Still.

Alternatively, think of a vehicle fitted with a smart driver-assistance system that uses sensors to anticipate its imminent environment and react accordingly — for example, by recording whether the terrain is wet or dry, and thus predicting how best to brake for safety and fuel efficiency.

That sort of predictive function costs only a tiny amount of processing energy compared with the total energy consumption of a car.

But for a biomolecule it can be very costly to store information, so its memory needs to be highly selective. Environments are full of random noise, and there is no gain in the machine ‘remembering’ all the details. “Some information just isn’t useful for making predictions,” says study co-author Gavin Crooks.

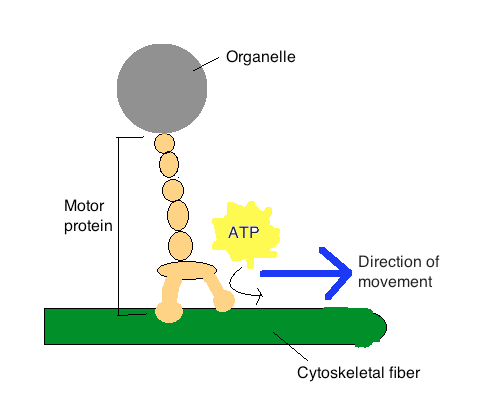

Because biochemical motors and pumps have indeed evolved to be efficient, says Still, “they must therefore be doing something clever — something tied to the cognitive ability we pride ourselves with: the capacity to construct concise representations of the world we have encountered, which allow us to say something about things yet to come”.

This balance between memory and predictive power, and the search for concision, is precisely what scientific models have to negotiate. If you are trying to devise a computer model of a complex system, in principle there is no end to the information that it might incorporate. But in doing that you risk simply constructing a one-to-one map of the real world: not really a model at all, just a mass of data, much of which might be irrelevant to prediction.

Efficient models should achieve good predictive power without remembering everything. “This is the same as saying that a model should not be overly complicated — that is, Occam’s razor,” says Still. She hopes that knowledge of this connection between energy dissipation, prediction and memory might help researchers to improve algorithms that minimize the complexity of their models.