Robots and prostheses learn human touch

April 9, 2015

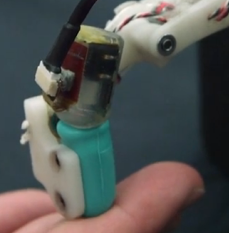

Touch-sensitive robotic hand (Credit: Science Nation)

Research engineers and students in the University of California, Los Angeles (UCLA) Biomechatronics Lab are designing artificial limbs that are more touch-sensitive.

The team, led by mechanical engineer Veronica J. Santos, is constructing a language of touch and quantifying it with mechanical touch sensors that interact with objects of various shapes, sizes, and textures.

Using an array of instrumentation, Santos’ team is able to translate that interaction into data a computer can understand.

The data are used to build an algorithm that lets the computer recognize patterns relating the items in its library of experiences to something it has never felt before. This research will help the team develop what Santos calls “artificial haptic intelligence.”
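The article does not describe the team's actual algorithm. As a rough illustration only, the idea of comparing a new touch against a “library of experiences” can be sketched as a nearest-neighbor lookup with a novelty threshold. Everything here is a hypothetical assumption, not the UCLA lab's method: the `Touch` features (pressure, contact location, and skin deformation, borrowed from the figure caption), the normalized sample values, the Euclidean distance, and the `novelty_threshold` of 0.5.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Touch:
    """One hypothetical haptic reading; values are illustrative, not real sensor units."""
    pressure: float     # assumed normalized contact pressure
    location: float     # assumed normalized distance of contact from the fingertip end
    deformation: float  # assumed normalized skin deformation

    def as_vector(self) -> tuple[float, float, float]:
        return (self.pressure, self.location, self.deformation)


def distance(a: Touch, b: Touch) -> float:
    """Euclidean distance between two touch feature vectors."""
    return math.dist(a.as_vector(), b.as_vector())


def classify(sample: Touch, library: dict[str, list[Touch]],
             novelty_threshold: float = 0.5) -> tuple[str, float]:
    """Return (label, distance) of the nearest remembered touch, or
    ("unknown", distance) if nothing in the library is close enough.
    The threshold value is a hypothetical choice."""
    best_label, best_dist = "unknown", math.inf
    for label, examples in library.items():
        for example in examples:
            d = distance(sample, example)
            if d < best_dist:
                best_label, best_dist = label, d
    if best_dist > novelty_threshold:
        return "unknown", best_dist  # nothing familiar: treat as never felt before
    return best_label, best_dist


# Hypothetical "library of experiences" with made-up readings.
library = {
    "human skin": [Touch(0.2, 0.1, 0.8), Touch(0.25, 0.15, 0.75)],
    "plastic block": [Touch(0.9, 0.1, 0.05), Touch(0.85, 0.2, 0.1)],
}

print(classify(Touch(0.22, 0.12, 0.78), library)[0])  # human skin
print(classify(Touch(0.5, 0.9, 0.4), library)[0])     # unknown
```

A real system would likely learn from many more features and use a trained model rather than a fixed distance cutoff; the threshold here simply makes the “never felt before” case explicit.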

The research is supported by NSF award #1208519, NRI-Small: Context-Driven Haptic Inquiry of Objects Based on Task Requirements for Artificial Grasp and Manipulation. NRI stands for the National Robotics Initiative.

New AI algorithms developed at UCLA record pressure, contact location (in this case, distance from the end of the finger), and skin deformation, and “learn” to recognize objects such as human skin (Credit: Science Nation)

NSF