Seeing sound: visual cortex processes auditory information too

May 27, 2014

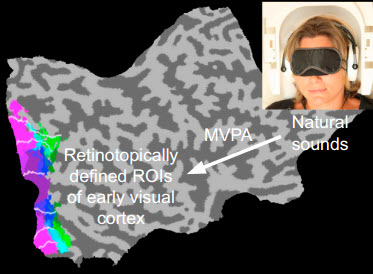

Ten healthy blindfolded subjects received auditory stimulation only, with no visual stimulation. In a separate session, retinotopic mapping was performed for all subjects to define the early visual areas of the brain. Sound-induced blood-oxygen-level-dependent (BOLD) activation patterns from these regions of interest (ROIs) were fed into a multivariate pattern analysis. (Credit: Petra Vetter, Fraser W. Smith, and Lars Muckli/Current Biology)

University of Glasgow scientists studying the brain processes involved in sight have discovered that the visual cortex also uses information gleaned from the ears when viewing the world.

They suggest this auditory input enables the visual system to predict incoming information and could confer a survival advantage.

“Sounds create visual imagery, mental images, and automatic projections,” said Professor Lars Muckli, of the University of Glasgow’s Institute of Neuroscience and Psychology, who led the research. “For example, if you are in a street and you hear the sound of an approaching motorbike, you expect to see a motorbike coming around the corner.”

The study, published in the journal Current Biology (open access), comprised five functional magnetic resonance imaging (fMRI) experiments examining activity in the early visual cortex of 10 volunteer subjects.

In one experiment they asked the blindfolded volunteers to listen to three different sounds: birdsong, traffic noise and a talking crowd. Using multivariate pattern analysis, a technique that learns to recognize the distinctive activity pattern each stimulus produces across many brain voxels, the researchers could tell from early visual cortex activity alone which of the three sounds a volunteer was hearing.
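To make the decoding idea concrete, here is a minimal sketch of one common form of pattern classification: a correlation-based nearest-centroid classifier evaluated with leave-one-run-out cross-validation. It runs on synthetic data only. The figure caption names the method as multivariate pattern analysis, but this article does not describe the study's actual classifier or pipeline. The classifier choice, the number of runs and voxels, the noise level and every function name below are hypothetical, and the simulated accuracy says nothing about the study's real results.

```python
import random
import statistics

# The three sound categories from the experiment; everything else is hypothetical.
CATEGORIES = ["birdsong", "traffic", "crowd"]


def pearson(a, b):
    """Pearson correlation between two equal-length voxel patterns."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def simulate_runs(n_runs=8, n_voxels=50, noise=1.0, seed=0):
    """Synthetic data (an assumption, not real fMRI): each category has a fixed
    prototype pattern, and every trial is that prototype plus Gaussian noise.
    Returns a list of runs, each a list of (label, pattern) pairs."""
    rng = random.Random(seed)
    prototypes = {c: [rng.gauss(0, 1) for _ in range(n_voxels)] for c in CATEGORIES}
    runs = []
    for _ in range(n_runs):
        run = [(c, [v + rng.gauss(0, noise) for v in prototypes[c]]) for c in CATEGORIES]
        runs.append(run)
    return runs


def centroid(patterns):
    """Voxel-wise mean of several patterns."""
    return [statistics.fmean(vals) for vals in zip(*patterns)]


def leave_one_run_out_accuracy(runs):
    """Train on all runs but one, test on the held-out run, repeat for every run."""
    correct = total = 0
    for i, test_run in enumerate(runs):
        train = [trial for j, run in enumerate(runs) if j != i for trial in run]
        centroids = {c: centroid([p for lab, p in train if lab == c]) for c in CATEGORIES}
        for label, pattern in test_run:
            # Predict the category whose training centroid correlates best with this trial.
            predicted = max(CATEGORIES, key=lambda c: pearson(pattern, centroids[c]))
            correct += predicted == label
            total += 1
    return correct / total


if __name__ == "__main__":
    acc = leave_one_run_out_accuracy(simulate_runs())
    print(f"decoding accuracy: {acc:.2f} (chance = {1 / len(CATEGORIES):.2f})")
```

With the default settings the simulated accuracy should sit well above the one-in-three chance level, and raising `noise` pushes it toward chance. Holding out whole runs keeps the test trials independent of the training data, which is the usual reason for this cross-validation scheme in fMRI decoding.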

A second experiment revealed that even mental imagery, in the absence of both sight and sound, evoked activity in the early visual cortex from which the imagined category could be read out.

“This research enhances our basic understanding of how interconnected different regions of the brain are,” Muckli said. “The early visual cortex hasn’t previously been known to process auditory information, and while there is some anatomical evidence of interconnectedness in monkeys, our study is the first to clearly show a relationship in humans.

“This might provide insights into mental health conditions such as schizophrenia or autism and help us understand how sensory perceptions differ in these individuals.”

The project was part of a five-year study funded by a €1.5m European Research Council consolidator grant and the Biotechnology and Biological Sciences Research Council.

Abstract of Current Biology paper

- Early visual cortex receives nonretinal input carrying abstract information

- Both auditory perception and imagery generate consistent top-down input

- Information feedback may be mediated by multisensory areas

- Feedback is robust to attentional, but not visuospatial, manipulation

Human early visual cortex was traditionally thought to process simple visual features such as orientation, contrast, and spatial frequency via feedforward input from the lateral geniculate nucleus (e.g., [1]). However, the role of nonretinal influence on early visual cortex is so far insufficiently investigated despite much evidence that feedback connections greatly outnumber feedforward connections [2–5]. Here, we explored in five fMRI experiments how information originating from audition and imagery affects the brain activity patterns in early visual cortex in the absence of any feedforward visual stimulation. We show that category-specific information from both complex natural sounds and imagery can be read out from early visual cortex activity in blindfolded participants. The coding of nonretinal information in the activity patterns of early visual cortex is common across actual auditory perception and imagery and may be mediated by higher-level multisensory areas. Furthermore, this coding is robust to mild manipulations of attention and working memory but affected by orthogonal, cognitively demanding visuospatial processing. Crucially, the information fed down to early visual cortex is category specific and generalizes to sound exemplars of the same category, providing evidence for abstract information feedback rather than precise pictorial feedback. Our results suggest that early visual cortex receives nonretinal input from other brain areas when it is generated by auditory perception and/or imagery, and this input carries common abstract information. Our findings are compatible with feedback of predictive information to the earliest visual input level (e.g., [6]), in line with predictive coding models [7–10].