The cosmological supercomputer

October 3, 2012

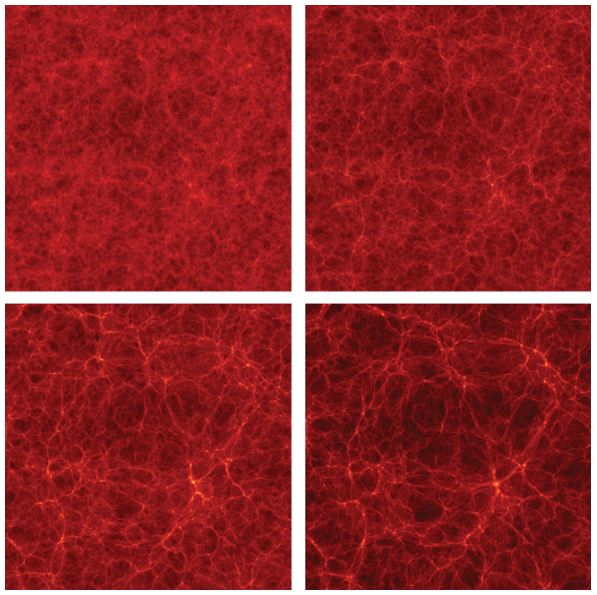

The Bolshoi simulation models the evolution of dark matter, which is responsible for the large-scale structure of the universe. Here, snapshots from the simulation show the dark matter distribution at 500 million and 2.2 billion years [top] and 6 billion and 13.7 billion years [bottom] after the big bang. These images are 50-million-light-year-thick slices of a cube of simulated universe that today would measure roughly 1 billion light-years on a side and encompass about 100 galaxy clusters. (Credit: Simulation, Anatoly Klypin and Joel R. Primack; Visualization, Stefan Gottlöber/Leibniz Institute for Astrophysics Potsdam)

When astronomers look out into the night sky with their most powerful telescopes, they can see no more than about 10 percent of the ordinary matter that’s out there.

To identify the hidden dark matter and dark energy, cosmologists need theoretical models of how the universe evolved and a way to test those models. Fortunately, thanks to progress in supercomputing, it’s now possible to simulate the entire evolution of the universe numerically.

My colleagues and I recently completed one such simulation, which we dubbed Bolshoi, the Russian word for “great” or “grand.” We started Bolshoi in a state that matched what the universe was like some 13.7 billion years ago, not long after the big bang, and simulated the evolution of dark matter and dark energy all the way up to the present day.

We did that using 14 000 central processing units (CPUs) on the Pleiades machine at NASA’s Ames Research Center, in Moffett Field, Calif., the space agency’s largest and fastest supercomputer.

To simulate the universe inside a computer, you have to know where to start. Cosmologists have a pretty good idea of what the universe's first moments were like. There's good reason to believe that for an outrageously brief period, lasting far less than 10⁻³⁰ second (a thousandth of a trillionth of a femtosecond), the universe ballooned exponentially, taking what were then minute quantum variations in the density of matter and energy and inflating them tremendously in size. According to this theory of "cosmic inflation," tiny fluctuations in the distribution of dark matter eventually spawned all the galaxies.

It turns out that reconstructing the early growth phase of these fluctuations — up to about 30 million years after the big bang — demands nothing more than a laptop computer. That’s because the early universe was extremely uniform, the differences in density from place to place amounting to no more than a few thousandths of a percent.
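To give a feel for why this stage is laptop-sized, here is a toy generator for a nearly uniform starting density field: Gaussian random fluctuations whose rms amplitude is about 3 × 10⁻⁵, i.e. "a few thousandths of a percent." This is a minimal sketch under stated assumptions, not how Bolshoi's initial conditions were built: the function name `gaussian_density_field`, the power-law spectrum P(k) ∝ k^n with n = −2, and the exact rms value are all illustrative choices. Real initial-condition codes use a physically computed power spectrum.

```python
import numpy as np

def gaussian_density_field(n_grid, spectral_index=-2.0, rms=3e-5, seed=0):
    """Toy initial density contrast delta(x) on an n_grid**3 periodic mesh.

    Hypothetical sketch: the power-law spectrum P(k) ∝ k**spectral_index
    and the target rms are assumptions, not Bolshoi's actual inputs.
    """
    rng = np.random.default_rng(seed)
    # White Gaussian noise: its Fourier modes are independent Gaussians
    # with the Hermitian symmetry that a real-valued field requires.
    noise_k = np.fft.rfftn(rng.standard_normal((n_grid,) * 3))

    # |k| on the rfftn grid, in units of the fundamental mode 2*pi/L.
    k_full = np.fft.fftfreq(n_grid) * n_grid
    k_half = np.fft.rfftfreq(n_grid) * n_grid
    kx, ky, kz = np.meshgrid(k_full, k_full, k_half, indexing="ij")
    k = np.sqrt(kx**2 + ky**2 + kz**2)

    # Scale each mode by sqrt(P(k)); zeroing k = 0 makes the mean contrast 0.
    amplitude = np.zeros_like(k)
    amplitude[k > 0] = k[k > 0] ** (spectral_index / 2.0)
    delta = np.fft.irfftn(noise_k * amplitude, s=(n_grid,) * 3)

    # Normalize so fluctuations are "a few thousandths of a percent".
    return delta * (rms / delta.std())

delta = gaussian_density_field(32)
print(delta.std(), delta.mean())  # rms of about 3e-5, mean of about 0
```

A 32³ grid takes a fraction of a second; even grids thousands of times larger fit comfortably in laptop memory, because at this stage nothing more than FFTs of a smooth field is needed.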

Over time, gravity magnified these subtle density differences. Dark matter particles were attracted to one another, and regions with slightly higher density expanded more slowly than average, while regions of lower density expanded more rapidly. Astrophysicists can model the growth of density fluctuations at these early times easily enough using simple linear equations to approximate the relevant gravitational effects.
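The "simple linear equations" can be illustrated with the standard linear growth equation for the density contrast δ, δ̈ + 2Hδ̇ = 4πGρ̄δ, which holds while δ ≪ 1. The sketch below integrates it with a hand-written fourth-order Runge-Kutta step in an Einstein-de Sitter universe (flat, matter only). That background is an idealization assumed here for simplicity, since the real universe also contains dark energy; in it H = 2/(3t), 4πGρ̄ = 2/(3t²), and the growing solution is δ ∝ t^(2/3), the same rate at which the scale factor a grows. The function name `linear_growth_eds` is hypothetical.

```python
import numpy as np

def linear_growth_eds(delta0, t0=1.0, t1=1000.0, n_steps=2000):
    """Integrate d2δ/dt2 + 2H dδ/dt = 4πGρ̄ δ with classical RK4.

    Assumes an Einstein-de Sitter background in units where
    H(t) = 2/(3t) and 4πGρ̄(t) = 2/(3t²). Starts in the pure growing mode.
    """
    def rhs(t, y):
        delta, ddelta = y
        hubble = 2.0 / (3.0 * t)
        four_pi_g_rho = 2.0 / (3.0 * t**2)
        # Gravity amplifies δ; the Hubble expansion damps its growth rate.
        return np.array([ddelta, four_pi_g_rho * delta - 2.0 * hubble * ddelta])

    times = np.geomspace(t0, t1, n_steps + 1)  # log-spaced steps in time
    y = np.array([delta0, (2.0 / 3.0) * delta0 / t0])  # δ ∝ t^(2/3) at t0
    for t, t_next in zip(times[:-1], times[1:]):
        h = t_next - t
        k1 = rhs(t, y)
        k2 = rhs(t + h / 2, y + h / 2 * k1)
        k3 = rhs(t + h / 2, y + h / 2 * k2)
        k4 = rhs(t + h, y + h * k3)
        y = y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return y[0]

# Over a factor of 1000 in time the scale factor grows by 1000**(2/3) = 100,
# and in linear theory the density contrast grows by the same factor.
growth = linear_growth_eds(1e-5) / 1e-5
print(growth)  # very close to 100
```

Because the equation is linear in δ, every Fourier mode grows by the same factor, which is why this early phase is cheap to compute. The approximation breaks down once δ approaches 1.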

The Bolshoi simulation kicks in before the gravitational interactions in this increasingly lumpy universe start to show nonlinear effects.

Once the simulation began, every particle started to attract every other particle. With nearly 10 billion (10¹⁰) of them, computing each of those pairwise attractions directly would have meant evaluating roughly 10²⁰ interactions at each time step. Performing that many calculations would have slowed our simulation to a crawl, so we took some computational shortcuts.
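Direct summation over all pairs costs N(N − 1)/2 force evaluations per step, about 5 × 10¹⁹ for N = 10¹⁰, which is where the "roughly 10²⁰" figure comes from. The article does not spell out which shortcuts Bolshoi used, so the sketch below illustrates one standard alternative, the particle-mesh (PM) method, purely as an example of the idea: deposit masses on a grid, solve Poisson's equation with an FFT, and read the forces back from the grid, so the cost scales with the number of particles plus M log M for M grid cells. The function name `pm_accelerations`, nearest-grid-point assignment, a periodic unit box, and G = 1 are assumptions of this sketch.

```python
import numpy as np

def pm_accelerations(positions, masses, n_grid=32, box=1.0, G=1.0):
    """Particle-mesh gravity: O(N + M log M) work instead of O(N²).

    Hypothetical minimal sketch (not the Bolshoi code): nearest-grid-point
    mass assignment, an FFT Poisson solve in a periodic box, a spectral
    gradient, and nearest-grid-point interpolation back to the particles.
    """
    cell = box / n_grid
    idx = np.floor(positions / cell).astype(int) % n_grid  # (N, 3) cell indices

    # 1. Deposit particle masses onto the grid as a density field.
    rho = np.zeros((n_grid,) * 3)
    np.add.at(rho, (idx[:, 0], idx[:, 1], idx[:, 2]), masses)
    rho /= cell**3

    # 2. Solve ∇²φ = 4πG(ρ − ρ̄) in Fourier space: φ_k = −4πG ρ_k / k².
    k1 = 2 * np.pi * np.fft.fftfreq(n_grid, d=cell)
    kx, ky, kz = np.meshgrid(k1, k1, k1, indexing="ij")
    k2 = kx**2 + ky**2 + kz**2
    k2[0, 0, 0] = 1.0  # avoid 0/0; the mean mode is zeroed on the next lines
    phi_k = -4 * np.pi * G * np.fft.fftn(rho) / k2
    phi_k[0, 0, 0] = 0.0  # drop the uniform background density ρ̄

    # 3. Acceleration g = −∇φ, differentiated spectrally (multiply by ik).
    #    Taking the real part discards the unpaired Nyquist-mode term.
    acc = np.empty((len(positions), 3))
    for axis, k_axis in enumerate((kx, ky, kz)):
        g = np.real(np.fft.ifftn(-1j * k_axis * phi_k))
        # 4. Read the field back at each particle's cell (nearest grid point).
        acc[:, axis] = g[idx[:, 0], idx[:, 1], idx[:, 2]]
    return acc

# Two equal masses separated along x pull toward each other.
pos = np.array([[0.4, 0.5, 0.5], [0.6, 0.5, 0.5]])
acc = pm_accelerations(pos, np.array([1.0, 1.0]))
print(acc)  # acc[0, 0] > 0, acc[1, 0] < 0, and they are equal and opposite
```

A PM grid smooths away forces on scales smaller than a cell, so production codes typically refine the grid or add short-range corrections where matter clumps; this sketch omits all of that.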

As Bolshoi ran, we logged the position and velocity of each of the 8.6 billion particles representing dark matter, producing 180 snapshots of the state of our simulated universe more or less evenly spaced in time. This small sampling still amounts to a lot of data — roughly 80 terabytes. All told, the Bolshoi simulation required 400 000 time steps and about 6 million CPU-hours to finish — the equivalent of about 18 days using 14 000 cores and 12 terabytes of RAM on the Pleiades supercomputer. But just as in observational astronomy, most of the hard work comes not in collecting mountains of data but in sorting through it all later.
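The figures in this paragraph are mutually consistent, as a quick back-of-the-envelope check shows. The only assumption here is that 1 terabyte means 10¹² bytes; the per-particle byte count is plain arithmetic on the quoted totals, since the snapshot file format is not described.

```python
# Figures quoted in the paragraph above; 1 TB is taken as 10**12 bytes.
CPU_HOURS = 6e6
CORES = 14_000
TIME_STEPS = 400_000
SNAPSHOTS = 180
DATA_TB = 80
PARTICLES = 8.6e9

wall_clock_days = CPU_HOURS / CORES / 24                  # about 17.9 days
steps_per_snapshot = TIME_STEPS / SNAPSHOTS               # about 2,222 steps
tb_per_snapshot = DATA_TB / SNAPSHOTS                     # about 0.44 TB
bytes_per_particle = tb_per_snapshot * 1e12 / PARTICLES   # about 52 bytes

print(f"{wall_clock_days:.1f} days, {steps_per_snapshot:.0f} steps/snapshot, "
      f"{tb_per_snapshot:.2f} TB/snapshot, {bytes_per_particle:.0f} B/particle")
```

So "about 18 days" follows directly from 6 million CPU-hours spread over 14 000 cores, and only about one time step in every 2 000 was saved to disk.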

As you might imagine, no one simulation can do everything. Each must make a trade-off between resolution and the overall size of the region to be modeled. The Bolshoi simulation was of intermediate size. It considered a cubic volume of space about 1 billion light-years on edge, which is only about 0.00005 percent of the volume of the visible universe. But it still produced a good 10 million dark matter halos, an ample number with which to evaluate the general evolution of galaxies.
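The resolution-versus-volume trade-off comes down to how much mass each particle must represent. For a fixed particle count N, the particle mass is the mean matter density times the box volume divided by N, so doubling the box edge makes every particle eight times heavier and the simulation correspondingly coarser. The sketch below assumes a flat cosmology with Ω_m = 0.27 and h = 0.7, the critical density 2.775 × 10¹¹ h² M☉ Mpc⁻³, and 1 Mpc ≈ 3.2616 million light-years; those parameters and the helper `particle_mass` are assumptions of this illustration, not values given in the text.

```python
# Assumed constants for illustration (not taken from the article).
RHO_CRIT_H2 = 2.775e11   # critical density in h^2 solar masses per Mpc^3
OMEGA_M = 0.27           # assumed total matter fraction
H = 0.7                  # assumed dimensionless Hubble parameter
MLY_PER_MPC = 3.2616     # million light-years per megaparsec

def particle_mass(box_gly, n_particles):
    """Hypothetical helper: mass per particle, in solar masses, for a cubic
    box with edge box_gly (billions of light-years) and n_particles."""
    box_mpc = box_gly * 1000 / MLY_PER_MPC
    mean_matter_density = OMEGA_M * RHO_CRIT_H2 * H**2  # solar masses / Mpc^3
    return mean_matter_density * box_mpc**3 / n_particles

m_bolshoi_like = particle_mass(1.0, 8.6e9)  # roughly 1.2e8 solar masses
m_double_box = particle_mass(2.0, 8.6e9)    # 8x heavier for the same N
print(f"{m_bolshoi_like:.2e} vs {m_double_box:.2e} solar masses per particle")
```

Since the smallest halo a simulation can resolve must contain many particles, heavier particles push that limit up; covering more volume at fixed N therefore costs small-scale detail.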

One of the biggest hurdles going forward will be adapting to supercomputing’s changing landscape. The speed of individual microprocessor cores hasn’t increased significantly since 2004. Instead, today’s computers pack more cores on each chip and often supplement them with accelerators like graphics processing units. Writing efficient programs for such computer architectures is an ongoing challenge, as is handling the increasing amounts of data from astronomical simulations and observations.

Despite those difficulties, I have every reason to think that numerical experiments like Bolshoi will only continue to get better. With any luck, the toy universes that other astrophysicists and I create will help us make better sense of what we see in our most powerful telescopes and help answer some of the grandest questions we can ask about the universe we call our home.