The hivemind Singularity

July 18, 2012

In New Model Army, a near-future science fiction novel by Adam Roberts, human intelligence evolves into a hivemind that makes people the violent cells of a collective being, says Alan Jacobs in The Atlantic.

New Model Army raises a set of discomfiting questions: Are our electronic technologies on the verge of enabling truly collective human intelligence? And if that happens, will we like the results?

Roberts asks us to imagine a near future in which electronic communications technologies enable groups of people to communicate with one another instantaneously, over secure private networks that are invulnerable, or nearly so, to outside snooping. Such groups are self-organized, (at first) self-funding, and devoted to radical democracy; they arm themselves and fight on behalf of those who pay them.

In short, imagine groups arising that resemble Anonymous, whose extemporaneous self-organizing projects have recently been brilliantly chronicled by Quinn Norton, but with better communications and an interest, not in hacking websites, but in fighting and killing for money, says Jacobs.

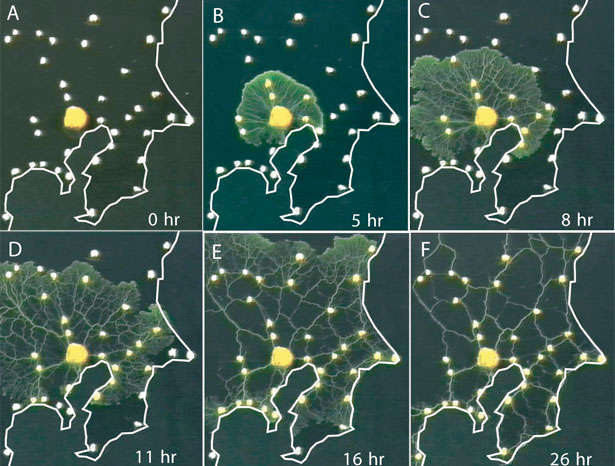

A giant slime mold

Imagine that each NMA (New Model Army) organizes itself and makes decisions collectively. No commander establishes strategy and gives orders; instead, all members of the NMA communicate through what amounts to an advanced audio form of the IRC protocol, debate their next step, and vote.

The results of a vote are shared with everyone immediately and automatically, at which point the soldiers start doing what they voted to do.

Those who cannot accept group decisions tend to drift out of the NMA, but Roberts shows convincingly how powerfully group identity links the soldiers to one another — how readily they accept the absorption of individual consciousness into a far greater one. They are proud of their shared identity, and tend to smirk when officers of more traditional armies want to know who their “ringleaders” are.

Pantagral, the NMA at the center of the novel, can mass its soldiers into one enormous force to attack a city, acting for the time much like a traditional army, and then at need dissolve into mist. Soldiers simply go away and find shelter somewhere, bunking with friends or in abandoned buildings. They stay in touch with one another, and when Pantagral decides to re-form, they rise up to strike once more.

In short, they behave like a slime mold, which changes size, splits and combines, according to need, in such a way that it’s hard to say whether the slime mold is one big thing or a bunch of little things. Slime molds and social insects behave with an intelligence that ought to be impossible for such apparently simple organisms, but, as Steven Johnson points out in his fascinating book Emergence, simple organisms obeying simple rules can collectively manifest astonishingly complex behavior.

The book asks: what happens when human beings, not just slime molds or ants, submit themselves to collective will and become part of an immense shared intelligence? If complex behavior can simply “emerge” through the simple decisions of simple creatures, what might happen if much more complex creatures become absorbed into a collectivity?

The first answer that science-fiction fans are likely to give is: The Borg. Which is to say, the prospect of any single human intelligence being lost in a collective mind fills us with fear. We fear that the transcending of human intelligence will also mark the transcending of human feeling, that all of our familiar and deeply treasured ideas about what constitutes human flourishing will be simply cast aside by a superior intelligence that has other and supposedly greater concerns.

What if this is the Singularity, asks Jacobs:

“Not simply our machines becoming smarter than we are, but the machines we use to communicate with one another enabling our own translation to a supposedly ‘higher’ sphere of being? What if the ‘posthuman’ isn’t being a cyborg but instead being a cell in a giant’s body, helping to enable a vast consciousness that you’re never aware of and that is never aware of you?

“What if the price exacted by the Singularity is the elimination of human individuality altogether, either voluntarily or, if you happen to have retained your individuality at the moment when the playful giants come through, involuntarily? We tend to talk easily and happily about crowdsourcing, the wisdom of crowds, the hive mind. New Model Army makes me think that we could benefit from a little more uneasiness.”